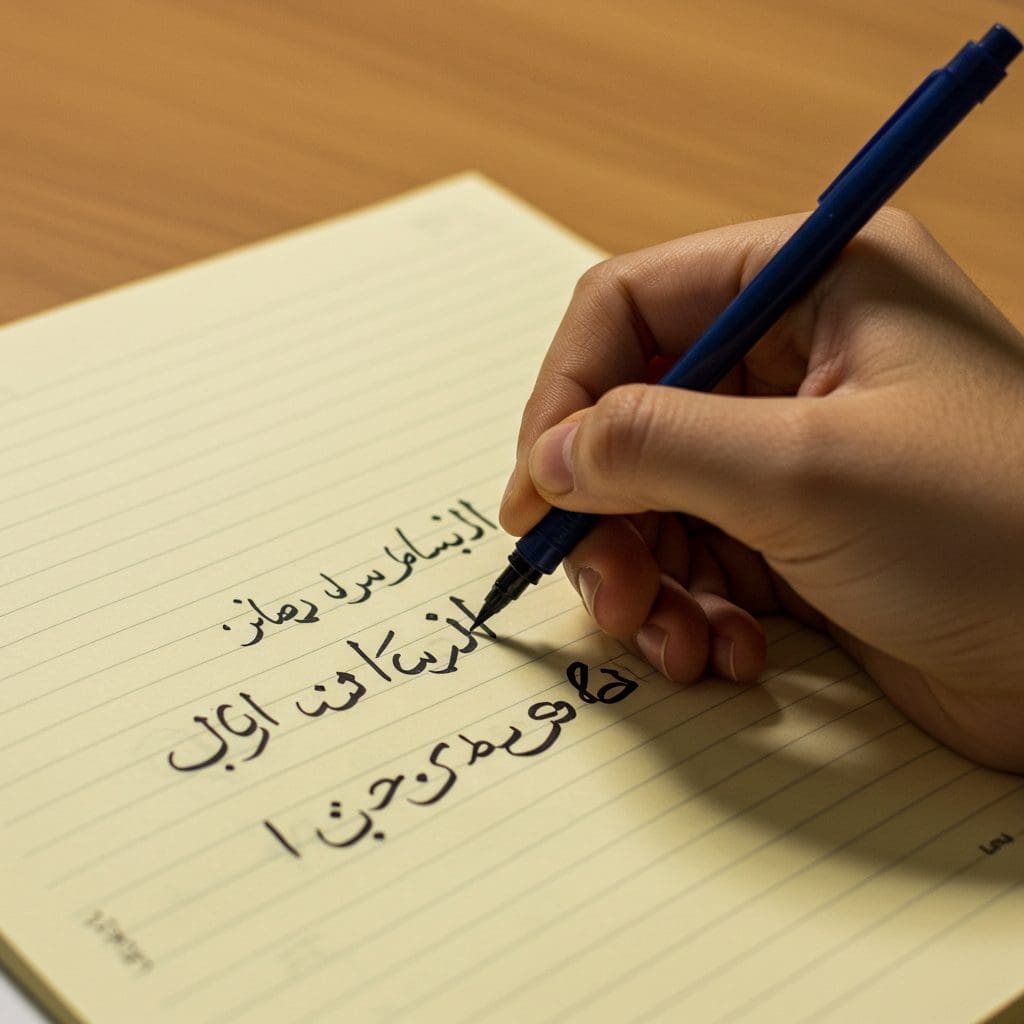

Mutarjim, a compact translation model based on Kuwain-1.5B, achieves Arabic-English translation performance comparable to that of models up to twenty times its size. The authors also introduce Tarjama-25, a new 5,000-sentence-pair benchmark designed to address limitations of existing datasets; on its English-to-Arabic task, Mutarjim outperforms much larger models, including GPT-4o mini.

The pursuit of accurate and efficient machine translation continues to drive innovation in natural language processing. Researchers are increasingly focused on developing models that achieve high performance without the substantial computational demands of very large language models (LLMs). A team from misraj.ai (Khalil Hennara, Muhammad Hreden, Mohamed Motaism Hamed, Zeina Aldallal, Sara Chrouf, and Safwan AlModhayan) details its work in Mutarjim: Advancing Bidirectional Arabic-English Translation with a Small Language Model, presenting a compact model that challenges the assumption that scale is the primary determinant of translation quality. The research introduces both a new model, Mutarjim, built on the Kuwain-1.5B architecture, and Tarjama-25, a new benchmark dataset designed to address shortcomings in existing evaluation datasets for Arabic-English translation.

Mutarjim Advances Arabic-English Translation with Efficient Model and Novel Benchmark

Machine translation keeps improving cross-lingual communication, yet challenges persist, particularly for languages with limited resources or complex linguistic structures. Researchers aim to build models that achieve high translation quality while minimising computational demands, a critical factor for accessibility and deployment across diverse environments. Mutarjim, a new bidirectional Arabic-English translation model, demonstrates competitive performance despite its relatively small size.

Mutarjim addresses a pressing need for resource-efficient translation systems, particularly for Arabic, a language with rich morphology (words are typically built from consonantal roots combined with vowel patterns and affixes) and relatively limited parallel data. Existing models often require substantial computational resources for training and inference, which limits their adoption. Mutarjim reaches translation quality comparable to, and in some cases better than, that of models up to twenty times larger, while substantially reducing computational cost and training time. This efficiency makes the approach more practical in resource-constrained environments and lowers the barrier to further research.
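To put "twenty times larger" in concrete terms, the sketch below estimates how much memory the model weights alone occupy. It assumes a nominal 1.5 billion parameters for Mutarjim (taken from the Kuwain-1.5B name), 30 billion for a model twenty times larger, and 2 bytes per parameter (16-bit precision). It ignores activations, the KV cache, and training-time optimizer state, so real requirements are higher.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory (decimal GB) needed just to store the weights; excludes activations and KV cache."""
    return num_params * bytes_per_param / 1e9


# Nominal sizes (assumption): ~1.5B parameters for the Kuwain-1.5B base vs. a model 20x larger.
MUTARJIM_PARAMS = 1.5e9
LARGER_PARAMS = 20 * MUTARJIM_PARAMS  # about 30B parameters

for label, n in [("~1.5B model", MUTARJIM_PARAMS), ("~30B model", LARGER_PARAMS)]:
    print(f"{label}: {weight_memory_gb(n):.1f} GB of weights at 16-bit precision")
```

Under these assumptions the smaller model needs about 3 GB for its weights, which fits on a single consumer GPU, while the larger one needs about 60 GB, beyond typical consumer GPU memory.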

To support robust evaluation and future work, the researchers introduced Tarjama-25, a new benchmark built specifically for Arabic-English translation. Existing Arabic-English datasets often suffer from domain narrowness, short sentence lengths, and a bias towards sentences originally written in English, which makes it hard to assess translation systems accurately. Tarjama-25 addresses these shortcomings with 5,000 expert-validated sentence pairs covering a broader range of domains, providing a more comprehensive and balanced evaluation framework.
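Two of the weaknesses named above, short sentences and English-source bias, are straightforward to measure for any parallel test set. The sketch below assumes a hypothetical record layout (`SentencePair`, with a `source_lang` field recording which side was originally written) and uses whitespace word counts as a rough length proxy; it is not the Tarjama-25 file format or the authors' analysis, and the sample pairs are toy data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class SentencePair:
    # Hypothetical schema for illustration; not the actual Tarjama-25 format.
    source_lang: str  # "ar" or "en": the language the sentence was originally written in
    arabic: str
    english: str


def profile(pairs: list[SentencePair]) -> dict[str, float]:
    """Report the English-sourced share and mean sentence length in whitespace-separated words."""
    return {
        "share_english_sourced": sum(p.source_lang == "en" for p in pairs) / len(pairs),
        "mean_english_words": mean(len(p.english.split()) for p in pairs),
        "mean_arabic_words": mean(len(p.arabic.split()) for p in pairs),
    }


sample = [
    SentencePair("en", "مرحبا بالعالم", "Hello world"),
    SentencePair("ar", "القط يجلس على الحصيرة", "The cat sits on the mat"),
]
print(profile(sample))
```

Because Arabic attaches clitics to words (for example, "بالعالم" combines a preposition, the article, and a noun), whitespace counts make Arabic sentences look shorter than their English counterparts, so lengths are best compared within a language rather than across the pair.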

The researchers built Mutarjim on Kuwain-1.5B as the base model, combining an optimised two-phase training strategy with a carefully curated training corpus. This approach yields competitive performance despite the model's small size. The evaluation used established datasets (OPUS-TED, OPUS-OpenSubtitles, JW300, ParaCrawl, and CCAligned) alongside Tarjama-25. With BLEU as the primary metric (it scores a machine translation by its n-gram overlap with a human reference translation), Mutarjim outperforms several compared models, including c4ai, Yehia, ALLaM, Cohere, AceGPT, LLaMAX3, SILM, GemmaX, X-ALMA, Gemma2, and GPT-4o mini.
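To make the metric concrete, here is a minimal from-scratch sketch of corpus-level BLEU with a single reference per sentence: clipped n-gram precisions for n = 1 to 4, combined by a geometric mean and multiplied by a brevity penalty. It uses plain whitespace tokenisation and no smoothing, so its numbers will not match standard tools such as sacreBLEU or the scores reported in the paper.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: list[str], references: list[str], max_n: int = 4) -> float:
    """Corpus BLEU (0-100), one reference per hypothesis, uniform weights, no smoothing."""
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()  # whitespace tokenisation (simplification)
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            # Clip each hypothesis n-gram count by how often it appears in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += max(len(h) - n + 1, 0)
    if min(matches) == 0:
        return 0.0  # the geometric mean is zero if any n-gram order has no matches
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: penalise output that is shorter than the references overall.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * bp * math.exp(log_precision)


print(corpus_bleu(["the cat sat on a mat"], ["the cat sat on the mat"]))  # ≈ 53.7
```

In the example, the clipped precisions are 5/6, 3/5, 2/4, and 1/3 and the lengths match, so the score is 100 × (1/12)^(1/4). BLEU is computed at corpus level (pooling counts before dividing) because averaging per-sentence scores behaves poorly when short sentences have zero higher-order matches.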

The two-phase training strategy is central to achieving these results at a small model size. In the first phase, the model is pre-trained on a large corpus of monolingual Arabic and English text, learning the linguistic patterns and representations of both languages. In the second phase, it is fine-tuned on a parallel corpus of Arabic-English sentence pairs, which trains it specifically to translate between the two languages in both directions.
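One simple way to make a single parallel corpus serve both translation directions is to turn every sentence pair into two supervised examples. The sketch below does this with a hypothetical instruction template; the article does not describe Mutarjim's actual prompt format, and `TEMPLATE`, `Example`, and `to_bidirectional_examples` are illustrative names only.

```python
from typing import TypedDict


class Example(TypedDict):
    prompt: str
    target: str


# Hypothetical template (assumption): not Mutarjim's documented prompt format.
TEMPLATE = "Translate the following {src} text into {tgt}:\n{text}\n"


def to_bidirectional_examples(arabic: str, english: str) -> list[Example]:
    """Turn one Arabic-English sentence pair into two examples, one per direction."""
    return [
        {"prompt": TEMPLATE.format(src="Arabic", tgt="English", text=arabic), "target": english},
        {"prompt": TEMPLATE.format(src="English", tgt="Arabic", text=english), "target": arabic},
    ]


examples = to_bidirectional_examples("مرحبا بالعالم", "Hello world")
for ex in examples:
    print(repr(ex["prompt"]), "->", ex["target"])
```

In fine-tuning setups like this, the loss is commonly computed only on the target tokens so the model learns to produce translations rather than to reproduce prompts; whether Mutarjim's training does so is not stated in the article.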

The development of Mutarjim and Tarjama-25 underscores the importance of addressing the specific challenges of languages with limited resources and of building tailored evaluation benchmarks. Attention to these aspects helps extend the benefits of machine translation to more languages and communities.

The public release of both Mutarjim and Tarjama-25 is a significant contribution to the research community, fostering collaboration and accelerating progress in Arabic-English machine translation. Because the model and benchmark are openly available, other researchers can replicate the results, run further experiments, and build on the work. This open approach promotes transparency, reproducibility, and innovation.

Future research directions include exploring techniques to further improve the model’s handling of complex linguistic phenomena, such as idiomatic expressions and cultural nuances. Investigating methods to incorporate contextual information and domain-specific knowledge can enhance translation accuracy and relevance. Additionally, exploring the use of unsupervised or semi-supervised learning techniques can reduce the reliance on large parallel corpora, addressing the data scarcity issue for many language pairs.

Mutarjim and Tarjama-25 provide a solid foundation for future advances in Arabic-English machine translation and a valuable resource for the broader research community. Continued progress in this field could substantially improve communication, education, and access to information, fostering a more connected and inclusive world.

👉 More information

🗞 Mutarjim: Advancing Bidirectional Arabic-English Translation with a Small Language Model

🧠 DOI: https://doi.org/10.48550/arXiv.2505.17894