Researchers investigate large and small language models (LMs) for code authorship attribution, analysing over 12,000 code snippets from 463 developers across six datasets. The results demonstrate that LMs can capture stylometric code patterns, and the analysis offers insights into model behaviour and future directions for automated authorship identification.

Determining who wrote a piece of code is a significant challenge, and a crucial one for maintaining software integrity, detecting plagiarism, and supporting forensic investigations. Subtle variations in coding style, studied under the name stylometry, can serve as identifying markers, yet discerning these patterns becomes increasingly complex in collaborative software projects with many contributors. Researchers are now investigating whether large language models (LLMs), originally developed for natural language processing, can automate this task of code authorship attribution (CAA). A study by Atish Kumar Dipongkor from the University of Central Florida, Ziyu Yao from George Mason University, and Kevin Moran from the University of Central Florida, detailed in their article ‘Reassessing Code Authorship Attribution in the Era of Language Models’, presents an extensive empirical evaluation of both larger and smaller state-of-the-art code LLMs on six diverse datasets comprising over 12,000 code snippets authored by 463 developers. The authors use machine learning interpretability techniques to analyse how these models recognise and exploit stylometric code patterns during authorship attribution, offering insights into the future development of this technology.

Code authorship attribution underpins work in cybersecurity, software forensics, and plagiarism detection, all of which require reliable techniques for distinguishing subtle stylistic variations in source code. Researchers are exploring LLMs as a potential solution, using empirical studies to assess how well they capture the intricate relationships that define individual coding styles. This work addresses a key knowledge gap, namely how accurately these models identify authorship, by applying both larger and smaller state-of-the-art code LLMs to six varied datasets comprising over 12,000 code snippets created by 463 developers.

Traditional methods, often reliant on manually engineered features like identifier naming conventions or code complexity metrics, struggle with this task due to the inherent complexity and variability of coding styles. LLMs, however, offer a promising alternative by learning representations directly from the code itself, potentially capturing more nuanced stylistic patterns. The models employed are trained on vast corpora of code, enabling them to develop an understanding of common coding practices and individual deviations from those norms.
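To make the contrast with learned representations concrete, the sketch below computes a handful of hand-engineered stylometric features of the kind traditional methods rely on (naming conventions, comment density, indentation habits) and attributes a snippet to the author whose average feature profile is closest. The feature set, the `stylometric_features` and `attribute` names, and the nearest-centroid rule are illustrative assumptions, not the baselines evaluated in the study.

```python
import re
from statistics import mean

# Illustrative, hand-picked features (an assumption, not the study's baselines).
# Identifier-like tokens, minus a few common keywords from Python and C-family code.
IDENT_RE = re.compile(r"\b[A-Za-z_][A-Za-z0-9_]*\b")
KEYWORDS = {"def", "return", "if", "else", "for", "in", "while", "import",
            "class", "int", "void", "include", "using", "namespace"}
# Features sharing a [0, 1] scale; the length features would need normalising
# before they could be mixed into the same distance.
RATIO_KEYS = ("snake_case_ratio", "camel_case_ratio", "comment_line_ratio",
              "tab_indent_ratio", "blank_line_ratio")


def stylometric_features(code: str) -> dict:
    """Compute a few classic, manually engineered stylometric features."""
    lines = code.splitlines() or [""]
    non_blank = [ln for ln in lines if ln.strip()]
    indented = [ln for ln in non_blank if ln[0] in " \t"]
    # Crude comment detection for '#', '//' and '/* ... */' styles
    # (C preprocessor lines such as '#include' also match '#').
    comments = [ln for ln in non_blank
                if ln.lstrip().startswith(("#", "//", "/*", "*"))]
    idents = [t for t in IDENT_RE.findall(code) if t not in KEYWORDS]
    n_ids = len(idents) or 1
    snake = [t for t in idents if "_" in t.strip("_")]
    camel = [t for t in idents if re.search(r"[a-z][A-Z]", t)]
    return {
        "mean_line_length": mean(len(ln) for ln in lines),
        "mean_identifier_length": mean(len(t) for t in idents) if idents else 0.0,
        "snake_case_ratio": len(snake) / n_ids,
        "camel_case_ratio": len(camel) / n_ids,
        "comment_line_ratio": len(comments) / (len(non_blank) or 1),
        "tab_indent_ratio": (sum(ln.startswith("\t") for ln in indented) / len(indented)
                             if indented else 0.0),
        "blank_line_ratio": 1 - len(non_blank) / len(lines),
    }


def attribute(snippet: str, corpus: dict) -> str:
    """Toy nearest-centroid attribution over the ratio features only."""
    query = stylometric_features(snippet)
    best_author, best_dist = "", float("inf")
    for author, samples in corpus.items():
        profiles = [stylometric_features(s) for s in samples]
        centroid = {k: mean(p[k] for p in profiles) for k in RATIO_KEYS}
        dist = sum((query[k] - centroid[k]) ** 2 for k in RATIO_KEYS) ** 0.5
        if dist < best_dist:
            best_author, best_dist = author, dist
    return best_author
```

Every feature here is chosen and weighted by hand, which is precisely the bottleneck that LLM-based approaches aim to remove by learning representations directly from the code.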

Interpretability analysis reveals that the models sometimes concentrate on superficial characteristics, such as code formatting (including indentation and whitespace), rather than on deeper stylistic elements indicative of an author’s unique approach. This suggests a need for techniques that encourage the models to learn more robust and meaningful representations, potentially by steering their attention mechanisms toward stylistically meaningful parts of the code or by using contrastive learning. Attention mechanisms determine which parts of the code the model weighs most heavily when making a prediction, while contrastive learning trains the model to place snippets by the same author close together in its representation space and snippets by different authors far apart.
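The contrastive idea can be sketched with a triplet margin loss, one common contrastive-style objective. Given embeddings of an anchor snippet, another snippet by the same author (the positive), and a snippet by a different author (the negative), the loss is zero only once the positive sits closer to the anchor than the negative does, by at least a margin. This is a generic sketch, not a method from the study: the toy two-dimensional vectors, the cosine distance, and the 0.2 margin are assumptions, and in practice the embeddings would come from a code LLM's encoder and the loss would be minimised by gradient descent.

```python
import math


def cosine_distance(u, v):
    """1 - cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / norm


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Triplet margin loss on snippet embeddings.

    anchor/positive: snippets by the same author; negative: a different author.
    The loss is zero once d(anchor, positive) + margin <= d(anchor, negative).
    """
    return max(0.0, cosine_distance(anchor, positive)
               - cosine_distance(anchor, negative) + margin)


# Hypothetical 2-D embeddings standing in for a code LLM's snippet vectors.
alice_1, alice_2, bob_1 = [1.0, 0.0], [0.9, 0.1], [0.0, 1.0]
print(triplet_loss(alice_1, alice_2, bob_1))  # 0.0: authors already well separated
print(triplet_loss(alice_1, bob_1, alice_2))  # labels swapped: large positive loss
```

Because the loss only rewards relative distances between authors, it says nothing about which cues the encoder uses; an encoder could still separate authors by whitespace alone, so objectives like this are usually paired with checks of what the model attends to.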

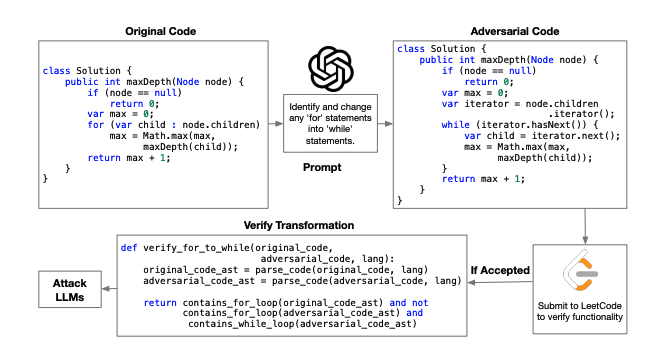

The research demonstrates that LLMs effectively identify coding styles across diverse datasets, frequently outperforming traditional feature-based methods. This capability likely stems from the models’ ability to capture subtle stylistic nuances, such as the use of specific programming constructs, variable naming conventions, and commenting styles. The findings point to several avenues for future research: more interpretable models; the integration of domain-specific knowledge, for example focusing on code written in a particular programming language or for a specific application; and more resilient defences against adversarial attacks, in which malicious actors attempt to disguise their code to evade detection.
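One simple way to probe the formatting sensitivity noted in the interpretability analysis, and a basic form of the disguise an adversary might attempt, is to apply a formatting-only rewrite and check whether the predicted author changes. The sketch assumes any attribution model can be wrapped as a function from source text to an author label; `perturb_formatting` and `is_format_sensitive` are hypothetical helpers, and real adversarial attacks on authorship attribution can go much further, for example by renaming identifiers or restructuring control flow.

```python
from typing import Callable


def perturb_formatting(code: str, spaces_per_tab: int = 4) -> str:
    """Formatting-only rewrite: leading tabs -> spaces, trailing whitespace
    and blank lines removed.

    Behaviour-preserving for typical code, but NOT inside multi-line
    string literals, whose contents this would alter.
    """
    out = []
    for line in code.splitlines():
        line = line.rstrip()
        if not line:
            continue  # drop blank lines
        body = line.lstrip("\t")
        tabs = len(line) - len(body)  # number of leading tab characters
        out.append(" " * (spaces_per_tab * tabs) + body)
    return "\n".join(out) + "\n"


def is_format_sensitive(attribute_fn: Callable[[str], str], code: str) -> bool:
    """True if a formatting-only change alters the predicted author."""
    return attribute_fn(code) != attribute_fn(perturb_formatting(code))


def tab_lover(code: str) -> str:
    """Toy attributor (hypothetical) that keys purely on tab indentation."""
    return "alice" if "\t" in code else "bob"


snippet = "def f(x):\n\n\treturn x  \n"
print(perturb_formatting(snippet))
print(is_format_sensitive(tab_lover, snippet))  # True
```

A model whose prediction flips under such a trivial rewrite is likely relying on surface formatting rather than on the deeper stylistic signals that make attribution robust.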

👉 More information

🗞 Reassessing Code Authorship Attribution in the Era of Language Models

🧠 DOI: https://doi.org/10.48550/arXiv.2506.17120