The pursuit of reliable computation drives ongoing research into error correction, building on the foundational work of pioneers like von Neumann, but creating practical, fault-tolerant circuits presents significant challenges. Anirudh Krishna from IBM Quantum and Gilles Zémor from Institut de Mathématiques de Bordeaux, along with their colleagues, demonstrate a fundamental limitation in balancing the key requirements for these circuits. Their research reveals an inherent trade-off between the rate at which information can be processed, the robustness of the code against errors, and the complexity of the circuits needed to perform calculations on encoded data. This discovery establishes that simultaneously achieving high speed, strong error protection, and simple circuit designs is impossible, a finding with important implications for the development of future quantum and classical computing architectures.

Locally Decodable Codes Enable Fault Tolerance

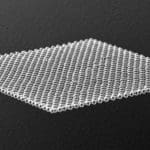

Scientists have demonstrated how locally decodable codes can provide a foundation for building computational systems that tolerate errors, even when hardware components fail. These codes possess a unique property: they allow recovery of the original message by examining only a small portion of the encoded data, unlike traditional codes requiring a full scan. This locality is crucial for designing efficient and robust computational circuits. Researchers focused on erasure errors, where bits are simply missing or unknown, rather than flipped to incorrect values. The approach involves dividing the support of codewords into smaller subsets and making local inferences about the encoded data, allowing for efficient error correction. The study establishes a strong connection between the ability to perform fault-tolerant computation and the existence of specific types of locally decodable codes, known as (q, r)-local codes. The team developed an explicit circuit for implementing an encoded CNOT gate, designed to be robust against a certain level of erasure errors, and analysis reveals that this circuit can tolerate a constant fraction of errors, meaning the number of errors it can correct doesn’t increase with the size of the input.

Circuit Volume Limits for Fault Tolerance

Scientists investigated the fundamental limits on the size of fault-tolerant circuits, exploring how efficiently computations can be performed despite component failures. Researchers defined circuit width as the total number of bits used and depth as the total number of time steps required for execution, using the product of these two, known as volume, as a measure of circuit size. The goal was to determine if this volume could be minimized while maintaining robustness against errors. The team designed circuits to simulate other circuits, creating a ‘fault-tolerant’ version to simulate an original circuit, even when the original circuit contains errors. Crucially, the research demonstrates that achieving a low volume does not guarantee the ability to tolerate a large number of errors. To explore this trade-off, the study employed binary linear codes to encode information within the circuits, proving that if the volume of the fault-tolerant circuit is kept proportional to the original circuit, the error-correcting code used cannot simultaneously have a good rate and a large distance, revealing an inherent conflict between these two properties.

Fault Tolerance Limits Sparse Circuit Depth Rate

Scientists have established fundamental limits on the efficiency of fault-tolerant circuits, demonstrating a trade-off between code rate, distance, and circuit depth. The research rigorously proves that a fault-tolerant circuit cannot simultaneously achieve constant rate, growing distance, and short-depth gadgets needed to perform encoded gates, revealing inherent constraints on circuit design. Experiments demonstrate that if the volume of a fault-tolerant circuit, simulating a circuit of volume V, obeys a certain relationship, the circuit can only tolerate a bounded number of errors. The team focused on sparse circuits, where only one gate operates between registers in each time step, and specifically considered circuits utilizing only CNOT gates. Results show that when using a binary linear code to construct the fault-tolerant circuit, the code cannot possess both a large distance and a high rate, formalizing the intuition that densely packed codewords complicate encoded computation. Measurements confirm that the volume overhead cannot be kept constant, even when employing codes with good rate, necessitating accepting a limited number of correctable errors.

Coding Rate Limits Robust Error Correction

This research establishes a fundamental limitation in the design of error-correcting codes for robust computation, demonstrating that achieving both high coding rate and strong error resilience is inherently difficult. Scientists proved that a code family cannot simultaneously possess constant rate, growing distance, and short-depth gadgets needed to perform operations on encoded data, meaning improving coding rate and error correction capabilities often necessitates accepting more complex, deeper circuits. The team demonstrated this limitation by showing that any code supporting targeted operations must contain a specific type of code known as a (q, r) local code, which are known to have poor coding rates. Consequently, the space overhead required for fault-tolerant circuits is fundamentally linked to the code’s rate; a code with a high rate is necessary to keep space overhead manageable. The findings suggest a trade-off between the complexity of the circuit and the robustness of the computation.

👉 More information

🗞 Tradeoffs on the volume of fault-tolerant circuits

🧠 ArXiv: https://arxiv.org/abs/2510.03057