Referring segmentation, the task of identifying objects in an image or video from a natural language description, typically demands extensive training or complex system designs. Anna Kukleva from the Max Planck Institute for Informatics, Enis Simsar from ETH Zurich, Alessio Tonioni, Muhammad Ferjad Naeem, Federico Tombari, and Jan Eric Lenssen from Google address this challenge with an approach that exploits the semantic understanding already embedded in large generative diffusion models. Their work introduces RefAM, a training-free framework that reads the attention maps of these models to locate the referred object. By filtering out global attention sinks and using stop words as attention ‘magnets’ that soak up background attention, the team achieves state-of-the-art performance on zero-shot referring image and video segmentation benchmarks without any additional training or architectural modifications.

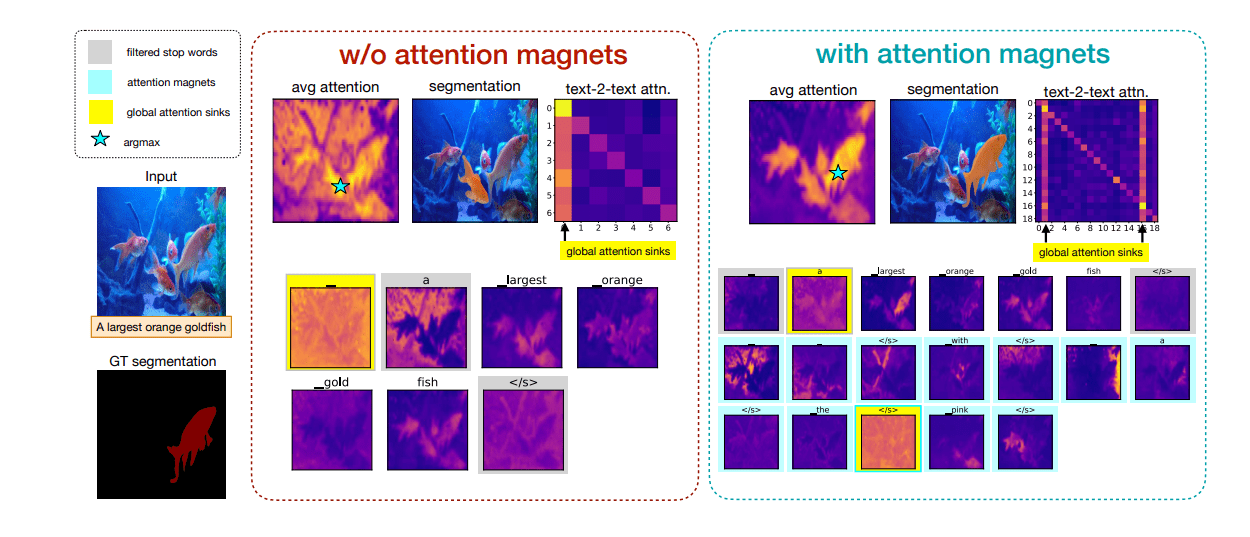

Figure panels: average attention, text-to-text attention, global attention sinks, and segmentation with attention magnets. The figure highlights tokens, such as tokens #1 and #16, that act as Global Attention Sinks (GAS) in the late layers of the DiT architecture. These tokens allocate disproportionately high and nearly uniform attention across all text and image tokens simultaneously, a phenomenon absent in early layers but consistently emerging in deeper blocks. GAS indicate semantic structure, but they are themselves uninformative and can suppress useful signals when they occur on meaningful tokens. Most existing approaches to referring segmentation achieve strong performance only through fine-tuning or by composing multiple models.
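The GAS criterion described above (high and nearly uniform attention) can be turned into a simple detector. This is a minimal sketch under assumptions: each row of `attn` is one token's attention over all text and image tokens taken from a late DiT block, and the thresholds `mean_ratio` and `max_cv` are hypothetical values, not ones reported by the authors.

```python
import numpy as np

def find_global_attention_sinks(attn, mean_ratio=3.0, max_cv=0.5):
    """Return indices of tokens whose attention is both unusually strong and nearly uniform.

    attn: array (num_tokens, num_targets); row i is token i's attention over
          all text and image tokens (assumed layout).
    mean_ratio: a sink's mean must exceed this multiple of the median row mean (hypothetical).
    max_cv: largest coefficient of variation (std / mean) still counted as uniform (hypothetical).
    """
    row_mean = attn.mean(axis=1)
    row_cv = attn.std(axis=1) / (row_mean + 1e-12)
    strong = row_mean > mean_ratio * np.median(row_mean)
    uniform = row_cv < max_cv
    return np.flatnonzero(strong & uniform)

# Synthetic example: 20 tokens attending over 100 targets.
rng = np.random.default_rng(0)
attn = rng.random((20, 100)) * 0.01                # weak, noisy attention
attn[[1, 16]] = 0.5 + rng.random((2, 100)) * 0.01  # tokens #1 and #16: strong and flat
attn[5, :10] = 1.0                                 # token #5: strong but localized

sinks = find_global_attention_sinks(attn)
```

The coefficient-of-variation test is what separates a flat sink (#1, #16) from a strong but localized, and therefore informative, token such as #5. Discarding the flagged tokens before aggregating attention corresponds to the sink filtering described in the article.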

Diffusion and Attention for Segmentation

Scientists developed RefAM, a training-free framework for referring image and video object segmentation. Experiments show that RefAM achieves strong performance on standard benchmarks without task-specific training, and the team supports this with quantitative results, qualitative examples, and detailed ablation studies. The method extracts cross-attention features from large pre-trained diffusion transformers and refines the resulting attention maps using attention magnets, namely stop words and extended stop words, so that the maps focus on the relevant regions.

This involves removing the attention maps of common stop words, which reduces noise and concentrates the signal on content words. The researchers also append extra stop words that absorb attention from the background, further cleaning up the maps. In addition, the method extracts noun phrases and spatial relations from the expression, guiding attention toward semantically relevant regions and adding spatial cues. The final segmentation mask is produced by Segment Anything Model 2 (SAM2), prompted with the most prominent point of the refined attention map. The method was evaluated on RefCOCO, RefCOCO+, RefCOCOg, and Ref-DAVIS17.

RefAM achieves competitive or state-of-the-art performance on these benchmarks without task-specific training. Ablation studies confirm the contribution of each component: attention magnets, noun phrase extraction, and spatial bias extraction. Qualitative examples show the method accurately segmenting objects from natural language descriptions. Because the method relies on large pre-trained diffusion models and SAM2, it may inherit their biases. While not strictly required, language-model-generated captions can help when high-quality captions are unavailable.
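The localization step described in this section (drop stop-word maps, aggregate what remains, bias it with spatial cues, and prompt SAM2 with the strongest point) can be sketched in NumPy. This is a minimal illustration under assumptions: word-level tokens, one precomputed attention map per token, a hypothetical stop-word list, and a hypothetical linear-ramp `spatial_prior` (the article does not say how RefAM encodes spatial relations). Noun-phrase extraction is not modeled. The returned (x, y) point is what one would hand to SAM2 as a positive point prompt; that call is omitted.

```python
import numpy as np

# Hypothetical stop-word list for illustration; not the list used by RefAM.
STOP_WORDS = {"a", "an", "the", "of", "on", "in", "to", "and", "with", "is"}

def aggregate_content_attention(tokens, maps, stop_words=STOP_WORDS):
    """Average the per-token attention maps of content words, skipping stop words.

    tokens: list of words, one per text token (word-level simplification).
    maps: array of shape (len(tokens), H, W), one attention map per token.
    """
    keep = [i for i, t in enumerate(tokens) if t.lower() not in stop_words]
    if not keep:
        raise ValueError("expression contains only stop words")
    agg = maps[keep].mean(axis=0)
    # Min-max normalise to [0, 1] so the map scale does not depend on the expression.
    return (agg - agg.min()) / (agg.max() - agg.min() + 1e-12)

def spatial_prior(tokens, height, width, floor=0.5):
    """Hypothetical spatial bias: a linear ramp toward the side a spatial word names.

    Weights fall from 1.0 to `floor` across the image; no spatial word gives all ones.
    """
    words = {t.lower() for t in tokens}
    ramp_x = np.linspace(1.0, floor, width)   # highest at the left edge
    ramp_y = np.linspace(1.0, floor, height)  # highest at the top edge
    prior = np.ones((height, width))
    if "left" in words:
        prior *= ramp_x[None, :]
    if "right" in words:
        prior *= ramp_x[::-1][None, :]
    if "top" in words:
        prior *= ramp_y[:, None]
    if "bottom" in words:
        prior *= ramp_y[::-1][:, None]
    return prior

def most_prominent_point(heatmap):
    """Return the (x, y) location of the heatmap maximum, usable as a point prompt."""
    y, x = np.unravel_index(np.argmax(heatmap), heatmap.shape)
    return int(x), int(y)

# Toy example: "the dog on the left" with 8x8 attention maps.
tokens = ["the", "dog", "on", "the", "left"]
maps = np.zeros((len(tokens), 8, 8))
maps[0, 0, 0] = 5.0   # stop word "the" peaks on a background pixel
maps[3, 7, 7] = 5.0   # second "the" peaks on another background pixel
maps[1, 3, 1] = 0.9   # "dog" responds to a dog on the left...
maps[1, 3, 6] = 1.0   # ...and slightly more to a dog on the right

heat = aggregate_content_attention(tokens, maps) * spatial_prior(tokens, 8, 8)
point = most_prominent_point(heat)
```

In the toy example, averaging all maps would put the peak on a background pixel claimed by “the”; filtering stop words moves it to the right-hand dog, and the prior for “left” then moves it to the left-hand dog at (1, 3). Because only this single point is passed on, an object whose attention peak covers just part of it can end up undersegmented.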

Using a single point from the attention map as the SAM2 prompt can lead to undersegmentation in some cases. For video referring object segmentation, the current implementation does not explicitly model the temporal aspect of referring expressions and always localizes the object in the first frame. The authors acknowledge potential biases in the underlying foundation models, stress the importance of training such models on diverse and carefully curated datasets, and note that the method is intended for research applications rather than high-risk or sensitive decision-making domains. Key contributions include a training-free framework for referring object segmentation, the use of attention magnets to refine attention maps, a demonstration of the method’s effectiveness on standard benchmarks, and a detailed ablation analysis of its components. Overall, the research shows that the attention of pre-trained diffusion models, once cleaned of sinks and stop-word noise, can deliver strong referring segmentation without task-specific training.

Attention Sinks Hinder Referring Segmentation Performance

The researchers tackle referring image and video segmentation by exploiting features from diffusion transformers directly, with no architectural modifications or additional training. They identify and analyze global attention sinks, tokens that accumulate attention across both the language and visual streams, and show that filtering these sinks does not harm performance: the sinks carry no useful signal for grounding and act mainly as distractions within the model. Further investigation shows that certain stop words act as local background attractors, drawing attention toward irrelevant regions of an image or video.

Surprisingly, appending additional stop words redistributes background attention, yielding cleaner and more localized heatmaps. Appending random vectors instead of stop words also improved results, but real stop words consistently performed better, likely because they appear repeatedly during pre-training. Stop words can therefore serve as a simple yet effective tool for redistributing attention. Building on these findings, the team developed RefAM, a training-free grounding framework that augments referring expressions with stop words, filters the attention maps, and aggregates the remaining cross-attention for accurate localization.
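Augmenting the expression is plain text manipulation; the part that needs care is tracking which token positions to keep once the attention maps come back. A minimal sketch, assuming whitespace (word-level) tokens rather than the diffusion model's real subword tokenizer, with a hypothetical stop-word list and a hypothetical set of appended magnets:

```python
# Hypothetical stop-word list and appended magnets; RefAM's actual choices are not given here.
STOP_WORDS = {"a", "an", "the", "of", "on", "in", "to", "and", "with", "is"}
EXTRA_MAGNETS = ("and", "the", "of", "a")

def augment_with_magnets(expression, extra=EXTRA_MAGNETS, stop_words=STOP_WORDS):
    """Append extra stop words to a referring expression.

    Returns (tokens, keep): tokens is the augmented word sequence fed to the
    text encoder; keep holds the positions of content words, the only ones whose
    attention maps are aggregated after the forward pass. Original and appended
    stop words alike are discarded.
    """
    tokens = expression.split() + list(extra)
    keep = [i for i, t in enumerate(tokens) if t.lower() not in stop_words]
    return tokens, keep

tokens, keep = augment_with_magnets("the man in a red shirt")
print(" ".join(tokens))
print([tokens[i] for i in keep])
```

The appended words are never read out; their only job is to sit in the sequence during the forward pass and absorb attention that would otherwise leak onto the content words' maps.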

Tests show that RefAM achieves state-of-the-art zero-shot referring segmentation on both image and video benchmarks, consistently outperforming prior training-free methods. The method handles both image and video tasks within a single framework, without any training or additional components. The pipeline extracts cross-attention maps from rectified-flow diffusion transformers, identifies and filters attention sinks, and redistributes surplus attention through additional tokens. An analysis of transformer blocks 7 and 42 illustrates how text-to-text and text-to-image attention behave, with particular focus on the “_patches” token. The result is a robust and efficient method for localizing objects from natural language descriptions in a zero-shot setting.
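Why would extra tokens redistribute surplus attention? If, as in standard transformer attention, each image query normalizes its weights over all keys with a softmax, any added key that scores highly for a pixel takes probability mass away from the other keys at that pixel. The toy calculation below illustrates this with made-up logits for one background pixel and one foreground pixel. It assumes that appended stop words score high on background pixels (like the existing stop word) and low on the referred object; it is an illustration of the mechanism, not RefAM's implementation.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over a 1-D array of attention logits."""
    z = np.exp(logits - logits.max())
    return z / z.sum()

def content_share(logits, n_content, magnet_logits=()):
    """Attention mass one image query places on the first n_content text keys,
    optionally after appending extra stop-word keys with the given logits."""
    full = np.concatenate([logits, np.asarray(magnet_logits, dtype=float)])
    return softmax(full)[:n_content].sum()

# Toy logits (assumed) over the keys ["dog", "left", "the"].
background = np.array([1.0, 0.8, 2.0])  # a pixel outside the referred object
foreground = np.array([3.0, 2.5, 0.5])  # a pixel on the referred object

# Assumption: appended stop words score like "the" does at the same pixel.
bg_before, bg_after = content_share(background, 2), content_share(background, 2, [2.0, 2.0])
fg_before, fg_after = content_share(foreground, 2), content_share(foreground, 2, [0.5, 0.5])

print(f"background pixel: {bg_before:.3f} -> {bg_after:.3f}")
print(f"foreground pixel: {fg_before:.3f} -> {fg_after:.3f}")
```

With these numbers the background pixel loses more than half of its content-word attention while the foreground pixel loses under 10%, so the content-word heatmaps become relatively more concentrated on the referred object.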

Stop Word Filtering Improves Segmentation Accuracy

The team introduces RefAM, a training-free framework for referring segmentation that leverages cross-attention features from diffusion transformers. The researchers found that stop words act as attention magnets within these transformers, accumulating significant attention and introducing noise. By filtering stop words and global attention sinks (tokens that draw high, nearly uniform attention across the sequence), the team sharpens localization without retraining or modifying the underlying models. The approach consistently outperforms existing training-free methods on standard image and video segmentation benchmarks, achieving state-of-the-art results with improvements of up to 2.5% on key metrics.

These findings demonstrate the potential of diffusion attention as a robust foundation for grounding referring expressions in visual data. The authors acknowledge that, although the method effectively reduces noise and improves localization, the mechanisms behind attention sinks and stop words require further investigation. Future work could explore how these phenomena relate to language processing in diffusion models more broadly, potentially leading to even more accurate segmentation techniques.

👉 More information

🗞 RefAM: Attention Magnets for Zero-Shot Referral Segmentation

🧠 ArXiv: https://arxiv.org/abs/2509.22650