The inner workings of self-attention, a cornerstone of modern natural language processing, remain surprisingly mysterious despite its success in building advanced language-learning machines. Tal Halevi, Yarden Tzach, and Ronit D. Gross, along with Shalom Rosner and Ido Kanter, all from Bar-Ilan University, now shed light on how these systems ‘pay attention’ to language. Their research introduces a novel method for quantifying information processing within self-attention mechanisms, revealing a clear specialisation among attention heads. By analysing the BERT-12 architecture, the team demonstrates that attention progressively shifts from broad, long-range relationships to short-range connections within sentences, and that each head concentrates on distinct linguistic features and specific tokens in the text. This work is a step towards a quantitative understanding of how machines learn, and it suggests a practical approach to text segmentation based on semantic features.

How Transformers Learn: Separating Signal from Noise

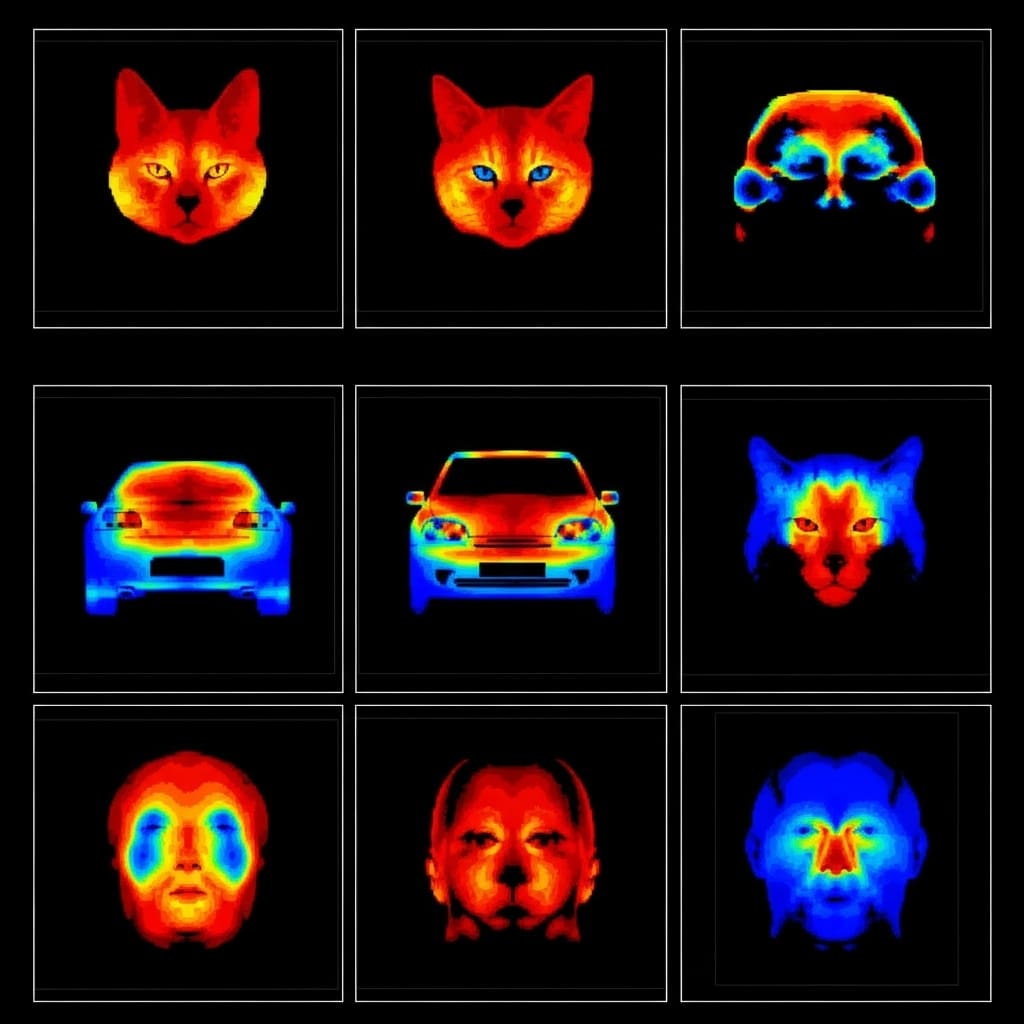

This research investigates how Transformer models such as BERT learn and represent language, moving beyond simple performance metrics to examine their internal mechanisms and identify inefficiencies. The team aims to create a quantifiable language for assessing learning, focusing on distinguishing meaningful signal from random noise inside these models. A central tool is the context similarity matrix, which shows how the model relates different parts of the input text and offers insight into its internal representation. The study confirms that different attention heads specialize in different aspects of sentence structure, mirroring observations in computer vision.

The researchers found that the vector space created by Transformers contains noise, indicated by high similarity scores between unrelated tokens, which points to inefficiencies in the representation. As information passes through the Transformer blocks, the model appears to reduce language entropy through its task of predicting masked words. The authors question whether the current vector space is optimal, see room for gains in efficiency and clarity, and highlight the impact of tokenization methods on model performance. Drawing parallels to computer vision and physics, they connect attention mechanisms to a generalized Potts model, proposing a physical interpretation of the learning process.
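
Shannon entropy, H(p) = -Σ p_i log₂ p_i, gives a concrete meaning to the idea of reducing entropy. The minimal sketch below assumes that the model's prediction for one masked position is available as a probability distribution over candidate words. The two toy distributions are invented for illustration and are not BERT outputs. A confident prediction has low entropy, and a uniform one has the maximum entropy possible for its vocabulary size.

```python
import math

def entropy_bits(probs):
    """Shannon entropy H(p) = -sum p_i * log2(p_i), in bits.

    Zero-probability entries contribute nothing (the limit of p*log p as p -> 0).
    """
    total = sum(probs)
    if not math.isclose(total, 1.0, rel_tol=1e-9, abs_tol=1e-9):
        raise ValueError(f"probabilities must sum to 1, got {total}")
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical predictions for one masked word over a 4-word toy vocabulary.
uniform = [0.25, 0.25, 0.25, 0.25]    # context gives no information
confident = [0.97, 0.01, 0.01, 0.01]  # context nearly pins down the word

print(f"uniform:   {entropy_bits(uniform):.3f} bits")
print(f"confident: {entropy_bits(confident):.3f} bits")
```

The uniform case reaches log₂ 4 = 2 bits. The confident case is far lower, which is the sense in which better context "reduces" entropy.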

They emphasize the need to define and measure the signal-to-noise ratio within Transformer architectures to better understand learning efficiency. Directions they point to include pruning techniques, more efficient architectures such as Mamba, the search for a universal mechanism underlying successful deep learning models, and quantifiable measures of that signal-to-noise ratio. Further research could compare Transformers with other architectures, such as encoder-decoder models, and examine performance on NLP classification tasks to determine whether current learning methods are optimal. The broader goal is to move from simply using Transformer models to understanding how they learn and how to improve their efficiency and interpretability.
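
The article does not say how the signal-to-noise ratio should be defined. The sketch below uses one hypothetical definition, purely for illustration:

- Token pairs from the same sentence count as signal, and all other pairs count as noise.
- The ratio is the mean similarity of signal pairs divided by the mean absolute similarity of noise pairs.

The function name `signal_to_noise`, the pairing rule, and the toy matrix are all assumptions.

```python
def signal_to_noise(sim, related):
    """Hypothetical SNR: mean similarity of related pairs / mean |similarity| of unrelated pairs.

    sim: square matrix (list of lists) of pairwise token similarities.
    related: function (i, j) -> bool deciding whether a pair counts as signal.
    Only off-diagonal pairs with i < j are used.
    """
    signal, noise = [], []
    n = len(sim)
    for i in range(n):
        for j in range(i + 1, n):
            (signal if related(i, j) else noise).append(sim[i][j])
    if not signal or not noise:
        raise ValueError("need at least one related and one unrelated pair")
    mean_signal = sum(signal) / len(signal)
    mean_noise = sum(abs(s) for s in noise) / len(noise)
    return mean_signal / mean_noise if mean_noise > 0 else float("inf")

# Toy example: tokens 0-2 form one sentence, tokens 3-4 another.
sentence_id = [0, 0, 0, 1, 1]
sim = [
    [1.0, 0.8, 0.7, 0.1, 0.2],
    [0.8, 1.0, 0.9, 0.0, 0.1],
    [0.7, 0.9, 1.0, 0.2, 0.1],
    [0.1, 0.0, 0.2, 1.0, 0.6],
    [0.2, 0.1, 0.1, 0.6, 1.0],
]
snr = signal_to_noise(sim, lambda i, j: sentence_id[i] == sentence_id[j])
print(f"SNR = {snr:.2f}")  # SNR = 6.43
```

Other rules for what counts as a related pair would give a different ratio. For example, one alternative is to pair repeated tokens, which some heads are reported to track. Any well-defined pairing rule turns the qualitative notion into a number.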

Token Vector Dynamics in BERT’s Attention Layers

The scientists developed a new method for quantifying information processing within the self-attention mechanism of Transformer models, focusing on the BERT-12 architecture. Instead of examining attention weights, they analyzed how token vector representations change as they propagate through the network, giving a more complete view of the learning process. The team defined a context similarity matrix, whose entries are scalar products between token vectors, to measure relationships between tokens within each attention head and layer. This yields one matrix per head. Higher values indicate stronger alignment between two token representations and lower values indicate weaker similarity, giving a quantifiable measure of contextual relationships.
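
Below is a minimal sketch of the matrix as described. For a single head in a single layer, entry (i, j) is the scalar product of the output vectors of tokens i and j. The 3-dimensional vectors are invented. In BERT-base each head's output would be 64-dimensional (768 hidden units split across 12 heads). The sketch assumes nothing about any normalization the authors may apply.

```python
def dot(u, v):
    """Scalar product of two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))

def context_similarity(vectors):
    """Context similarity matrix C with C[i][j] = vectors[i] . vectors[j].

    vectors: per-token output vectors of one attention head in one layer.
    C is symmetric, and its diagonal holds each vector's squared norm.
    """
    return [[dot(u, v) for v in vectors] for u in vectors]

# Hypothetical head outputs for a 4-token input (values invented for illustration).
head_out = [
    [1.0, 0.0, 0.5],
    [0.9, 0.1, 0.4],
    [0.0, 1.0, 0.0],
    [0.1, 0.9, 0.2],
]
C = context_similarity(head_out)
for row in C:
    print(" ".join(f"{x:5.2f}" for x in row))
```

Here tokens 0 and 1 form one high-similarity pair and tokens 2 and 3 another. The scalar product is not normalized, so a long vector produces large entries even with moderate alignment. Dividing by the vector norms would instead give cosine similarity, which is a different measure.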

Analysis of these matrices revealed that the initial layers exhibit long-range similarities between tokens. As information propagates through deeper layers, a preference for short-range similarity develops, culminating in attention heads that create strong similarities within the same sentence. Analysis of individual heads showed that each head tends to focus on a unique token, building similarity pairs centered on that element, a sign of functional specialization. The study also found that the final layers prioritize sentence separator tokens, suggesting that the method could be applied to text segmentation based on semantic features. This detailed analysis of the vector space offers a new understanding of the dynamics of self-attention and its role in effective language learning.
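
One way to quantify the shift from long-range to short-range similarity is to average the matrix entries at each token distance |i - j|. The minimal sketch below takes a context similarity matrix given as a list of lists. Its two toy matrices are invented to show the contrast and are not data from the paper:

- The "early" layer has uniform similarity.
- The "late" layer has similarity that decays with distance.

`short_range_share` is a hypothetical summary statistic.

```python
def similarity_by_distance(C):
    """Mean off-diagonal similarity for each token distance d = |i - j|."""
    n = len(C)
    profile = {}
    for d in range(1, n):
        pairs = [C[i][i + d] for i in range(n - d)]
        profile[d] = sum(pairs) / len(pairs)
    return profile

def short_range_share(C, max_dist):
    """Fraction of total positive off-diagonal similarity in pairs with |i - j| <= max_dist."""
    n = len(C)
    near = far = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            s = max(C[i][j], 0.0)  # ignore negative similarities
            if j - i <= max_dist:
                near += s
            else:
                far += s
    total = near + far
    return near / total if total > 0 else 0.0

n = 6
early = [[1.0 if i == j else 0.5 for j in range(n)] for i in range(n)]  # flat, long-range
late = [[0.5 ** abs(i - j) for j in range(n)] for i in range(n)]         # decays with distance

for name, C in (("early", early), ("late", late)):
    print(name, f"{short_range_share(C, 1):.3f}", similarity_by_distance(C))
```

In the flat matrix, adjacent pairs carry only their proportional share of the similarity, which is 5 of 15 pairs. In the decaying matrix, they carry most of it.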

BERT Learns Sentence Boundaries Through Attention

This work quantifies information processing within Transformer architectures, examining the BERT-12 model to understand how learning occurs across its layers and attention heads. The scientists developed a method to analyze the vector space created by the self-attention mechanism, revealing how linguistic features are identified and how context is captured. Experiments demonstrate that the final layers consistently focus attention on sentence separator tokens, suggesting an approach to text segmentation based on semantic features. The team derived a context similarity matrix from the scalar products between token vectors and found distinct similarities between token pairs within each attention head and layer.

Results show that different heads specialize in identifying specific linguistic characteristics: some focus on repeated tokens, while others prioritize tokens that appear in common contexts. This specialization is reflected in the evolving distribution of distances between similar token pairs. The distribution shifts from long-range similarities in the initial layers to short-range similarities in later ones, ultimately favoring strong similarities within the same sentence. Analyzing individual heads, the scientists found that each head tends to focus on a unique token, building similarity pairs around that element. Measurements confirm that attention becomes increasingly centralized across the layers, culminating in a focus on sentence separator tokens, a trend observed across many architectures. Because its entries are scalar products, the context similarity matrix reflects both the alignment and the size of token vectors. It highlights pairs with high similarity, allows relationships between different token representations to be inferred, and reveals high context similarity around sentence separators. This detailed analysis provides a quantifiable understanding of the learning process within Transformer models.
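
One hypothetical way to find the token a head "focuses" on is to take the head's strongest similarity pairs and count how often each token appears in them. The procedure and its `top_k` parameter are illustrative assumptions, not the authors' method. So is the toy matrix, in which the separator token `[SEP]` is strongly similar to every other token.

```python
from collections import Counter

def focus_token(C, tokens, top_k=3):
    """Token appearing most often among the top_k most similar off-diagonal pairs.

    C: context similarity matrix of one head; tokens: the token strings.
    Returns (token, count). Ties in count go to the earliest position.
    """
    n = len(C)
    pairs = sorted(
        ((C[i][j], i, j) for i in range(n) for j in range(i + 1, n)),
        reverse=True,
    )[:top_k]
    counts = Counter()
    for _, i, j in pairs:
        counts[i] += 1
        counts[j] += 1
    best = max(counts, key=lambda idx: (counts[idx], -idx))
    return tokens[best], counts[best]

tokens = ["[CLS]", "the", "cat", "sat", "[SEP]"]
C = [
    [1.0, 0.1, 0.2, 0.1, 0.7],
    [0.1, 1.0, 0.3, 0.2, 0.8],
    [0.2, 0.3, 1.0, 0.4, 0.9],
    [0.1, 0.2, 0.4, 1.0, 0.6],
    [0.7, 0.8, 0.9, 0.6, 1.0],
]
print(focus_token(C, tokens))  # ('[SEP]', 3)
```

Running the same count on every head would show whether different heads settle on different focus tokens, as the text reports.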

Transformer Layers Segment Text Semantically

This research presents a novel method for quantifying information processing within transformer networks, specifically examining the vector space created by the self-attention mechanism. Through analysis of the BERT-12 architecture, scientists demonstrate that later layers of the network prioritize sentence separator tokens, offering a potential pathway for text segmentation grounded in semantic understanding. The team derived a context similarity matrix, measuring relationships between token vectors, and revealed that different attention heads within each layer specialize in distinct linguistic characteristics, such as identifying repeated tokens or recognizing contextual commonalities. The findings indicate a progression in how the network processes information, moving from long-range similarities in initial layers to a focus on short-range relationships within sentences in later layers.
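
The article does not describe a segmentation procedure. The sketch below shows one hypothetical way to turn a late-layer similarity matrix into segments. It assumes that tokens of the same sentence are more similar to each other than to tokens of other sentences, which is the within-sentence pattern described above. The greedy rule and the threshold value are illustrative choices.

```python
def segment(C, threshold):
    """Greedy segmentation of tokens 0..n-1 using a context similarity matrix C.

    A new segment starts at token j when j's mean similarity to the tokens
    already in the current segment falls below threshold.
    Returns a list of segments, each a list of token indices.
    """
    if not C:
        return []
    segments = [[0]]
    for j in range(1, len(C)):
        current = segments[-1]
        mean_sim = sum(C[i][j] for i in current) / len(current)
        if mean_sim < threshold:
            segments.append([j])
        else:
            current.append(j)
    return segments

# Toy block-structured matrix: tokens 0-2 and 3-5 belong to different sentences.
sentence = [0, 0, 0, 1, 1, 1]
C = [[1.0 if i == j else (0.8 if sentence[i] == sentence[j] else 0.1)
      for j in range(6)] for i in range(6)]
print(segment(C, threshold=0.5))  # [[0, 1, 2], [3, 4, 5]]
```

A threshold that is too low merges everything into one segment, and one that is too high splits every token apart. In practice it would have to be tuned on the similarity scale of the layer being used.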

Each attention head concentrates on unique tokens and builds similarity pairings around them, contributing to a refined understanding of contextual relationships. While acknowledging some noise in the vector space, the authors suggest the context similarity matrix offers a more fundamental and explainable view of transformer behavior than attention maps alone. Future work should focus on enabling the model to capture long-range contextual relationships, potentially drawing inspiration from biological learning systems, and on reducing noise within the vector space to optimize performance.

👉 More information

🗞 Self-attention vector output similarities reveal how machines pay attention

🧠 ArXiv: https://arxiv.org/abs/2512.21956