Diffusion Transformers are a powerful approach to image and video generation, but they have a fundamental limitation when processing data at varying resolutions. Haoyu Wu, Jingyi Xu, and Qiaomu Miao from Stony Brook University, along with Dimitris Samaras and Hieu Le, demonstrate that standard positional encoding methods cause the attention mechanism to fail when it combines information from different spatial grids, leading to blurred images and noticeable artifacts. The team traces the root cause to a mismatch in how positional information is interpreted across resolutions, which destabilizes the generative process. To address this, they introduce a technique that aligns positional encodings so that spatial relationships are interpreted consistently regardless of resolution. The technique restores stability and significantly improves the quality and efficiency of mixed-resolution image and video generation, surpassing existing state-of-the-art methods.

Mixed Resolution Generation with Saliency Guidance

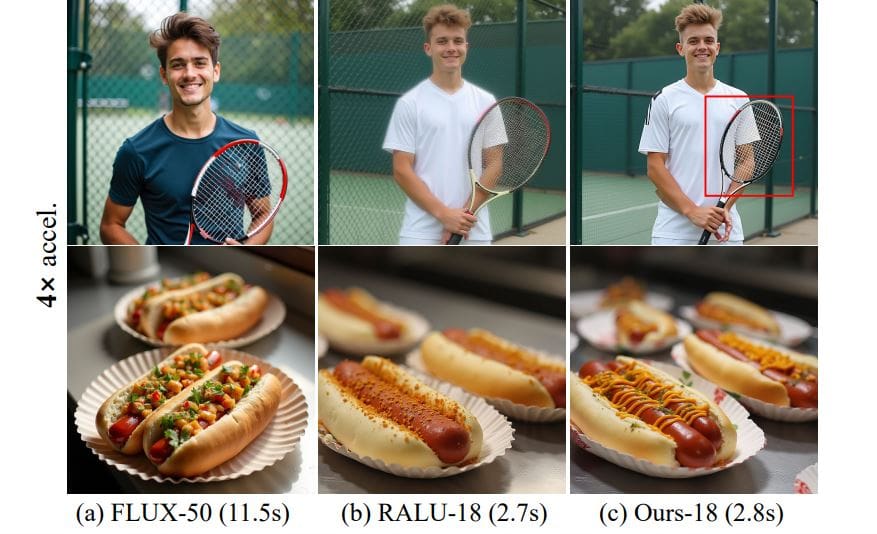

This research details a novel method for generating images and videos at varying resolutions, improving efficiency without sacrificing quality. The core idea involves selectively rendering parts of an image or video at high resolution while processing the rest at lower resolutions, intelligently guided by techniques like RoPE interpolation and saliency prediction. Diffusion models, specifically FLUX and Wan2.1, efficiently resize latent representations using convolutional networks to achieve this.

Resolving Phase Aliasing in Diffusion Transformers

Researchers identified a critical failure mode in Diffusion Transformers (DiTs) when processing mixed-resolution data, where standard linear interpolation of rotary positional embeddings (RoPE) causes the attention mechanism to collapse, resulting in blurred images or instability. This occurs because the interpolation forces attention heads to compare RoPE phases sampled at incompatible rates, creating phase aliasing that destabilizes the generative process. To resolve this, scientists developed Cross-Resolution Phase-Aligned Attention (CRPA), a training-free method that eliminates the failure by modifying only the RoPE index map, expressing all key and value positions on the query’s stride. This ensures equal physical distances induce identical phase increments, restoring precise phase patterns. A Boundary Expand-and-Replace step further improves local consistency near resolution transitions by softly exchanging features between low- and high-resolution sides.
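The index-map idea described above can be illustrated with a minimal one-dimensional sketch. It is not the authors' implementation: the function names, the toy dimension of 8, the RoPE base of 10000, and the "native" baseline (each token indexed on its own grid, a simplified stand-in for the interpolation baseline) are all assumptions made for illustration. The sketch only shows the stated property that, once every position is expressed on the query's stride, equal physical distances yield identical phase increments.

```python
import math

def rope_angles(pos, dim=8, base=10000.0):
    """Rotary angles for a scalar position: pos * base^(-2i/dim) per channel pair."""
    return [pos * base ** (-2.0 * i / dim) for i in range(dim // 2)]

def phase_increment(q_pos, k_pos, dim=8):
    """Relative phase between a query and a key, one value per frequency."""
    q_ang = rope_angles(q_pos, dim)
    k_ang = rope_angles(k_pos, dim)
    return [k - q for q, k in zip(q_ang, k_ang)]

def native_index(physical, own_stride):
    """Assumed baseline: a token is indexed in units of its own grid's stride."""
    return physical / own_stride

def aligned_index(physical, query_stride):
    """Assumed CRPA-style remap: every token is indexed on the query's stride."""
    return physical / query_stride

# Hypothetical setup: a high-resolution query (stride 1) at physical position 0,
# and two keys at the same physical distance of 4 units from it.
query_stride = 1.0
hi_res_key = {"physical": 4.0, "stride": 1.0}   # key on the fine grid
lo_res_key = {"physical": 4.0, "stride": 2.0}   # key on the coarse grid

# Native indexing: the coarse key lands on index 2, the fine key on index 4,
# so the same physical distance produces two different phase increments.
native_hi = phase_increment(0.0, native_index(hi_res_key["physical"], hi_res_key["stride"]))
native_lo = phase_increment(0.0, native_index(lo_res_key["physical"], lo_res_key["stride"]))

# Aligned indexing: both keys are expressed on the query's stride,
# so the same physical distance produces the same phase increment.
aligned_hi = phase_increment(0.0, aligned_index(hi_res_key["physical"], query_stride))
aligned_lo = phase_increment(0.0, aligned_index(lo_res_key["physical"], query_stride))

phases_match_native = native_hi == native_lo
phases_match_aligned = aligned_hi == aligned_lo
```

In this toy setting only the index map changes between the two cases; the rotary angles and the attention computation are untouched, which is consistent with the description of CRPA as training-free.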

Diffusion Transformers Fail with Mixed Resolution Data

The failure mode is structural: linear interpolation of rotary positional embeddings (RoPE) forces attention heads to compare phases sampled at incompatible rates, which creates phase aliasing and causes the attention mechanism to collapse. Pretrained Diffusion Transformers (DiTs) proved particularly vulnerable, exhibiting strong sensitivity to specific phases. Measurements of head response using cosine similarity confirmed that even small distortions in offset, introduced by mixed-resolution interpolation, produce large deviations from the expected kernel response. Cross-Resolution Phase-Aligned Attention (CRPA), a training-free method, eliminates the failure by modifying the RoPE index map so that all positions are expressed in terms of the query’s stride. Detailed analysis of attention head responses demonstrated that CRPA restores precise phase patterns, stabilizing all heads and layers uniformly.
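A rough feel for why small offset distortions matter can be had from a toy calculation. The sketch below is an assumption-laden illustration, not the paper's measurement protocol: the query and key are the same hypothetical unit-pattern vector, the dimension of 8 and base of 10000 are arbitrary, and the half-token distortion is chosen only as an example. It computes the cosine similarity between a RoPE-rotated query and key at an intended offset and at a slightly distorted one.

```python
import math

def rope_rotate(vec, pos, base=10000.0):
    """Apply a 1-D rotary embedding to an even-length vector at scalar position pos."""
    dim = len(vec)
    out = []
    for i in range(dim // 2):
        angle = pos * base ** (-2.0 * i / dim)
        x, y = vec[2 * i], vec[2 * i + 1]
        out.append(x * math.cos(angle) - y * math.sin(angle))
        out.append(x * math.sin(angle) + y * math.cos(angle))
    return out

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def head_response(offset, dim=8):
    """Toy head response: similarity of one vector placed at position 0 and at `offset`."""
    v = [1.0, 0.0] * (dim // 2)  # hypothetical query/key content
    return cosine_similarity(rope_rotate(v, 0.0), rope_rotate(v, offset))

expected = head_response(1.0)    # offset of one token, as on a single uniform grid
distorted = head_response(1.5)   # same pair after an assumed half-token index distortion
deviation = abs(expected - distorted)
```

In this toy case most of the change comes from the highest-frequency channel pair, whose phase moves by half a radian for a half-token error, while the low-frequency pairs barely change.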

Phase Alignment Resolves Mixed Resolution Issues

Researchers identified a fundamental limitation in Diffusion Transformers when processing images or videos at mixed resolutions, where standard methods of interpolating positional embeddings cause the attention mechanism to fail. To address this, the researchers developed Cross-Resolution Phase-Aligned Attention, a technique that ensures consistent phase patterns regardless of resolution by modifying how positional information is indexed. Combined with a Boundary Expand-and-Replace procedure to smooth transitions, the approach enables high-fidelity image and video generation with improved efficiency.

👉 More information

🗞 One Attention, One Scale: Phase-Aligned Rotary Positional Embeddings for Mixed-Resolution Diffusion Transformer

🧠 ArXiv: https://arxiv.org/abs/2511.19778