Multimodal large language models consistently favour textual information over visual input, which limits their ability to draw meaningful conclusions from images. Xinhan Zheng, Huyu Wu, and Xueting Wang, from the University of Science and Technology of China, along with Haiyun Jiang from Shanghai Jiao Tong University, investigated the origins of this preference, challenging the prevailing view that it stems from imbalances in training data. Their research demonstrates that the bias is built into the models’ internal architecture, specifically the attention mechanism. The team found that visual tokens receive lower priority during attention because their key representations differ markedly from those of text, creating a mismatch in how the model weighs the two types of input. Rather than simply rebalancing data, this finding points towards tackling a fundamental architectural limitation, a crucial step towards more balanced and reliable multimodal AI systems.

Current vision-language models exhibit a strong preference for textual inputs when processing multimodal data, limiting their ability to reason from visual evidence. The authors propose that this text bias originates inside the model rather than from external factors such as data imbalance. Specifically, they hypothesize that visual key vectors, the representations of image tokens that attention queries are matched against, are distributed very differently from the text key space the decoder learned during language-only pretraining, so they are under-utilized. To test this hypothesis, the team designed experiments that isolate and quantify the impact of the visual key distribution on model performance.
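The hypothesis above can be made concrete with a toy calculation. The sketch below computes how much of one query's softmax attention lands on text keys versus image keys. It is an illustration only, not the authors' procedure. The 2-D vectors are hand-picked assumptions, and `attention_mass_by_modality` is a hypothetical helper. Keys that sit away from the region a query was trained to match produce smaller dot products, and therefore receive a smaller share of attention, even when both modalities contribute the same number of tokens.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_mass_by_modality(query, keys, modalities):
    """Hypothetical helper: fraction of softmax attention per modality.

    query: list of floats; keys: list of key vectors;
    modalities: one label per key, e.g. "text" or "image".
    """
    d = len(query)
    # Scaled dot-product scores, as in standard transformer attention.
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    mass = {}
    for w, mod in zip(weights, modalities):
        mass[mod] = mass.get(mod, 0.0) + w
    return mass

# Assumed toy setup: text keys lie near the query direction, while image keys
# occupy a separate region of key space. Two tokens of each modality.
query = [1.0, 0.0]
keys = [[0.9, 0.1], [1.1, -0.1], [0.1, 1.0], [-0.1, 0.9]]
modalities = ["text", "text", "image", "image"]

mass = attention_mass_by_modality(query, keys, modalities)
print({m: round(v, 3) for m, v in mass.items()})
```

With equal token counts, the text keys still collect the majority of the attention mass, purely because of where they lie relative to the query.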

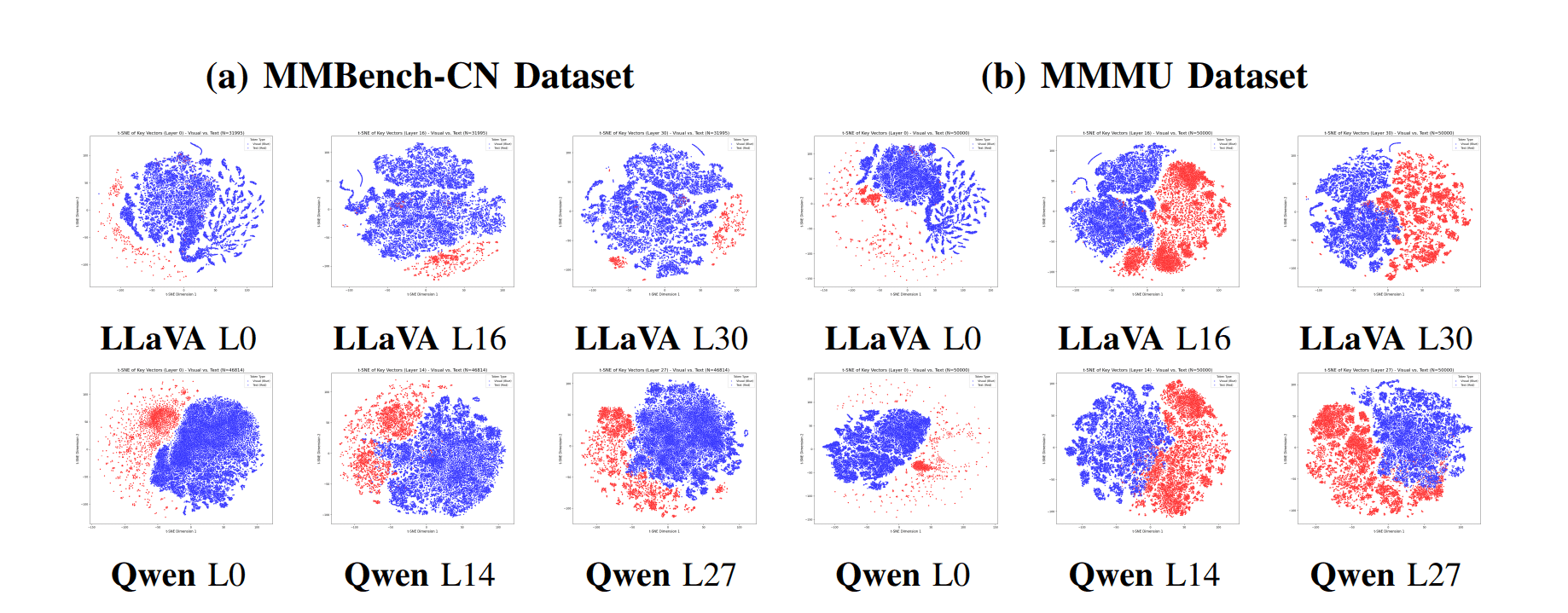

Image and Text Tokens Exhibit Key Space Separation

This research investigates the intrinsic modality bias present in Multimodal Large Language Models (MLLMs) like LLaVA and Qwen. The authors demonstrate that this bias isn’t due to data imbalances, but stems from a fundamental separation in how image and text tokens are encoded within the model’s key space. Essentially, the decoder, trained primarily on text, favours in-distribution text keys, leading to underutilization of visual information. Analysis reveals that image and text tokens occupy significantly different subspaces within the attention key space, with visual tokens forming compact clusters separate from the textual manifold. This bias is consistent across different benchmarks and is not easily explained by noise, suggesting that addressing this intrinsic key-space disparity is crucial for building more balanced and interpretable multimodal systems. This research shifts the focus from solely addressing data imbalances to tackling the architectural roots of the modality bias.
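One simple way to quantify the separation described above is to compare two quantities: the distance between the mean text key and the mean image key, and the typical spread of keys within each modality. The sketch below does this on synthetic vectors. The ratio statistic, the helper names and the data are illustrative assumptions, not the metric or data reported in the paper. A ratio well above 1 indicates distinct clusters rather than overlapping clouds. Splitting a single modality in half serves as a control.

```python
import math
import random

def mean_vector(vectors):
    # Component-wise mean (the centroid) of equal-length vectors.
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def mean_spread(vectors):
    # Average distance of each vector from its own centroid.
    c = mean_vector(vectors)
    return sum(euclidean(v, c) for v in vectors) / len(vectors)

def separation_ratio(keys_a, keys_b):
    """Hypothetical metric: centroid gap divided by average intra-group spread."""
    gap = euclidean(mean_vector(keys_a), mean_vector(keys_b))
    spread = (mean_spread(keys_a) + mean_spread(keys_b)) / 2
    return gap / spread

# Synthetic 8-D "keys" (assumed setup): image keys are shifted away from the
# text keys and drawn with a smaller standard deviation, i.e. a compact cluster.
rng = random.Random(0)
dim = 8
text_keys = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(200)]
image_keys = [[rng.gauss(3.0, 0.5) for _ in range(dim)] for _ in range(200)]

cross_modal = separation_ratio(text_keys, image_keys)
# Control: two halves of the same text sample should barely separate.
within_text = separation_ratio(text_keys[:100], text_keys[100:])
print(f"text vs image: {cross_modal:.2f}, text vs text: {within_text:.2f}")
```

In real models, the key vectors would come from a chosen attention layer of LLaVA or Qwen, which is outside the scope of this sketch.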

Textual Dominance in Multimodal Model Attention

Researchers have uncovered a fundamental architectural cause of text bias in multimodal large language models (MLLMs), showing that these models systematically prioritize textual information over visual evidence. The study reveals that visual and textual information occupy distinctly separate regions of the model’s attention key space, leading to a preference for text during processing. The scientists extracted key vectors from the LLaVA-1.5 and Qwen2.5-VL models, and dimensionality-reduction analysis confirmed that visual and textual keys are separated. Quantitative analysis revealed a statistically significant gap between the visual and textual key distributions, larger than the variation within either modality. By analyzing these internal representations, the research establishes that the text bias stems from an inherent misalignment within the attention key space rather than from external data factors, offering a new understanding of MLLM behavior.
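The article does not say which statistic underlies the "statistically significant gap". As one standard way to test whether two sets of vectors come from different distributions, the sketch below uses a different, well-known technique: a kernel two-sample test based on the maximum mean discrepancy (MMD), with a permutation p-value. Everything here is an assumption for illustration. The kernel width `gamma` is set by hand (a median-distance heuristic is a common alternative), the sample sizes are small, and the synthetic "text" and "image" keys stand in for real extracted keys.

```python
import math
import random

def rbf(a, b, gamma):
    # Gaussian (RBF) kernel k(a, b) = exp(-gamma * ||a - b||^2).
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))

def mmd2(kernel, idx_x, idx_y):
    """Biased estimate of squared MMD from a precomputed kernel matrix."""
    def mean_k(ia, ib):
        return sum(kernel[i][j] for i in ia for j in ib) / (len(ia) * len(ib))
    return mean_k(idx_x, idx_x) + mean_k(idx_y, idx_y) - 2 * mean_k(idx_x, idx_y)

def mmd_permutation_test(xs, ys, gamma=0.1, n_perm=200, seed=0):
    """Return (observed MMD^2, permutation p-value) for samples xs vs ys."""
    pooled = xs + ys
    n = len(pooled)
    kernel = [[rbf(pooled[i], pooled[j], gamma) for j in range(n)] for i in range(n)]
    idx = list(range(n))
    observed = mmd2(kernel, idx[: len(xs)], idx[len(xs):])
    rng = random.Random(seed)
    exceed = 0
    for _ in range(n_perm):
        # Under the null hypothesis, the modality labels are exchangeable.
        rng.shuffle(idx)
        if mmd2(kernel, idx[: len(xs)], idx[len(xs):]) >= observed:
            exceed += 1
    # Add-one correction keeps the p-value strictly positive.
    p_value = (exceed + 1) / (n_perm + 1)
    return observed, p_value

# Assumed synthetic 8-D keys: two text samples from the same distribution,
# and one compact, shifted image sample.
rng = random.Random(1)
dim = 8
text_a = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(40)]
text_b = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(40)]
image = [[rng.gauss(1.0, 0.5) for _ in range(dim)] for _ in range(40)]

cross_mmd, cross_p = mmd_permutation_test(text_a, image)
ctrl_mmd, ctrl_p = mmd_permutation_test(text_a, text_b)
print(f"text vs image: MMD^2={cross_mmd:.3f}, p={cross_p:.3f}")
print(f"text vs text:  MMD^2={ctrl_mmd:.3f}, p={ctrl_p:.3f}")
```

Comparing the cross-modal MMD against the text-versus-text control mirrors the article's point that the inter-modal gap exceeds intra-modal variation.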

Textual Bias Arises From Key Subspace Separation

This research demonstrates that a pronounced text bias exists within multimodal large language models, and it traces that bias not to external factors like data imbalance but to the internal architecture of these models. Scientists discovered that visual and textual information are encoded into distinct key subspaces within the attention mechanism, causing the models to systematically favour textual inputs during reasoning. The study examined LLaVA and Qwen2.5-VL, revealing that even a more complex architecture does not entirely eliminate this inherent disparity in key-space distribution. This work shifts remediation strategies away from simply balancing datasets and towards addressing the underlying architectural issue, offering a critical direction for developing truly balanced and interpretable multimodal systems.

👉 More information

🗞 Unveiling Intrinsic Text Bias in Multimodal Large Language Models through Attention Key-Space Analysis

🧠 ArXiv: https://arxiv.org/abs/2510.26721