Charts are a vital means of conveying complex information, yet current Large Vision-Language Models (LVLMs) still struggle to interpret them accurately, often attending to irrelevant chart regions. Ali Salamatian, Amirhossein Abaskohi, and Wan-Cyuan Fan, all from the University of British Columbia, together with colleagues, present ChartGaze, a new dataset that captures how people actually look at charts when answering questions about them. The team found a significant mismatch between where humans fixate and where LVLMs direct their attention, which hurts both accuracy and interpretability. To address this, they developed a method that refines the model’s attention, aligning it with human fixations and improving answer accuracy by up to 2.56 percentage points across several models. The results demonstrate the value of incorporating human visual behaviour into these AI systems.

Recognizing that existing datasets often rely on less precise proxies such as mouse-movement tracking, the researchers used high-precision eye-tracking equipment to record where participants focused their gaze while answering chart-based questions. This yields more accurate and consistent attention maps: previous work has shown that even extensive mouse-tracking data cannot match eye-tracking data collected from a much smaller group. The team collected 4,638 attention maps, creating a large-scale resource for analyzing and improving LVLM performance.
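Eye trackers typically report fixations as screen coordinates with durations, and a common way to turn them into an attention map is to place a Gaussian at each fixation and normalize the result. The sketch below assumes that approach; the `(x, y, duration)` tuple format and the `sigma` value are illustrative assumptions, not the exact procedure used to build ChartGaze.

```python
import numpy as np


def fixations_to_attention_map(fixations, height, width, sigma=25.0):
    """Convert (x, y, duration) fixations into a normalized attention map.

    Assumption: each fixation adds an isotropic Gaussian weighted by its
    duration; `sigma` (pixels) is an illustrative default, not a ChartGaze value.
    """
    ys, xs = np.mgrid[0:height, 0:width]  # row (y) and column (x) pixel coordinates
    heat = np.zeros((height, width), dtype=np.float64)
    for x, y, duration in fixations:
        heat += duration * np.exp(-((xs - x) ** 2 + (ys - y) ** 2) / (2 * sigma ** 2))
    total = heat.sum()
    # Normalize to a probability distribution over pixels (all zeros if no fixations)
    return heat / total if total > 0 else heat


# Example: two fixations on a 160x100 chart image, the second held longer
attn = fixations_to_attention_map([(40, 30, 0.25), (120, 60, 0.5)], height=100, width=160)
```

Weighting by duration lets longer fixations dominate the map, which matches the intuition that dwell time signals where a reader is extracting information.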

To build ChartGaze, the researchers used chart images from the VisText and ChartQA datasets, which contain real-world charts sourced from platforms such as Statista and Pew Research. For charts lacking questions, the team used GPT-4o to generate 3 to 5 question-answer pairs per chart caption, with instructions designed to ensure diverse phrasing, balance true/false answers, and enforce a strict JSON output format for automated processing. This grounds the dataset in detailed, semantically rich chart content. The researchers then recorded participants’ eye movements as they answered the generated questions, categorized the questions to characterize the dataset’s composition, and performed a detailed error analysis to ensure data quality. This methodology enables direct comparisons between human and model attention, revealing where LVLMs diverge from natural human gaze patterns and identifying opportunities to improve their reasoning.
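Because the generated pairs are consumed automatically, each GPT-4o response needs to be checked before it enters the dataset. The following is a minimal validator sketch. It assumes a hypothetical schema `{"qa_pairs": [{"question": ..., "answer": "yes" | "no"}]}` and treats "balanced" as yes and no counts differing by at most one; neither the schema nor that threshold comes from the authors’ prompt.

```python
import json


def parse_qa_pairs(raw: str, min_pairs: int = 3, max_pairs: int = 5) -> list:
    """Validate a model response against a hypothetical QA-pair JSON schema.

    Assumed schema (illustrative only):
        {"qa_pairs": [{"question": "...", "answer": "yes" | "no"}, ...]}
    Raises ValueError so malformed generations can be filtered or regenerated.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not valid JSON: {exc}") from exc
    pairs = data.get("qa_pairs") if isinstance(data, dict) else None
    if not isinstance(pairs, list) or not (min_pairs <= len(pairs) <= max_pairs):
        raise ValueError(f"expected {min_pairs}-{max_pairs} QA pairs")
    for pair in pairs:
        if not isinstance(pair, dict):
            raise ValueError("each QA pair must be a JSON object")
        question = pair.get("question")
        answer = str(pair.get("answer", "")).strip().lower()
        if not isinstance(question, str) or not question.strip():
            raise ValueError("each QA pair needs a non-empty question")
        if answer not in {"yes", "no"}:
            raise ValueError(f"answer must be yes/no, got {pair.get('answer')!r}")
        pair["answer"] = answer  # normalize casing, e.g. "Yes" -> "yes"
    n_yes = sum(p["answer"] == "yes" for p in pairs)
    # Assumed balance rule: yes and no counts may differ by at most one
    if abs(n_yes - (len(pairs) - n_yes)) > 1:
        raise ValueError("yes/no answers are not balanced")
    return pairs
```

Raising an error rather than silently dropping bad pairs makes it easy to re-prompt the model for a specific chart when its output fails validation.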

Human-aligned Attention Improves Chart Question Answering

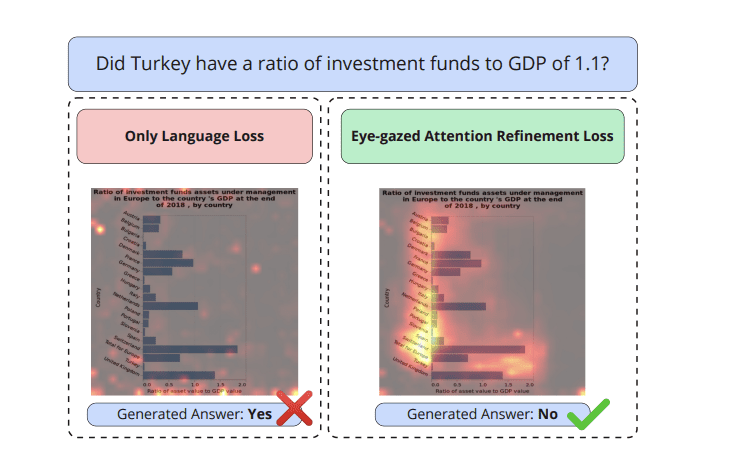

Researchers have made notable progress in chart question answering (CQA) by aligning the attention of large vision-language models (LVLMs) with human gaze patterns. The work introduces ChartGaze, a new eye-tracking dataset that records where participants focus while reasoning about charts, providing human reference data for model training. Through systematic analysis, the researchers observed that LVLMs frequently attend to irrelevant chart elements, which reduces interpretability and leads to inaccurate answers. To address this misalignment, the team developed a gaze-guided attention refinement technique that trains models to prioritize the regions where humans fixate during chart analysis.
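One common way to express gaze-guided refinement is to add an attention-alignment term to the usual language-modeling loss. The sketch below assumes the alignment term is the KL divergence from the human gaze distribution to the model’s attention over image patches, weighted by a hypothetical coefficient `lam`; `downsample_to_patches`, `gaze_alignment_loss`, and `total_loss` are illustrative names, not the authors’ code. It only computes loss values with NumPy; actual training would compute the same quantities in an autodiff framework so that gradients reach the model’s attention.

```python
import numpy as np


def downsample_to_patches(attn_map: np.ndarray, grid: int) -> np.ndarray:
    """Average-pool a pixel-level gaze map onto a grid x grid patch layout.

    Hypothetical helper; assumes height and width are divisible by `grid`.
    """
    h, w = attn_map.shape
    pooled = attn_map.reshape(grid, h // grid, grid, w // grid).mean(axis=(1, 3))
    return pooled / pooled.sum()


def gaze_alignment_loss(model_attn: np.ndarray, human_attn: np.ndarray, eps: float = 1e-8) -> float:
    """KL(human || model) over patches; 0 when the two distributions match."""
    p = human_attn.ravel() / human_attn.sum()  # human gaze distribution
    q = model_attn.ravel() / model_attn.sum()  # model attention distribution
    # Terms with p == 0 contribute nothing; eps guards against log(0)
    return float(np.sum(p * (np.log(p + eps) - np.log(q + eps))))


def total_loss(language_loss: float, model_attn: np.ndarray, human_attn: np.ndarray, lam: float = 0.1) -> float:
    """Language-modeling loss plus a weighted alignment term (lam is an assumed weight)."""
    return language_loss + lam * gaze_alignment_loss(model_attn, human_attn)
```

KL divergence is a natural fit when both maps are treated as probability distributions, since it penalizes the model most for ignoring regions humans fixate on; other objectives, such as mean-squared error between maps, would slot into the same structure.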

Empirical results show that this approach improves CQA accuracy by up to 2.56 percentage points compared with standard fine-tuning using the language-modeling loss alone. Models trained with gaze supervision also produce more interpretable attention maps that mirror human visual focus and lead to more reliable answers. This alignment with human gaze not only improves the accuracy of CQA systems but also increases their transparency and trustworthiness, which matters particularly in high-stakes domains such as finance and scientific research.
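To quantify how closely a model’s attention mirrors human focus, saliency research commonly uses metrics such as Pearson’s correlation coefficient (CC) and similarity (SIM, also called histogram intersection). The sketch below implements these two metrics, assuming both maps are non-negative arrays of the same shape; they are standard saliency metrics chosen for illustration, not necessarily the ones used in the ChartGaze evaluation.

```python
import numpy as np


def correlation_coefficient(model_attn: np.ndarray, human_attn: np.ndarray) -> float:
    """Pearson CC between two attention maps (1 = identical spatial pattern)."""
    a = model_attn.ravel().astype(np.float64)
    b = human_attn.ravel().astype(np.float64)
    # Standardize each map; the small constant avoids division by zero for flat maps
    a = (a - a.mean()) / (a.std() + 1e-12)
    b = (b - b.mean()) / (b.std() + 1e-12)
    return float(np.mean(a * b))


def similarity(model_attn: np.ndarray, human_attn: np.ndarray) -> float:
    """SIM: sum of element-wise minima of the two normalized maps (1 = identical)."""
    p = model_attn.ravel() / model_attn.sum()
    q = human_attn.ravel() / human_attn.sum()
    return float(np.minimum(p, q).sum())
```

Comparing these scores for a model’s attention before and after gaze-guided fine-tuning would give a simple numeric check of whether its focus has moved toward human fixations.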

Human Gaze Improves Chart Reasoning Models

This research introduces ChartGaze, a new dataset capturing human gaze patterns during chart reasoning, and demonstrates a method for refining the attention mechanisms of large vision-language models (LVLMs). By systematically comparing human and model attention, the team found that models often diverge from where humans focus when interpreting charts, impacting both accuracy and interpretability. The proposed gaze-guided attention refinement aligns model attention with human fixations, leading to improvements in answer accuracy across several model architectures. While the method proved effective for models not specifically pre-trained on chart-related tasks, gains were more limited for models already exhibiting strong performance in this area. The authors acknowledge the study’s focus on simple chart types and yes/no questions, which may limit the generalizability of the findings to more complex visualizations and open-ended queries. Future work could explore strategies for integrating attention refinement into instruction-tuned models and extending the research to a wider range of chart types and question formats to better understand how attention varies with task complexity.

👉 More information

🗞 ChartGaze: Enhancing Chart Understanding in LVLMs with Eye-Tracking Guided Attention Refinement

🧠 ArXiv: https://arxiv.org/abs/2509.13282