Yuri Alexeev, Marwa H. Farag, Taylor L. Patti, Mark E. Wolf, Alán Aspuru-Guzik, and colleagues review the integration of artificial intelligence (AI) with quantum computing (QC) in a paper published in Nature Communications on December 2, 2025. The work addresses the challenges of scaling QC by applying AI’s data-driven learning capabilities across the hardware and software stack. The authors argue that tightly integrating fault-tolerant quantum hardware with accelerated supercomputers is crucial for building accelerated quantum supercomputers capable of solving currently intractable problems. Getting there is difficult because the transition from noisy intermediate-scale quantum (NISQ) devices to fault-tolerant quantum computing (FTQC) presents significant obstacles.

Artificial Intelligence for Quantum Computing

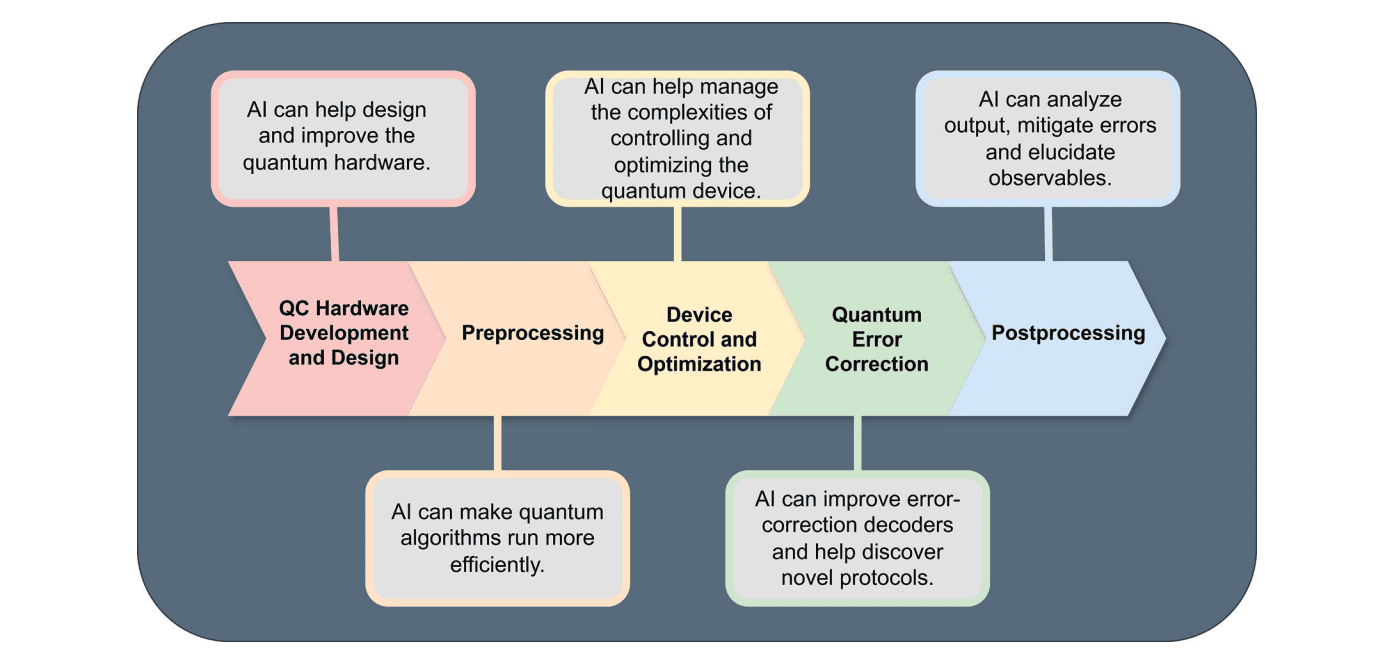

Artificial intelligence (AI) is increasingly relevant to the field of quantum computing (QC), offering potential solutions to scaling challenges. The source highlights that AI’s data-driven learning capabilities are well-suited to QC’s complex, high-dimensional mathematics. AI is being explored to accelerate research into designing and improving quantum hardware, and throughout the entire QC workflow—from preprocessing data to postprocessing results. This integration aims to build accelerated quantum supercomputers capable of solving currently intractable problems.

AI’s utility in QC stems from its ability to address limitations in both hardware and algorithms. While current qubit modalities suffer from hardware noise, hindering fault-tolerant computations, AI techniques can aid in developing more resourceful quantum error correction (QEC) codes and faster decoder algorithms. Furthermore, AI is being applied to algorithm development, potentially leading to more resource-efficient techniques and shortening the roadmap to practical quantum applications.

However, the source emphasizes critical limitations of using AI for QC. As a classical paradigm, AI cannot efficiently simulate quantum systems due to exponential scaling constraints. Tools like GroverGPT-2 demonstrate that AI can relocate, but not eliminate, classical resource bottlenecks when simulating quantum circuits. Therefore, AI’s role in QC is primarily focused on developing and operating quantum computers—not replacing them—and serving as a complementary tool for interpreting and reasoning about quantum processes.

AI’s Impact on Quantum Computer Development and Design

AI is increasingly impacting the development and design of quantum computers, addressing challenges in scaling these complex systems. The source highlights that many of the biggest scaling obstacles for quantum computing may ultimately be overcome with developments in artificial intelligence. Specifically, AI techniques are being applied to accelerate fundamental research into designing and improving quantum hardware, signifying a critical role in building useful quantum devices. This suggests AI is not just a complementary tool but a potential driver of progress in the field.

The inherent complexity of quantum mechanical systems makes them well-suited to the pattern recognition and scalability of AI techniques. While AI cannot efficiently simulate quantum systems due to exponential scaling constraints, it offers significant utility in interpreting, approximating, and reasoning about quantum processes. Foundational AI models, like transformer models popularized by OpenAI’s GPT models, are emerging as effective tools for leveraging accelerated computing for quantum computing applications – spanning from hardware design to algorithm development.

AI’s role extends throughout the entire quantum computing workflow, from preprocessing to postprocessing. The source details applications in areas like device control, optimization, and quantum error correction. Though current AI applications focus on developing and operating quantum computers (“AI for quantum”), the potential for quantum computers to enhance AI techniques (“quantum for AI”) is a separate area of research not covered in this review.

AI for Preprocessing

AI is increasingly being applied to challenges across the quantum computing (QC) hardware and software stack. The source highlights that many of QC’s scaling obstacles may ultimately be overcome through developments in AI. This review focuses specifically on “AI for quantum” – the use of AI techniques to develop and operate useful quantum computers, rather than the potential for quantum computers to enhance AI. The goal is to foster synergy between these two advanced fields, recognizing the potential for AI to accelerate progress in QC.

The QC workflow—encompassing preprocessing, tuning, control, quantum error correction (QEC), and postprocessing—is where AI is showing particular promise. AI techniques are being explored to accelerate fundamental research into designing and improving quantum hardware. The review examines applications of state-of-the-art AI throughout this workflow, noting that AI can impact various algorithmic subroutines across many tasks.

Specifically, the review organizes its findings according to the causal sequence of tasks in operating a quantum computer, with a dedicated section focused on “AI for preprocessing.” While acknowledging limitations – AI cannot efficiently simulate quantum systems in the general case due to exponential scaling – the source positions AI as a complementary tool for interpreting, approximating, and reasoning about quantum processes, rather than a direct substitute for quantum hardware.

AI for Device Control and Optimization

AI is increasingly relevant to advancements in quantum computing (QC), offering potential solutions to scaling challenges. The source highlights a growing synergy between AI and QC, particularly in accelerating research into designing and improving quantum hardware. AI’s high-dimensional pattern recognition capabilities and scalability are well-suited to the complexities of quantum mechanical systems, driving progress across the QC hardware and software stack. This review focuses specifically on “AI for quantum,” meaning AI’s role in developing QC, not QC enhancing AI.

The source details how AI plays a role throughout the QC workflow, specifically mentioning “AI for device control and optimization.” This signifies AI’s application in tuning and refining quantum systems. Further along the workflow, AI assists in quantum error correction (QEC), a critical step towards fault-tolerant quantum computing, requiring resourceful QEC codes, faster decoder algorithms, and carefully designed qubit architectures. AI’s role spans algorithm development, impacting various algorithmic subroutines throughout the entire process.

While powerful, AI’s limitations within this context are noted. As a classical paradigm, AI cannot efficiently simulate quantum systems generally due to exponential scaling constraints. For example, GroverGPT-2, which uses large language models to simulate Grover’s algorithm, is limited by context length and struggles to generalize to larger circuits. Therefore, AI serves as a complementary tool for interpreting and approximating quantum processes, rather than a direct replacement for quantum hardware itself.

AI for Quantum Error Correction

Artificial intelligence is being explored as a tool to address challenges in quantum computing, particularly in scaling up to fault-tolerant quantum computers. The source highlights that many of quantum computing’s biggest scaling obstacles may ultimately be resolved through developments in AI. This review focuses on “AI for quantum” – using AI to develop and operate quantum computers – and distinguishes it from the concept of quantum computers enhancing AI (“quantum for AI”). AI’s potential stems from its ability to handle the complex, high-dimensional mathematics inherent in quantum systems.

AI is proving valuable across the entire quantum computing workflow, from hardware design to post-processing of results. Specific areas of impact include preprocessing, device control and optimization, and crucially, quantum error correction (QEC). The source details that resourceful QEC codes, faster decoder algorithms, and carefully designed qubit architectures are all areas where AI can contribute. AI’s use in algorithm development is also interwoven throughout these tasks, suggesting broad applicability within QC.
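To make the decoding task concrete, here is a minimal sketch of a textbook lookup-table decoder for the 3-bit repetition code under bit-flip errors. It is not an AI decoder and not a method taken from the review; the names `syndrome`, `LOOKUP`, and `decode` are hypothetical. It only illustrates the syndrome-to-correction mapping that faster, learned decoders aim to compute for much larger codes.

```python
def syndrome(bits):
    """Return the two parity checks (bit0 xor bit1, bit1 xor bit2)."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


# Syndrome -> index of the single bit most likely to have flipped.
# None means no correction is applied.
LOOKUP = {
    (0, 0): None,
    (1, 0): 0,
    (1, 1): 1,
    (0, 1): 2,
}


def decode(bits):
    """Apply the single-bit correction suggested by the syndrome."""
    corrected = list(bits)
    flip = LOOKUP[syndrome(bits)]
    if flip is not None:
        corrected[flip] ^= 1
    return corrected


if __name__ == "__main__":
    # One flipped bit is repaired; two flipped bits are "repaired" to the
    # wrong codeword, which is a logical error.
    print(decode([0, 1, 0]))
    print(decode([1, 1, 0]))
```

A table like this grows quickly with code size, which is one reason decoder speed and scalability become a bottleneck for larger codes.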

Despite its promise, the source emphasizes AI’s limitations in the context of quantum computing. As a classical paradigm, AI cannot efficiently simulate quantum systems due to exponential scaling constraints. Tools like GroverGPT-2 demonstrate this, facing limits in circuit size and generalizability. Therefore, AI serves best as a complementary tool for interpreting and approximating quantum processes, rather than directly replacing quantum hardware.

AI for Postprocessing

The review details how artificial intelligence (AI) is being applied throughout the quantum computing (QC) workflow, from initial development to final results. Specifically, AI techniques are addressing challenges across hardware and software, in areas such as device design and error correction. The authors emphasize that the review covers the use of AI to develop and operate quantum computers, referred to as “AI for quantum,” and does not cover the reverse prospect of quantum computers enhancing AI.

AI is utilized in several stages of QC, including “AI for preprocessing,” “AI for device control and optimization,” “AI for quantum error correction,” and “AI for postprocessing.” This demonstrates a broad integration of AI across the entire QC process. While acknowledging limitations – such as AI’s inability to efficiently simulate quantum systems due to exponential scaling – the review highlights AI’s potential for interpreting, approximating, and reasoning about quantum processes.

Despite the promise of AI, the source clarifies it doesn’t replace quantum hardware. For example, GroverGPT-2, which simulates Grover’s algorithm using large language models, is limited by the maximum context length of the model, restricting its ability to handle larger circuits. The review positions AI as a complementary tool, assisting with specific tasks within the QC workflow, rather than a full substitute for quantum computation itself.

AI in Quantum Algorithm Development

Artificial intelligence is increasingly recognized for its potential to address challenges within quantum computing (QC), particularly as QC transitions from noisy intermediate-scale quantum (NISQ) devices to fault-tolerant quantum computing (FTQC). The source highlights that many of QC’s scaling obstacles may ultimately be resolved through developments in AI. This integration aims to build accelerated quantum supercomputers – heterogeneous architectures capable of solving currently intractable problems with significant scientific and economic impact, like chemical simulation and optimization.

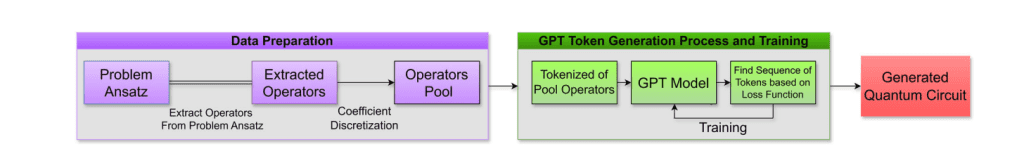

The source details how foundational AI models, like transformer models popularized by OpenAI’s GPT, are emerging as effective tools for leveraging accelerated computing for QC. These models demonstrate utility in a wide range of tasks, from biomedical engineering to materials science, and are now being applied to QC hardware research and algorithm development. AI’s high-dimensional pattern recognition and inherent scalability make it well-suited to the complexities of quantum mechanical systems.

However, the source emphasizes a critical limitation: AI, as a classical paradigm, cannot efficiently simulate quantum systems generally due to exponential scaling constraints. While AI can assist with interpreting, approximating, and reasoning about quantum processes, it cannot replace quantum hardware for simulation. An example is GroverGPT-2, which faces limitations in circuit size due to the context length of the large language model used, demonstrating that resource bottlenecks may simply shift rather than disappear.

Limitations of AI in Quantum Simulation

Despite the promise of AI in advancing quantum computing (QC), limitations exist due to AI being a fundamentally classical paradigm. The source highlights that AI cannot efficiently simulate quantum systems in the general case, citing exponential scaling constraints imposed by quantum mechanics. Classical simulation of quantum circuits faces exponential growth in computational cost and memory, restricting the size of systems AI can model and impacting generalizability to larger problems, as seen with GroverGPT-2.
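The exponential memory growth can be made concrete with a short calculation. The sketch below assumes a dense state-vector representation with one 16-byte complex amplitude per basis state, which is one common classical simulation approach but not the only one. The function names are hypothetical and the figures are illustrative, not taken from the review.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed for a dense n-qubit state vector.

    Assumes 2**n amplitudes, each stored as a 16-byte complex number.
    """
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    return (2 ** num_qubits) * bytes_per_amplitude


def human_readable(num_bytes: int) -> str:
    """Format a byte count using binary units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(num_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.1f} {unit}"
        value /= 1024


if __name__ == "__main__":
    for n in (10, 20, 30, 40, 50):
        print(n, human_readable(statevector_bytes(n)))
```

Under these assumptions, 30 qubits need 16 GiB and 50 qubits need 16 PiB: each added qubit doubles the requirement.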

The example of GroverGPT-2 illustrates a specific limitation: its ability to simulate Grover’s algorithm is constrained by the maximum context length of the large language model (LLM). This means simulating larger quantum circuits becomes infeasible. Furthermore, the model’s performance deteriorates when applied to problem sizes beyond its training data, indicating that resource bottlenecks are simply relocated rather than removed, contributing to scaling costs and deployment hurdles.
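For reference, Grover’s algorithm itself can be simulated classically with a plain state vector. The following is a minimal sketch in pure Python with a single marked item; it is not how GroverGPT-2 works, and the function name is hypothetical. Its point is that the amplitude list has 2**n entries, so this direct approach hits the same exponential wall from a different direction.

```python
import math


def grover_success_probability(num_qubits: int, marked: int) -> float:
    """Simulate Grover search for one marked basis state.

    Returns the probability of measuring the marked state after the
    standard floor(pi/4 * sqrt(N)) iterations, where N = 2**num_qubits.
    """
    size = 2 ** num_qubits
    if not 0 <= marked < size:
        raise ValueError("marked index out of range")

    # Start in the uniform superposition over all basis states.
    amplitudes = [1 / math.sqrt(size)] * size
    iterations = math.floor(math.pi / 4 * math.sqrt(size))

    for _ in range(iterations):
        # Oracle: flip the sign of the marked amplitude.
        amplitudes[marked] = -amplitudes[marked]
        # Diffusion: reflect every amplitude about the mean.
        mean = sum(amplitudes) / size
        amplitudes = [2 * mean - a for a in amplitudes]

    return amplitudes[marked] ** 2


if __name__ == "__main__":
    for n in (2, 3, 10):
        print(n, grover_success_probability(n, marked=1))
```

With two qubits a single iteration finds the marked item with certainty. Larger instances succeed with high probability but require a list that doubles in length with every added qubit.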

The review clarifies its focus is on “AI for quantum” – using AI to develop and operate quantum computers – and not “quantum for AI,” which explores using quantum computers to enhance AI techniques. The source emphasizes that AI serves as a complementary tool for interpreting, approximating, and reasoning about quantum processes, rather than a direct substitute for quantum hardware itself, acknowledging a fundamental limit to its capabilities in full quantum simulation.

Scaling Obstacles in AI for Quantum Computing

Scaling obstacles in quantum computing (QC) may be addressed by advances in artificial intelligence (AI). The source highlights that overcoming many of QC’s biggest scaling challenges may ultimately depend on developments in AI, noting that the inherent nonlinear complexity of quantum mechanical systems makes them well-suited to AI’s high-dimensional pattern recognition and scalability. AI is emerging as an effective way to leverage accelerated computing for QC, with impact on both the development of quantum hardware and the workflows needed for useful devices.

Despite AI’s potential, limitations exist when applying it to quantum computing. The source emphasizes AI, as a classical paradigm, cannot efficiently simulate quantum systems due to exponential scaling constraints. For instance, GroverGPT-2, while using large language models to simulate Grover’s algorithm, is limited by the maximum context length of the model, impacting its ability to simulate larger circuits and generalize beyond training data.

This review focuses on “AI for quantum” – the impact of AI on developing and operating quantum computers, rather than the reverse (“quantum for AI”). The analysis examines how AI aids the entire QC workflow, starting with hardware design and extending through preprocessing, device control, quantum error correction, and postprocessing, with algorithmic improvements interwoven throughout these stages. The source organizes its discussion according to the causal sequence of tasks undertaken when operating a quantum computer.

Generative AI and Quantum Computing

Generative AI paradigms are emerging as effective tools for leveraging accelerated computing for quantum computing (QC), building on advancements in artificial intelligence (AI). Transformer models, popularized by OpenAI’s GPT models, demonstrate utility in technical fields like biomedical engineering and materials science, and are now being applied to QC challenges. This review focuses on “AI for quantum,” exploring how AI techniques advance QC hardware and software—from device design to applications—and aims to foster synergy between the two fields.

While promising, AI faces limitations when applied to QC. As a classical paradigm, AI cannot efficiently simulate quantum systems due to exponential scaling constraints. For example, GroverGPT-2, which uses large language models to simulate Grover’s algorithm, is limited by the maximum context length of the model, hindering the simulation of larger circuits and impacting generalizability. AI serves as a complementary tool for interpreting quantum processes, not a direct replacement for quantum hardware.

This review examines AI’s role across the QC workflow—from development and design, through preprocessing and error correction, to postprocessing—and its impact on algorithmic subroutines. The focus is on how AI accelerates research into improving quantum hardware and developing useful devices, recognizing that AI’s utility lies in complementing, not replacing, quantum computation itself. It specifically addresses “AI for quantum”, excluding discussion of “quantum for AI”.

Transformer Models in Quantum Applications

Transformer models are emerging as effective tools for leveraging accelerated computing in quantum computing (QC) research. Characterized by broad training data and adaptability, these models – particularly OpenAI’s generative pre-trained transformer (GPT) – demonstrate utility in technical fields like biomedical engineering and materials science. The source highlights a goal of applying such models to address challenges facing QC development, noting their potential to accelerate research into both hardware and software components needed for useful quantum computers.

The inherent complexity of quantum mechanical systems makes them well-suited to the high-dimensional pattern recognition capabilities and scalability of transformer models and other AI techniques. While AI cannot efficiently simulate quantum systems due to exponential scaling limitations, it offers significant advantages in interpreting, approximating, and reasoning about quantum processes. GroverGPT-2, which uses large language models to simulate Grover’s algorithm, illustrates the trade-off: the circuits it can simulate are limited by the model’s maximum context length, and it struggles to generalize to larger problems.

This review specifically focuses on “AI for quantum” – the impact of AI techniques on developing and operating quantum computers. It does not cover the potential of quantum computers enhancing AI techniques (“quantum for AI”). The review examines how AI is applied throughout the quantum computing workflow, spanning device design, preprocessing, control, error correction, and postprocessing, with AI impacting various algorithmic subroutines throughout each stage.

High-Performance Computing and Quantum Computing

Artificial intelligence is increasingly important for advancing quantum computing (QC), addressing challenges across hardware and software development. The source highlights that QC’s “high-dimensional mathematics” makes it well-suited for AI’s data-driven learning, potentially solving key scaling problems. AI is being applied to various stages, from designing quantum hardware to optimizing device control and error correction, ultimately aiming to build accelerated quantum supercomputers capable of tackling complex problems in science and industry.

A key application of AI within QC is accelerating the development of fault-tolerant quantum computing (FTQC). Current qubit modalities suffer from hardware noise, which prevents operation below the error threshold that fault tolerance requires. AI is helping to develop more resourceful quantum error correction (QEC) codes and faster decoder algorithms, and is also assisting in the design of qubit architectures. Together, these efforts contribute to the advances needed for scalable and useful quantum applications despite current limitations.

Despite the promise of AI, the source emphasizes its limitations in directly simulating quantum systems. Classical AI encounters exponential scaling constraints, hindering the simulation of large quantum systems. Tools like GroverGPT-2, which uses large language models, are limited by context length and generalization ability. Therefore, AI’s role is best suited for complementing quantum hardware – assisting in interpretation, approximation, and reasoning about quantum processes, rather than replacing it entirely.

Quantum Supercomputers: Heterogeneous Architecture

The development of useful quantum computers relies on integrating fault-tolerant quantum hardware with accelerated supercomputers, forming a heterogeneous architecture capable of solving currently intractable problems. This architecture is crucial for realizing the potential impact of quantum computing across science and industry. Delivering on this promise demands advancements across the entire quantum computing stack, from hardware design to application development, and AI is increasingly recognized as a key enabler of progress in both areas.

AI is being explored as a breakthrough tool for QC, particularly for accelerating fundamental research into designing and improving quantum hardware. The source details how AI techniques can address challenges across the hardware and software stack, spanning tasks from device design to applications. This includes roles in preprocessing, tuning, control, optimization, quantum error correction (QEC), and postprocessing, with AI impacting various algorithmic subroutines throughout the QC workflow.

Despite the promise of AI, the source emphasizes it’s a classical paradigm and cannot efficiently simulate quantum systems due to exponential scaling constraints. While AI can aid in interpreting, approximating, and reasoning about quantum processes, it’s not a substitute for quantum hardware. Classical simulations, like GroverGPT-2, face limitations in generalization and scaling, relocating resource bottlenecks rather than removing them, highlighting AI’s role as a complementary tool, not a replacement, for quantum computation.

NISQ Devices to Fault-Tolerant Quantum Computing

Transitioning from noisy intermediate-scale quantum (NISQ) devices to fault-tolerant quantum computing (FTQC) presents significant challenges. While recent quantum error correction (QEC) demonstrations have been performed, all qubit modalities currently suffer from hardware noise, preventing operation below the threshold needed for fault tolerance. FTQC demands resourceful QEC codes, faster decoder algorithms, and carefully designed qubit architectures – areas where advancements are crucial for scaling quantum systems and realizing useful computations.
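The idea of a threshold can be illustrated with a toy model. The sketch below computes the logical error rate of an odd-distance repetition code under independent bit flips with majority-vote decoding. This is a classical simplification chosen for clarity, and the function name is hypothetical. Its 50% crossover is a property of this toy model only and says nothing about the thresholds of real quantum codes or hardware.

```python
from math import comb


def logical_error_rate(p: float, distance: int) -> float:
    """Probability that majority vote fails for a repetition code.

    p is the independent bit-flip probability per bit; distance is the
    (odd) number of bits. Decoding fails when more than half flip.
    """
    if distance < 1 or distance % 2 == 0:
        raise ValueError("distance must be a positive odd integer")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    correctable = distance // 2
    return sum(
        comb(distance, k) * p ** k * (1 - p) ** (distance - k)
        for k in range(correctable + 1, distance + 1)
    )


if __name__ == "__main__":
    for p in (0.1, 0.6):
        rates = [logical_error_rate(p, d) for d in (3, 5, 7)]
        print(p, rates)
```

Below the crossover, increasing the distance suppresses logical errors; above it, adding more bits makes things worse. That qualitative behavior is why below-threshold operation is a prerequisite for scaling.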

Artificial intelligence (AI) is emerging as a potential breakthrough tool for QC, particularly in accelerating research into the design and improvement of quantum hardware. The inherent complexity of quantum mechanical systems makes them well-suited to the high-dimensional pattern recognition capabilities and scalability of AI techniques. AI is being applied across the QC workflow, from preprocessing and device control to QEC and postprocessing, with the goal of shortening the roadmap to practical quantum applications.

Despite AI’s promise, it’s critical to recognize its limitations. As a classical paradigm, AI cannot efficiently simulate quantum systems in the general case due to exponential scaling constraints. Classical simulations suffer from exponential growth in computational cost and memory, limiting the size of quantum systems AI can handle. Therefore, AI serves as a complementary tool for interpreting and approximating quantum processes, rather than replacing quantum hardware itself.

AI’s Role in the Quantum Computing Workflow

Artificial intelligence is increasingly relevant to overcoming challenges in quantum computing (QC), potentially impacting both hardware and software development. The source highlights that QC’s complex, high-dimensional mathematics makes it a strong candidate for AI’s data-driven learning capabilities, and suggests that scaling QC may ultimately depend on advances in AI. This review focuses on “AI for quantum,” meaning the use of AI to develop and operate quantum computers, as distinct from quantum computers enhancing AI itself.

AI is being applied throughout the quantum computing workflow, from initial device design and hardware improvement to post-processing of results. Specifically, the review organizes applications based on a “causal sequence of tasks” including preprocessing, device control & optimization, quantum error correction (QEC), and post-processing. AI also impacts algorithm development throughout these stages, with applications spanning many tasks across the workflow, aiming to accelerate progress toward useful quantum applications.

Despite its promise, AI has limitations when applied to quantum systems. As a classical paradigm, AI cannot efficiently simulate quantum systems in the general case due to exponential scaling constraints. For example, GroverGPT-2, which uses large language models to simulate Grover’s algorithm, is limited by the maximum context length of the model. While AI can help interpret, approximate, and reason about quantum processes, it’s not a substitute for quantum hardware itself, and classical resource bottlenecks can simply shift rather than disappear.