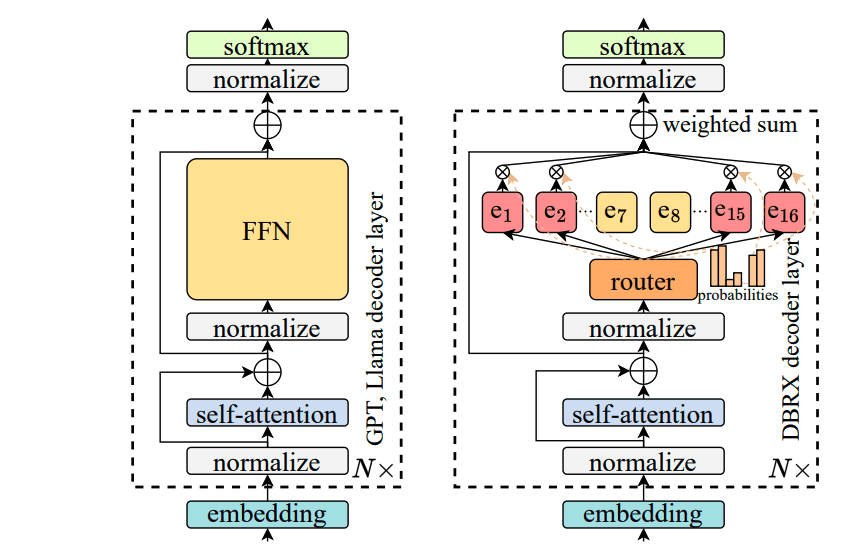

The escalating demand for personalised artificial intelligence services necessitates efficient and cost-effective deployment of large language models (LLMs), a challenge particularly acute for private systems intended for individual or small-group use. Researchers are now investigating novel architectures and hardware configurations to address these limitations, moving beyond reliance on large-scale, centralised computing infrastructure. A collaborative team comprising Mu-Chi Chen and Shih-Hao Hung from National Taiwan University, Po-Hsuan Huang and Chia-Heng Tu from National Cheng Kung University, and Xiangrui Ke and Chun Jason Xue from Mohamed bin Zayed University of Artificial Intelligence detail their investigation into utilising a cluster of Apple Mac Studios equipped with M2 Ultra chips to host and accelerate the pre-trained DBRX model, a large language model employing a Mixture-of-Experts (MoE) architecture. Their work, entitled “Towards Building Private LLMs: Exploring Multi-Node Expert Parallelism on Apple Silicon for Mixture-of-Experts Large Language Model”, explores the performance characteristics of this configuration, identifying bottlenecks related to network latency and software memory management, and proposes optimisation strategies to improve cost-efficiency relative to conventional high-performance computing systems.

Mac Studio clusters offer a viable platform for large language model (LLM) inference; however, network infrastructure often limits performance, particularly when deploying Mixture-of-Experts (MoE) architectures, such as DBRX. Performance analysis reveals that communication time between nodes often equals, and sometimes surpasses, the computational time required by individual experts. This highlights the critical importance of minimising network latency for optimal performance, challenging the conventional focus on bandwidth alone and suggesting that prioritising low latency unlocks the full potential of distributed LLM inference.
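The point about latency versus bandwidth can be illustrated with a minimal sketch. It is not the paper's performance model: it assumes the common first-order approximation that sending one message costs a fixed latency plus the payload size divided by the bandwidth. The 16 KiB message size and the 100 µs and 10 µs latencies are hypothetical values chosen only for illustration.

```python
def transfer_time_s(message_bytes: float, latency_s: float, bandwidth_bps: float) -> float:
    """First-order cost of one message: fixed latency plus serialisation time."""
    return latency_s + (message_bytes * 8) / bandwidth_bps


# Hypothetical message size: a small per-token activation payload.
MESSAGE_BYTES = 16 * 1024

# Hypothetical links: same latency with more bandwidth, versus same bandwidth with less latency.
baseline_link = transfer_time_s(MESSAGE_BYTES, latency_s=100e-6, bandwidth_bps=10e9)
high_bandwidth_link = transfer_time_s(MESSAGE_BYTES, latency_s=100e-6, bandwidth_bps=200e9)
low_latency_link = transfer_time_s(MESSAGE_BYTES, latency_s=10e-6, bandwidth_bps=10e9)

for name, seconds in [
    ("baseline (10 Gbps, 100 us)", baseline_link),
    ("20x bandwidth (200 Gbps, 100 us)", high_bandwidth_link),
    ("10x lower latency (10 Gbps, 10 us)", low_latency_link),
]:
    print(f"{name}: {seconds * 1e6:.1f} us per message")
```

Under these assumed numbers, a twentyfold bandwidth increase shortens each message by only about a tenth, whereas a tenfold latency reduction cuts it to roughly a fifth. Small, frequent messages are dominated by the fixed latency term.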

The study validates a performance model (Equation 1 in the paper) that accurately estimates how network upgrades affect overall system performance. Because the model forecasts the gains achievable through faster networking, system designers can use it to judge whether a given network investment is worthwhile, weighing cost against the expected performance of their LLM infrastructure.

The researchers examined how network performance, memory management, and model parallelism strategies interact on Mac Studio clusters. Model parallelism is a technique in which different parts of a large model are distributed across multiple processing units to accelerate computation. They found that communication overhead has a significant impact on overall system performance, especially when scaling to larger clusters, which makes optimising the network infrastructure crucial for distributed LLM inference.
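For an MoE model, one natural form of model parallelism is expert parallelism, in which each node hosts a subset of the experts. The sketch below illustrates the idea only and is not the authors' implementation. It assumes a contiguous split of experts across two nodes. The figures of 16 experts with 4 active per token follow Databricks' public description of DBRX and should be treated as assumptions here. The function names and the example routing decision are hypothetical.

```python
from collections import defaultdict

NUM_EXPERTS = 16  # assumed: DBRX expert count per public model description
TOP_K = 4         # assumed: experts activated per token
NUM_NODES = 2     # two-node cluster, as in the study


def expert_to_node(expert_id: int, num_experts: int = NUM_EXPERTS, num_nodes: int = NUM_NODES) -> int:
    """Map an expert to the node hosting it, using a contiguous split."""
    experts_per_node = num_experts // num_nodes
    return expert_id // experts_per_node


def group_by_node(selected_experts: list[int]) -> dict[int, list[int]]:
    """Group the router's selected experts by the node that must run them."""
    groups: dict[int, list[int]] = defaultdict(list)
    for expert_id in selected_experts:
        groups[expert_to_node(expert_id)].append(expert_id)
    return dict(groups)


# Hypothetical routing decision for one token (TOP_K expert ids).
selected = [1, 6, 9, 14]
placement = group_by_node(selected)
print(placement)  # {0: [1, 6], 1: [9, 14]}
```

Whenever the selected experts span more than one node, the token's activations must cross the network and the expert outputs must be gathered again. This is the communication step whose cost the study compares against expert computation time.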

The team evaluated several networking technologies: a 10 Gbps baseline connection, 25 Gbps RDMA over Converged Ethernet version 2 (RoCEv2), and 200 Gbps InfiniBand. Token generation throughput, the rate at which the model produces output tokens, is the key metric. Moving from the 10 Gbps network to 25 Gbps RoCEv2 raises throughput to 16.3 tokens per second for a modest 5% cost increase. 200 Gbps InfiniBand achieves the same throughput, but its 20% cost increase makes it less economically viable.
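The cost comparison can be made concrete with a small calculation. This sketch assumes that both percentages are increases over the same baseline system cost, normalised here to 1.0, and it defines cost-efficiency simply as throughput divided by relative cost. That definition is an illustrative assumption, not necessarily the metric used in the paper.

```python
def cost_efficiency(tokens_per_second: float, relative_cost: float) -> float:
    """Throughput per unit of relative system cost (baseline cost = 1.0)."""
    return tokens_per_second / relative_cost


THROUGHPUT = 16.3  # tokens per second, reported for both upgraded networks

# Assumed: cost increases are relative to the same 10 Gbps baseline system.
roce_efficiency = cost_efficiency(THROUGHPUT, relative_cost=1.05)        # +5% cost
infiniband_efficiency = cost_efficiency(THROUGHPUT, relative_cost=1.20)  # +20% cost

# With equal throughput, the advantage reduces to the ratio of costs: 1.20 / 1.05.
roce_advantage = roce_efficiency / infiniband_efficiency

print(f"RoCEv2:     {roce_efficiency:.2f} tokens/s per unit cost")
print(f"InfiniBand: {infiniband_efficiency:.2f} tokens/s per unit cost")
print(f"RoCEv2 advantage: {roce_advantage:.3f}x")
```

Because throughput is identical, the comparison depends only on cost. Under these assumptions the 25 Gbps RoCEv2 configuration delivers about 14% more throughput per unit of cost than 200 Gbps InfiniBand.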

Further research should investigate the scalability of these findings to larger clusters with more nodes, as the current study focuses on a two-node configuration. Understanding how communication overhead scales with increasing node count is crucial for designing larger, more powerful LLM systems capable of handling complex workloads and delivering real-time performance. Investigating alternative networking technologies, such as higher-speed Ethernet standards or advanced interconnect topologies, could also reveal further opportunities for performance improvement and unlock even greater scalability.

Optimising the Apple software stack’s memory management logic, which currently introduces significant overhead, represents another promising avenue for future work. Reducing this overhead would further improve the cost-efficiency of the Mac Studio cluster, potentially beyond that of far more expensive AI supercomputers equipped with H100 GPUs. More efficient memory allocation and data transfer within the Apple ecosystem could yield substantial benefits on the same hardware.

Developing holistic optimisation strategies calls for a comprehensive analysis of how network performance, memory management, and model parallelism strategies interact, since their combined effect determines the overall performance and cost-efficiency of private LLM systems. Such work would contribute to more accessible and sustainable AI infrastructure, enabling organisations to deploy and scale LLMs without incurring exorbitant costs.

👉 More information

🗞 Towards Building Private LLMs: Exploring Multi-Node Expert Parallelism on Apple Silicon for Mixture-of-Experts Large Language Model

🧠 DOI: https://doi.org/10.48550/arXiv.2506.23635