MLPerf BERT, a widely used machine learning benchmark, has been ported to run on AMD Instinct MI210 and MI250 accelerators. The work, led by researchers from the University of California San Diego and Advanced Micro Devices Inc., demonstrates that machine learning models can run efficiently on AMD GPUs, with potential applications in high-performance computing and artificial intelligence. This article looks at what was ported, how it was done, and why it matters.

Can Machine Learning Models Run on AMD GPUs?

The answer is yes. The UC San Diego and AMD team ported MLPerf BERT, a popular machine learning benchmark, to run on AMD Instinct MI210 and MI250 accelerators.

Porting MLPerf BERT to AMD GPUs was not straightforward. The challenges included optimizing the model for the hardware architecture of the AMD accelerators. The team addressed them by modifying the code to take advantage of the accelerators’ features and by fine-tuning the model’s hyperparameters.

The ported code was submitted to the MLPerf community codebase. The result is significant because it demonstrates that machine learning models can run efficiently on AMD GPUs, which could matter for any industry or application that depends on accelerated machine learning.

What is MLPerf?

MLPerf (Machine Learning Performance) is a benchmark suite developed by MLCommons, an organization dedicated to promoting the development of machine learning technologies. It provides a standardized way to measure the performance of machine learning models on different hardware platforms, so researchers and developers can compare models and hardware configurations on equal terms.
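To make "a standardized way to measure performance" concrete, here is a minimal Python sketch of the kind of measurement a benchmark harness performs: run a fixed set of queries, record per-query latency, and report throughput and tail latency. It is not the MLPerf tooling itself (official submissions use MLCommons' own load generator and rules). The names `run_benchmark`, `summarize`, and `percentile` are hypothetical, and `model_fn` stands in for whatever inference call is being measured.

```python
import statistics
import time


def percentile(values, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    # Integer ceiling of pct * n / 100, so no floating-point rounding is involved.
    rank = max(1, (pct * len(ordered) + 99) // 100)
    return ordered[rank - 1]


def summarize(latencies, total_seconds):
    """Reduce raw per-query latencies (in seconds) to a few summary metrics."""
    if not latencies:
        raise ValueError("latencies must not be empty")
    throughput = len(latencies) / total_seconds if total_seconds > 0 else float("inf")
    return {
        "samples": len(latencies),
        "throughput_per_s": throughput,
        "mean_latency_s": statistics.fmean(latencies),
        "p90_latency_s": percentile(latencies, 90),
        "p99_latency_s": percentile(latencies, 99),
    }


def run_benchmark(model_fn, queries, warmup=0):
    """Time model_fn on every query, after an optional untimed warm-up."""
    for query in queries[:warmup]:
        model_fn(query)  # warm-up calls are not timed
    latencies = []
    start = time.perf_counter()
    for query in queries:
        t0 = time.perf_counter()
        model_fn(query)
        latencies.append(time.perf_counter() - t0)
    total = time.perf_counter() - start
    return summarize(latencies, total)


if __name__ == "__main__":
    # Placeholder workload: a real run would call the model under test here.
    report = run_benchmark(lambda text: text.upper(), ["a question"] * 100, warmup=5)
    print(report)
```

Running the same harness with the same queries on two hardware platforms gives numbers that can be compared directly, which is the core idea behind a standardized benchmark.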

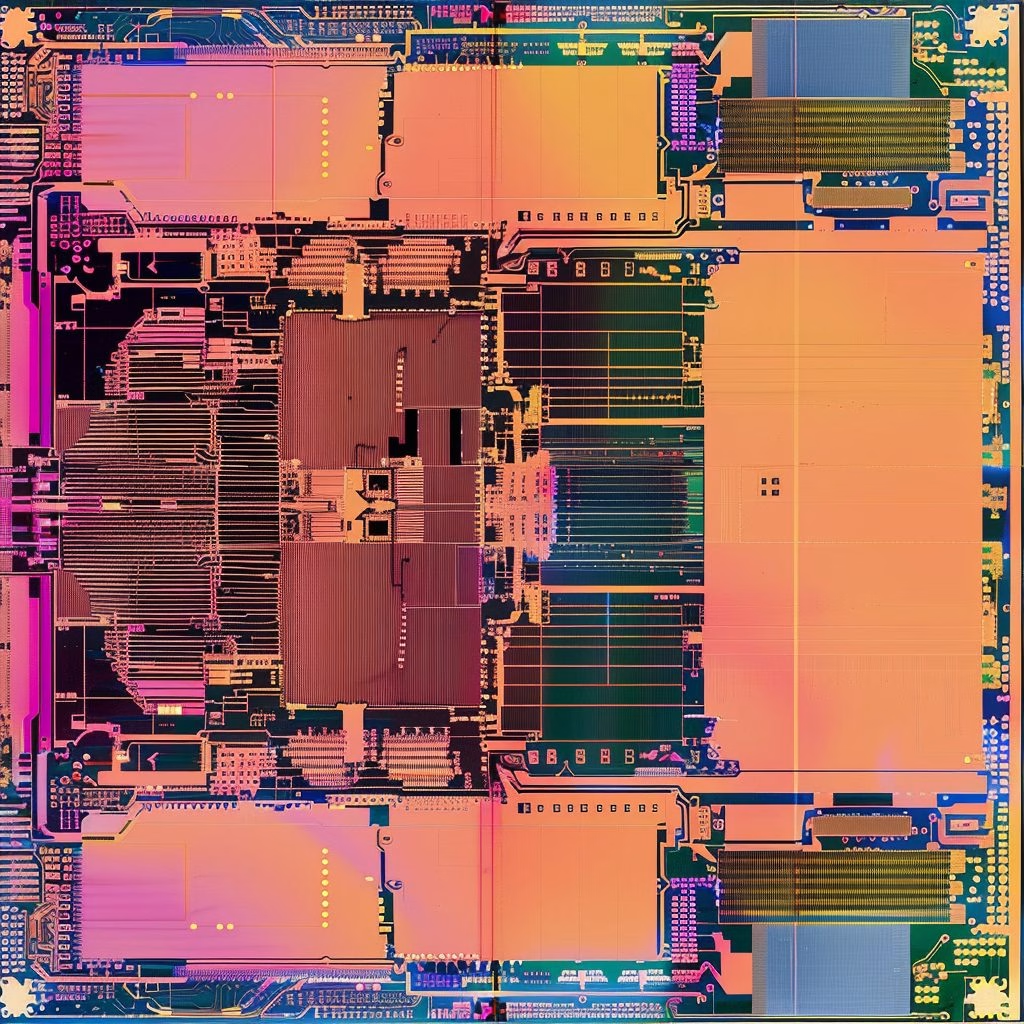

What are AMD Instinct MI210 and MI250 Accelerators?

AMD Instinct is AMD’s family of accelerators for high-performance computing. The MI210 and MI250 are two models in this family, both built on AMD’s CDNA 2 architecture and optimized for machine learning workloads.

How was MLPerf BERT Ported to AMD GPUs?

The team combined several techniques to optimize MLPerf BERT for the AMD Instinct MI210 and MI250 accelerators, including modifying the code to take advantage of the accelerators’ memory hierarchy and parallel processing capabilities.
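A common first step in any such port is confirming which GPU backend the framework actually sees. The Python sketch below assumes PyTorch, which this article does not confirm the team used. It relies on the fact that ROCm builds of PyTorch expose AMD GPUs through the usual `torch.cuda` interface and set `torch.version.hip`. The function name `describe_gpu_backend` is hypothetical.

```python
def describe_gpu_backend():
    """Return a short description of the GPU backend PyTorch is using, if any."""
    try:
        import torch  # assumption: PyTorch is the framework in use
    except ImportError:
        return "PyTorch is not installed"

    if not torch.cuda.is_available():
        return "No GPU visible to PyTorch; running on CPU"

    device_name = torch.cuda.get_device_name(0)
    # torch.version.hip is a version string on ROCm builds and None on CUDA builds.
    hip_version = getattr(torch.version, "hip", None)
    if hip_version:
        return f"ROCm/HIP {hip_version} on {device_name}"
    return f"CUDA {torch.version.cuda} on {device_name}"


if __name__ == "__main__":
    print(describe_gpu_backend())
```

Because the device interface is shared, much model code can run unchanged, so the porting effort tends to concentrate on performance tuning for the specific hardware.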

What are the Benefits of Running MLPerf BERT on AMD GPUs?

Running MLPerf BERT on AMD GPUs has two main benefits. First, it demonstrates that machine learning models can run efficiently on AMD GPUs, giving practitioners another hardware option. Second, it shows that MLPerf is a useful tool for evaluating model performance across hardware platforms.

What are the Future Directions for this Work?

The successful porting of MLPerf BERT to AMD GPUs opens up new possibilities for future research and development. For example, researchers could explore optimizing other machine learning models for AMD GPUs or developing new algorithms that take advantage of the accelerators’ unique features. Additionally, industry professionals could use this work as a starting point for developing their own machine learning applications on AMD GPUs.

How does this Work Impact the Field of Machine Learning?

For machine learning, the port is evidence that a standard BERT benchmark can run efficiently on AMD GPUs, and that MLPerf gives researchers a common yardstick for comparing models across hardware platforms.

What are the Next Steps for this Work?

The next steps involve further optimizing the model for the hardware architecture of the AMD accelerators, for example by fine-tuning the model’s hyperparameters or developing algorithms that take advantage of the accelerators’ features. Researchers could also port other machine learning models to AMD GPUs or build new applications on them.

How does this Work Impact the Field of High-Performance Computing?

For high-performance computing, the port shows that AMD accelerators designed for HPC can also run machine learning workloads efficiently, and that MLPerf can be used to evaluate those workloads alongside other hardware platforms.

What are the Future Directions for High-Performance Computing?

In high-performance computing, follow-on work could include optimizing other machine learning models for AMD GPUs and developing algorithms that take advantage of the accelerators’ features. Industry professionals could also use the port as a starting point for their own machine learning applications on AMD GPUs.

How does this Work Impact the Field of Artificial Intelligence?

For artificial intelligence, the port demonstrates that a widely used language model benchmark runs efficiently on AMD GPUs, and it reinforces MLPerf as a tool for evaluating AI workloads on different hardware platforms.

What are the Future Directions for Artificial Intelligence?

In artificial intelligence, researchers could build on the port by optimizing other models for AMD GPUs or designing algorithms around the accelerators’ features, and practitioners could use it as a starting point for AI applications on AMD hardware.

Conclusion

The port of MLPerf BERT to AMD Instinct MI210 and MI250 accelerators shows that machine learning models can run efficiently on AMD GPUs, a result relevant to both high-performance computing and artificial intelligence. Future work involves further optimizing the model for the accelerators’ hardware architecture and exploring new applications that leverage their capabilities.

Publication details: “Preliminary Results of the MLPerf BERT Inference Benchmark on AMD Instinct GPUs”

Publication Date: 2024-07-17

Authors: Z. Jane Wang, Khai Vu, Miro Hodak, A. Mehrotra, et al.

DOI: https://doi.org/10.1145/3626203.3670589