The increasing demands of artificial intelligence place significant strain on the energy cost and speed of data transfer between processing units, a challenge traditionally addressed by routing and switching infrastructure. Madhuvanthi Srivatsav R, Chiranjib Bhattacharyya, Shantanu Chakrabartty, and Chetan Singh Thakur, from institutions including the Indian Institute of Science and Washington University, investigate a fundamentally different approach, termed processing-in-interconnect, which moves computation directly into the network fabric itself. Their work demonstrates that standard operations within routers and switches (delays, packet handling, and broadcasting) can be repurposed to perform the calculations needed for artificial intelligence, effectively turning the network into a massive, energy-efficient processor. Unlike conventional architectures, this paradigm exhibits improved energy scaling as interconnect bandwidth increases, potentially enabling brain-scale AI inference with power consumption in the hundreds of watts and paving the way for a new generation of scalable, sustainable AI systems.

Routing, switching, and the interconnect fabric are essential components of large-scale neuromorphic computing architectures. Although this fabric nominally plays a supporting role, for large AI workloads it ultimately determines overall system performance, including energy consumption and speed. This research addresses that potential bottleneck by investigating how the computing paradigms already present within these systems can be exploited to accelerate neuromorphic workloads.

Ethernet Hardware Accelerates Spiking Neural Networks

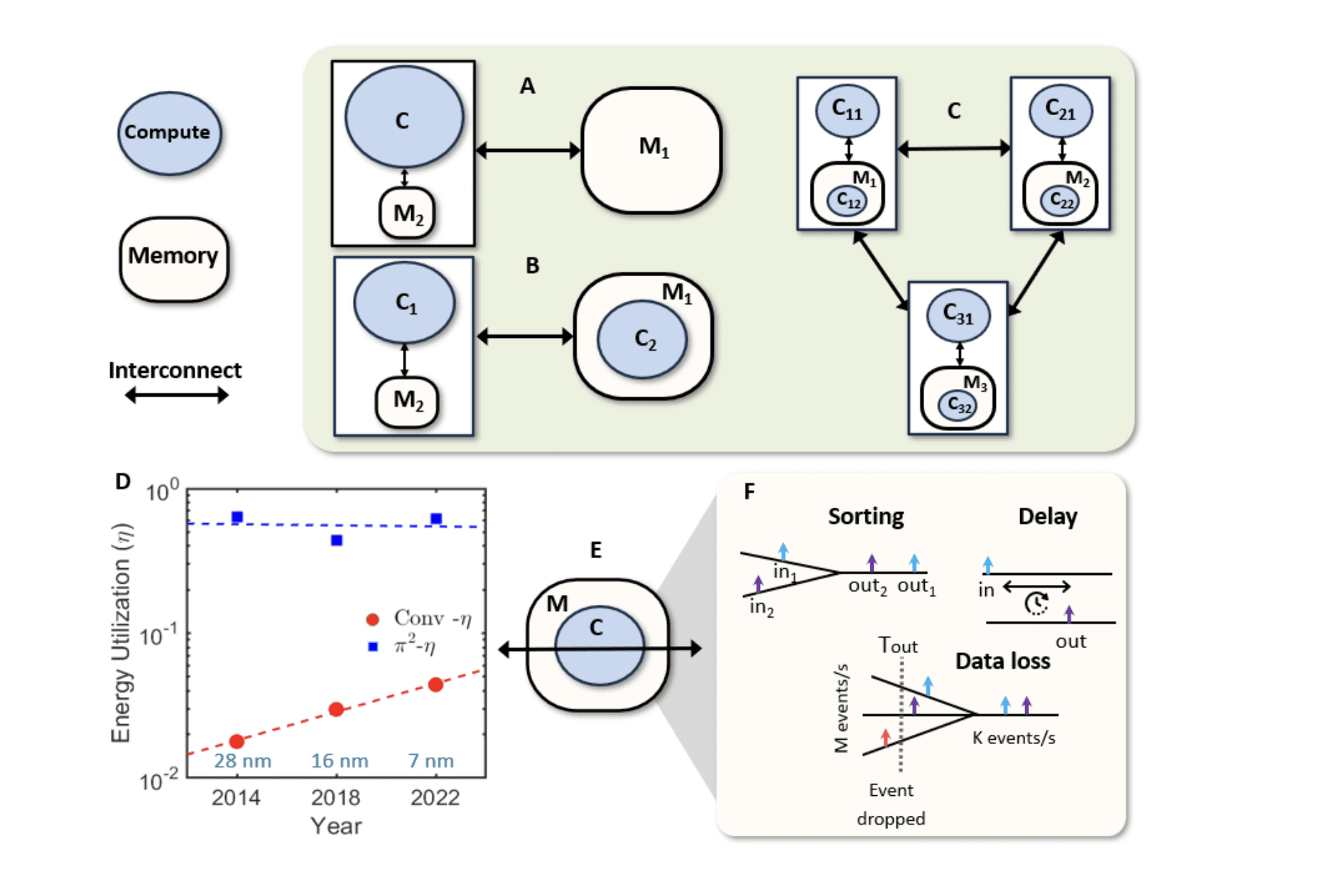

Researchers have developed a novel neural network architecture, π², designed to be efficiently implemented on existing Ethernet networking hardware. This approach leverages Time-Sensitive Networking (TSN) protocols for computation, offering a new pathway for accelerating artificial intelligence. The π² network draws inspiration from spiking neural networks (SNNs), mimicking biological neuron behaviour in a way that’s amenable to hardware acceleration. Key principles include representing synaptic weights as delays, sorting inputs based on arrival time to represent weighted activations, employing event dropping as a form of non-linearity, and using thresholding to determine neuron firing.
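The four principles above can be illustrated with a minimal Python sketch. It assumes unit-weight input events, a fixed per-synapse delay standing in for the synaptic weight, a single timeout for event dropping, and a neuron that fires when its `threshold`-th event arrives. The function name `pi2_neuron` and all of its parameters are hypothetical and not taken from the paper.

```python
import math
from typing import Optional, Sequence


def pi2_neuron(
    input_times: Sequence[float],
    delays: Sequence[float],
    threshold: int,
    timeout: float,
) -> Optional[float]:
    """Return the output event time of a delay-coded neuron, or None if it stays silent.

    Hypothetical model: every input event carries unit weight, each synapse
    delays its event by a fixed amount, and math.inf marks an input that never spiked.
    """
    if len(input_times) != len(delays):
        raise ValueError("need exactly one delay per input")
    if threshold < 1:
        raise ValueError("threshold must be at least 1")

    # Synaptic weight as delay: each event is held back by its synapse's delay.
    arrivals = [t + d for t, d in zip(input_times, delays) if not math.isinf(t)]

    # Event dropping as a non-linearity: events arriving after the timeout are discarded.
    arrivals = [a for a in arrivals if a <= timeout]

    # Sorting by arrival time: earlier arrivals stand for stronger weighted activations.
    arrivals.sort()

    # Thresholding: the neuron fires when its threshold-th event arrives.
    if len(arrivals) < threshold:
        return None
    return arrivals[threshold - 1]
```

For example, `pi2_neuron([0.0, 1.0, 2.0], [3.0, 0.5, 0.0], threshold=2, timeout=10.0)` returns `2.0`: the delayed arrivals are 3.0, 1.5 and 2.0, and the second-earliest is 2.0. Lowering the timeout to 1.8 drops two of the events, so the neuron stays silent.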

This represents a significant departure from traditional neural network implementations that rely on GPUs or specialised AI accelerators. The architecture uses Asynchronous Traffic Shaping (ATS) to delay inputs and the Credit-Based Shaper (CBS) to implement neuron membrane-potential accumulation and firing thresholds. The authors trained a three-layer π² network on the MNIST dataset, demonstrating that it can perform image classification, and used weight distillation to further improve accuracy. The π² K neuron achieved a 6.5x reduction in runtime and a 3x reduction in memory usage compared to a traditional neuron implementation, gains that stem from its reliance on only a partial sort of its inputs. The team projects that the π² architecture can scale to much larger networks and datasets, potentially reaching 4859 trillion synapses, and emphasises its potential for energy efficiency because it runs on standard networking hardware. The architecture is well suited to edge computing applications, where low latency, low power consumption, and cost-effectiveness are critical, and could eventually scale to brain-scale computing.
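The runtime and memory savings above are attributed to a partial sort. The Python sketch below shows why a partial sort can be cheaper, under the assumption (our reading, not stated in the source) that a neuron only needs its K earliest arrivals rather than a full ordering of every input. It uses the standard-library `heapq.nsmallest`; the function names are hypothetical, and the sketch does not attempt to reproduce the reported 6.5x and 3x figures.

```python
import heapq
import random
from typing import List, Sequence


def k_earliest_full_sort(arrivals: Sequence[float], k: int) -> List[float]:
    # Sorts every event: O(n log n) time and O(n) extra memory.
    return sorted(arrivals)[:k]


def k_earliest_partial_sort(arrivals: Sequence[float], k: int) -> List[float]:
    # Keeps only a bounded heap of the k best candidates: O(n log k) time, O(k) extra memory.
    return heapq.nsmallest(k, arrivals)


if __name__ == "__main__":
    rng = random.Random(0)
    events = [rng.uniform(0.0, 10.0) for _ in range(10_000)]
    # Both approaches yield the same k earliest arrivals, in ascending order.
    assert k_earliest_full_sort(events, 8) == k_earliest_partial_sort(events, 8)
    print(k_earliest_partial_sort(events, 3))
```

When K is much smaller than the number of inputs, the heap-based version touches each event once and never materialises a fully sorted copy, which is the general reason a partial sort saves both time and memory.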

Computation Within Network Interconnects Improves AI

Researchers have developed a processing-in-interconnect (π²) architecture that fundamentally reimagines how computation and communication are handled in large-scale systems, particularly for artificial intelligence workloads. The team demonstrates that existing interconnect hardware, traditionally seen as overhead, can be repurposed to perform computational tasks directly, bypassing bottlenecks inherent in conventional architectures. This innovative approach leverages operations already embedded in packet-switching and routing systems, such as delays, timeouts, and packet manipulation, to mimic neuronal and synaptic functions. The research addresses a critical limitation of current AI systems, where communication infrastructure consumes a disproportionate amount of power.

The team quantified this inefficiency with a new metric, η, representing the ratio of energy used for computation to total system energy, and found that conventional architectures struggle to improve this ratio beyond a certain point. In contrast, the π² architecture achieves near-ideal energy utilisation, approaching η = 1, by integrating computation and memory directly into the interconnect fabric. Increasing interconnect bandwidth effectively scales computational power, and the interconnects themselves serve as memory elements. This architecture can be implemented using standard packet-switching networks and established network protocols, avoiding the need for custom hardware development.
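The definition of η can be made concrete with a few lines of Python. Splitting total energy into computation, communication, and memory terms is an assumption made for illustration, as are the energy values used; the function name `energy_utilisation` is hypothetical.

```python
def energy_utilisation(e_compute: float, e_communication: float, e_memory: float = 0.0) -> float:
    """eta = energy spent on computation / total system energy (all in the same unit)."""
    energies = (e_compute, e_communication, e_memory)
    if any(e < 0 for e in energies):
        raise ValueError("energies must be non-negative")
    total = sum(energies)
    if total == 0:
        raise ValueError("total energy must be positive")
    return e_compute / total


# Illustrative, made-up numbers (arbitrary energy units), not results from the paper.
conventional = energy_utilisation(e_compute=1.0, e_communication=4.0)  # data movement dominates
# Assumption: in a processing-in-interconnect design, moving events through the
# fabric is itself the computation, so that energy is counted as compute, not overhead.
in_interconnect = energy_utilisation(e_compute=5.0, e_communication=0.0)
print(f"conventional eta = {conventional:.2f}, in-interconnect eta = {in_interconnect:.2f}")
```

With these toy numbers the script prints `conventional eta = 0.20, in-interconnect eta = 1.00`, mirroring the qualitative contrast described above.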

Furthermore, credit-based traffic shaping, a common technique in Ethernet switches, can be adapted to function as a π² neuron. By integrating temporal input events, the system generates output events using a time-to-first-spike encoding scheme, mirroring biological neural processing. The authors project that this approach could support brain-scale AI inference workloads at power levels in the range of hundreds of watts, unlocking the next generation of intelligent systems.
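The integrate-then-fire behaviour described above can be sketched with a generic time-to-first-spike (TTFS) integrate-and-fire model. Each input event adds to the rate at which a "credit" (the membrane potential) accumulates, loosely echoing how CBS credit changes linearly over time, and the output event is emitted when the credit first reaches a threshold. The linear-ramp response, the signed per-input `weight`, and the function name are assumptions for illustration; the source does not spell out how the paper configures the Credit-Based Shaper to realise this.

```python
from typing import Optional, Sequence, Tuple


def ttfs_output_time(
    events: Sequence[Tuple[float, float]], threshold: float
) -> Optional[float]:
    """Return the first time the accumulated credit reaches `threshold`, or None.

    Each event is (arrival_time, weight). Once an event arrives it adds `weight`
    to the slope of the credit, so credit(t) = sum of w_i * (t - t_i) over events
    with t_i <= t (a non-leaky, linear-ramp integrator).
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    ordered = sorted(events)
    credit, slope, t = 0.0, 0.0, 0.0
    for k, (t_k, w_k) in enumerate(ordered):
        # Integrate the credit from the previous arrival up to this one.
        credit += slope * (t_k - t)
        t = t_k
        if credit >= threshold:  # safety net against floating-point rounding
            return t
        slope += w_k
        # The current slope holds until the next arrival (or forever, after the last one).
        t_next = ordered[k + 1][0] if k + 1 < len(ordered) else float("inf")
        if slope > 0:
            t_cross = t + (threshold - credit) / slope
            if t_cross <= t_next:
                return t_cross  # output event: the time to first spike
    return None
```

For instance, `ttfs_output_time([(0.0, 1.0), (1.0, 1.0)], 2.0)` returns `1.5`, since 1.0 x 1.5 + 1.0 x 0.5 = 2.0. Earlier or more strongly weighted inputs pull the crossing earlier, so the output time itself carries the result, which is the essence of time-to-first-spike coding.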

Computation via Existing Network Interconnects

This research introduces a processing-in-interconnect (π²) paradigm, a neuromorphic computing approach that repurposes existing interconnect hardware for neural network operations. The core innovation lies in mapping neuron and synapse functions onto fundamental communication mechanisms already present in packet-switching networks, such as programmable delays and packet dropping. By turning signal propagation and scheduling into computation, the π² architecture offers a potentially scalable route to physical AI systems without requiring entirely new hardware. The team demonstrates that neural network operations can be implemented using established Ethernet Time-Sensitive Networking traffic-shaping mechanisms, specifically the Credit-Based Shaper (CBS) and Asynchronous Traffic Shaping (ATS).
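For readers unfamiliar with ATS, the Python sketch below shows how a token-bucket shaper turns a rate and a burst budget into per-frame delays (eligibility times). It is a simplified single-flow token bucket in the spirit of ATS, not the standard's full algorithm, and the function and parameter names are hypothetical; how the paper derives synaptic delays from shaper parameters is not described here.

```python
from typing import List, Sequence, Tuple


def eligibility_times(
    frames: Sequence[Tuple[float, float]], rate: float, burst: float
) -> List[float]:
    """Return the eligibility (release) time of each frame in a single FIFO flow.

    frames: (arrival_time, length) pairs in arrival order, with non-negative times;
    length and burst share a unit (e.g. bits) and rate is in that unit per second.
    """
    if rate <= 0:
        raise ValueError("rate must be positive")
    tokens = burst  # the bucket starts full
    t_last = 0.0    # time at which `tokens` was last updated
    released: List[float] = []
    for arrival, length in frames:
        if length > burst:
            raise ValueError("a frame larger than the burst size can never become eligible")
        # FIFO order: a frame cannot become eligible before its predecessor.
        start = max(arrival, t_last)
        # Refill the bucket at `rate`, capped at `burst`.
        tokens = min(burst, tokens + rate * (start - t_last))
        if tokens >= length:
            eligible = start
        else:
            # Hold the frame until enough tokens accumulate; this wait is the shaping delay.
            eligible = start + (length - tokens) / rate
            tokens = length
        tokens -= length
        t_last = eligible
        released.append(eligible)
    return released
```

With `rate=1.0` and `burst=2.0`, two back-to-back 2-unit frames arriving at t = 0 are released at 0 and 2: the second frame waits for the bucket to refill, so the shaper effectively imposes a delay set by its configuration.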

Simulations show that accuracy remains stable even as model complexity increases, suggesting the potential for scaling to brain-scale AI inference with power consumption in the hundreds of watts. Modern Ethernet switches, with their advanced packet processing capabilities, can serve as viable hardware for realising the π² paradigm, leveraging ongoing advances in networking technology. The current work acknowledges limitations stemming from the use of a network simulator, which restricted demonstrations to a three-layer neural network with limited synaptic delay encoding. Future research should focus on implementing the π² architecture on actual switching hardware, utilising commercial or custom chipsets, to fully realise its scaling potential.

👉 More information

🗞 When Routers, Switches and Interconnects Compute: A processing-in-interconnect Paradigm for Scalable Neuromorphic AI

🧠 ArXiv: https://arxiv.org/abs/2508.19548