Large language models excel at producing convincing text, yet they frequently make errors or generate nonsensical statements without recognising their own failings. Amirhosein Ghasemabadi from the University of Alberta and Di Niu address this limitation by investigating whether these models can predict their own failures through internal self-assessment. Their work introduces Gnosis, a mechanism that lets a language model verify its own outputs by analysing hidden states and attention patterns during generation. Across a range of challenging tasks, from mathematical reasoning to open-domain question answering, Gnosis predicts answer correctness more accurately and with better calibration than competing approaches. It does so at minimal additional computational cost, offering a pathway towards more trustworthy and efficient artificial intelligence.

Predicting LLM Correctness From Internal Signals

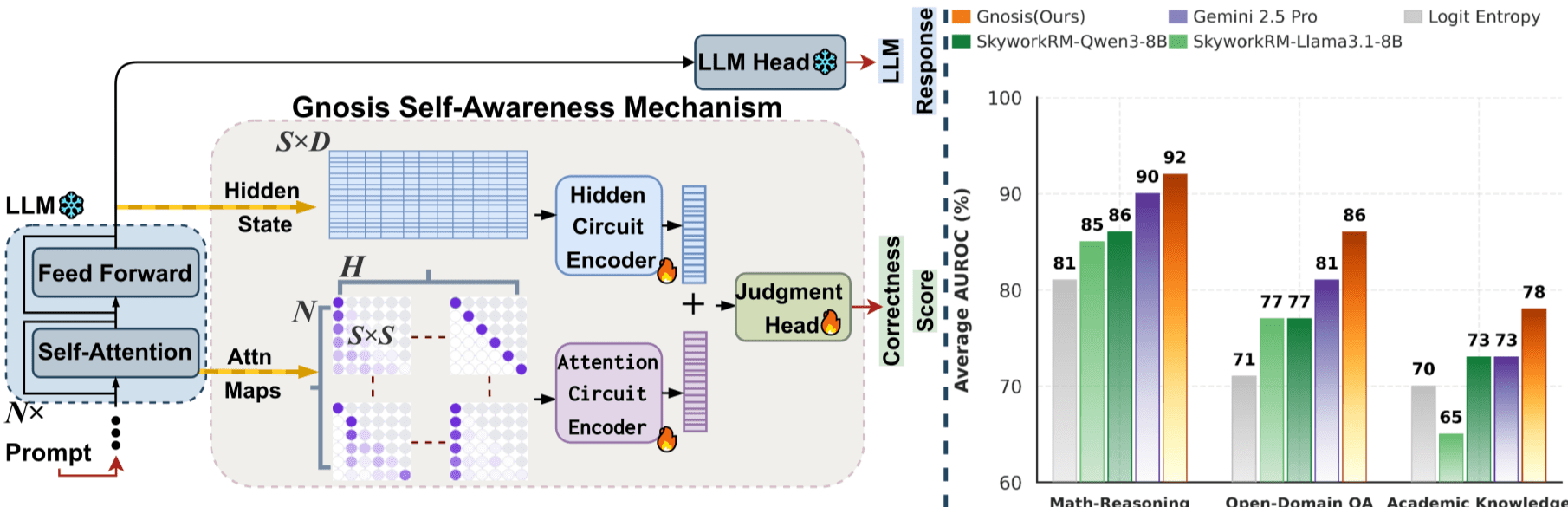

Scientists have developed a method to predict whether a large language model (LLM) output is correct using internal signals (features extracted from the LLM's hidden states and attention mechanisms) rather than external validation. This matters for building trustworthy AI systems, particularly in safety-critical applications. The method extracts features from hidden states and attention maps and then trains a binary classifier to predict answer correctness. The classifier is evaluated with AUROC (area under the ROC curve), AUPR (area under the precision-recall curve), BSS (Brier skill score), and ECE (expected calibration error). Experiments across math reasoning, open-domain question answering, and academic knowledge benchmarks show that combining hidden-state and attention-map features gives the clearest separation between correct and incorrect answers.
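To make the four evaluation metrics concrete, here is a minimal, dependency-free sketch of how they could be computed from a correctness predictor's scores. It assumes labels are 1 for a correct answer and 0 for an incorrect one, that scores are predicted probabilities of correctness, and that "correct" is the positive class for AUPR; the paper may use different conventions (for example, treating errors as the positive class or using a different number of ECE bins). The function names are illustrative, not from the paper.

```python
def auroc(labels, scores):
    """P(random correct answer outscores random incorrect one); ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs both correct and incorrect examples")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """AUPR estimated as average precision, with 'correct' as the positive class."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    hits, total = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            total += hits / rank
    return total / sum(labels)


def brier_skill_score(labels, probs):
    """1 - Brier(model) / Brier(constant forecast at the base rate of correctness)."""
    n = len(labels)
    base_rate = sum(labels) / n
    bs = sum((p - y) ** 2 for p, y in zip(probs, labels)) / n
    bs_ref = sum((base_rate - y) ** 2 for y in labels) / n
    if bs_ref == 0:
        raise ValueError("BSS is undefined when all labels are identical")
    return 1.0 - bs / bs_ref


def expected_calibration_error(labels, probs, n_bins=10):
    """Weighted mean |fraction correct - mean confidence| over equal-width bins."""
    n = len(labels)
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        bins[min(int(p * n_bins), n_bins - 1)].append((p, y))
    ece = 0.0
    for b in bins:
        if b:
            conf = sum(p for p, _ in b) / len(b)
            acc = sum(y for _, y in b) / len(b)
            ece += len(b) / n * abs(acc - conf)
    return ece


labels = [1, 0, 1, 1, 0]
probs = [0.9, 0.2, 0.7, 0.6, 0.4]
print(auroc(labels, probs))                       # 1.0: every correct answer outscores every error
print(round(brier_skill_score(labels, probs), 4))  # 0.6167
print(round(expected_calibration_error(labels, probs), 4))  # 0.28
```

AUROC and AUPR measure how well the scores rank correct answers above incorrect ones, while BSS and ECE measure whether the probabilities themselves can be trusted, which is why a correctness predictor is usually reported on both kinds of metric.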

The method achieves strong discrimination and reliable calibration, matching or exceeding the use of another LLM as a judge while avoiding that approach's cost and biases. Reliable correctness prediction lets developers identify and mitigate likely errors in LLM outputs, an important step towards more trustworthy AI systems. The extracted features can also support internal monitoring and debugging, offering insight into the LLM's reasoning processes.

Predicting LLM Failure with Internal Signals

Scientists developed Gnosis, a self-awareness mechanism that enables large language models to predict their own failures during text generation without external verification and with negligible additional computation. Gnosis decodes signals from internal states and attention patterns, adding approximately 5 million parameters to an existing model. Unlike techniques that rely on external judges, multiple samples, or text-based self-critique, it works directly on the model's internal signals: it extracts information from hidden states and attention maps, compresses these signals into fixed-budget descriptors, and predicts correctness with minimal latency.
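One simple way to picture a "fixed-budget descriptor" is to pool a variable-length sequence of hidden-state vectors into a fixed number of slots, so that a short and a long generation produce inputs of the same size for a small classifier. The sketch below uses evenly spaced mean-pooling followed by a logistic head; both choices, and the names `fixed_budget_pool` and `predict_correctness`, are hypothetical illustrations, not the compression scheme Gnosis actually uses.

```python
import math
from typing import List

Vector = List[float]


def fixed_budget_pool(hidden_states: List[Vector], budget: int) -> List[Vector]:
    """Mean-pool a length-T sequence of d-dim vectors into exactly `budget` vectors.

    Slot boundaries are spread evenly over the sequence, so the output shape is
    (budget, d) regardless of T. Assumption: illustrative pooling, not Gnosis's.
    """
    T = len(hidden_states)
    if T == 0:
        raise ValueError("empty sequence")
    d = len(hidden_states[0])
    pooled = []
    for k in range(budget):
        start = (k * T) // budget
        end = max(((k + 1) * T) // budget, start + 1)  # every slot gets >= 1 token
        chunk = hidden_states[start:end]
        pooled.append([sum(v[j] for v in chunk) / len(chunk) for j in range(d)])
    return pooled


def predict_correctness(descriptor: List[Vector], weights: Vector, bias: float) -> float:
    """Hypothetical linear head: flatten the descriptor and apply a sigmoid."""
    flat = [x for v in descriptor for x in v]
    z = sum(w * x for w, x in zip(weights, flat)) + bias
    return 1.0 / (1.0 + math.exp(-z))


short_seq = [[float(t)] for t in range(5)]     # 5 tokens, d = 1
long_seq = [[float(t)] for t in range(1000)]   # 1000 tokens, d = 1
print(fixed_budget_pool(short_seq, 4))         # [[0.0], [1.0], [2.0], [3.5]]
print(len(fixed_budget_pool(long_seq, 4)))     # 4, same size as the short sequence
```

Because the head always sees `budget * d` numbers, its parameter count and latency stay constant no matter how long the model's answer is.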

The researchers built a dual-stream architecture that jointly models hidden-state evolution and attention routing, capturing reliability cues in a way that does not depend on sequence length. This lets Gnosis analyze the entire generation trajectory, and it consistently outperforms strong internal baselines and external judges, including 8-billion-parameter Skywork reward models and Gemini 2.5 Pro. The work shows that reliable correctness cues are intrinsic to the generation process and can be extracted efficiently, paving the way for practical deployment of reliable, compute-aware language systems. By leveraging internal dynamics, Gnosis advances LLM self-assessment and removes the need for an external verifier.
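As a rough illustration of the dual-stream idea, the sketch below summarises each stream separately and concatenates the results. The hidden-state stream keeps the mean and final-token states; the attention stream keeps the mean and maximum entropy of the attention rows plus the average attention mass on the first token. These particular features and the function names are hypothetical stand-ins chosen for clarity; the paper's streams are learned networks, not hand-picked statistics. Attention rows are assumed to be probability distributions that each sum to 1.

```python
import math
from typing import List

Matrix = List[List[float]]


def row_entropy(row: List[float]) -> float:
    """Shannon entropy (in nats) of one attention row."""
    return -sum(p * math.log(p) for p in row if p > 0.0)


def attention_stream(attn: Matrix) -> List[float]:
    """Length-independent summary of a (query x key) attention map (hypothetical)."""
    ents = [row_entropy(r) for r in attn]
    first_token_mass = [r[0] for r in attn]
    return [sum(ents) / len(ents), max(ents), sum(first_token_mass) / len(attn)]


def hidden_stream(hidden: Matrix) -> List[float]:
    """Mean hidden state over the sequence, followed by the last-token state."""
    T, d = len(hidden), len(hidden[0])
    mean = [sum(h[j] for h in hidden) / T for j in range(d)]
    return mean + list(hidden[-1])


def dual_stream_features(hidden: Matrix, attn: Matrix) -> List[float]:
    """Fuse both streams into one fixed-size vector for a small correctness head."""
    return hidden_stream(hidden) + attention_stream(attn)


hidden = [[1.0, 2.0], [3.0, 4.0]]   # 2 tokens, d = 2
attn = [[1.0, 0.0], [0.5, 0.5]]     # causal attention over 2 positions
print(dual_stream_features(hidden, attn))
# [2.0, 3.0, 3.0, 4.0, 0.3466..., 0.6931..., 0.75]
```

The output length is `2 * d + 3` for any sequence length, which is the property the article describes as capturing reliability cues independent of sequence length.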

LLMs Gain Intrinsic Self-Assessment Capability

Scientists have developed Gnosis, a mechanism that enables large language models (LLMs) to assess the reliability of their own outputs without external verification. It gives frozen LLMs (models whose weights are left unchanged) an intrinsic form of self-awareness by decoding signals from internal states and attention patterns during the generation process. Gnosis extracts reliability cues from the model's internal dynamics, adding approximately 5 million parameters with negligible computational overhead. Experiments show that Gnosis consistently outperforms both internal baselines and large external judges in accuracy and calibration across math reasoning, open-domain question answering, and academic knowledge benchmarks.
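Keeping the LLM frozen means only the small verification head is trained: features read from the model are treated as fixed inputs, and no gradient flows back into the LLM's weights. The sketch below shows that pattern with a logistic-regression head fitted by plain gradient descent on precomputed feature vectors. It is a simplified stand-in (the real Gnosis head is a larger neural network of about 5 million parameters), and `train_head` and the toy data are hypothetical.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_head(features: List[List[float]], labels: List[int],
               lr: float = 0.5, epochs: int = 500) -> Tuple[List[float], float]:
    """Fit a logistic-regression correctness head by full-batch gradient descent.

    `features` are precomputed from a frozen LLM and treated as constants, so
    only the head's d + 1 parameters are updated.
    """
    d, n = len(features[0]), len(labels)
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * d, 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y  # dLoss/dz
            for j in range(d):
                gw[j] += err * x[j] / n
            gb += err / n
        w = [wi - lr * g for wi, g in zip(w, gw)]
        b -= lr * gb
    return w, b


# Toy features: positive values for correct answers (1), negative for errors (0).
X = [[2.0], [1.5], [-1.0], [-2.0]]
y = [1, 1, 0, 0]
w, b = train_head(X, y)
print([round(sigmoid(w[0] * x[0] + b), 3) for x in X])  # high for the first two, low for the rest
```

Because the backbone never changes, the same LLM can serve normal requests while the head runs alongside it, which is what keeps the added cost small.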

Specifically, Gnosis surpasses 8-billion-parameter Skywork reward models and a Gemini 2.5 Pro judge. The team achieved this by jointly modeling hidden-state evolution and attention-routing patterns through a compact architecture that is independent of sequence length. Furthermore, Gnosis generalizes zero-shot to partial generations, enabling early detection of failing reasoning trajectories and compute-aware control. A Gnosis head trained on a smaller LLM also transfers to larger variants without retraining.
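Early detection on partial generations could be used for compute-aware control along the lines of the loop below: score the answer-so-far at regular intervals and stop generating once the predicted probability of correctness drops below a threshold, freeing compute for a retry or a different strategy. The checkpoint interval, the threshold, and the `score_prefix` callable are all hypothetical; the paper's own control policy may differ.

```python
from typing import Callable, Iterable, List, Tuple


def generate_with_early_exit(
    token_stream: Iterable[str],
    score_prefix: Callable[[List[str]], float],
    check_every: int = 64,
    threshold: float = 0.2,
) -> Tuple[List[str], bool]:
    """Consume tokens, scoring the partial generation every `check_every` tokens.

    `score_prefix` is a hypothetical stand-in for a correctness predictor that
    returns P(final answer correct | prefix). Returns (tokens, aborted).
    """
    tokens: List[str] = []
    for tok in token_stream:
        tokens.append(tok)
        if len(tokens) % check_every == 0 and score_prefix(tokens) < threshold:
            return tokens, True   # predicted failure: stop spending compute here
    return tokens, False


# Toy scorer whose confidence decays as the generation grows.
def decaying_score(prefix: List[str]) -> float:
    return 1.0 - len(prefix) / 100


tokens, aborted = generate_with_early_exit(["tok"] * 200, decaying_score,
                                           check_every=10, threshold=0.5)
print(len(tokens), aborted)  # 60 True
```

Checking only every few tokens keeps the monitoring overhead small, while a lower threshold trades fewer false aborts for more wasted compute on failing trajectories.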

Self-Verification Improves Language Model Reliability

Scientists have developed Gnosis, a mechanism that enables large language models to self-verify their outputs by interpreting internal signals during the generation process. This approach lets models detect potential errors without relying on external judges or extensive computational resources, adding only a small number of parameters to existing models. Across tasks including mathematical reasoning and question answering, Gnosis consistently outperforms both internal baselines and larger external judges in accuracy and calibration. Gnosis achieves this by analyzing hidden states and attention patterns within the model itself, demonstrating that strong signals of correctness are intrinsic to the generation process. This points to a more efficient route to reliability: systems that can identify failing outputs with minimal overhead. While Gnosis transfers well to similar models, future work may explore extending it to a broader range of models and tasks.

👉 More information

🗞 Can LLMs Predict Their Own Failures? Self-Awareness via Internal Circuits

🧠 ArXiv: https://arxiv.org/abs/2512.20578