Scientists are increasingly investigating whether artificial intelligence can acquire a human-like understanding of how the physical world operates. Luca M. Schulze Buschoff, Konstantinos Voudouris, and Can Demircan, all from the Institute for Human-Centered AI at Helmholtz Munich, alongside Eric Schulz et al., present research exploring whether vision language models can develop intuitive physics through direct interaction with an environment. The study addresses a significant gap in current AI capabilities: pre-trained models often lack basic physical reasoning, and supervised fine-tuning alone proves insufficient for robust generalisation. Their work investigates whether reinforcement learning, which lets models learn from experience, can foster transferable intuitions about physical dynamics, a crucial step towards more adaptable and intelligent artificial systems.

Current pre-trained vision language models exhibit limited intuitive physics, struggling with tasks that humans find simple. While supervised fine-tuning improves performance on specific tasks, it does not reliably produce models capable of generalising physical rules to new situations.

This work explores a different approach, hypothesising that models require active engagement with an environment to learn its underlying dynamics, mirroring how humans acquire intuitive understanding. The study centres on training models using reinforcement learning, allowing them to learn through trial and error within a simulated environment.

Models were tasked with building towers of coloured blocks generated by a physics engine, receiving rewards based on the stability of the constructed structure. This interactive training was contrasted with a non-interactive method where models were simply shown examples of optimal tower-building sequences.

The core aim was to determine if learning through interaction would foster more generalisable physical intuitions compared to passive observation. Researchers specifically tested whether models trained interactively would demonstrate improved performance on both building new, unseen towers and judging the stability of existing structures.

Evaluation focused on the textual outputs of the models, assessing their ability to articulate solutions and predictions. To further investigate the learning process, the study also examined the internal activations of the models, attempting to decode how effectively they represent key physical quantities like tower stability at different layers.

Surprisingly, the research revealed no significant differences between the interactive and non-interactive training conditions. Both approaches enabled models to excel at the specific tower-building tasks they were trained on, but neither yielded models that reliably generalised to new physical challenges. Despite the ability to decode physical quantities from model activations, this internal competence did not translate into improved performance on unseen tasks, suggesting a disconnect between knowledge representation and practical application.

Tower block dataset construction and model training parameters

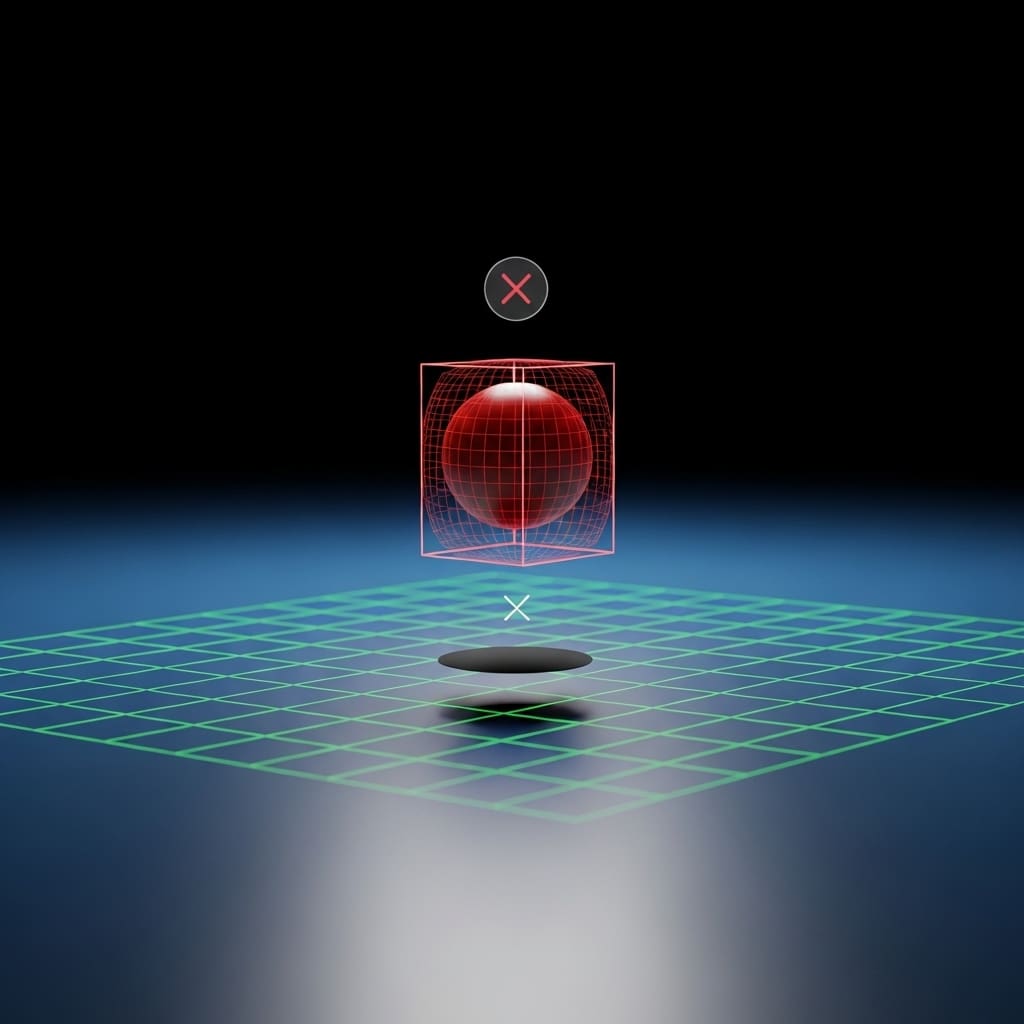

The experimental setup for this research into vision language models and intuitive physics is built on 256×256 pixel RGB images. Two tower block datasets were constructed within the ThreeDWorld environment, each comprising stacks of 2-4 randomly coloured cubes captured from a fixed camera angle.

The camera angle and block sizes remained constant throughout the study to facilitate learning the mapping between pixel space and ground-truth distance. Both datasets featured towers with one intentionally displaced block, either on top of the tower or on the floor beside it. Models were trained on four combinations of dataset and action type using the GRPO algorithm to assess whether interaction with an environment fosters generalisable physical intuitions.

One dataset focused on a displaced top block, while the other presented a displaced side block. Action types included binary stability judgements, which required models to assess whether a tower was stable, and the x-only and x-y tasks, which required models to output precise displacement values that would improve the tower's stability or size. The x-y task extended the x-only task by adding a vertical displacement component.

To further evaluate generalisation, models were also tested on an external dataset of real wooden block towers sourced from Lerer et al. (2016). Layer-wise decoding of model activations was performed to investigate the distinction between model competence and performance, specifically examining the predictability of key physical quantities.

This analysis aimed to determine if interactive training enhanced the decodability of these quantities at later model layers compared to non-interactive training. The study acknowledges that both training methods achieved ceiling performance on the tasks they were trained on, but neither reliably generalised to new physical tasks.
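The layer-wise decoding idea can be illustrated with a small probing sketch. The article does not say which decoder the authors used, so the ridge-regression probe, the synthetic "activations", and every name below (`probe_r2`, `layers`, `target`) are assumptions made purely for illustration; real activations would come from the model's hidden states.

```python
import numpy as np

def probe_r2(acts_train, y_train, acts_test, y_test, ridge=1.0):
    """Fit a ridge-regression probe on one layer and return held-out R^2."""
    # Append a constant column so the probe can learn an offset.
    x_train = np.hstack([acts_train, np.ones((len(acts_train), 1))])
    x_test = np.hstack([acts_test, np.ones((len(acts_test), 1))])
    # Closed-form ridge solution: (X^T X + ridge * I) w = X^T y
    gram = x_train.T @ x_train + ridge * np.eye(x_train.shape[1])
    weights = np.linalg.solve(gram, x_train.T @ y_train)
    pred = x_test @ weights
    ss_res = np.sum((y_test - pred) ** 2)
    ss_tot = np.sum((y_test - y_test.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

# Synthetic stand-ins (assumption): one scalar physical quantity per image,
# and two fake "layers" that encode it with different strength.
rng = np.random.default_rng(0)
n_samples, dim = 200, 16
target = rng.normal(size=n_samples)

layers = {}
for name, signal in [("early", 0.0), ("late", 1.0)]:
    noise = rng.normal(size=(n_samples, dim))
    direction = rng.normal(size=dim)
    layers[name] = noise + signal * np.outer(target, direction)

# Train on the first 150 samples and score on the remaining 50.
scores = {
    name: probe_r2(acts[:150], target[:150], acts[150:], target[150:])
    for name, acts in layers.items()
}
```

A higher held-out score for a layer means the quantity is more easily decodable there. As the study stresses, decodability alone does not guarantee that the model uses the information when it answers.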

Adapter-based reinforcement learning and supervised fine-tuning yield equivalent performance on block manipulation tasks

Researchers evaluated performance improvements achieved through reinforcement learning and supervised fine-tuning methods on vision language models. Group-Relative Policy Optimization (GRPO) and Supervised Fine-Tuning (SFT) were implemented using adapters inserted layer-wise into the model, with adapter sizes of 16×16 across all layers.

Training proceeded for 10,000 steps on single 80GB A100 GPUs. The GRPO objective used normalised advantages and omitted the KL-divergence term, and the models were optimised with Adam. On the binary stability top block task, the GRPO model attained a mean test accuracy of 0.969 after the training period, matching the SFT model, which also achieved 0.969.
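For readers unfamiliar with GRPO, the normalised advantages mentioned above are computed within a group of completions sampled for the same prompt. The following is a minimal sketch of that step only, not the authors' training code; the function name, the `eps` constant, and the choice of the population standard deviation are assumptions.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """Normalise the rewards of one group of sampled completions.

    Each advantage is (reward - group mean) / (group std + eps), so
    completions are scored relative to their siblings rather than
    against a learned value function.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; an assumption of this sketch
    return [(r - mu) / (sigma + eps) for r in rewards]

# Hypothetical rewards for four completions sampled for the same image.
advantages = group_relative_advantages([20.0, 5.0, -2.0, 1.0])
```

When every completion in a group receives the same reward, all advantages are zero and the group contributes no learning signal.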

For the x-only top block task, where models predict a single integer to reposition a block, the GRPO model reached a mean test reward of 19.999, identical to the score obtained by the SFT model. Similarly, on the x-only side block task, requiring integer prediction for block repositioning, the GRPO model achieved a mean test reward of 19.998, again mirroring the SFT model’s result of 19.998.

Reward functions were task-specific, assigning values of −1, 0, and 1 for non-parseable, incorrect, and correct answers respectively on the binary stability task. Gaussian functions were used to reward proximity to optimal positions in the x-only and x-y tasks, with rewards ranging from −5 to 20 based on distance and stability.

Specifically, unstable towers received weaker Gaussian rewards of $2e^{-d^2} - 2$, while stable towers were rewarded with $20e^{-d^2}$, where $d$ represents the distance from the optimal position. The research demonstrates consistent performance across both training methods on the evaluated tasks.
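The reward rules described above can be written down in a few lines. This is a sketch based only on the values quoted in the article: the function names and the assumed "yes"/"no" answer format are hypothetical. The article does not say which case produces the stated lower bound of −5, so that case is left out.

```python
import math

def binary_stability_reward(answer, is_stable):
    """Return -1 for a non-parseable answer, 0 for incorrect, 1 for correct."""
    text = answer.strip().lower()
    if text not in ("yes", "no"):  # assumed answer format
        return -1
    predicted_stable = text == "yes"
    return 1 if predicted_stable == is_stable else 0

def placement_reward(d, stable):
    """Gaussian reward for the x-only and x-y tasks.

    d is the distance between the predicted and optimal block position.
    Stable towers earn 20 * exp(-d^2); unstable towers earn the weaker
    2 * exp(-d^2) - 2, which never exceeds 0.
    """
    if stable:
        return 20.0 * math.exp(-d ** 2)
    return 2.0 * math.exp(-d ** 2) - 2.0

# A perfectly placed block on a stable tower earns the maximum reward.
best = placement_reward(0.0, stable=True)
```

Because the unstable branch is capped at 0, a placement only earns a positive reward when the resulting tower is stable.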

Limited generalisation in vision language models despite interactive training

Researchers investigated whether vision language models could develop generalisable physical intuitions through interaction with an environment. Models were trained with either reinforcement learning or supervised fine-tuning, but neither method reliably enabled generalisation to related intuitive physics tasks.

Findings indicate that these models learn task-specific shortcuts instead of robust, transferable physical understanding, even when trained through interaction. This research challenges the notion that simply exposing models to an interactive environment, or employing parameter-efficient fine-tuning methods, is sufficient for acquiring human-like reasoning abilities regarding the physical world.

The study acknowledges limitations related to model size and the quantity of training data: it focused on models of 7, 8, and 32 billion parameters trained on relatively small datasets. Furthermore, investigations were limited to single-step interactions, and the potential benefits of extended interaction sequences remain unexplored. Future research will examine the impact of larger models, increased data volumes, and multi-step interactions to determine whether these factors could facilitate the development of more generalisable intuitions.

👉 More information

🗞 Can vision language models learn intuitive physics from interaction?

🧠 ArXiv: https://arxiv.org/abs/2602.06033