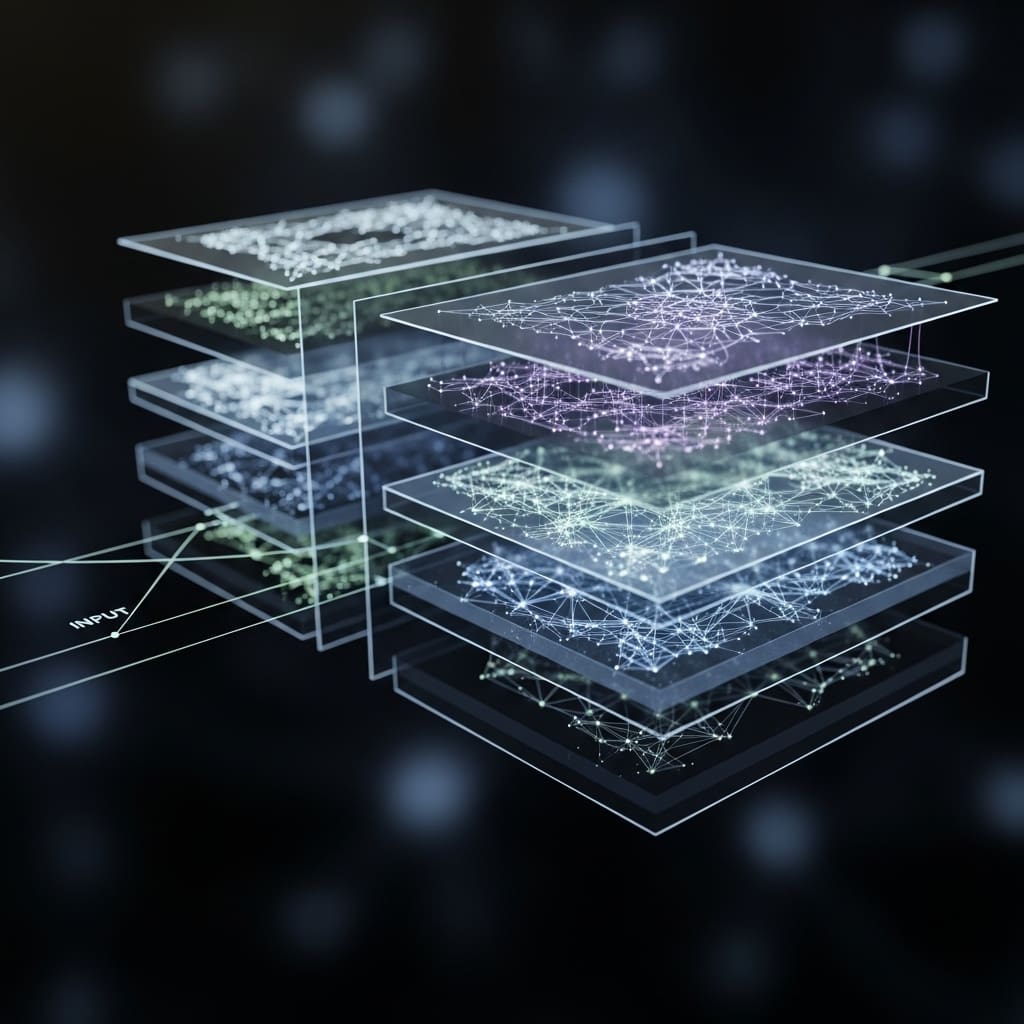

Researchers are increasingly focused on understanding the internal workings of large language models (LLMs). Grace Luo, Jiahai Feng, and Trevor Darrell from UC Berkeley, alongside Alec Radford and Jacob Steinhardt, present a novel approach to analysing LLM activations by training diffusion models on a billion residual stream activations, effectively creating ‘meta-models’ that capture the distribution of internal states. This work represents a significant step forward as it moves beyond methods reliant on strong structural assumptions, instead uncovering inherent structure and improving intervention fidelity. Their findings demonstrate that decreasing diffusion loss correlates with improved fluency in steering interventions and increased conceptual isolation of neurons, suggesting generative meta-models offer a scalable route to LLM interpretability without restrictive priors.

Learning Activation Manifolds with Deep Diffusion for Improved Language Model Control

Researchers have developed a new generative model, termed the Generative Latent Prior (GLP), designed to analyse and manipulate neural network activations within large language models. This work addresses limitations in existing activation analysis methods, such as PCA and sparse autoencoders, which rely on potentially restrictive structural assumptions.

The GLP is a deep diffusion model trained on one billion residual stream activations, learning the distribution of a network’s internal states without imposing such constraints. The diffusion loss decreases consistently as training compute increases and reliably predicts performance on downstream tasks.

Specifically, applying the GLP’s learned prior to steering interventions significantly improves the fluency of generated text, with larger gains observed as the diffusion loss decreases. This suggests the model effectively projects manipulated activations back onto the natural activation manifold, preserving semantic content and avoiding the degradation often seen with direct intervention.

Furthermore, GLP’s own neurons increasingly isolate concepts into individual units: sparse probing scores improve as the diffusion loss decreases. These results indicate that generative meta-models offer a scalable route towards interpretability, circumventing the need for restrictive structural assumptions.

The study details the training of GLP, a diffusion model fit on activation data using a deep MLP architecture. Training used one billion residual stream activations, which are readily obtainable from the source language model. Model quality was assessed using the Fréchet Distance and PCA, confirming that generated activations are nearly indistinguishable from real ones.
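To make the Fréchet Distance check concrete, here is a minimal sketch that assumes the standard Gaussian form of the metric (fit a mean and covariance to each set, then compare them). The function name, the synthetic arrays, and the choice of NumPy are illustrative assumptions, not details taken from the paper.

```python
import numpy as np

def frechet_distance(real, generated):
    """Frechet distance between Gaussians fitted to two sets of vectors.

    real, generated: arrays of shape (n_samples, dim).
    """
    mu_r, mu_g = real.mean(axis=0), generated.mean(axis=0)
    cov_r = np.cov(real, rowvar=False)
    cov_g = np.cov(generated, rowvar=False)
    # Tr((cov_r cov_g)^{1/2}) equals the sum of square roots of the eigenvalues
    # of cov_r @ cov_g, which are real and non-negative for PSD covariances.
    eigvals = np.linalg.eigvals(cov_r @ cov_g)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = np.sum((mu_r - mu_g) ** 2)
    return float(mean_term + np.trace(cov_r) + np.trace(cov_g) - 2.0 * trace_sqrt)

# Synthetic stand-ins for activations (hypothetical data, not real LLM states).
rng = np.random.default_rng(0)
real = rng.normal(size=(2000, 8))
same = rng.normal(size=(2000, 8))               # drawn from the same distribution
shifted = rng.normal(loc=0.5, size=(2000, 8))   # mean shifted in every dimension
fd_same = frechet_distance(real, same)
fd_shifted = frechet_distance(real, shifted)
```

A well-fit generative model should behave like `same` here: its samples yield a distance close to zero, while a mismatched distribution such as `shifted` yields a clearly larger value.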

Application of GLP to interpretability tasks, including activation steering, demonstrated improvements in fluency across benchmarks such as sentiment control, SAE feature steering, and persona elicitation. Across models ranging from 0.5 billion to 3.3 billion parameters, the diffusion loss exhibited a predictable power law scaling with compute, halving the distance to its theoretical floor with each 60-fold increase in computational resources.
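The "halving per 60-fold increase" statement pins down the power-law exponent. As a sketch, assume the loss takes the usual form L(C) = floor + scale · C^(−α); then 60^(−α) = 1/2 gives α = ln 2 / ln 60. The `scale` value and the compute budget below are hypothetical, and the floor of 0.52 is the irreducible error quoted elsewhere in this article.

```python
import math

# Exponent implied by "halving the gap to the floor per 60x compute":
# 60 ** (-alpha) == 0.5  =>  alpha = ln 2 / ln 60.
alpha = math.log(2) / math.log(60)

def loss(compute, floor=0.52, scale=1.0):
    """Power law with an irreducible floor: L(C) = floor + scale * C**(-alpha).

    `scale` is a hypothetical constant chosen only for illustration.
    """
    return floor + scale * compute ** (-alpha)

c = 1e3  # hypothetical compute budget (arbitrary units)
gap_before = loss(c) - 0.52
gap_after = loss(60 * c) - 0.52
print(round(alpha, 3))          # 0.169
print(gap_after / gap_before)   # ratio of the gaps after a 60x compute increase
```

Under this assumed form, the gap ratio is 0.5 (up to floating-point error) whatever the starting budget, which is what makes the loss curve extrapolable.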

This scaling translated directly into better steering and probing performance, with gains closely mirroring the reduction in loss. Diffusion loss is therefore a reliable predictor of downstream utility, and continued scaling should yield further improvements. The work contributes to the growing field of meta-modelling, which focuses on generative models of neural network components, and highlights the value of a trained model in encoding structural information for use as a prior or feature extractor.

Training a Generative Latent Prior and Manifold Fidelity Assessment

Diffusion models underpin this work, trained on one billion residual stream activations to create a “Generative Latent Prior”, or GLP. The research team trained these deep diffusion MLPs on readily available activation data from a large language model. Model quality was initially checked using the Fréchet Distance and Principal Component Analysis to verify that generated activations closely resembled real ones.

To assess manifold fidelity, the team employed these techniques to confirm GLP’s ability to produce realistic activation patterns. A key methodological innovation involves post-processing steered activations with the diffusion model. This process projects activations that fall off the natural activation manifold back onto it, preserving semantic content and mitigating fluency degradation.
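The article does not spell out the sampling procedure behind this post-processing step. The toy below only illustrates the underlying intuition, with an analytic Gaussian denoiser standing in for the learned diffusion model: treating the steered activation as a noisy sample and denoising it shrinks directions where real activations barely vary (off-manifold) much more than directions where they vary a lot (on-manifold). All names and numbers are hypothetical.

```python
def gaussian_denoise(activation, variances, noise_std):
    """Posterior mean E[x | z] for x ~ N(0, diag(variances)), z = x + noise.

    Stand-in for a learned denoiser: each coordinate is shrunk by
    var / (var + noise_std**2), so low-variance (off-manifold) directions
    are suppressed far more than high-variance (on-manifold) ones.
    """
    return [z * v / (v + noise_std ** 2) for z, v in zip(activation, variances)]

# Toy "manifold": coordinate 0 carries the signal, coordinate 1 is nearly constant.
variances = [4.0, 0.01]
steered = [3.0, 2.0]   # hypothetical steered activation pushed off-manifold
projected = gaussian_denoise(steered, variances, noise_std=0.5)
```

In this toy, the on-manifold coordinate is largely preserved while the off-manifold coordinate collapses towards zero. This is a simplified analogue of keeping the steering effect while restoring a natural-looking activation.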

The effectiveness of this approach was benchmarked across sentiment control, SAE feature steering, and persona elicitation tasks. Performance gains were directly linked to the quality of the diffusion model, demonstrating improved fluency alongside equivalent steering effects. Further analysis focused on the interpretability of GLP’s internal representations.

Researchers evaluated “meta-neurons”, the model’s intermediate features, using one-dimensional probing across 113 binary tasks. These meta-neurons outperformed both SAE features and raw language model neurons, suggesting GLP effectively isolates interpretable concepts into distinct units. The study also demonstrated a predictable scaling relationship between compute and performance.
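One-dimensional probing can be sketched as follows: score each feature on its own by how well its raw value separates the two classes, then report the best one. This is an illustrative reading only. Using AUC as the score, counting anti-correlated features via max(AUC, 1 − AUC), and the toy data are all assumptions rather than the paper's exact protocol.

```python
def auc(scores, labels):
    """Area under the ROC curve via pairwise comparison (ties count as 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def best_1d_probe(features, labels):
    """Return (index, AUC) of the single feature that best separates the labels.

    features: list of rows, one row of feature values per example.
    A feature that is anti-correlated with the label is just as useful,
    so each feature is scored by max(AUC, 1 - AUC).
    """
    n_features = len(features[0])
    results = []
    for j in range(n_features):
        column = [row[j] for row in features]
        a = auc(column, labels)
        results.append((max(a, 1.0 - a), j))
    best_auc, best_index = max(results)
    return best_index, best_auc

# Hypothetical toy data: feature 1 tracks the label, features 0 and 2 do not.
features = [
    [0.2, 0.9, 0.5],
    [0.7, 0.8, 0.1],
    [0.4, 0.1, 0.6],
    [0.6, 0.2, 0.3],
]
labels = [1, 1, 0, 0]
index, score = best_1d_probe(features, labels)
```

A high best-feature AUC means a concept is readable from a single unit, which is the sense in which a representation "isolates" that concept.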

Diffusion loss decreased following a power law as compute increased, with an estimated irreducible error of 0.52. This decrease in loss directly correlated with improvements in both steering and probing performance, establishing diffusion loss as a reliable predictor of downstream utility.

Diffusion loss scaling predicts steering fluency and concept isolation

Diffusion loss decreased smoothly with increasing compute during the training of a generative diffusion model, termed the Generative Latent Prior, or GLP. Specifically, the diffusion loss followed a power law that approaches an estimated irreducible error of 0.52. This model was trained on one billion residual stream activations and exhibits predictable scaling with computational resources.

Steering performance for controlling positive sentiment improved alongside the reduction in diffusion loss, with gains observed across benchmarks including sentiment control, SAE feature steering, and persona elicitation. The research demonstrates that applying the learned prior from the meta-model to steering interventions enhances fluency.

On-manifold sentiment steering achieved a mean fluency score of 0.63, with this value related to compute via the function $f(C) = 0.63 - 3.92 \times 10^{6} \cdot C^{-0.420}$, where $C$ represents FLOPs. Furthermore, the model’s neurons increasingly isolated concepts into individual units as loss decreased, as evidenced by 1-D probing results.

Average 1-D probe AUC scores reached 0.80, scaling with compute according to the function $f(C) = 1.00 - 8.01 \cdot C^{-0.085}$. Across models ranging from 0.5 billion to 3.3 billion parameters, the gap between the diffusion loss and its estimated floor halved with each 60-fold increase in compute. This predictable scaling directly translated to improvements in both steering and probing performance, with gains closely mirroring the reduction in loss. The diffusion loss therefore functions as a reliable predictor of downstream utility, suggesting that continued scaling will yield further gains in interpretability.
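The two fitted curves can be evaluated directly. The sketch below reads them as 0.63 − 3.92 × 10^6 · C^(−0.420) for steering fluency and 1.00 − 8.01 · C^(−0.085) for probe AUC, with C in FLOPs. The compute budgets in the loop are hypothetical values chosen for illustration, not figures from the paper.

```python
def steering_fluency(flops):
    """Fitted fluency curve quoted in the text: 0.63 - 3.92e6 * C**-0.420."""
    return 0.63 - 3.92e6 * flops ** -0.420

def probe_auc(flops):
    """Fitted 1-D probe AUC curve quoted in the text: 1.00 - 8.01 * C**-0.085."""
    return 1.00 - 8.01 * flops ** -0.085

for flops in (1e17, 1e18, 1e19):  # hypothetical compute budgets in FLOPs
    print(f"{flops:.0e}  fluency={steering_fluency(flops):.3f}  auc={probe_auc(flops):.3f}")
```

Both functions rise monotonically with compute and saturate at their leading constants, 0.63 and 1.00, which are the values the fits approach as compute grows without bound.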

Learned Internal Representations Predict Language Model Behaviour and Conceptual Specialisation

Scientists have developed generative models to understand the internal workings of large language models without relying on pre-defined structural assumptions. These models, termed “meta-models”, are trained on a substantial dataset of one billion residual stream activations to learn the distribution of internal states within the language model.

Results demonstrate that a reduction in diffusion loss, a measure of how well the model reconstructs the activations, correlates with improved performance in downstream tasks. Specifically, incorporating the learned prior from the meta-model into steering interventions, methods for altering a language model’s behaviour, enhances the fluency of the resulting text.

Furthermore, the meta-model’s individual neurons increasingly represent distinct concepts: sparse probing scores rise as the diffusion loss falls. This suggests a scalable approach to interpretability, moving beyond methods that impose rigid structures on the data. The authors acknowledge limitations, including the independent modelling of single-token activations and the unconditional nature of the current generative latent prior.

Future research could explore multi-token modelling, conditioning on clean activations, and extending the approach to different activation types or multiple layers within the language model. Analogies with image diffusion techniques also suggest potential applications in identifying unusual or out-of-distribution activations, and importing further advancements from diffusion models into neural network interpretability.

👉 More information

🗞 Learning a Generative Meta-Model of LLM Activations

🧠 ArXiv: https://arxiv.org/abs/2602.06964