Research demonstrates improved detection of online sexism through novel data augmentation techniques. Definition-based augmentation and contextual semantic expansion address data limitations and nuanced language. An ensemble strategy enhances fine-grained classification, achieving a 4.1 point increase in macro F1 score for complex categorisation tasks.

The pervasive issue of online sexism presents a significant challenge for automated content moderation systems, which are hampered by limited training data and the subtle, context-dependent nature of harmful language. Researchers are now focusing on methods to augment existing datasets and refine classification accuracy. A team led by Sahrish Khan (University of Warwick) and Arshad Jhumka (University of Leeds), with contributions from Gabriele Pergola (University of Warwick), details its approach in “Explaining Matters: Leveraging Definitions and Semantic Expansion for Sexism Detection”. The work introduces two data augmentation techniques – definition-based augmentation and contextual semantic expansion – alongside an ensemble strategy to make the identification of sexism more reliable, achieving performance gains on the Explainable Detection of Online Sexism (EDOS) dataset.

Nuance in Numbers: Refining Automated Sexism Detection

Automated systems increasingly attempt to identify sexism in online content, but limitations in available data and the subtle nature of prejudiced language pose significant challenges. Researchers are addressing these issues by constructing a detailed taxonomy of sexist expression and applying it to a newly annotated dataset, to improve detection accuracy and foster a more granular understanding of online bias. This work delivers a valuable resource for developers and researchers building systems to detect and counter sexism, ultimately promoting safer and more inclusive digital spaces.

The team developed a four-category taxonomy: Threats/Plans to Harm, Derogation, Animosity, and Prejudiced Discussion. Each category branches into specific vectors, providing a detailed framework for consistent annotation. For example, Derogation includes vectors such as ‘Descriptive Attacks’ and ‘Aggressive and Emotive Attacks’, enabling precise identification of different forms of sexist expression beyond simple binary classifications.
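As a rough illustration of how such a two-level taxonomy might be represented for annotation tooling, the sketch below stores categories and their vectors in a dictionary. The four category names and the two Derogation vectors are taken from the text above; the empty lists are deliberate gaps, not a claim that those categories have no vectors, and `is_valid_label` is a hypothetical helper.

```python
from typing import Dict, List, Optional

# Category names and the two Derogation vectors come from the article.
# The remaining vector lists are left empty because the article does not name them.
TAXONOMY: Dict[str, List[str]] = {
    "Threats/Plans to Harm": [],
    "Derogation": ["Descriptive Attacks", "Aggressive and Emotive Attacks"],
    "Animosity": [],
    "Prejudiced Discussion": [],
}


def is_valid_label(category: str, vector: Optional[str] = None) -> bool:
    """Check an annotation against the taxonomy (hypothetical helper)."""
    if category not in TAXONOMY:
        return False
    # A category-only label is valid; a vector must belong to its category.
    return vector is None or vector in TAXONOMY[category]
```

A check like this lets an annotation pipeline reject labels that pair a vector with the wrong category before they reach adjudication.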

Analysis of annotation discrepancies revealed inherent difficulties in classifying sexism. Annotators frequently disagreed on labels, particularly within certain categories, highlighting the ambiguity of language and the subjective interpretation of prejudiced statements. Researchers emphasise the need for exceptionally clear guidelines, thorough annotator training, and a robust adjudication process to resolve conflicting labels. While a fine-grained taxonomy captures nuance, it also increases annotation complexity and the potential for disagreement.

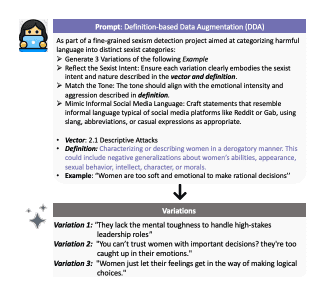

To address limited data and nuanced expression, the team proposes two data augmentation techniques: Definition-based Data Augmentation (DDA) and Contextual Semantic Expansion (CSE). DDA generates synthetic examples based on the category definitions within the taxonomy. CSE enriches existing examples with task-specific semantic features, creating a more robust and comprehensive training dataset.
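The article does not spell out how either technique is implemented, so the following is only a minimal sketch of the general idea. It assumes a text generator is available as a plain callable; `generate`, `explain`, the prompt wording, and the `[EXPLANATION]` separator are all hypothetical and not taken from the paper.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (text, category label)


def build_dda_prompt(category: str, definition: str, n: int) -> str:
    """Turn a category definition into a generation prompt (hypothetical wording)."""
    return (
        f"Category: {category}\n"
        f"Definition: {definition}\n"
        f"Write {n} short, distinct example posts matching this definition, one per line."
    )


def definition_based_augment(
    category: str,
    definition: str,
    generate: Callable[[str], str],  # assumed: any text generator, e.g. an LLM wrapper
    n: int = 3,
) -> List[Example]:
    """Create up to n synthetic labelled examples from a category definition."""
    raw = generate(build_dda_prompt(category, definition, n))
    texts = [line.strip() for line in raw.splitlines() if line.strip()]
    return [(text, category) for text in texts[:n]]


def semantic_expand(text: str, explain: Callable[[str], str]) -> str:
    """Append a task-specific explanation to an existing example (assumed format)."""
    return f"{text} [EXPLANATION] {explain(text)}"
```

In this sketch the synthetic examples inherit their label from the definition that produced them, while the expansion step leaves the label untouched and only lengthens the input text.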

Evaluation on the Explainable Detection of Online Sexism (EDOS) dataset shows improved performance: the methods achieved a 1.5-point gain in macro F1 score for binary classification and a 4.1-point gain for fine-grained classification.
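Macro F1 is the unweighted mean of per-class F1 scores, so rare categories count as much as common ones, which is why it suits fine-grained tasks with skewed label distributions. A "point" here is conventionally read as one hundredth on the 0 to 1 scale, so a 4.1-point gain would correspond to +0.041. The sketch below computes the metric from scratch on toy labels; the data is made up for illustration and is not from EDOS.

```python
from typing import Sequence


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 over all labels seen in either list."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never appears.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy example: class "a" has F1 = 2/3, class "b" has F1 = 0.8.
score = macro_f1(["a", "a", "b", "b"], ["a", "b", "b", "b"])
print(round(score, 4))  # 0.7333
```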

Together, DDA and CSE yield a measurable improvement in model performance, particularly in fine-grained classification. The research also introduces an ensemble strategy that resolves prediction ambiguities by aggregating the outputs of multiple models, making results more robust. The authors report state-of-the-art performance on the EDOS dataset across all tasks.
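The article does not say which aggregation rule the ensemble uses. One common choice is majority voting, sketched below as an assumption rather than a description of the authors' method; the function name and the tie-breaking rule (the label predicted earliest in the list wins) are illustrative.

```python
from collections import Counter
from typing import Sequence


def majority_vote(predictions: Sequence[str]) -> str:
    """Return the label predicted by most models.

    Ties go to the label that appears first in `predictions`, because
    Counter.most_common keeps first-seen order among equal counts.
    """
    if not predictions:
        raise ValueError("need at least one prediction")
    return Counter(predictions).most_common(1)[0][0]


# Three hypothetical models disagree on one post; two of them agree.
print(majority_vote(["Derogation", "Animosity", "Derogation"]))  # Derogation
```

Ordering the models from most to least trusted makes the tie-break meaningful; averaging class probabilities would be an alternative when the models expose confidence scores.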

Future work will focus on expanding the taxonomy to cover a wider range of subtle and evolving forms of sexism, so that the system remains adaptable. Researchers also plan to investigate incorporating contextual information and user behaviour to further improve accuracy and robustness.

👉 More information

🗞 Explaining Matters: Leveraging Definitions and Semantic Expansion for Sexism Detection

🧠 DOI: https://doi.org/10.48550/arXiv.2506.06238