Judging how realistic an AI-generated image appears, and pinpointing the flaws within it, remains a significant challenge in advancing generative artificial intelligence. Lovish Kaushik, Agnij Biswas, and Somdyuti Paul, all from the Indian Institute of Technology, Kharagpur, address this problem with a new framework that both assesses overall image realism and identifies the specific regions where inconsistencies occur. Their approach uses textual descriptions of visual flaws, generated by vision-language models, as a stand-in for the detailed feedback a human annotator would provide. This multimodal technique predicts image realism more accurately than single-modality approaches and produces detailed maps showing which parts of an image look most and least convincing, a step towards more photorealistic AI image generation.

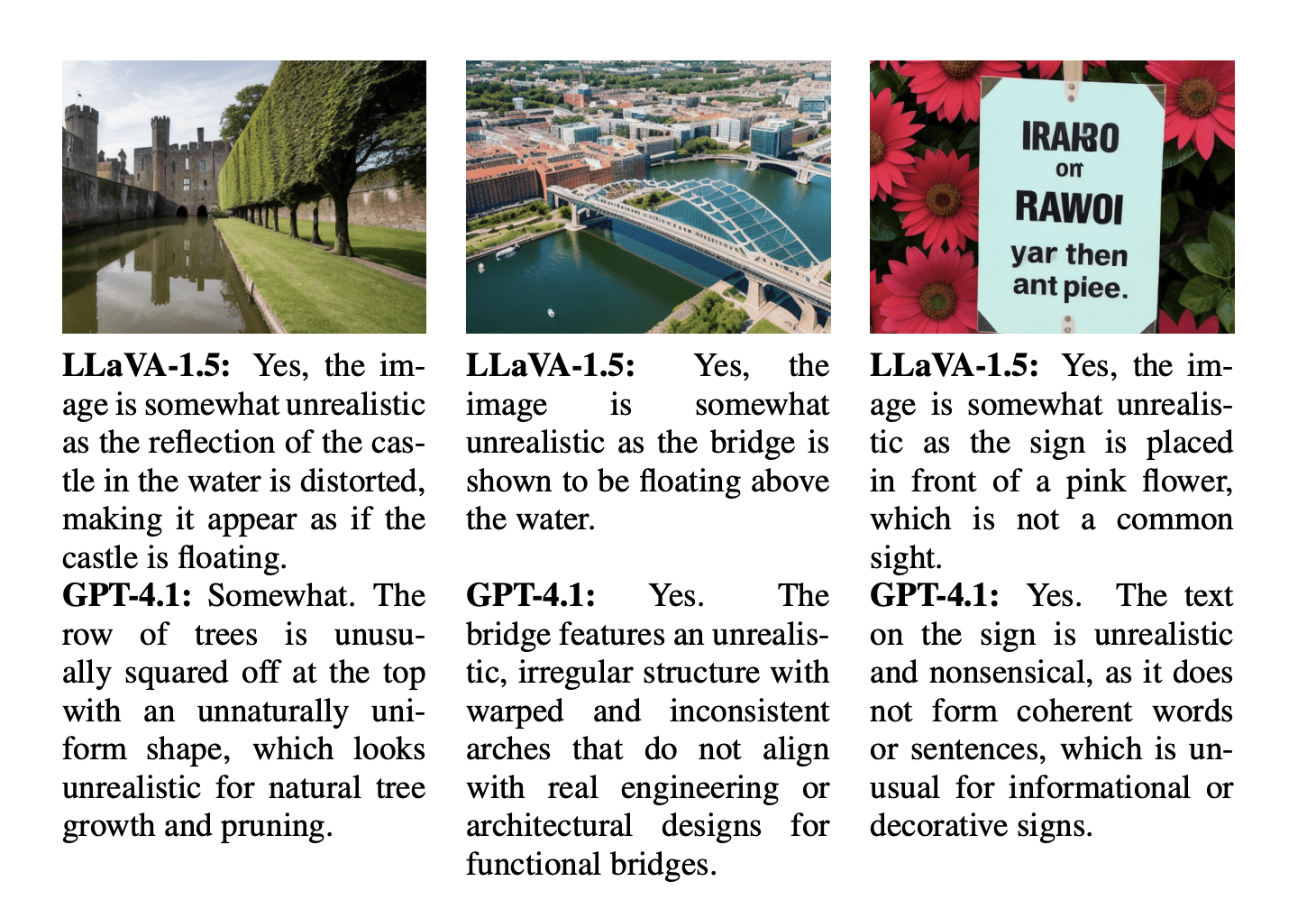

Objective realness assessment and local inconsistency identification for AI-generated images use textual descriptions of visual inconsistencies, generated by vision-language models trained on large datasets, as reliable substitutes for human annotations. The results show that the proposed multimodal approach improves objective realness prediction and produces dense realness maps that effectively distinguish realistic from unrealistic spatial regions.

Realness and Localization with Multimodal Learning

Scientists are developing new methods to automatically assess the quality and realism of images created by artificial intelligence, a task that grows more important as AI image generation becomes more prevalent. This research focuses on a system that not only scores how believable an image is but also explains that score by identifying its unrealistic details. The team introduces REALM, a framework that combines visual features extracted from the image with textual descriptions of its visual inconsistencies, drawing on deep learning and large language models to exploit the complementary strengths of the two modalities. A key feature of REALM is its ability to pinpoint unrealistic regions within an image: it produces dense realness maps that indicate the level of realism across different areas, giving a detailed, pixel-level assessment of image quality. Experiments show that combining visual and textual information significantly improves performance compared with relying on either modality alone, highlighting the importance of multimodal learning for accurate realness assessment.
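The article does not describe how REALM actually fuses the two modalities. As a rough illustration of what "combining visual and textual information" can mean, here is a minimal late-fusion sketch, assuming an image embedding and a text embedding have already been extracted by some encoder: the two vectors are concatenated and passed through a linear layer with a sigmoid to yield a realness score in [0, 1]. The function names, embedding sizes and weights are all hypothetical and are not the authors' model.

```python
import math
from typing import List, Sequence


def fuse(image_emb: Sequence[float], text_emb: Sequence[float]) -> List[float]:
    """Late fusion by simple concatenation (hypothetical; REALM's fusion may differ)."""
    return list(image_emb) + list(text_emb)


def realness_score(fused: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Linear regressor followed by a sigmoid, mapping the fused vector to (0, 1)."""
    if len(fused) != len(weights):
        raise ValueError("weights must match the fused feature length")
    z = sum(f * w for f, w in zip(fused, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    # Toy 4-d image embedding and 3-d text embedding (made-up numbers).
    image_emb = [0.2, -0.1, 0.5, 0.3]
    # Imagine this encodes a description such as "distorted hands, warped text".
    text_emb = [0.7, 0.0, -0.4]
    fused = fuse(image_emb, text_emb)
    # Hypothetical weights; in practice they would be fitted to human realness ratings.
    weights = [0.5, 0.1, 0.3, -0.2, -0.8, 0.0, 0.4]
    print(len(fused), round(realness_score(fused, weights, bias=0.1), 3))
```

Concatenation is only the simplest fusion choice; attention-based or gated fusion are common alternatives, and the paper may use something quite different.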

Dense Realness Mapping of AI Images

Researchers have developed a new framework, REALM, to evaluate and pinpoint inconsistencies in images generated by artificial intelligence, addressing a pressing need both for assessing generative AI performance and for improving photorealistic image creation. Using state-of-the-art vision-language models, the team augmented existing datasets with detailed textual descriptions of visual inconsistencies, which serve as a proxy for human assessment of realism and allow a more nuanced, quantitative evaluation of how realistic an image is perceived to be. Experiments show that integrating these descriptions with image features significantly improves objective realness estimation, a process the authors term Cross-modal Objective Realness Estimation (CORE). Building on this, the team's dense realness mapping framework, DREAM, localizes unrealistic regions at the pixel level, producing interpretable results that show where an image falls short. The authors' analysis indicates that the framework can accurately identify and localize the areas where AI-generated images deviate from photorealism.
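The summary does not say how DREAM turns text into a pixel-level map, although the authors' later mention of image-text matching techniques hints at the general idea. Here is a minimal sketch, assuming a grid of patch embeddings and a single embedding of an inconsistency description that share one embedding space (as CLIP-style encoders provide): each patch's cosine similarity to the description is min-max rescaled to [0, 1] and inverted, so the patches that best match the described flaw receive the lowest realness. The function names and toy vectors are hypothetical, and a real system would also upsample the patch grid to full image resolution.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def realness_map(
    patches: List[List[Sequence[float]]], flaw_text_emb: Sequence[float]
) -> List[List[float]]:
    """Per-patch realness: 1 minus min-max-normalised similarity to the flaw text."""
    sims = [[cosine(p, flaw_text_emb) for p in row] for row in patches]
    flat = [s for row in sims for s in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0.0:
        # Every patch matches the description equally, so there is no
        # localisation signal; return a neutral flat map.
        return [[0.5 for _ in row] for row in sims]
    return [[1.0 - (s - lo) / span for s in row] for row in sims]


if __name__ == "__main__":
    # 2x2 grid of toy 3-d patch embeddings (made-up numbers).
    patches = [
        [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]],
        [[0.0, 1.0, 0.0], [0.0, 0.2, 0.9]],
    ]
    # Hypothetical embedding of a description such as "melted, fused fingers".
    flaw = [0.0, 0.0, 1.0]
    for row in realness_map(patches, flaw):
        print([round(v, 2) for v in row])
```

Min-max normalisation makes the map relative within one image; comparing maps across images would need a fixed calibration instead.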

Visual and Textual Realness Evaluation with REALM

The researchers present REALM, a framework designed to assess and pinpoint inconsistencies in images generated by artificial intelligence. By combining visual features with textual descriptions, the method evaluates the perceptual realness of AI-generated images better than approaches relying on a single modality. REALM also generates detailed maps highlighting unrealistic regions within an image, offering a degree of explainability for its assessments. The research indicates that visual and textual information provide complementary context, leading to more accurate realness evaluation. The authors acknowledge, however, that the system is currently limited by inaccuracies in the text descriptions produced by the vision-language model they employed, particularly for specific visual characteristics such as facial distortions. Future work will focus on fine-tuning open-source vision-language models with human-labeled data and on sharpening the realness maps through image-text matching techniques that account for relational context, with the aim of a more robust and accurate system for evaluating AI-generated images.

👉 More information

🗞 Image Realness Assessment and Localization with Multimodal Features

🧠 ArXiv: https://arxiv.org/abs/2509.13289