Scientists are tackling the growing problem of data safety and reliability within lakehouse architectures, the now-standard cloud platforms for analytics and artificial intelligence. Weiming Sheng from Columbia University, Jinlang Wang from the University of Wisconsin-Madison, and Manuel Barros from Carnegie Mellon University, alongside colleagues at Bauplan Labs including Aldrin Montana, Jacopo Tagliabue, and Luca Bigon, present Bauplan, a lakehouse that prioritises being ‘correct-by-design’. The research addresses the risks of concurrent data operations by untrusted actors: typed table contracts catch interface mismatches before they become runtime errors, data versioning inspired by Git makes changes reviewable and reproducible, and transactional pipeline runs keep partial results from leaking to downstream readers. In effect, Bauplan applies software engineering principles to data management, targeting atomicity and reproducibility in complex, multi-table pipelines.

Mitigating data pipeline vulnerabilities with typed contracts and versioning

Researchers have developed Bauplan, a novel lakehouse platform designed to enhance safety and correctness in data analytics and artificial intelligence workloads. This new system addresses critical vulnerabilities that arise when untrusted actors concurrently access and modify production data within traditional lakehouse architectures.

Specifically, Bauplan targets two problems: mismatches between upstream and downstream pipeline nodes that surface only at runtime, and partial effects that multi-table pipelines can expose when a run fails midway. Inspired by principles of software engineering, Bauplan aims to make most illegal data states unrepresentable by relying on familiar abstractions.

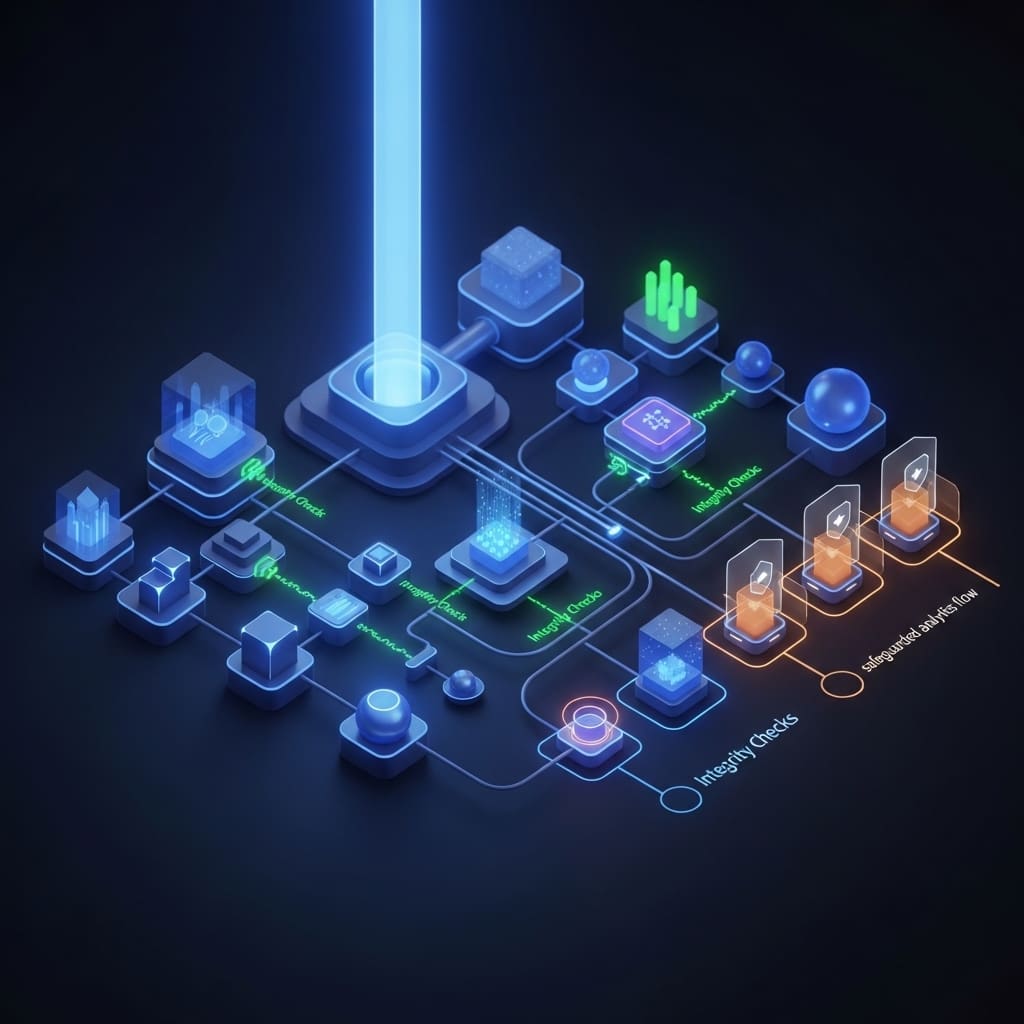

The core of Bauplan lies in three interconnected axes: typed table contracts, Git-like data versioning, and transactional runs. Typed table contracts enable checkable pipeline boundaries, ensuring that data interfaces align as expected between processing nodes. Git-like data versioning facilitates review and reproducibility, allowing for point-in-time debugging and collaborative workflows similar to pull requests in software development.

Crucially, transactional runs guarantee pipeline-level atomicity, meaning downstream systems will consistently observe either all outputs of a pipeline execution or none, preventing inconsistent data views. This integrated approach delivers pipelines that are statically checkable, safe to execute, easily reviewed, and straightforward to reproduce.

A lightweight formal transaction model has been developed and initial results demonstrate the feasibility of these concepts. Bauplan’s design is particularly relevant in the context of increasing adoption of coding agents, where correctness in the face of potentially untrusted actors is paramount. The system’s architecture, positioned at the intersection of programming languages, data management, and distributed systems, offers valuable lessons for a broad range of data practitioners.

Consider a typical data pipeline transforming raw data into refined assets for business intelligence or machine learning. Traditional platforms often struggle with schema changes, where alterations to column types or semantics can break downstream processes. Bauplan’s typed table contracts proactively prevent these interface mismatches.

Furthermore, the platform addresses challenges in collaborative development, where data skew between development and production environments can lead to unexpected failures. By integrating Git-like versioning, Bauplan ensures reproducibility and facilitates effective peer review. Finally, the transactional pipeline feature guarantees data consistency, preventing downstream readers from encountering partial or inconsistent results.

Schema enforcement and Git-based data pipeline versioning ensure data quality and reproducibility

A typed contract system forms the core of Bauplan’s approach to ensuring data integrity within a lakehouse environment. Each node within a directed acyclic graph (DAG) pipeline exposes a schema object that explicitly defines the columns and their expected data types flowing between transformations. This schema acts as an interface, enabling machine-checkable boundaries between pipeline stages and facilitating both schema and lineage inference.
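The idea of a machine-checkable boundary can be illustrated with a minimal sketch. The `Node` type, the `check_pipeline` function, and the column names below are hypothetical and are not Bauplan's API; the sketch only assumes a linear pipeline in which every node declares the columns it reads and the columns it exposes.

```python
from dataclasses import dataclass

# Hypothetical types for illustration only; not Bauplan's actual API.
Schema = dict[str, type]  # column name -> expected Python type


@dataclass(frozen=True)
class Node:
    name: str
    requires: Schema  # columns the node reads from its parent
    produces: Schema  # columns the node exposes downstream


def check_pipeline(nodes: list[Node], source: Schema) -> list[str]:
    """Walk a linear pipeline and collect contract violations without running it."""
    errors = []
    upstream_name, available = "source", source
    for node in nodes:
        for col, expected in node.requires.items():
            if col not in available:
                errors.append(
                    f"{node.name}: missing column '{col}' from {upstream_name}"
                )
            elif available[col] is not expected:
                errors.append(
                    f"{node.name}: column '{col}' is {available[col].__name__}, "
                    f"expected {expected.__name__}"
                )
        # The next node is checked against what this node declares it produces.
        upstream_name, available = node.name, node.produces
    return errors


raw = {"col1": int, "col2": str, "col3": float}
pipeline = [
    Node("node1", {"col1": int, "col2": str}, {"col1": int, "col2": str}),
    Node("node2", {"col1": int, "col2": str},
         {"col2": str, "col4": float, "col5": int}),
    Node("node3", {"col2": str, "col4": float}, {"col2": str}),
]

ok = check_pipeline(pipeline, raw)
# Simulate an upstream type change in the raw table.
drifted = check_pipeline(pipeline, {**raw, "col1": str})
```

In this toy version the output schemas are declared by hand. The check runs before any data is read, so a type change in the source table is reported as a planning error rather than a failure halfway through execution.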

In a three-node pipeline, for example, column two can pass unchanged from Node 1 to Node 3 while Node 2 introduces two new columns, col4 and col5, to the data stream.

The research also implements a Git-like data versioning system to make data pipelines easier to review and reproduce. Table snapshots are mapped to commits and branches, providing a detailed history of data changes and enabling collaborative development workflows similar to those found in software engineering.

This version control extends beyond code, capturing the state of the data itself at various points in the pipeline’s execution. A daily pipeline that fails one day and succeeds the next with no code change is a typical reproducibility problem; with versioned data, the failing run can be examined against the exact data state it originally read.

Bauplan also defines run semantics that publish multi-table effects atomically via branch-and-merge operations.

This transactional approach guarantees that downstream readers observe a consistent view of the data, preventing the exposure of partial or intermediate results during pipeline execution. The system operates across three stages: the user’s local code environment, plan preparation in the control plane, and execution in worker processes. Failures are surfaced as early as possible in this sequence to maintain a “correct-by-design” lakehouse. The control plane parses code into a plan and communicates metadata, while the worker process reads from and writes to storage such as S3, streaming logs and results back to the user.
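A minimal in-memory sketch can make the branch-and-merge run semantics concrete. The `Catalog` class and `transactional_run` function are hypothetical names, not Bauplan's API, and the toy version ignores concurrent writers and merge conflicts; it only shows that a run either publishes every table or none.

```python
class Catalog:
    """Hypothetical catalog: branch name -> {table name -> snapshot id}."""

    def __init__(self):
        self.branches = {"main": {}}

    def create_branch(self, name, from_branch):
        # A branch starts as a copy of the table pointers, not of the data.
        self.branches[name] = dict(self.branches[from_branch])

    def merge(self, source, into):
        # One reference swap publishes all tables of the run together.
        self.branches[into] = {**self.branches[into], **self.branches[source]}

    def delete_branch(self, name):
        del self.branches[name]


def transactional_run(catalog, steps, target="main"):
    """Run steps on a temporary branch; merge only if every step succeeds.

    steps is a list of (table_name, build) pairs, where build() returns a
    snapshot id or raises.
    """
    tmp = f"run-{target}-tmp"
    catalog.create_branch(tmp, target)
    try:
        for table, build in steps:
            catalog.branches[tmp][table] = build()
        catalog.merge(tmp, into=target)
        return True
    except Exception:
        # Nothing was merged, so readers of the target branch see no change.
        return False
    finally:
        catalog.delete_branch(tmp)


def failing_step():
    raise ValueError("quality check failed")


catalog = Catalog()
catalog.branches["main"] = {"raw": "s0"}

first = transactional_run(
    catalog, [("clean", lambda: "s1"), ("report", lambda: "s2")]
)
after_first = dict(catalog.branches["main"])

second = transactional_run(
    catalog, [("clean", lambda: "s3"), ("report", failing_step)]
)
after_second = dict(catalog.branches["main"])
```

In the second run the `clean` table was already rewritten on the temporary branch when `report` failed, yet `main` still points at the snapshots from the first run.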

Typed contracts, data versioning and transactional runs ensure data pipeline integrity and reliability

Lakehouses represent the prevailing cloud platform for analytics and artificial intelligence workloads, yet they introduce safety concerns when concurrently accessed by untrusted actors. Upstream-downstream mismatches manifest only during runtime, and multi-table pipelines are susceptible to leaking partial effects.

Bauplan, a code-first lakehouse, addresses these issues by making most illegal states unrepresentable through familiar abstractions. The system operates across three key axes: typed table contracts, Git-like data versioning, and transactional runs. A lightweight formal transaction model underpins the third axis, pipeline-level atomicity.

This guarantees that downstream systems observe either all outputs from a pipeline run or none, preventing inconsistent data views. Data contracts, implemented through type checks, prevent interface mismatches between pipeline nodes, addressing a significant source of errors. Git-for-data versioning facilitates point-in-time debugging and pull requests, enabling human-in-the-loop verification of data asset changes.
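Point-in-time debugging follows naturally once table states are stored as commits. The sketch below is a toy model with hypothetical `Commit` and `Repo` classes, not Bauplan's implementation: each commit records which snapshot every table pointed to, so a debugging branch can be opened at any earlier state while `main` moves on.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Commit:
    id: int
    parent: Optional["Commit"]
    tables: dict  # table name -> snapshot id; treated as immutable
    message: str


class Repo:
    """Hypothetical data repository: branch name -> head commit."""

    def __init__(self):
        self._next_id = 1
        self.branches = {"main": Commit(0, None, {}, "init")}

    def commit(self, branch, changes, message):
        head = self.branches[branch]
        new = Commit(self._next_id, head, {**head.tables, **changes}, message)
        self._next_id += 1
        self.branches[branch] = new
        return new

    def branch(self, name, at):
        # Creating a branch only records a pointer to an existing commit.
        self.branches[name] = at

    def log(self, branch):
        commit, history = self.branches[branch], []
        while commit is not None:
            history.append(commit)
            commit = commit.parent
        return history


repo = Repo()
monday = repo.commit("main", {"orders": "snap-mon"}, "monday load")
tuesday = repo.commit("main", {"orders": "snap-tue"}, "tuesday load")

# Reopen Monday's data state on a separate branch for debugging.
repo.branch("debug-monday", at=monday)
debug_tables = repo.branches["debug-monday"].tables
main_tables = repo.branches["main"].tables
messages = [c.message for c in repo.log("main")]
```

A pull-request style review would then compare the head of a working branch with `main` before merging, in the same way code changes are reviewed.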

Consider a sample pipeline transforming a raw table through SQL and Python functions. Schema failures, where column types change in the raw table, can cause downstream code to break if not properly handled. Traditional platforms often struggle with these issues, particularly when pipelines are stateful and data-dependent.

Bauplan’s design mitigates these risks by ensuring that changes to the raw table are reflected and validated before affecting downstream processes. Collaboration failures, where pipeline code functions in development but not in production due to data skew, are also addressed through the system’s versioning and transactional capabilities.

The research highlights that a substantial fraction of pipeline errors stem from schema changes at the boundaries between nodes. Bauplan’s approach aims to provide correctness guarantees at the system level: the system is physically distributed, but it is governed by logically atomic APIs designed for verifiability and correctness. This work lays the foundation for more robust and trustworthy data lakehouse architectures, particularly in environments increasingly reliant on automated agents and untrusted actors.

Mitigating data risk through typed contracts and versioned pipelines

Bauplan represents a novel lakehouse design prioritising safety and correctness in data analytics and artificial intelligence systems. It addresses vulnerabilities arising from concurrent, untrusted access to production data, specifically upstream-downstream inconsistencies and partial results leaking from multi-table pipelines.

The system employs a code-first approach, integrating typed table contracts, Git-like data versioning, and transactional runs to enforce data integrity and reproducibility. These features collectively aim to create a ‘correct-by-design’ lakehouse where invalid operations are prevented at the planning stage, inconsistent plans are not executed, and failed operations are not published.

Early results indicate that the approach works at industry scale, with millions of data branches already created and managed within the Bauplan framework. However, the developers acknowledge limitations in the current formal transaction model, particularly regarding the visibility of transactional branches and the complexities introduced by nested branching logic. Future work will focus on refining type inference and the Alloy model, as well as exploring isolation levels to further enhance the system’s robustness and correctness.

👉 More information

🗞 Building a Correct-by-Design Lakehouse. Data Contracts, Versioning, and Transactional Pipelines for Humans and Agents

🧠 ArXiv: https://arxiv.org/abs/2602.02335