Research shows that FullFlat network architectures significantly improve performance and scalability for large language models, including trillion-parameter systems. Co-optimising FLOPS, memory bandwidth and scale-out domains raises Model FLOPS Utilisation (MFU) and throughput, and the performance modelling tool used in the study is validated to within 10% of real-world measurements.

The escalating computational demands of large language models (LLMs) necessitate a fundamental reassessment of data centre infrastructure, moving beyond incremental improvements to holistic system co-design. Current architectures struggle to efficiently support models containing trillions of parameters, impacting both performance and economic viability. Researchers from Intel and the Georgia Institute of Technology address this challenge through a detailed analysis of the interplay between floating-point operations per second (FLOPS), high-bandwidth memory (HBM) capacity, network topology, and parallelism strategies. In their work, entitled ‘Scaling Intelligence: Designing Data Centers for Next-Gen Language Models’, Jesmin Jahan Tithi, Avishaii Abuhatzera, Hanjiang Wu and Fabrizio Petrini present a co-design framework and evaluate the potential of FullFlat network architectures, demonstrating their impact on scalability and throughput for both sparse and dense transformer-based LLMs. Their study incorporates a validated performance modelling tool, offering practical insights for the design of future AI data centres.

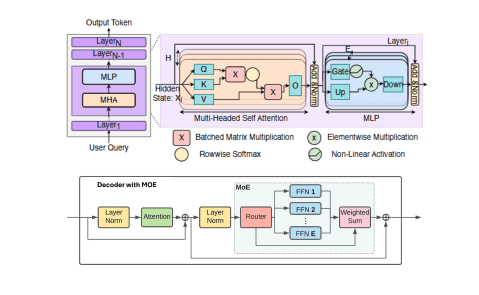

Training LLMs calls for new approaches to parallelisation and infrastructure design, because models keep growing in parameter count and complexity. The researchers study how hardware architecture, network topology and parallelisation strategy interact, with the aim of maximising training efficiency and scalability for models with trillions of parameters. They extend a performance modelling tool, validated to within 10% of real-world measurements, into a co-design framework and use it to explore a wide range of training configurations.
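To make the two headline metrics concrete, here is a back-of-envelope sketch of MFU and of a "within 10%" accuracy check. It is not the paper's tool: it assumes the common approximation of about 6 FLOPs per parameter per training token (for a mixture-of-experts model you would count only the activated parameters), and every number in the usage section is a hypothetical placeholder.

```python
"""Back-of-envelope Model FLOPS Utilisation (MFU) and model-error check.

Assumptions (not taken from the paper): training costs roughly 6 FLOPs per
parameter per token, and all numbers in __main__ are hypothetical.
"""


def training_flops_per_token(n_params: float) -> float:
    # ~2 FLOPs/param for the forward pass plus ~4 for the backward pass.
    return 6.0 * n_params


def mfu(n_params: float, tokens_per_second: float,
        n_devices: int, peak_flops_per_device: float) -> float:
    """Fraction of the cluster's peak FLOPS spent on useful model maths."""
    achieved = training_flops_per_token(n_params) * tokens_per_second
    peak = n_devices * peak_flops_per_device
    return achieved / peak


def relative_error(predicted: float, measured: float) -> float:
    """|predicted - measured| / measured; 0.10 means 'within 10%'."""
    return abs(predicted - measured) / measured


if __name__ == "__main__":
    # Hypothetical: 175e9 parameters on 1024 devices rated at 1e15 FLOPS each.
    util = mfu(175e9, tokens_per_second=4.0e5, n_devices=1024,
               peak_flops_per_device=1.0e15)
    print(f"MFU = {util:.1%}")
    # Hypothetical predicted vs measured MFU for the same run.
    print(f"model error = {relative_error(0.43, 0.40):.1%}")
```

A validated model is one whose `relative_error` against measured runs stays below the stated bound across many configurations, not just one.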

In the configurations examined, results show a preference for two-tier network hierarchies, specifically TwoTier-HBD configurations, over FullFlat architectures, which suggests that hierarchical communication patterns deliver superior efficiency for these large models. In a flat architecture all processing nodes communicate directly with one another, whereas a hierarchical structure introduces intermediate aggregation points that reduce communication bottlenecks. Data parallelism replicates the model across multiple devices and feeds each replica a different subset of the training data; the degree of data parallelism in the best configurations grows consistently with model size, spreading the workload across the available resources and raising overall throughput.
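The data-parallel pattern described above can be sketched in a few lines. This is a toy, single-process illustration under stated assumptions: a hypothetical one-parameter model `y = w * x` with mean-squared-error loss, and a plain average standing in for the all-reduce that real frameworks run across devices.

```python
"""Toy data parallelism: replicate a model, shard the batch, average gradients.

Hypothetical one-parameter model y = w * x with mean-squared-error loss;
the all-reduce is simulated by a plain average inside one process.
"""
from typing import List, Tuple

Batch = List[Tuple[float, float]]  # (x, y) pairs


def grad_mse(w: float, batch: Batch) -> float:
    # d/dw of mean((w*x - y)^2) = mean(2 * x * (w*x - y))
    return sum(2.0 * x * (w * x - y) for x, y in batch) / len(batch)


def shard(batch: Batch, dp_degree: int) -> List[Batch]:
    """Split the global batch into dp_degree equal, contiguous shards."""
    if len(batch) % dp_degree:
        raise ValueError("global batch must divide evenly across replicas")
    size = len(batch) // dp_degree
    return [batch[i * size:(i + 1) * size] for i in range(dp_degree)]


def data_parallel_grad(w: float, batch: Batch, dp_degree: int) -> float:
    # Every replica holds the same w and computes a gradient on its own shard.
    local = [grad_mse(w, s) for s in shard(batch, dp_degree)]
    # Simulated all-reduce (mean) so every replica applies the same update.
    return sum(local) / dp_degree


if __name__ == "__main__":
    data = [(float(x), 3.0 * x) for x in range(1, 9)]  # true w = 3
    w = 0.0
    for _ in range(50):
        w -= 0.01 * data_parallel_grad(w, data, dp_degree=4)
    print(round(w, 3))
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, which is why adding replicas raises throughput without changing the optimisation maths; the cost is the gradient all-reduce on every step.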

Overlapping communication with computation consistently improves performance: configurations that enable overlap for both tensor-parallel and data-parallel communication minimise idle time and make better use of the hardware. Tensor parallelism splits individual tensors (the multi-dimensional arrays at the heart of machine learning) across multiple devices, so that larger models fit within the available memory; combining it with data parallelism further improves scalability.
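A minimal sketch of both ideas follows, assuming a column-wise split of a single weight matrix (one common form of tensor parallelism, not necessarily the exact scheme in the paper) and an idealised timing model in which perfect overlap hides the shorter of the compute and communication phases. Python lists stand in for device tensors; `step_time` is a hypothetical helper, not the paper's performance model.

```python
"""Toy tensor parallelism (column split) plus an idealised overlap model.

Python lists stand in for device tensors; step_time is a hypothetical
simplification, not the paper's performance model.
"""
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def split_columns(w: Matrix, tp: int) -> List[Matrix]:
    """Give each of tp 'devices' a contiguous block of W's columns."""
    cols = len(w[0])
    if cols % tp:
        raise ValueError("column count must divide evenly by tp")
    step = cols // tp
    return [[row[d * step:(d + 1) * step] for row in w] for d in range(tp)]


def tensor_parallel_matmul(x: Matrix, w: Matrix, tp: int) -> Matrix:
    # Each device multiplies the full input by its own weight shard ...
    partials = [matmul(x, shard) for shard in split_columns(w, tp)]
    # ... and an all-gather concatenates the output columns row by row.
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


def step_time(compute_s: float, comm_s: float, overlap: bool) -> float:
    """With perfect overlap the shorter phase is hidden behind the longer."""
    return max(compute_s, comm_s) if overlap else compute_s + comm_s


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0]]
    w = [[1.0, 0.0, 2.0, 1.0], [0.0, 1.0, 1.0, 3.0]]
    print(tensor_parallel_matmul(x, w, tp=2) == matmul(x, w))
    print(step_time(3.0, 1.0, overlap=False), step_time(3.0, 1.0, overlap=True))
```

Each device stores only `1/tp` of the weights, which is what lets larger models fit; the all-gather is the communication that overlap tries to hide behind the next computation.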

For the largest models, the researchers use memory optimisation techniques, namely weight offloading, activation offloading and optimiser offloading, to work within limited device memory. Weight offloading keeps model parameters on slower but larger-capacity storage, activation offloading does the same for intermediate results, and optimiser offloading does the same for optimiser state (for example, Adam's moment estimates). Pipeline parallelism, which divides the model into stages so that different stages process different micro-batches concurrently, further increases throughput, particularly as model size grows.
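A rough accounting sketch shows why offloading matters and what pipelining costs. It rests on assumptions that are not from the paper: mixed-precision Adam at 2 bytes for weights, 2 for gradients and 12 for fp32 optimiser state per parameter; parameters split evenly over `tp * pp` devices; a caller-supplied activation size; and a GPipe-style schedule, whose idle ("bubble") fraction is (p − 1)/(m + p − 1) for p stages and m micro-batches. `hbm_bytes_per_device` is a hypothetical helper.

```python
"""Rough per-device HBM footprint with offloading, and the pipeline bubble.

Assumptions (hypothetical, not from the paper): mixed-precision Adam with
2-byte weights, 2-byte gradients and 12 bytes of fp32 optimiser state per
parameter; parameters split evenly over tp * pp devices; a GPipe schedule.
Ignores the working buffers needed to stream offloaded data back in.
"""

BYTES_WEIGHTS, BYTES_GRADS, BYTES_OPTIM = 2, 2, 12


def hbm_bytes_per_device(n_params: float, tp: int, pp: int,
                         activation_bytes: float,
                         offload_weights: bool = False,
                         offload_optimiser: bool = False,
                         offload_activations: bool = False) -> float:
    params_here = n_params / (tp * pp)
    total = params_here * BYTES_GRADS  # gradients kept on-device in this sketch
    if not offload_weights:
        total += params_here * BYTES_WEIGHTS
    if not offload_optimiser:
        total += params_here * BYTES_OPTIM
    if not offload_activations:
        total += activation_bytes
    return total


def pipeline_bubble_fraction(stages: int, micro_batches: int) -> float:
    """Idle fraction of a GPipe schedule: (p - 1) / (m + p - 1)."""
    return (stages - 1) / (micro_batches + stages - 1)


if __name__ == "__main__":
    gib = 2 ** 30
    # Hypothetical 1e12-parameter model on tp=8, pp=16 with 20 GiB of activations.
    for w_off, o_off in [(False, False), (False, True), (True, True)]:
        b = hbm_bytes_per_device(1e12, tp=8, pp=16, activation_bytes=20 * gib,
                                 offload_weights=w_off, offload_optimiser=o_off)
        print(f"offload weights={w_off}, optimiser={o_off}: {b / gib:.0f} GiB")
    print(f"bubble with 16 stages, 64 micro-batches: "
          f"{pipeline_bubble_fraction(16, 64):.1%}")
```

The optimiser state dominates under these assumptions, so optimiser offloading frees the most HBM; the bubble term shows why deeper pipelines need more micro-batches to stay efficient.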

Notably, the optimal configuration is not fixed: it depends on the model size and the cluster configuration, so infrastructure design must be flexible. For example, the 29-trillion-parameter model uses a higher degree of data parallelism and a larger number of experts than the 1.8-trillion-parameter model. According to the researchers, the modelling tool predicts these optimal configurations accurately, offering a practical roadmap for designing AI data centres that can support trillion-parameter models, reducing the complexity of optimisation and improving the efficiency of LLM training.
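Why the best layout shifts with model and cluster size can be illustrated with a toy search over tensor (`tp`), pipeline (`pp`) and data (`dp`) parallel degrees whose product equals the GPU count. Everything here is a hypothetical stand-in for a real performance model: the cost terms, the 10x slowdown when a TP group exceeds the assumed high-bandwidth domain size `hbd_size`, the gradient-traffic constant and the 16 bytes/parameter memory rule are illustrative assumptions only.

```python
"""Toy search over (tp, pp, dp) layouts for a fixed GPU count.

All cost terms and constants are hypothetical placeholders chosen to show
the shape of the trade-offs, not values from the paper's modelling tool.
"""
from itertools import product
from typing import List, Optional, Tuple


def divisors(n: int) -> List[int]:
    return [d for d in range(1, n + 1) if n % d == 0]


def step_cost(n_params: float, tp: int, pp: int, dp: int,
              global_batch: int, hbd_size: int) -> float:
    """Relative step time (arbitrary units) under a toy cost model."""
    n_gpus = tp * pp * dp
    micro_batches = global_batch // dp
    compute = n_params / n_gpus                      # ideal even split
    bubble = compute * (pp - 1) / micro_batches      # GPipe idle overhead
    tp_bw = 1.0 if tp <= hbd_size else 0.1           # fast vs slow tier
    tp_comm = 0.1 * compute * (tp - 1) / tp / tp_bw  # toy activation exchange
    # Toy ring all-reduce of this device's gradient shard.
    dp_comm = 2 * (dp - 1) / dp * n_params / (tp * pp) * 1e-3
    return compute + bubble + tp_comm + dp_comm


def best_layout(n_params: float, n_gpus: int, hbm_bytes: float,
                global_batch: int, hbd_size: int) -> Optional[Tuple[int, int, int]]:
    best, best_cost = None, float("inf")
    for tp, pp in product(divisors(n_gpus), repeat=2):
        if n_gpus % (tp * pp):
            continue
        dp = n_gpus // (tp * pp)
        if global_batch % dp or global_batch // dp < pp:
            continue  # need at least one micro-batch per pipeline stage
        if 16 * n_params / (tp * pp) > hbm_bytes:
            continue  # weights + gradients + optimiser state must fit
        cost = step_cost(n_params, tp, pp, dp, global_batch, hbd_size)
        if cost < best_cost:
            best, best_cost = (tp, pp, dp), cost
    return best


if __name__ == "__main__":
    for n_params in (1e9, 1e11):  # hypothetical model sizes
        print(n_params, best_layout(n_params, n_gpus=64, hbm_bytes=80e9,
                                    global_batch=256, hbd_size=8))
```

Because the memory constraint forces more model parallelism as parameters grow, the feasible set, and therefore the winner, changes with model size; a real tool replaces these toy terms with calibrated compute, memory and network models.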

The research sets out a systematic methodology for optimising the parallel training of LLMs on large clusters, identifying the best configuration and adjusting the parallelisation strategy according to both model size and the number of nodes. The search consistently favours a two-tier hierarchical network, specifically the TwoTier-HBD8/64 configuration, indicating that it scales LLM training effectively. As model size grows from GPT-3 175B to GPT-4 1.8T and then to GPT-4 29T, the tool scales both tensor parallelism (TP) and data parallelism (DP) to distribute the computational workload and raise overall throughput.
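One way to picture a two-tier layout is to map global ranks to TP and DP groups so that bandwidth-hungry TP groups stay inside a single high-bandwidth domain. This sketch assumes that "HBD" denotes a high-bandwidth (scale-up) domain of `hbd_size` consecutive GPUs, which is our reading of names like TwoTier-HBD8/64 rather than a definition from the source; pipeline parallelism is omitted, and `build_groups` and `crosses_hbd` are hypothetical helpers.

```python
"""Map global ranks to tensor-parallel (TP) and data-parallel (DP) groups.

Assumption: 'HBD' is a high-bandwidth (scale-up) domain of hbd_size
consecutive GPUs. TP groups stay inside one HBD so their frequent
collectives use the fast tier; DP groups span HBDs over the scale-out tier.
Pipeline parallelism is omitted for brevity.
"""
from typing import List, Tuple


def build_groups(world_size: int, tp: int,
                 hbd_size: int) -> Tuple[List[List[int]], List[List[int]]]:
    if world_size % hbd_size or hbd_size % tp:
        raise ValueError("need hbd_size | world_size and tp | hbd_size")
    dp = world_size // tp
    # Consecutive ranks form a TP group, so no group crosses an HBD boundary.
    tp_groups = [list(range(g * tp, (g + 1) * tp)) for g in range(dp)]
    # Ranks at the same position inside their TP group form a DP group.
    dp_groups = [list(range(r, world_size, tp)) for r in range(tp)]
    return tp_groups, dp_groups


def crosses_hbd(group: List[int], hbd_size: int) -> bool:
    """True if the group's ranks live in more than one HBD."""
    return len({rank // hbd_size for rank in group}) > 1


if __name__ == "__main__":
    tp_groups, dp_groups = build_groups(world_size=128, tp=8, hbd_size=64)
    print(len(tp_groups), "TP groups, any crossing an HBD:",
          any(crosses_hbd(g, 64) for g in tp_groups))
    print(len(dp_groups), "DP groups of size", len(dp_groups[0]))
```

Under this layout, a larger HBD allows a larger TP degree before TP traffic has to leave the fast tier, while DP traffic (one gradient all-reduce per step) tolerates the slower scale-out links.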

👉 More information

🗞 Scaling Intelligence: Designing Data Centers for Next-Gen Language Models

🧠 DOI: https://doi.org/10.48550/arXiv.2506.15006