On April 21, 2025, researchers Paresh Chaudhary, Yancheng Liang, Daphne Chen, Simon S. Du, and Natasha Jaques published "Improving Human-AI Coordination through Adversarial Training and Generative Models", introducing GOAT (Generative Online Adversarial Training), a method for improving AI’s ability to cooperate with diverse human behaviors in applications such as robotics and autonomous systems.

Cooperating with diverse humans requires an AI agent to handle behaviors it has never seen. Adversarial training is a natural way to surface such behaviors, but in cooperative settings it is hard to apply because adversarial policies often learn to sabotage the task instead of exposing real coordination failures. GOAT addresses this by combining a pre-trained generative model, which simulates valid cooperative agents, with adversarial training that maximizes regret. The framework dynamically searches for coordination strategies on which the Cooperator underperforms. Because only the embeddings fed to the generative model are updated, the Cooperator is exposed to diverse scenarios without the adversary being able to exploit it through sabotage. Evaluated with real human partners, GOAT achieves state-of-the-art performance on Overcooked, demonstrating effective generalization to diverse human behaviors.

In practice, GOAT (Generative Online Adversarial Training) uses the generative model to create diverse training scenarios, so the AI agent can practice with many different partners. This improves its ability to work effectively with humans or other agents it has not seen before.

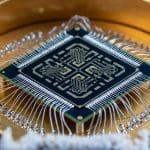

GOAT uses a Variational Autoencoder (VAE) to learn latent representations of potential partners. The VAE is trained on joint trajectories, that is, sequences of actions taken by two players in cooperative tasks. Sampling from the resulting latent space yields new, unseen partner strategies. The aim is to expose the AI agent to a wide range of scenarios and so improve its generalization and adaptability.
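To make the sampling step concrete, here is a minimal sketch of drawing partner embeddings from a standard-normal prior and decoding each one into an action distribution. It is an illustration only, not the paper's model: the decoder is a random linear map standing in for a trained VAE decoder, and every name and dimension (`LATENT_DIM`, `OBS_DIM`, `N_ACTIONS`, `make_toy_decoder`, `partner_action_probs`, `sample_partners`) is hypothetical.

```python
import numpy as np

LATENT_DIM = 8   # hypothetical size of the partner embedding
OBS_DIM = 4      # hypothetical observation size
N_ACTIONS = 6    # assumed size of the discrete action set

def make_toy_decoder(rng):
    """Random weights standing in for a trained VAE decoder (assumption)."""
    return {
        "w_obs": rng.normal(size=(OBS_DIM, N_ACTIONS)),
        "w_z": rng.normal(size=(LATENT_DIM, N_ACTIONS)),
    }

def partner_action_probs(decoder, obs, z):
    """Decode a partner policy: action probabilities given an observation and latent z."""
    logits = obs @ decoder["w_obs"] + z @ decoder["w_z"]
    logits = logits - logits.max()  # subtract the max for numerical stability
    probs = np.exp(logits)
    return probs / probs.sum()

def sample_partners(rng, n):
    """Sample n partner embeddings from a standard-normal prior."""
    return rng.normal(size=(n, LATENT_DIM))

rng = np.random.default_rng(0)
decoder = make_toy_decoder(rng)
obs = rng.normal(size=OBS_DIM)
for z in sample_partners(rng, 3):
    # Each latent vector yields a different action distribution for the same observation.
    print(np.round(partner_action_probs(decoder, obs, z), 3))
```

The point of the sketch is that the partner's behavior is fully determined by the latent vector `z`, so choosing which partners to train against reduces to choosing points in the latent space.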

The Cooperator agent is trained with Proximal Policy Optimization (PPO), a reinforcement learning algorithm. Reward shaping, which adjusts rewards to promote certain behaviors, is used early in training to encourage exploration. The adversarial GOAT policy is trained with REINFORCE under constraints on the latent space, so that the strategies it generates remain meaningful and effective.
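The adversarial side can be sketched as a REINFORCE update on the mean of a Gaussian over partner embeddings, with the regret as the reward signal. This is a toy under stated assumptions, not the paper's implementation: the regret function is made up (a partner's self-play return minus its return with a fixed Cooperator), the Cooperator is held fixed rather than trained alongside, and the latent constraint is assumed to be a simple norm bound enforced by projection. All names (`toy_regret`, `project_to_ball`, `train_adversary`, `WEAK_DIR`, `MAX_NORM`, `SIGMA`) are hypothetical.

```python
import numpy as np

LATENT_DIM = 4
MAX_NORM = 2.0   # assumed constraint: embeddings must stay inside a ball
SIGMA = 0.5      # fixed exploration noise of the adversary's Gaussian

WEAK_DIR = np.zeros(LATENT_DIM)
WEAK_DIR[0] = 1.0  # toy direction along which the Cooperator coordinates poorly

def project_to_ball(z, max_norm=MAX_NORM):
    """Rescale any vector whose norm exceeds max_norm back onto the ball."""
    norm = np.linalg.norm(z, axis=-1, keepdims=True)
    scale = np.minimum(1.0, max_norm / np.maximum(norm, 1e-12))
    return z * scale

def toy_regret(z):
    """Toy regret: partner's self-play return minus its return with the Cooperator."""
    self_play = 10.0
    cross_play = 10.0 - 2.0 * np.maximum(0.0, z @ WEAK_DIR)
    return self_play - cross_play

def train_adversary(steps=200, batch=64, lr=0.05, seed=0):
    """REINFORCE on the Gaussian mean; only the embedding distribution is updated."""
    rng = np.random.default_rng(seed)
    mu = np.zeros(LATENT_DIM)
    for _ in range(steps):
        z = mu + SIGMA * rng.normal(size=(batch, LATENT_DIM))
        regret = toy_regret(project_to_ball(z))   # reward is evaluated at a valid embedding
        advantage = regret - regret.mean()        # mean baseline reduces variance
        score = (z - mu) / SIGMA**2               # gradient of log N(z; mu, SIGMA^2 I) w.r.t. mu
        grad = (advantage[:, None] * score).mean(axis=0)
        mu = project_to_ball(mu + lr * grad)      # gradient ascent on regret, then re-constrain
    return mu

mu = train_adversary()
print("adversary mean:", np.round(mu, 2))
print("regret at mean:", float(toy_regret(mu)))
```

In this toy, the adversary's mean drifts toward the direction where the Cooperator is weak and stops at the boundary of the allowed region. The projection step plays the role of the latent-space constraint: it keeps the search inside the region the generative model can decode into valid partners.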

The method depends on careful hyperparameter choices, covering network architectures, learning rates, and other training parameters. The computational cost is substantial: training takes around 3600 GPU hours. This reflects the complexity of the task, though it remains feasible on modern computing clusters.

GOAT is a significant step toward more adaptable human-AI collaboration, though there is still room to improve its efficiency and scalability. By using generative modeling to expose the AI to diverse training partners, it addresses a key obstacle to adversarial training in cooperative environments and points toward more practical applications in real-world settings.


👉 More information

🗞 Improving Human-AI Coordination through Adversarial Training and Generative Models

🧠 DOI: https://doi.org/10.48550/arXiv.2504.15457