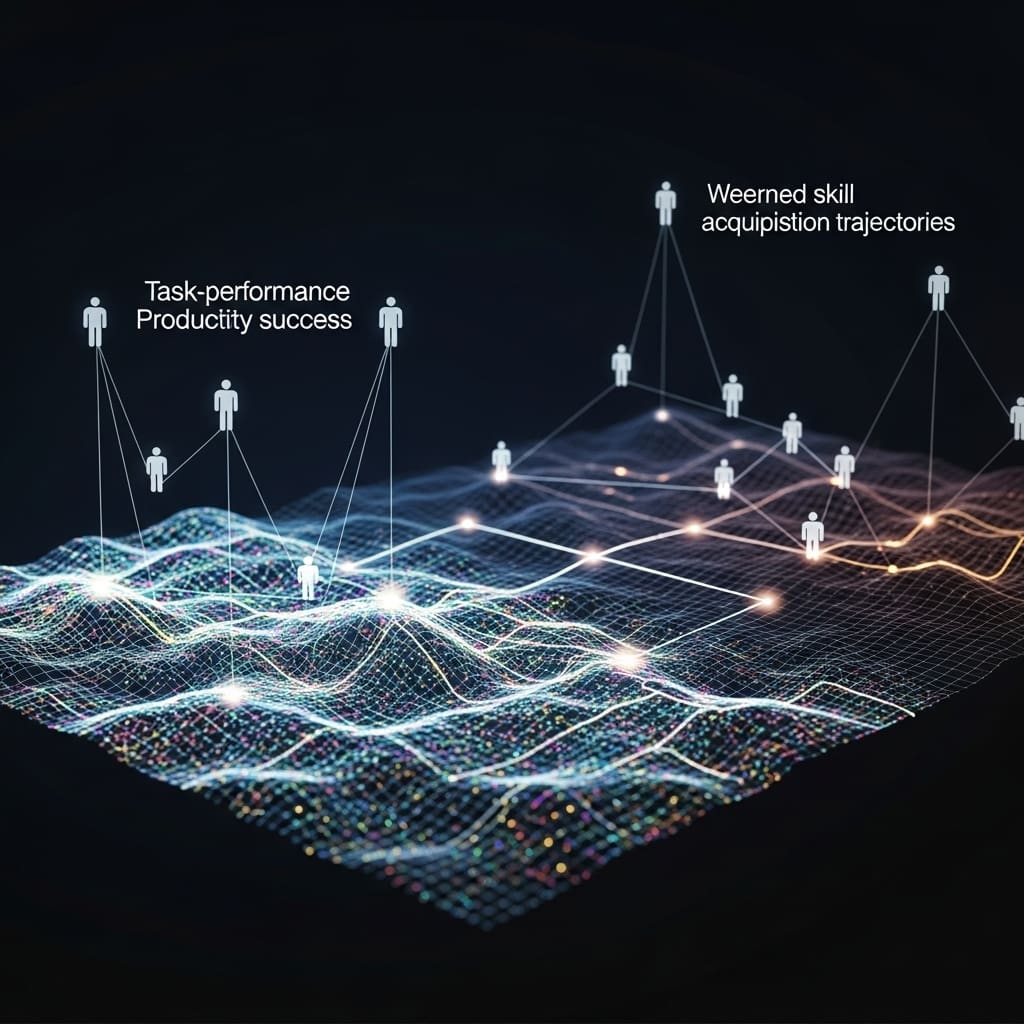

Researchers are beginning to understand how artificial intelligence tools affect the development of crucial workplace skills. Judy Hanwen Shen and Alex Tamkin, both of Anthropic, investigated whether relying on AI assistance hinders skill acquisition in novice workers. Their randomised experiments, in which developers learned a new programming library, revealed that heavy dependence on AI impaired conceptual understanding, code reading and debugging without delivering substantial efficiency benefits. The work is significant because it shows that AI-enhanced productivity does not necessarily translate into competence, and it highlights the need to integrate such tools carefully to safeguard skill formation, especially in domains where human oversight is critical.

Experiments show a significant 17% decrease, equivalent to two grade points, in library-specific skills among participants using AI assistance, as measured by the study’s skills evaluation. The team also identified six distinct patterns of interaction with the AI and found that three of them preserve learning outcomes by keeping developers cognitively engaged: in these patterns, developers proactively seek explanations or ask conceptual questions rather than simply accepting AI-generated code.

Further analysis showed that AI offered no significant efficiency gain because participants spent considerable time interacting with the assistant: some asked up to 15 questions or spent over 30% of their time composing queries. The researchers attribute the skill development seen in the control group to participants solving problems and resolving errors on their own. By categorising AI interaction behaviours, they found that the patterns demanding more cognitive effort and independent thought best preserved learning. By contrast, participants following an ‘iterative AI debugging’ pattern took 31 minutes and scored 24%, underlining the importance of cognitive engagement. The study opens avenues for designing AI workflows that prioritise skill formation, especially in safety-critical domains where human oversight and understanding remain paramount.

Trio proficiency with and without AI assistance

The experiments ran on an online interview platform. Participants were split into two groups that completed the same coding challenges, designed to test their understanding and application of asynchronous programming concepts in Trio: a control group worked independently, while the treatment group was prompted to use an AI assistant during the tasks. The researchers chose the Python library Trio because, judging by the volume of Stack Overflow questions, it is less familiar than asyncio, so participants were likely to have limited prior knowledge of it.

To quantify skill development, the team measured conceptual understanding, code reading and debugging proficiency with assessments tailored to the Trio library, including multiple-choice questions, code analysis exercises and debugging challenges. Data collection covered quantitative metrics, such as task completion time and assessment scores, as well as qualitative analysis of how participants interacted with the AI assistant. From this, the researchers identified and categorised six distinct interaction patterns, paying particular attention to the three that kept participants cognitively engaged and preserved learning outcomes despite AI assistance.

The behavioural analysis examined how participants formulated queries, interpreted AI-generated code and integrated the assistance into their problem-solving, tracking how far they engaged with the underlying concepts rather than passively accepting AI-provided solutions. This allowed the study to distinguish assistance that supported learning from assistance that hindered it. Combining performance metrics with behavioural analysis, the researchers found that fully delegating coding tasks to the AI, while potentially improving short-term productivity, compromised learning of the Trio library.

AI hinders novice developer skill acquisition

Contrary to expectations of increased efficiency, the experiments found no statistically significant reduction in task completion time with AI assistance. Screen recordings showed that some participants invested considerable time interacting with the AI, asking up to 15 questions or spending over 30% of the task time composing queries.

Completion times and quiz scores varied widely across interaction patterns. Participants in the ‘Generation-Then-Comprehension’ pattern averaged 24 minutes with an 86% quiz score, compared with 22 minutes and 65% for ‘AI Delegation’. ‘Iterative AI Debugging’ took 31 minutes and yielded a 24% quiz score, ‘Hybrid Code-Explanation’ took 22 minutes with a 35% score, and ‘Progressive AI Reliance’ averaged 19.5 minutes with a 39% score.

Of the six behaviours the researchers identified, three, involving cognitive engagement and independent thinking such as asking for explanations and focusing on conceptual questions, preserved learning outcomes even with AI assistance, and participants using these patterns achieved higher scores on the library competency evaluation. Participants in the control group, meanwhile, developed their skills by independently encountering and resolving errors.

Participants who delegated coding tasks to the AI gained little in productivity, and did so at the expense of genuinely learning the library’s functionality. Three of the six interaction patterns, however, kept developers cognitively engaged and preserved learning outcomes despite the use of assistance. The authors acknowledge that the controlled experimental setting and the specific programming library used may limit how far the results generalise.

👉 More information

🗞 How AI Impacts Skill Formation

🧠 ArXiv: https://arxiv.org/abs/2601.20245