Autonomous agent systems, which complete tasks with minimal human intervention, represent a major frontier in artificial intelligence, but understanding why they fail remains a significant challenge. Ruofan Lu and Yichen Li from The Chinese University of Hong Kong, together with Yintong Huo from Singapore Management University, investigated the causes of failure in these increasingly complex systems. The team developed a benchmark of 34 tasks to evaluate popular autonomous agent frameworks and found an average task completion rate of only around 50%. The research goes beyond measuring success rates: it offers a taxonomy of failure causes spanning planning errors, execution issues, and incorrect responses, and it proposes practical improvements for building more reliable autonomous agents.

Cibench, a Benchmark for Collaborative Agent Evaluation

This research introduces Cibench, a comprehensive benchmark designed to rigorously evaluate Large Language Model (LLM)-based agents, with a particular focus on their ability to collaborate and perform complex tasks involving tool use and real-world data interaction. The authors identified a gap in existing benchmarks, which often lack the complexity and realism needed to truly assess agent capabilities. Cibench comprises 10 diverse tasks spanning data analysis, web interaction, and more, challenging agents to plan effectively, use tools proficiently, and handle errors gracefully. The authors evaluated several state-of-the-art LLM-based agents, including GPT-4o and MetaGPT, analyzing performance across different tasks and identifying strengths and weaknesses.

The results reveal that even the most advanced agents struggle with complex tasks requiring robust planning, error handling, and effective tool use, indicating significant room for improvement in agent capabilities. The paper provides a detailed analysis of common errors, highlighting areas where further research is needed, such as better planning and more robust error recovery. Cibench is released as an open-source resource, allowing other researchers to evaluate their own agents and contribute to the development of more capable AI systems. Key takeaways include that current LLM agents are not yet fully capable of handling complex, real-world tasks, and that agent collaboration is a critical area for research.

Detailed Failure Analysis of Language Agent Performance

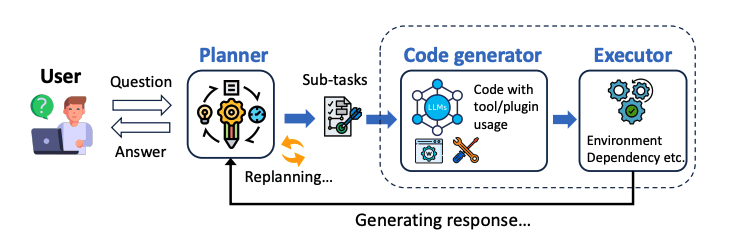

To evaluate autonomous agents powered by large language models, the researchers developed a benchmark of 34 diverse, programmable tasks. Rather than measuring success rates alone, the approach analyzes in detail how these agents interact and where they encounter difficulties. The team established a three-tiered taxonomy of the failures observed, aligning each failure with a phase of task completion (planning, execution, or response generation) so that improvements to agent design can be targeted. The research also examines self-diagnosis and refinement: how agents identify errors, learn from them, and improve on subsequent attempts, for example by using execution feedback to refine code or by using self-editing capabilities to correct faults. This is a step towards agents that can adapt and improve independently over time.
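The idea of refining code from execution feedback can be illustrated with a small loop. This is a minimal sketch under stated assumptions, not the method used in the paper or in any of the evaluated frameworks: `run_candidate`, `refine_until_passing`, and `toy_repair` are hypothetical names, and `toy_repair` stands in for what would normally be a call to a language model.

```python
import traceback
from typing import Callable, List, Optional, Tuple


def run_candidate(source: str) -> Optional[str]:
    """Execute candidate code; return None on success, else the traceback text."""
    try:
        exec(compile(source, "<candidate>", "exec"), {})
        return None
    except Exception:
        return traceback.format_exc()


def refine_until_passing(
    source: str,
    repair: Callable[[str, str], str],
    max_rounds: int = 3,
) -> Tuple[Optional[str], List[str]]:
    """Run the code, feed each error back to `repair`, and retry.

    Returns (working_source, error_history); working_source is None if the
    code still fails after `max_rounds` repairs.
    """
    history: List[str] = []
    for attempt in range(max_rounds + 1):
        error = run_candidate(source)
        if error is None:
            return source, history
        history.append(error)
        if attempt < max_rounds:
            source = repair(source, error)
    return None, history


def toy_repair(source: str, error: str) -> str:
    """Hypothetical stand-in for an LLM call: patch a division by zero."""
    if "ZeroDivisionError" in error:
        return source.replace("/ 0", "/ 1")
    return source


fixed, errors = refine_until_passing("x = 1 / 0", toy_repair)
```

In this toy run the first execution fails, the error text is passed to the repair function, and the second execution succeeds. A real agent would replace `toy_repair` with a model prompt that includes both the source and the traceback.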

Detailed Agent Failure Taxonomy Revealed

Recent advances in artificial intelligence have focused on creating autonomous agent systems capable of tackling complex tasks, but evaluating these systems has largely relied on simply measuring success rates. New research addresses this limitation by introducing a benchmark consisting of 34 diverse coding tasks designed to rigorously assess how these agents function internally. The study evaluated three popular open-source agent frameworks, each powered by advanced language models, and found an overall task completion rate of approximately 50%. This research goes beyond simply counting successes and failures by identifying the specific reasons why agents struggle.

The researchers developed a three-tiered taxonomy of failure causes, categorizing issues that arise during task planning, execution, and response generation. The most frequent failures stem from inadequate task decomposition and difficulties with self-refinement. Agent performance also varies significantly with the nature of the task: agents excel at structured tasks such as data analysis but struggle with reasoning-intensive tasks such as web crawling. The frameworks show distinct specializations, with TaskWeaver and AutoGen demonstrating particular strength in structured tasks and MetaGPT showing promise in web crawling. This suggests that different architectures suit different types of problems, which opens avenues for developing specialized agent systems. More powerful language models generally improve performance, but the study notes that they can sometimes lead to overthinking.
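One way to picture a phase-based taxonomy is as a small data structure for labeling failed runs. The sketch below is illustrative only: the three phases come from the article, but the `FailureRecord` type, the task identifiers, the cause labels other than task decomposition, and the example records are all made up for demonstration and are not results from the paper.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Iterable


class Phase(Enum):
    """The three phases of task completion named in the taxonomy."""
    PLANNING = "planning"
    EXECUTION = "execution"
    RESPONSE = "response generation"


@dataclass(frozen=True)
class FailureRecord:
    task_id: str   # hypothetical identifier for a benchmark task
    phase: Phase   # phase in which the failure was observed
    cause: str     # free-text label for the failure cause


def tally_by_phase(records: Iterable[FailureRecord]) -> Dict[Phase, int]:
    """Count failures per phase, reporting zero for phases with none."""
    counts = Counter(record.phase for record in records)
    return {phase: counts.get(phase, 0) for phase in Phase}


# Made-up example records, for illustration only.
example_records = [
    FailureRecord("task-01", Phase.PLANNING, "inadequate task decomposition"),
    FailureRecord("task-02", Phase.PLANNING, "inadequate task decomposition"),
    FailureRecord("task-03", Phase.EXECUTION, "tool call raised an error"),
]
phase_counts = tally_by_phase(example_records)
```

Keeping the phase as an enum and the cause as free text lets the top tier stay fixed while lower-tier cause labels evolve as more failures are annotated.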

Agent Errors, Benchmarks and Improvement Strategies

This research presents a comprehensive evaluation of autonomous agent systems powered by large language models and identifies key areas for improvement. The benchmark of 34 tasks reveals a task completion rate of approximately 50% across three popular agent frameworks. Through detailed failure analysis, the researchers developed a three-tier taxonomy that categorizes errors into planning issues, execution problems, and incorrect response generation, showing where these systems struggle. Addressing these failure modes is central to making autonomous agents more reliable. To this end, the authors propose practical design strategies, including a meta-controller that diagnoses and corrects errors and an “early stop” mechanism that conserves resources when failures repeat. The researchers intend to expand the benchmark further and implement the proposed strategies.
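The “early stop” idea can be sketched as a small monitor that halts a run once the same failure keeps recurring. The article does not describe how the authors would implement it, so everything here is an assumption: the `EarlyStop` class, the `patience` threshold, and the rule of comparing consecutive error messages for exact equality are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EarlyStop:
    """Signal a stop after `patience` consecutive identical errors."""
    patience: int = 3
    _last: Optional[str] = None
    _streak: int = 0

    def observe(self, error: Optional[str]) -> bool:
        """Record one step's outcome; return True when the run should stop.

        Pass None for a successful step, which resets the streak.
        """
        if error is None:
            self._last, self._streak = None, 0
            return False
        if error == self._last:
            self._streak += 1
        else:
            self._last, self._streak = error, 1
        return self._streak >= self.patience


# Made-up sequence of step outcomes from a hypothetical agent run.
outcomes = [
    "TimeoutError",
    "KeyError: 'price'",
    "KeyError: 'price'",
    "KeyError: 'price'",
    "KeyError: 'price'",
]
stopper = EarlyStop(patience=3)
stopped_at = next(
    (index for index, err in enumerate(outcomes) if stopper.observe(err)),
    None,
)
```

Here the run is halted at the third consecutive identical error rather than continuing to spend model calls on the same failure. A practical version would likely normalize error messages before comparing them, since otherwise small differences such as line numbers would reset the streak.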

👉 More information

🗞 Exploring Autonomous Agents: A Closer Look at Why They Fail When Completing Tasks

🧠 ArXiv: https://arxiv.org/abs/2508.13143