The pursuit of truly versatile artificial intelligence has long been hampered by the limitations of current multimodal systems, which struggle to combine information from diverse sources such as text, images, audio, and video without extensive and costly retraining. Huawei Lin from Amazon and Rochester Institute of Technology, Yunzhi Shi from Amazon, and Tong Geng from the University of Rochester, alongside their colleagues, present a new framework called Agent-Omni that addresses these challenges. Agent-Omni coordinates existing foundation models through a master-agent system, enabling flexible reasoning across multiple modalities without requiring any further training. The team reports that the approach consistently achieves state-of-the-art performance on complex cross-modal tasks. It offers a modular and adaptable route to omni-modal artificial intelligence and could pave the way for more transparent and reliable systems.

Multimodal Reasoning With Dynamic Agent Orchestration

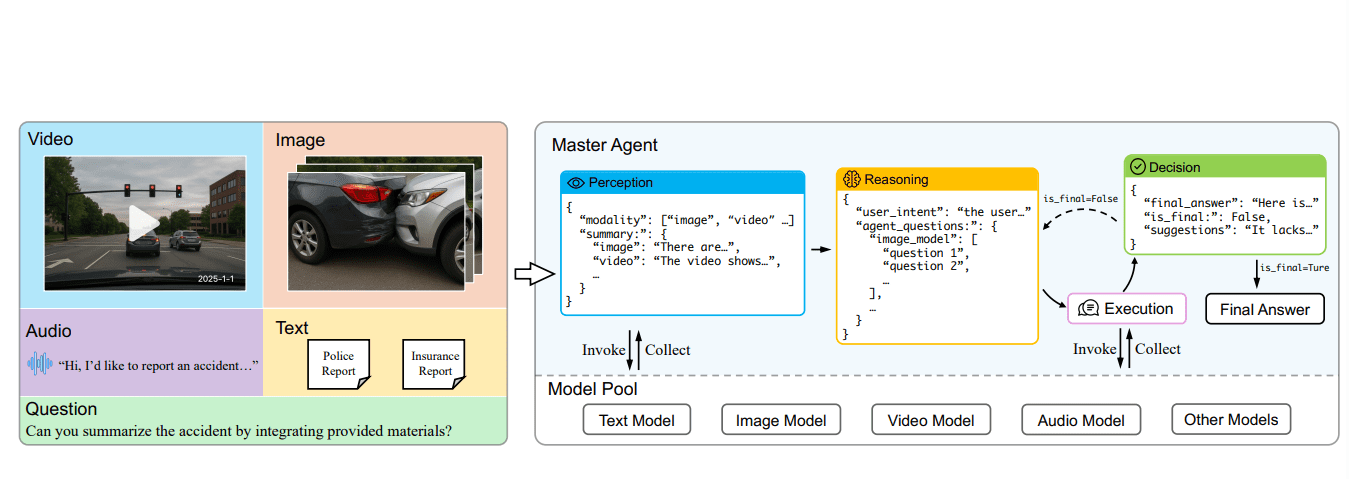

This research introduces a multi-agent system designed to understand information from various sources, including text, images, audio, and video. The system orchestrates specialized foundation models, referred to as agents, through iterative reasoning loops. Rather than retraining large models, it relies on dynamic agent selection and carefully crafted prompts. It decomposes each user query into modality-specific tasks, then instructs the appropriate agents to process the information. At its core is a reasoning module that analyzes user input, identifies the necessary modalities, selects suitable agents, and formulates questions for them.

These questions are then dispatched to the selected agents, which process their respective data and return responses. A decision module synthesizes these responses into a comprehensive answer, determines whether the answer is complete, and provides suggestions for improvement. If the answer requires refinement, the process repeats, using previous interactions to enhance understanding. The modules exchange structured data defined by JSON schemas, which keeps communication between them unambiguous and results consistent. This modular design also makes it straightforward to integrate new or enhanced foundation models, offering a promising pathway toward more capable and adaptable artificial intelligence systems.
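To make the idea of schema-defined messages concrete, here is a minimal Python sketch of what the reasoning module's plan and the decision module's verdict might look like as structured records. The class names, field names, and agent names are all assumptions made for illustration; the article does not give the actual JSON schemas used by Agent-Omni.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List

# Hypothetical field names; the actual Agent-Omni schemas may differ.
MODALITIES = {"text", "image", "audio", "video"}

@dataclass
class AgentQuestion:
    modality: str   # which input the question is about
    agent: str      # name of the selected foundation model (made up here)
    question: str   # question formulated by the reasoning module

@dataclass
class ReasoningPlan:
    user_intent: str
    questions: List[AgentQuestion] = field(default_factory=list)

@dataclass
class Decision:
    answer: str
    is_final: bool                                   # is the answer complete?
    suggestions: List[str] = field(default_factory=list)

def parse_plan(raw: str) -> ReasoningPlan:
    """Parse a reasoning-module reply (a JSON string) and validate it."""
    data = json.loads(raw)
    # Missing or unexpected keys raise TypeError in the dataclass constructor.
    questions = [AgentQuestion(**q) for q in data["questions"]]
    for q in questions:
        if q.modality not in MODALITIES:
            raise ValueError(f"unknown modality: {q.modality!r}")
    return ReasoningPlan(user_intent=data["user_intent"], questions=questions)

raw_plan = json.dumps({
    "user_intent": "Check whether the narration matches the photo",
    "questions": [
        {"modality": "image", "agent": "vision-model",
         "question": "What objects are visible?"},
        {"modality": "audio", "agent": "speech-model",
         "question": "What does the speaker describe?"},
    ],
})
plan = parse_plan(raw_plan)
decision = Decision(answer="Partial match", is_final=False,
                    suggestions=["Ask the audio agent about colors"])
print(json.dumps(asdict(decision), indent=2))
```

Validating each reply before acting on it means a malformed model output fails early with a clear error instead of propagating through later rounds.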

Hierarchical Agents Coordinate Multimodal Foundation Models

The researchers developed Agent-Omni, a framework that achieves flexible multimodal reasoning without extensive retraining of large language models. Instead of building a single, unified model, they engineered a hierarchical agent architecture that coordinates existing foundation models, enabling the system to process combinations of text, images, audio, and video. This approach circumvents the need for costly, large-scale fine-tuning. At the core of Agent-Omni is a master-agent system that interprets user intent and dynamically delegates subtasks to specialized, modality-specific agents.

These agents, each proficient in processing a single modality, work in parallel to extract relevant information from the input data. The master agent then integrates their outputs into a coherent and comprehensive final answer, bridging the gap between different data types. Because the design is modular, new or improved foundation models can be integrated as they become available, which keeps the system adaptable. The team validated the framework on a diverse range of benchmarks, where it consistently achieved state-of-the-art results, particularly on tasks demanding complex cross-modal reasoning. The system demonstrated its ability to integrate information from multiple sources, such as aligning spoken descriptions with visual evidence or linking video events with accompanying text. To facilitate continued research, the team released an open-source implementation of Agent-Omni, providing a valuable resource for the broader scientific community.
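The delegate-in-parallel, then integrate pattern described here can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the released implementation: the agent functions are stubs standing in for real foundation-model calls, and `AGENT_POOL`, `dispatch`, and `integrate` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Stub agents standing in for real modality-specific foundation models.
def image_agent(question: str) -> str:
    return f"[image] reply to: {question}"

def audio_agent(question: str) -> str:
    return f"[audio] reply to: {question}"

def text_agent(question: str) -> str:
    return f"[text] reply to: {question}"

# Hypothetical registry mapping each modality to its agent.
AGENT_POOL: Dict[str, Callable[[str], str]] = {
    "image": image_agent,
    "audio": audio_agent,
    "text": text_agent,
}

def dispatch(questions: Dict[str, str]) -> Dict[str, str]:
    """Send each question to its modality agent; the agents run concurrently."""
    workers = len(questions) or 1  # ThreadPoolExecutor needs at least one worker
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {m: pool.submit(AGENT_POOL[m], q) for m, q in questions.items()}
        return {m: f.result() for m, f in futures.items()}

def integrate(responses: Dict[str, str]) -> str:
    """Toy stand-in for the master agent's synthesis step."""
    return " | ".join(responses[m] for m in sorted(responses))

responses = dispatch({
    "image": "What is shown in the photo?",
    "audio": "What does the speaker describe?",
})
print(integrate(responses))
```

Threads are a reasonable fit in this sketch because calls to hosted models are typically I/O-bound. In a real system the integration step would itself be a model call rather than string concatenation.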

Agent-Omni Achieves Robust Multimodal Reasoning

This work presents Agent-Omni, a novel framework designed to achieve robust multimodal reasoning across text, images, audio, and video without requiring costly retraining of foundation models. The core of the system is a master agent that coordinates specialized models from a diverse model pool, interpreting user intent and delegating subtasks to modality-specific agents. This agent-based design enables flexible integration of existing foundation models, ensuring adaptability to various inputs while maintaining transparency and interpretability. Experiments demonstrate Agent-Omni’s strong performance across a range of benchmarks, including assessments of language understanding, reasoning, and mathematical problem-solving.

The research systematically assesses the framework’s ability to generalize across diverse modalities and its computational efficiency compared to end-to-end multimodal large language models. Results show that the system effectively leverages iterative self-correction through a “master loop”, which repeatedly evaluates and refines an answer until a satisfactory response is achieved. The team also analyzes how the choice of foundation models in the model pool and the maximum number of master loops affect performance. This analysis reveals that the iterative self-correction mechanism significantly improves answer quality, underscoring its potential for scalable and reliable omni-modal reasoning.
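A bounded self-correction loop of this kind might look like the following Python sketch. It assumes the three stages are plain callables and that the decision stage returns an answer, a completeness flag, and a list of suggestions; `master_loop`, `max_loops`, and the stub functions are hypothetical names chosen for illustration, not the paper's API.

```python
from typing import Callable, Dict, List, Tuple

Questions = Dict[str, str]             # modality -> question
Verdict = Tuple[str, bool, List[str]]  # (answer, is_final, suggestions)

def master_loop(
    query: str,
    reason: Callable[[str, List[dict]], Questions],
    dispatch: Callable[[Questions], Dict[str, str]],
    decide: Callable[[str, Dict[str, str]], Verdict],
    max_loops: int = 3,
) -> Tuple[str, List[dict]]:
    """Run reason -> dispatch -> decide until the answer is final or the cap is hit."""
    history: List[dict] = []
    answer = ""
    for round_idx in range(1, max_loops + 1):
        questions = reason(query, history)   # plan, informed by earlier rounds
        responses = dispatch(questions)      # ask the modality agents
        answer, is_final, suggestions = decide(query, responses)
        history.append({"round": round_idx, "questions": questions,
                        "responses": responses, "suggestions": suggestions})
        if is_final:
            break
    return answer, history

# Stub stages, just enough to exercise the loop.
def stub_reason(query: str, history: List[dict]) -> Questions:
    questions = {"image": "What objects are visible?"}
    if history:  # later rounds fold in the previous round's suggestion
        questions["audio"] = history[-1]["suggestions"][0]
    return questions

def stub_dispatch(questions: Questions) -> Dict[str, str]:
    return {m: f"answer to: {q}" for m, q in questions.items()}

def stub_decide(query: str, responses: Dict[str, str]) -> Verdict:
    if "audio" not in responses:
        return "partial answer", False, ["What does the speaker say about them?"]
    return "complete answer", True, []

answer, history = master_loop("Does the narration match the picture?",
                              stub_reason, stub_dispatch, stub_decide)
print(answer, len(history))
```

Capping the loop trades answer quality against cost: each extra round adds model calls, which is presumably why the maximum number of master loops is varied in the analysis.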

Modular Omni-Modal Reasoning with Specialist Agents

Agent-Omni represents a significant advance in omni-modal reasoning, demonstrating a framework capable of integrating text, images, audio, and video without costly retraining of large, unified models. In this system, a ‘master agent’ coordinates specialized foundation models, delegating tasks to them and synthesizing coherent textual responses from diverse inputs. Extensive experiments across multiple benchmarks confirm that Agent-Omni achieves state-of-the-art performance, particularly on complex tasks involving cross-modal understanding and video analysis. Because it builds on existing pre-trained models, the approach is scalable and adaptable, and its modular design lets researchers incorporate stronger foundation models as they become available. Evaluation has so far been conducted primarily on curated benchmarks. Further research is needed to assess generalization to real-world scenarios and to ensure responsible deployment, particularly regarding data privacy, bias mitigation, and safety.

👉 More information

🗞 Agent-Omni: Test-Time Multimodal Reasoning via Model Coordination for Understanding Anything

🧠 ArXiv: https://arxiv.org/abs/2511.02834