On April 21, 2025, researchers published “Dynamic Legged Ball Manipulation on Rugged Terrains with Hierarchical Reinforcement Learning,” a paper detailing a novel approach to improving quadruped robots’ ability to perform complex tasks such as ball manipulation in challenging environments.

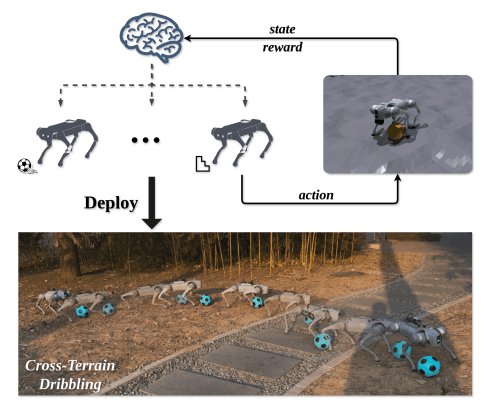

The study addresses the difficulty quadruped robots have with dynamic ball manipulation on rugged terrain by proposing a hierarchical reinforcement learning framework. A high-level policy switches between pre-trained low-level skills, such as dribbling and terrain navigation, based on sensory data. To improve learning efficiency, the researchers introduced Dynamic Skill-Focused Policy Optimization, which suppresses gradients from inactive skills. In both simulation and real-world experiments, the method outperformed baseline methods at dynamic ball manipulation across challenging environments.
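The skill-switching and gradient-suppression ideas described above can be illustrated with a small sketch. This is not the paper's implementation: the skill names, the linear scoring in `select_skill`, and the `mask_inactive_gradients` helper are all assumptions made for illustration, and the paper's actual Dynamic Skill-Focused Policy Optimization may differ substantially.

```python
import math

# Hypothetical skill names; the article mentions dribbling and terrain navigation.
SKILLS = ["dribble", "traverse_terrain"]

def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def select_skill(weights, observation):
    """Toy high-level policy (an assumption): one linear score per skill,
    then pick the most probable skill."""
    logits = [sum(w * o for w, o in zip(row, observation)) for row in weights]
    probs = softmax(logits)
    active = max(range(len(probs)), key=probs.__getitem__)
    return active, probs

def mask_inactive_gradients(per_skill_grads, active):
    """Keep gradients for the active skill and zero out all the others."""
    return [
        list(g) if i == active else [0.0] * len(g)
        for i, g in enumerate(per_skill_grads)
    ]

weights = [[1.0, 0.0], [0.0, 1.0]]   # illustrative high-level weights
observation = [0.2, 0.9]             # illustrative sensory features
active, probs = select_skill(weights, observation)
grads = [[0.5, -0.1], [0.3, 0.7]]    # illustrative per-skill gradients
masked = mask_inactive_gradients(grads, active)
print(SKILLS[active], masked)
```

In this toy setup only the parameters tied to the selected skill receive an update, which is one plausible reading of "suppressing gradients from inactive skills."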

Recent years have witnessed remarkable progress in robotics, enabling machines to execute tasks with increasing precision. However, the challenge of making robots adaptable to complex and dynamic environments persists. This article delves into how reinforcement learning (RL) is enhancing robotic adaptability through three key approaches: hierarchical RL, policy gradient methods, and model-free techniques.

Hierarchical reinforcement learning simplifies task execution by breaking a task down into smaller subtasks. This lets robots handle intricate scenarios without overwhelming a single learning system. Because the approach speeds up learning and scales better, robots can adapt to new challenges more easily, which makes it well suited to complex tasks.

Policy gradient methods refine a robot’s decision-making strategy using feedback from its actions. They maximize expected reward by optimizing the policy directly. They require careful hyperparameter tuning, but when properly configured they are effective at producing good decisions across varied environments.
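As a minimal sketch of direct policy optimization, the following applies a REINFORCE-style update to a two-action problem. The reward values, learning rate, and function names are assumptions chosen for illustration and are unrelated to the paper's setup.

```python
import math
import random

REWARDS = (0.0, 1.0)  # hypothetical payoffs: action 1 is the better choice

def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def train_policy(steps=2000, lr=0.1, seed=0):
    """REINFORCE on a two-action bandit with a softmax policy."""
    rng = random.Random(seed)
    prefs = [0.0, 0.0]  # policy parameters, one preference per action
    for _ in range(steps):
        probs = softmax(prefs)
        action = 0 if rng.random() < probs[0] else 1
        reward = REWARDS[action]
        # Gradient of log pi(action) w.r.t. prefs[k] is 1[k == action] - probs[k].
        for k in range(len(prefs)):
            indicator = 1.0 if k == action else 0.0
            prefs[k] += lr * reward * (indicator - probs[k])
    return softmax(prefs)

print(train_policy())
```

The learning rate `lr` is the kind of parameter that needs tuning: too small and the policy barely moves, too large and updates can become unstable on noisier problems.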

Model-free reinforcement learning approaches enable robots to adapt dynamically without relying on predefined environmental models. This flexibility is particularly advantageous for real-world applications where unpredictability and constant change are prevalent.
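To make "model-free" concrete, here is a small tabular Q-learning sketch. The learner only samples transitions from `step` and never inspects how the environment works internally. The five-cell corridor, the reward scheme, and all names are hypothetical and far simpler than a legged robot.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # index 0 = left, index 1 = right

def step(state, action_index):
    """Environment, treated as a black box by the learner."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def train_q_table(episodes=500, alpha=0.5, gamma=0.9, max_steps=100, seed=0):
    """Learn action values purely from sampled transitions."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Uniform random exploration; Q-learning is off-policy, so the
            # learned values still describe the greedy policy.
            action = rng.randrange(2)
            next_state, reward, done = step(state, action)
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

def greedy_policy(q):
    """Best action per non-goal cell according to the learned values."""
    return ["right" if row[1] > row[0] else "left" for row in q[:-1]]

print(greedy_policy(train_q_table()))
```

Because the update uses only observed `(state, action, reward, next_state)` tuples, the same loop would keep working if the behavior of `step` changed, which is the flexibility the paragraph above describes.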

Combining these RL methods shows promise for addressing challenges such as sample efficiency and safety. Looking ahead, pairing robotics with computer vision, for example by integrating models like YOLO11, could improve robots’ perception of their environment and lead to more sophisticated and adaptable machines.

👉 More information

🗞 Dynamic Legged Ball Manipulation on Rugged Terrains with Hierarchical Reinforcement Learning

🧠 DOI: https://doi.org/10.48550/arXiv.2504.14989