Researchers are increasingly focused on ensuring fairness and reliability in ensemble forecasting systems. Christopher David Roberts from the European Centre for Medium-Range Weather Forecasts (ECMWF) and colleagues demonstrate a critical issue with current post-processing methods designed to improve forecast accuracy. Their work reveals that techniques minimising the adjusted continuous ranked probability score (aCRPS) can become unfairly sensitive to ensemble size when they introduce dependencies between forecast members. This research is significant because it highlights a potential flaw in widely used calibration methods, showing that apparent gains in aCRPS may mask systematic unreliability and over-dispersion. To address this, the team introduces trajectory transformers, an adaptation of the Post-processing Ensembles with Transformers (PoET) framework that maintains forecast reliability across varying ensemble sizes when applied to the ECMWF subseasonal forecasting system.

Accurate weather prediction relies on ensembles: multiple forecasts run together to give a range of likely outcomes. New work reveals a hidden flaw in how post-processed ensembles are scored, where apparent improvements can mask unreliable results. A transformer-based solution keeps forecasts reliable regardless of ensemble size.

Scientists have developed a new approach to calibrating ensemble forecasts, addressing a subtle but significant problem with modern weather and climate prediction systems. These systems rely on running multiple simulations, an ensemble, to represent the inherent uncertainty in forecasting atmospheric behaviour. A key challenge lies in ensuring these ensemble members accurately reflect the range of possible outcomes and are not systematically biased.

The research demonstrates that certain advanced post-processing techniques, designed to improve forecast accuracy, can inadvertently introduce dependencies between ensemble members, undermining the fairness of standard evaluation metrics. Improvements in scores such as the adjusted continuous ranked probability score (aCRPS) may therefore be misleading and can mask underlying unreliability. The aCRPS is a version of the CRPS corrected for finite ensemble size, so that it rewards ensembles whose members behave like samples from the same distribution as the observations.
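The formula makes the correction explicit. This assumes the aCRPS takes the commonly used "fair" form, which differs from the standard ensemble estimate of the CRPS only in how the spread term is normalised. Here $M$ is the ensemble size, $x_i$ are the members, $y$ is the verifying observation and $F$ is the distribution the members are drawn from. The last line holds only when the members are independent draws from $F$.

```latex
\begin{aligned}
\mathrm{CRPS}_M(\mathbf{x}, y) &= \frac{1}{M}\sum_{i=1}^{M} \lvert x_i - y\rvert - \frac{1}{2M^2}\sum_{i=1}^{M}\sum_{j=1}^{M} \lvert x_i - x_j\rvert \\
\mathrm{aCRPS}_M(\mathbf{x}, y) &= \frac{1}{M}\sum_{i=1}^{M} \lvert x_i - y\rvert - \frac{1}{2M(M-1)}\sum_{i=1}^{M}\sum_{j=1}^{M} \lvert x_i - x_j\rvert \\
\mathbb{E}\left[\mathrm{aCRPS}_M\right] &= \mathbb{E}\lvert X - y\rvert - \tfrac{1}{2}\,\mathbb{E}\lvert X - X'\rvert = \mathrm{CRPS}(F, y) \quad \text{if } x_1,\dots,x_M \overset{\text{iid}}{\sim} F
\end{aligned}
```

The double sum contains $M$ zero diagonal terms. Dividing by $M^2$ therefore biases the standard estimate against small ensembles. The $M(M-1)$ normalisation removes that bias, but only under the independence assumption in the last line.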

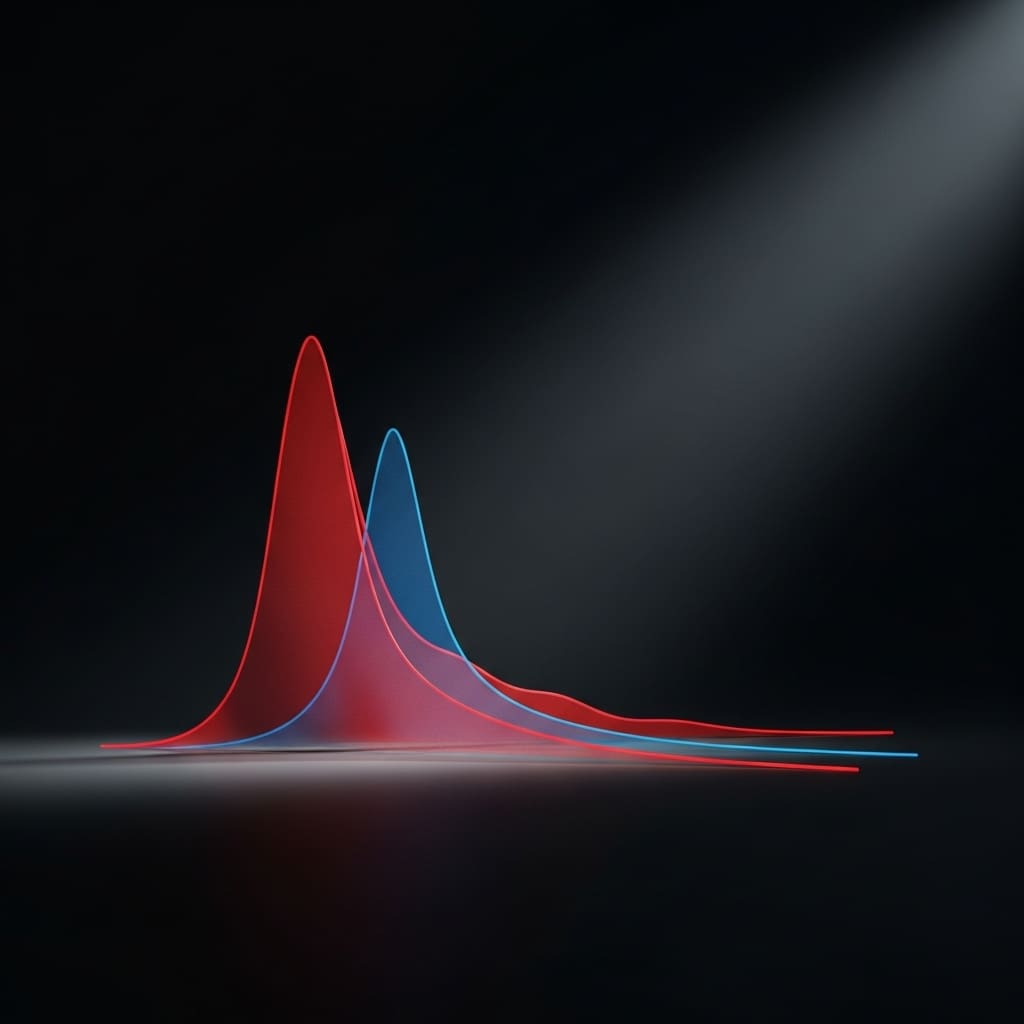

Achieving truly fair and reliable forecasts requires careful consideration of how ensemble members are treated during post-processing. Methods that couple ensemble members can make forecasts sensitive to ensemble size. Examples include calibration based on the ensemble mean and transformer self-attention across members. Specifically, apparent gains in aCRPS can coincide with over-dispersion, meaning the ensemble spread is wider than the actual forecast errors warrant.

To counter this, researchers introduced trajectory transformers, a proof-of-concept method adapting the Post-processing Ensembles with Transformers (PoET) framework. This approach applies self-attention over the forecast lead time, crucially preserving the conditional independence needed for aCRPS to function as intended. At the heart of this work is ensemble calibration, refining raw forecast outputs to better match observed statistics.

Traditional methods often assume ensemble members are independent samples from a predictive distribution, a condition essential for aCRPS to be both fair and unbiased. However, increasingly sophisticated data-driven techniques, particularly those employing deep learning, can introduce dependencies, violating this assumption. By applying trajectory transformers to weekly mean forecasts from the ECMWF subseasonal forecasting system, the team successfully reduced systematic model biases and maintained or improved forecast reliability, irrespective of the ensemble size used for both training and real-time forecasting.
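The fairness property can be checked numerically. The sketch below uses pure Python and a hypothetical toy setup in which both the members and the observation are independent draws from N(0, 1). It compares the mean standard CRPS and mean aCRPS for M = 3, 9 and 100. The exact expected score for this setup is $1/\sqrt{\pi} \approx 0.564$. The aCRPS should match it at every ensemble size, while the standard estimate should be larger for small ensembles. The function names are illustrative and are not taken from the paper.

```python
import math
import random


def pairwise_abs_sum(xs):
    """Sum of |x_i - x_j| over all ordered pairs (i, j), computed in O(M log M)."""
    s = sorted(xs)
    m = len(s)
    # After sorting, x_(k) is the larger element in k pairs and the smaller in m - 1 - k.
    return 2.0 * sum((2 * k - m + 1) * v for k, v in enumerate(s))


def crps_ensemble(xs, y):
    """Standard ensemble CRPS estimate: spread term normalised by M^2."""
    m = len(xs)
    return sum(abs(x - y) for x in xs) / m - pairwise_abs_sum(xs) / (2 * m * m)


def acrps(xs, y):
    """Adjusted (fair) CRPS: spread term normalised by M(M - 1); needs M >= 2."""
    m = len(xs)
    return sum(abs(x - y) for x in xs) / m - pairwise_abs_sum(xs) / (2 * m * (m - 1))


def mean_scores(m, trials=4000, seed=0):
    """Mean CRPS and aCRPS when the members and the observation are iid N(0, 1)."""
    rng = random.Random(seed)
    crps_total = acrps_total = 0.0
    for _ in range(trials):
        y = rng.gauss(0.0, 1.0)
        xs = [rng.gauss(0.0, 1.0) for _ in range(m)]
        crps_total += crps_ensemble(xs, y)
        acrps_total += acrps(xs, y)
    return crps_total / trials, acrps_total / trials


if __name__ == "__main__":
    expected = 1.0 / math.sqrt(math.pi)  # E[CRPS] of an N(0, 1) forecast when y ~ N(0, 1)
    for m in (3, 9, 100):
        crps_m, acrps_m = mean_scores(m)
        print(f"M={m:3d}  CRPS={crps_m:.3f}  aCRPS={acrps_m:.3f}  expected={expected:.3f}")
```

The pairwise term uses the sorted-sample identity $\sum_{i,j}\lvert x_i - x_j\rvert = 2\sum_k (2k - M + 1)\,x_{(k)}$ with 0-indexed order statistics. This avoids an $O(M^2)$ double loop at M = 100.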

The ability to maintain forecast quality across varying ensemble sizes is particularly valuable. Training complex machine learning models demands substantial computational resources and often forces the use of smaller training ensembles. The success of trajectory transformers suggests that models trained with small ensembles can be applied to larger real-time ensembles without sacrificing reliability. This advancement could matter for a range of applications, from short-term weather prediction to longer-range climate modelling, where accurate and dependable probabilistic forecasts are essential for informed decision-making.

Refining subseasonal forecasts with calibrated ensemble members and transformer self-attention

Two post-processing techniques were first implemented to refine ensemble forecasts from the ECMWF subseasonal forecasting system. Both were trained to minimise the expected adjusted continuous ranked probability score (aCRPS).

Linear member-by-member calibration served as the first approach. It adjusts each forecast member using the ensemble mean and the member's deviation from it. Because every member depends on the shared ensemble mean, the members become coupled. This method was chosen to explore how coupling members affects score fairness and reliability. A more complex deep-learning method was also developed, using transformer self-attention across the ensemble dimension.
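A deliberately simple, hypothetical setup shows why coupling through the ensemble mean can break fairness; it is not the paper's calibration. Raw members $z_i$ and the observation $y$ are independent N(0, 1) draws, so the raw ensemble is already perfectly reliable. The calibration keeps the ensemble mean $\bar z$ and rescales the deviations: $x_i = \bar z + \gamma (z_i - \bar z)$. Each $x_i$ is Gaussian with variance $1/M + \gamma^2 (M-1)/M$, and $x_i - x_j = \gamma(z_i - z_j)$. Together these give the expected aCRPS in closed form. The sketch grid-searches the $\gamma$ that minimises it for several ensemble sizes.

```python
import math


def expected_acrps(gamma, m):
    """Closed-form E[aCRPS] for the toy calibration x_i = zbar + gamma * (z_i - zbar).

    Toy assumptions: raw members z_i are iid N(0, 1) and the observation y ~ N(0, 1)
    is independent of them, so gamma = 1 reproduces the true predictive distribution.
    """
    member_var = 1.0 / m + gamma ** 2 * (m - 1) / m                  # Var(x_i)
    skill = math.sqrt(2.0 / math.pi) * math.sqrt(1.0 + member_var)  # E|x_i - y|
    spread = 2.0 * gamma / math.sqrt(math.pi)                        # E|x_i - x_j|, i != j
    return skill - 0.5 * spread


def analytic_gamma(m):
    """Stationary point of expected_acrps: gamma^2 = M(M+1) / ((M-1)(M-2)), valid for M >= 3."""
    return math.sqrt(m * (m + 1) / ((m - 1) * (m - 2)))


def best_gamma(m, lo=0.5, hi=4.0, step=1e-3):
    """Grid search for the deviation scaling that minimises expected aCRPS at size m."""
    n = int(round((hi - lo) / step))
    return min((lo + k * step for k in range(n + 1)), key=lambda g: expected_acrps(g, m))


if __name__ == "__main__":
    for m in (3, 9, 100):
        g = best_gamma(m)
        print(f"M={m:3d}  gamma*={g:.3f} (analytic {analytic_gamma(m):.3f})  "
              f"E[aCRPS] at gamma*={expected_acrps(g, m):.3f}, at gamma=1: {expected_acrps(1.0, m):.3f}")
```

For M = 3 the minimiser is $\gamma = \sqrt{6} \approx 2.45$. There the expected aCRPS drops below the value achieved by the true distribution ($\gamma = 1$), even though any $\gamma > 1$ makes the members over-dispersed. Because the members share $\bar z$, they are exchangeable but not independent, which is exactly the condition under which aCRPS stops being fair. As M grows, the optimum approaches 1.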

In this method, each member attends to all the others when generating its forecast. This creates structural dependencies between members in pursuit of improved accuracy. Self-attention, a key component of modern natural language processing, was adapted here to model the relationships between ensemble members. It offers a potentially more flexible calibration than the linear method.
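The coupling from attention across the ensemble dimension can be seen in a minimal single-head sketch that treats each member as one token. The weights are random stand-ins and the sizes are hypothetical, since the paper's architecture is not reproduced here. Perturbing member 0 alone changes the output of every other member, which is the structural dependency described above.

```python
import math
import random


def softmax(scores):
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, v):
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product self-attention over a list of token vectors."""
    q = [matvec(wq, t) for t in tokens]
    k = [matvec(wk, t) for t in tokens]
    v = [matvec(wv, t) for t in tokens]
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        weights = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
        out.append([sum(w * vj[d] for w, vj in zip(weights, v)) for d in range(len(v[0]))])
    return out


def random_matrix(rng, rows, cols):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    rng = random.Random(1)
    n_members, n_features = 5, 4  # hypothetical sizes; each member is one token
    wq, wk, wv = (random_matrix(rng, n_features, n_features) for _ in range(3))
    ensemble = [[rng.gauss(0.0, 1.0) for _ in range(n_features)] for _ in range(n_members)]

    before = self_attention(ensemble, wq, wk, wv)
    perturbed = [row[:] for row in ensemble]
    perturbed[0] = [x + 1.0 for x in perturbed[0]]  # change member 0 only
    after = self_attention(perturbed, wq, wk, wv)

    # Every other member's output moves too: the members are no longer independent.
    for i in range(1, n_members):
        shift = max(abs(a - b) for a, b in zip(before[i], after[i]))
        print(f"member {i}: max output change {shift:.4f}")
```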

Both calibration strategies were tested with ensemble sizes of 3 and 9 members during training, and then evaluated with real-time forecasts using 9 and 100 members to assess sensitivity to scale. For comparison, researchers introduced trajectory transformers as a proof-of-concept solution designed to maintain ensemble-size independence, building upon the Post-processing Ensembles with Transformers (PoET) framework and applying self-attention specifically across the lead time of the forecasts.

By focusing attention on temporal relationships within each member, the trajectory transformers aim to preserve the conditional independence of ensemble members, a requirement for aCRPS to remain a fair scoring metric. Weekly mean forecasts of 2-metre temperature (T2m) were used as input data, allowing a direct comparison of bias reduction and reliability improvements across different ensemble sizes.
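The trajectory-style alternative can be sketched with scaled dot-product self-attention applied along lead time rather than across members. In this hypothetical setup, each member is a sequence of lead-time tokens (for example, four weekly means), and one set of random stand-in weights is shared by all members. Each post-processed member therefore depends only on its own raw trajectory, so perturbing member 0 leaves the others bit-for-bit unchanged. This illustrates the idea; it is not the PoET implementation.

```python
import math
import random


def softmax(scores):
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, v):
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product self-attention over a list of token vectors."""
    q = [matvec(wq, t) for t in tokens]
    k = [matvec(wk, t) for t in tokens]
    v = [matvec(wv, t) for t in tokens]
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        weights = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
        out.append([sum(w * vj[d] for w, vj in zip(weights, v)) for d in range(len(v[0]))])
    return out


def trajectory_attention(ensemble, wq, wk, wv):
    """Attend along lead time within each member; weights are shared, members never mix."""
    return [self_attention(trajectory, wq, wk, wv) for trajectory in ensemble]


if __name__ == "__main__":
    rng = random.Random(2)
    n_members, n_leads, n_features = 5, 4, 3  # hypothetical: e.g. 4 weekly lead times per member
    wq, wk, wv = ([[rng.gauss(0.0, 0.5) for _ in range(n_features)] for _ in range(n_features)]
                  for _ in range(3))
    ensemble = [[[rng.gauss(0.0, 1.0) for _ in range(n_features)] for _ in range(n_leads)]
                for _ in range(n_members)]

    before = trajectory_attention(ensemble, wq, wk, wv)
    perturbed = [[token[:] for token in member] for member in ensemble]
    perturbed[0] = [[x + 1.0 for x in token] for token in perturbed[0]]  # change member 0 only
    after = trajectory_attention(perturbed, wq, wk, wv)

    print("member 0 changed:", before[0] != after[0])
    print("other members unchanged:", all(before[i] == after[i] for i in range(1, n_members)))
```

Sharing weights across members while never mixing them means that if the raw members are conditionally independent draws, the post-processed members are too.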

Trajectory transformers deliver ensemble-size independent subseasonal temperature forecasts

Applying trajectory transformers to weekly mean forecasts of 2-metre temperature (T2m) from the ECMWF subseasonal system revealed a notable reduction in systematic model biases. This approach successfully maintained or improved forecast reliability irrespective of ensemble size, a key achievement given the differing sizes used during training and real-time forecasting.

Specifically, trajectory transformers performed consistently whether trained with 3 or 9 ensemble members and whether forecasting with 9 or 100 members. In contrast, linear member-by-member calibration and the deep-learning method using self-attention across members both exhibited sensitivity to ensemble size. This indicates that apparent gains in the adjusted continuous ranked probability score (aCRPS) could coincide with systematic unreliability and over-dispersion.
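This ensemble-size sensitivity can be reproduced in a toy version of member-by-member calibration; it is a hypothetical setup, not the paper's experiment. Raw members and observations are independent N(0, 1) draws, and members are recalibrated as $x_i = \bar z + \gamma(z_i - \bar z)$. In this setup, $\gamma = \sqrt{6}$ minimises expected aCRPS at M = 3. The sketch estimates the mean aCRPS and a spread/error ratio for the raw ensemble ($\gamma = 1$) and for the tuned one, at M = 3 and M = 100. A ratio near 1 indicates a reliable ensemble, and a ratio well above 1 indicates over-dispersion.

```python
import math
import random


def acrps(xs, y):
    """Adjusted (fair) CRPS of ensemble xs against observation y."""
    m = len(xs)
    s = sorted(xs)
    pair_sum = 2.0 * sum((2 * k - m + 1) * v for k, v in enumerate(s))  # sum_{i,j} |x_i - x_j|
    return sum(abs(x - y) for x in xs) / m - pair_sum / (2 * m * (m - 1))


def evaluate(gamma, m, trials=3000, seed=0):
    """Mean aCRPS and spread/error ratio of the toy coupled calibration at ensemble size m."""
    rng = random.Random(seed)
    score_sum = var_sum = sq_err_sum = 0.0
    for _ in range(trials):
        y = rng.gauss(0.0, 1.0)
        z = [rng.gauss(0.0, 1.0) for _ in range(m)]
        zbar = sum(z) / m
        xs = [zbar + gamma * (zi - zbar) for zi in z]  # rescale deviations from the shared mean
        mean = sum(xs) / m
        score_sum += acrps(xs, y)
        var_sum += sum((x - mean) ** 2 for x in xs) / (m - 1)  # unbiased ensemble variance
        sq_err_sum += (mean - y) ** 2                           # squared error of the ensemble mean
    # For a reliable ensemble, (M+1)/M * mean variance ~= mean squared error, so the ratio ~= 1.
    ratio = math.sqrt((m + 1) / m * var_sum / sq_err_sum)
    return score_sum / trials, ratio


if __name__ == "__main__":
    gamma_m3 = math.sqrt(6.0)  # minimiser of expected aCRPS at M = 3 in this toy setup
    for gamma in (1.0, gamma_m3):
        for m in (3, 100):
            score, ratio = evaluate(gamma, m)
            print(f"gamma={gamma:.3f} M={m:3d}  aCRPS={score:.3f}  spread/error={ratio:.2f}")
```

In expectation, the tuned calibration lowers aCRPS at M = 3 (about 0.46, versus 0.56 for the raw ensemble) while producing a spread/error ratio of about 2.45. Applied at M = 100, its aCRPS rises to about 0.72, worse than the raw ensemble. This mirrors the pattern described here: an apparent aCRPS gain that coincides with over-dispersion and does not survive a change of ensemble size.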

Yet the trajectory transformer approach achieved ensemble-size independence, a critical step towards fair and unbiased forecasting. The work highlights that aCRPS is fair only when ensemble members are exchangeable and conditionally independent. Once post-processing introduces structural dependencies between members, that guarantee is lost. For the linear calibration and deep-learning methods, the dependence of results on ensemble size indicates that aCRPS was no longer accurately reflecting forecast quality.

Instead, the score could reward forecasts whose distributions differ from the observed ones. Trajectory transformers, the study's proof-of-concept adaptation of the Post-processing Ensembles with Transformers (PoET) framework, avoid this by applying self-attention over lead time. This preserves the conditional independence that aCRPS requires. The method worked across ensemble sizes, from training ensembles of 3 members to real-time forecasts of 100 members, which demonstrates its potential for practical implementation.

Dependence in ensemble forecasts inflates skill assessment via common scoring rules

Scientists increasingly rely on ensemble forecasts, yet assessing their quality presents a subtle challenge. A scoring rule designed to reward accurate probabilistic forecasts can, when its assumptions are violated, reward how ensemble members relate to one another rather than genuinely better predictions. This work exposes such a weakness in a commonly used metric, the adjusted continuous ranked probability score, revealing that it can be misled by certain post-processing techniques.

These techniques, which include calibration based on the ensemble mean and transformer self-attention across members, improve forecasts by letting members share information. In doing so, they create dependencies between individual members. This interdependence undermines the statistical assumptions underpinning the scoring rule, leading to inflated performance assessments. For years, the field has sought methods to extract more value from limited ensemble sizes, a constraint imposed by computational cost.

Building larger ensembles is often impractical, prompting researchers to explore clever algorithms that mimic their benefits. But this research demonstrates that appearing to improve a forecast, as measured by a metric whose assumptions no longer hold, is not the same as achieving genuine predictive skill. Instead, apparent gains can mask a systematic tendency to under- or overestimate uncertainty, a critical weakness for risk assessment.

Now, the team offers a potential solution, a trajectory transformer approach that maintains the necessary statistical independence while still correcting model biases. This is not merely a technical fix, but a reminder that careful consideration of scoring rules is essential. Further investigation should focus on developing metrics less susceptible to manipulation and more sensitive to true improvements in probabilistic forecasting. Beyond this specific implementation, the broader effort needs to address the fundamental trade-off between model complexity, computational expense, and the reliable quantification of forecast uncertainty.

👉 More information

🗞 Ensemble-size-dependence of deep-learning post-processing methods that minimize an (un)fair score: motivating examples and a proof-of-concept solution

🧠 ArXiv: https://arxiv.org/abs/2602.15830