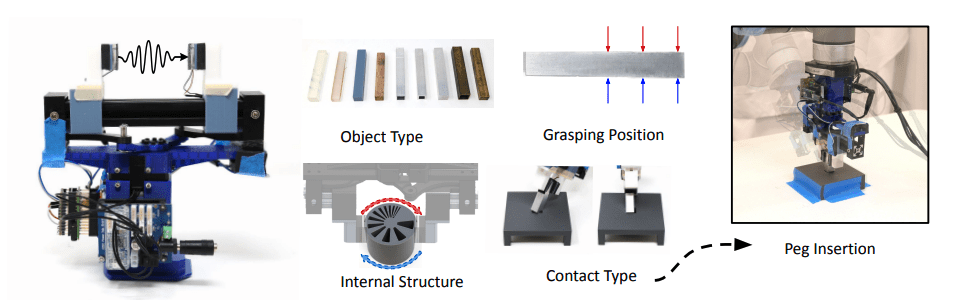

A novel approach in robotics uses sound vibrations for object classification and contact state estimation, as detailed in the April 21, 2025 article titled VibeCheck: Using Active Acoustic Tactile Sensing for Contact-Rich Manipulation. The system uses piezoelectric fingers to send and receive acoustic signals through objects, supporting tasks such as pose estimation of internal structures and robust manipulation strategies like peg insertion.

The research presents an active acoustic sensing gripper with two piezoelectric fingers, one emitting signals and one receiving them, to analyze object properties and contact states. The system supports four tasks: object classification, grasping position estimation, internal structure pose estimation, and extrinsic contact type classification. For a peg insertion task, the authors use a simulated transition model and an imitation learning policy trained on sensor data to achieve robust performance. Demonstrated on a UR5 robot arm, the gripper successfully executes long-horizon manipulation using acoustic feedback alone.
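The emit-and-receive idea can be illustrated with a toy simulation. The sketch below is not the authors' pipeline: the chirp excitation, the resonator used as a stand-in for an object, the band-energy features, and the nearest-centroid classifier are all assumptions chosen for illustration, as are the sample rate and resonance frequencies.

```python
import numpy as np

FS = 48_000       # sample rate in Hz (assumed)
DURATION = 0.05   # sweep length in seconds (assumed)

def linear_chirp(f0=1_000.0, f1=20_000.0, fs=FS, duration=DURATION):
    """Excitation for the emitting finger: a linear frequency sweep."""
    t = np.arange(int(fs * duration)) / fs
    k = (f1 - f0) / duration  # sweep rate in Hz per second
    return np.sin(2 * np.pi * (f0 * t + 0.5 * k * t**2))

def simulate_object(signal, resonance_hz, fs=FS, damping=0.995, rng=None):
    """Hypothetical object model: a two-pole resonator plus sensor noise."""
    if rng is None:
        rng = np.random.default_rng()
    theta = 2 * np.pi * resonance_hz / fs
    a1, a2 = 2 * damping * np.cos(theta), -damping**2
    out = np.zeros_like(signal)
    for n in range(len(signal)):
        out[n] = signal[n]
        if n >= 1:
            out[n] += a1 * out[n - 1]
        if n >= 2:
            out[n] += a2 * out[n - 2]
    out /= np.max(np.abs(out))  # normalize the received amplitude
    return out + 0.01 * rng.standard_normal(len(out))

def spectral_features(received, n_bands=32):
    """Log energy in equal-width frequency bands of the received signal."""
    spectrum = np.abs(np.fft.rfft(received))
    bands = np.array_split(spectrum, n_bands)
    energy = np.array([np.mean(b**2) for b in bands])
    return np.log(energy + 1e-12)

def fit_centroids(features, labels):
    """Store the mean feature vector of each class."""
    return {int(c): features[labels == c].mean(axis=0) for c in np.unique(labels)}

def predict(centroids, feature):
    """Return the class whose centroid is closest to the feature vector."""
    return min(centroids, key=lambda c: np.linalg.norm(feature - centroids[c]))

rng = np.random.default_rng(0)
chirp = linear_chirp()
# Three made-up object classes, each with its own dominant resonance.
resonances = {0: 4_000.0, 1: 9_000.0, 2: 15_000.0}

def sample(label):
    """One simulated grasp: jitter the resonance, emit, receive, featurize."""
    f = resonances[label] * (1 + 0.02 * rng.standard_normal())
    return spectral_features(simulate_object(chirp, f, rng=rng))

train_y = np.repeat(list(resonances), 10)
train_x = np.stack([sample(y) for y in train_y])
centroids = fit_centroids(train_x, train_y)

test_y = np.repeat(list(resonances), 5)
accuracy = np.mean([predict(centroids, sample(y)) == y for y in test_y])
print(f"held-out accuracy: {accuracy:.2f}")
```

A real gripper would replace `simulate_object` with recordings from the receiving finger, and the other tasks described above (grasp position, internal pose, contact type) would need labels and models suited to each of them.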

In recent years, robotics has undergone a significant transformation with the integration of audio and tactile sensing into traditional visual systems. This multimodal approach enhances robots’ ability to navigate, manipulate objects, and interact with their environment more effectively. This article explores how audio-visual robotics is reshaping automation and its implications for future applications.

One notable advancement is the use of sound for detecting contact and proximity. Technologies like SonicFinger utilize microphone arrays to localize contact points, enabling robots to "feel" their environment through sound waves. Similarly, AuraSense employs acoustic signals for full-surface proximity detection, improving collision avoidance and the safety of human-robot collaboration.

Audio-visual data is also enhancing robot manipulation tasks. The ManiWAV project uses real-world audio-visual datasets to teach robots complex tasks by mimicking human actions, allowing them to understand object properties like texture and weight. Additionally, the Hearing Touch initiative demonstrates how audio-visual pretraining improves contact-rich manipulation, enabling more efficient and reliable performance.

Multimodal sensing offers several advantages over traditional approaches. It enhances robustness by providing redundant information streams, allowing auditory sensors to compensate if visual sensors fail. This approach also facilitates more natural human-robot interactions and reduces costs through simpler hardware requirements.
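The redundancy argument can be made concrete with a small fusion sketch. This is an illustrative assumption rather than a method taken from any of the projects mentioned: the function name `fuse_predictions`, the confidence weights, and the convention that `None` marks a failed sensor are all hypothetical.

```python
import numpy as np

def fuse_predictions(visual_probs, audio_probs, visual_conf=1.0, audio_conf=1.0):
    """Confidence-weighted average of class probabilities.

    A stream passed as None (or given zero confidence) is treated as failed,
    so the remaining stream carries the prediction on its own.
    """
    candidates = ((visual_probs, visual_conf), (audio_probs, audio_conf))
    streams = [(np.asarray(p, dtype=float), w)
               for p, w in candidates if p is not None and w > 0]
    if not streams:
        raise ValueError("no sensor stream available")
    total = sum(w for _, w in streams)
    fused = sum(w * p for p, w in streams) / total
    return fused / fused.sum()  # renormalize to a probability vector

# Both sensors working: the estimate blends the two streams.
both = fuse_predictions([0.7, 0.3], [0.4, 0.6])

# Camera occluded or offline: audio alone still yields a prediction.
audio_only = fuse_predictions(None, [0.4, 0.6])
```

In practice the confidence weights would have to come from somewhere, for example a per-sensor noise estimate, and a learned fusion model may outperform a fixed weighted average.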

Despite these advancements, challenges remain. Environmental noise can interfere with audio sensing, while computational demands require efficient processing solutions. Researchers are exploring advanced algorithms and sensor fusion techniques to address these issues, paving the way for more sophisticated applications in healthcare, manufacturing, and service industries.

The integration of audio and tactile sensing into robotics represents a significant step forward, offering enhanced capabilities and versatility. As the technology evolves, audio-visual robotics is poised to play an increasingly important role in various sectors and to make robots' interactions with people and objects more human-like.

👉 More information

🗞 VibeCheck: Using Active Acoustic Tactile Sensing for Contact-Rich Manipulation

🧠 DOI: https://doi.org/10.48550/arXiv.2504.15535