On April 19, 2025, researchers published Adversarial Locomotion and Motion Imitation for Humanoid Policy Learning, a paper introducing a framework that controls a humanoid robot’s upper and lower body with separate policies. Tested on Unitree’s H1 robot, the approach is reported to improve stability and coordination in whole-body movements.

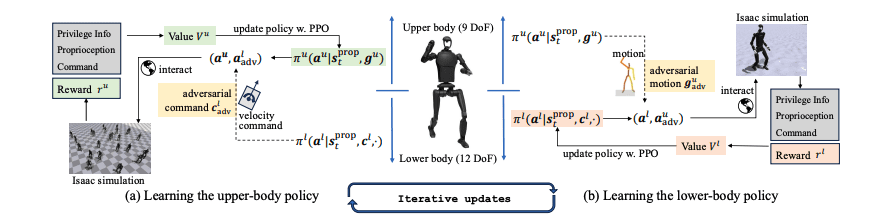

Humans exhibit diverse whole-body movements, but achieving human-like coordination in humanoid robots remains challenging because conventional approaches neglect the distinct roles of the upper and lower body, which leads to computational inefficiency and instability. To address this, the researchers propose Adversarial Locomotion and Motion Imitation (ALMI), a framework in which the upper and lower body are trained as separate policies in an adversarial fashion. The lower body focuses on robust locomotion, while the upper body tracks reference motions. Iterating these updates yields coordinated whole-body control, which can be extended to loco-manipulation tasks with teleoperation systems. Experiments demonstrate robust locomotion and precise motion tracking both in simulation and on the Unitree H1 robot. The researchers also release a large-scale dataset of high-quality trajectories, generated in MuJoCo simulations and intended for real-world deployment.
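The pattern of iterative updates between two policies can be illustrated with a toy example. The sketch below is not the ALMI algorithm: it replaces the two neural policies with two scalar parameters and uses a hypothetical zero-sum payoff, purely to show how one side is updated while the other is held fixed, and then the roles are swapped. All names (`payoff_gradients`, `alternating_updates`) and the payoff itself are assumptions made for illustration.

```python
"""Toy illustration of alternating adversarial updates (not the ALMI algorithm)."""


def payoff_gradients(lower, upper):
    # Hypothetical zero-sum payoff: f = 0.5*lower**2 + lower*upper - 0.5*upper**2.
    # The "lower" player tries to minimise f, the "upper" player tries to maximise it.
    d_lower = lower + upper   # partial derivative of f with respect to lower
    d_upper = lower - upper   # partial derivative of f with respect to upper
    return d_lower, d_upper


def alternating_updates(lower=1.0, upper=-0.5, rounds=30, inner_steps=5, lr=0.1):
    """Alternate between the two players, freezing one while the other learns."""
    history = []
    for _round in range(rounds):
        # Phase 1: gradient descent on the lower player, upper player frozen.
        for _step in range(inner_steps):
            d_lower, _unused = payoff_gradients(lower, upper)
            lower -= lr * d_lower
        # Phase 2: gradient ascent on the upper player, lower player frozen.
        for _step in range(inner_steps):
            _unused, d_upper = payoff_gradients(lower, upper)
            upper += lr * d_upper
        history.append((lower, upper))
    return history


if __name__ == "__main__":
    final_lower, final_upper = alternating_updates()[-1]
    print(f"lower={final_lower:.6f}, upper={final_upper:.6f}")
```

In this toy game both parameters spiral in toward the saddle point at zero. In a real system each phase would be a full reinforcement learning run for one policy with the other policy's behavior acting as a disturbance, and convergence is not guaranteed in general.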

The seamless integration of locomotion and manipulation in robotics presents a significant challenge. While robots have made notable advances in individual tasks such as walking or grasping, combining these abilities effectively in dynamic and unpredictable environments remains complex. Recent research has focused on developing policies that let robots perform locomotion and manipulation simultaneously, with the aim of creating more versatile and practical robotic systems. This article looks at one approach from recent studies that combines domain randomization with policy training to achieve robust performance in both simulated and real-world environments.

The approach involves training a robot policy using domain randomization, which simulates diverse environmental variations during the training phase. By exposing the robot to varied scenarios in simulation, the policy learns to generalize across different conditions, reducing the need for extensive fine-tuning when deployed in the real world.
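As a minimal sketch of what domain randomization can look like in practice, the snippet below draws a fresh set of simulation parameters for every training episode. The parameter names, their ranges, and the helper functions are hypothetical and chosen only for illustration; they are not taken from the paper or from any particular simulator.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class EpisodeParams:
    """Simulation settings for one training episode (all fields are illustrative)."""
    light_intensity: float   # relative brightness of the scene
    texture_id: int          # index into a hypothetical bank of 16 surface textures
    object_mass_kg: float    # mass of the manipulated object
    friction: float          # ground friction coefficient


def sample_episode_params(rng: random.Random) -> EpisodeParams:
    # Assumed ranges; a real setup would tune these to the target hardware.
    return EpisodeParams(
        light_intensity=rng.uniform(0.3, 1.5),
        texture_id=rng.randrange(16),
        object_mass_kg=rng.uniform(0.2, 3.0),
        friction=rng.uniform(0.4, 1.2),
    )


def randomized_episodes(num_episodes: int, seed: int = 0) -> list:
    """Return one independently sampled parameter set per episode."""
    rng = random.Random(seed)
    return [sample_episode_params(rng) for _ in range(num_episodes)]


if __name__ == "__main__":
    for params in randomized_episodes(3):
        print(params)
```

Each sampled `EpisodeParams` would be applied to the simulator before the episode starts, so the policy never trains on exactly the same world twice. Seeding the generator keeps the sequence of environments reproducible across runs.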

Key components of this method include policy training through reinforcement learning, where robots learn tasks by maximizing a reward function prioritizing stability, manipulation accuracy, and task completion. Domain randomization during training involves simulating variations in lighting, textures, object properties, and other factors affecting real-world performance. The trained policy is then deployed on physical robots without additional adjustments, leveraging robustness from domain randomization.
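A reward that prioritizes stability, manipulation accuracy, and task completion is often written as a weighted sum of per-step terms. The sketch below shows one way this could be done; the weights, the scale constants, and the `StepInfo` fields are assumptions made for illustration and are not the reward used in the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class StepInfo:
    """Hypothetical per-step measurements reported by the simulator."""
    torso_tilt_rad: float        # deviation of the torso from upright
    end_effector_error_m: float  # distance between the hand and its target
    task_done: bool              # whether the task finished on this step


# Assumed weights; in practice these are tuned per task.
W_STABILITY = 1.0
W_ACCURACY = 1.0
W_COMPLETION = 5.0


def step_reward(info: StepInfo) -> float:
    # Each shaped term lies in (0, 1] and equals 1 when the error is zero.
    stability = math.exp(-(info.torso_tilt_rad ** 2) / 0.1)
    accuracy = math.exp(-info.end_effector_error_m / 0.05)
    completion = 1.0 if info.task_done else 0.0
    return (W_STABILITY * stability
            + W_ACCURACY * accuracy
            + W_COMPLETION * completion)


if __name__ == "__main__":
    print(step_reward(StepInfo(0.05, 0.02, False)))
```

The exponential shaping keeps the stability and accuracy terms bounded, so a single large error cannot dominate the sum, while the sparse completion bonus rewards finishing the task.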

This approach differs from traditional methods, which require extensive sim-to-real fine-tuning or rely on pre-programmed behaviors for specific tasks. The robot adapts more effectively to real-world conditions by learning through diverse simulated environments.

To validate this approach, researchers conducted experiments with a physical robot equipped with both locomotion and manipulation capabilities. The robot performed complex activities such as carrying objects while navigating uneven terrain, manipulating tools in cluttered environments, and maintaining balance during dynamic movements.

Results demonstrated high success rates even when faced with unexpected obstacles or environmental changes. For instance, the robot successfully carried a box over rough terrain, adjusting its gait and grip for stability. In another test, it used a tool to clear debris while moving forward, showcasing seamless integration of manipulation and locomotion.

These experiments highlight the potential for robots trained with domain-randomized policies to operate effectively in real-world scenarios, reducing reliance on pre-programmed behaviors or manual adjustments.

The integration of locomotion and manipulation represents a significant step toward creating versatile and practical robotic systems. By leveraging domain randomization and policy training, researchers have demonstrated that robots can perform complex tasks reliably across diverse environments. This approach reduces the need for extensive fine-tuning and opens new possibilities for deploying robots in logistics, construction, and search-and-rescue operations.

As robotics technology advances, these innovations will play a crucial role in enabling robots to operate more effectively alongside humans in dynamic environments.

👉 More information

🗞 Adversarial Locomotion and Motion Imitation for Humanoid Policy Learning

🧠 DOI: https://doi.org/10.48550/arXiv.2504.14305