Scientists are increasingly focused on understanding why large language models (LLMs) make mistakes, particularly on seemingly simple tasks demanding precision. Suvrat Raju and Praneeth Netrapalli have developed a new model explaining these errors, suggesting they stem from the accumulation of small inaccuracies that eventually exceed a critical threshold. This research is significant because it moves beyond attributing LLM failures to a ‘collapse of reasoning’, instead offering a quantitative relationship between task complexity and accuracy governed by just two key parameters: an elementary noise rate and the number of plausible, yet incorrect, token predictions. Through extensive testing with models like Gemini 2.5 Flash, Gemini 2.5 Pro and DeepSeek R1, the team demonstrate strong agreement between their model’s predictions and observed performance and, importantly, reveal methods for crafting prompts that minimise error rates.

LLM Errors Linked to Error Accumulation in Attention

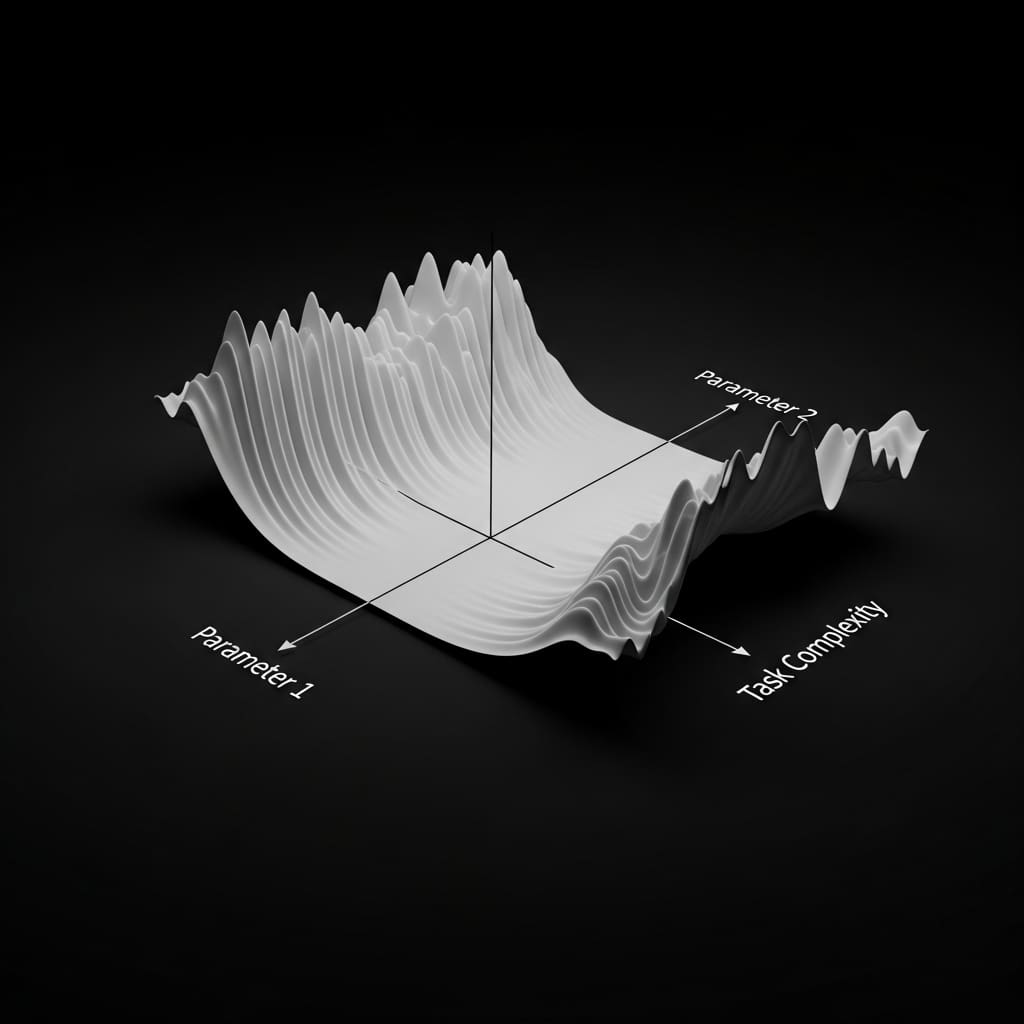

Scientists have demonstrated a novel quantitative model to predict error rates in Large Language Models (LLMs) when performing deterministic tasks requiring repetitive processing of tokens. Their central argument posits that these incorrect predictions stem from the accumulation of small errors within the attention mechanism, eventually exceeding a critical threshold. This insight led to the derivation of a two-parameter relationship linking accuracy to task complexity, offering a new perspective on LLM error analysis.

The team achieved excellent agreement between predicted and observed accuracy across a variety of tasks using Gemini 2.5 Flash, Gemini 2.5 Pro, and DeepSeek R1 models, with tests encompassing over 200,000 distinct prompts. The work takes an “effective field theory” approach, reorganising the vast number of LLM parameters into just two that govern the error rate. These parameters are interpretable as an elementary noise rate and the number of plausible erroneous tokens, simplifying the complex internal workings of these models. The study presents a quantitative formula, $a = \frac{1}{\Gamma(q/2)}\,\gamma\!\left(\frac{q}{2}, \frac{q}{2 r c^{2\alpha}}\right)$, in which accuracy ($a$) is linked to task complexity ($c$) through the lower incomplete gamma function $\gamma$, with $\alpha$ fixed at 1.
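To make the shape of this curve concrete, the following is a minimal Python sketch that evaluates the formula, assuming it reads a = γ(q/2, q/(2·r·c^(2α))) / Γ(q/2). Reading r as the elementary noise rate and q as the number of plausible erroneous tokens is an assumption made here for illustration, and the function names and sample values are hypothetical rather than taken from the paper.

```python
import math


def regularized_lower_gamma(s: float, x: float) -> float:
    """Return P(s, x) = gamma(s, x) / Gamma(s) using the power series
    P(s, x) = sum_k x**(s + k) * exp(-x) / Gamma(s + k + 1)."""
    if x <= 0.0:
        return 0.0
    total = 0.0
    k = 0
    while True:
        # Each term is computed in log space so large x does not overflow.
        log_term = (s + k) * math.log(x) - x - math.lgamma(s + k + 1)
        term = math.exp(log_term)
        total += term
        # Terms peak near k ~ x; stop once past the peak and negligible.
        if k > x and term <= 1e-17 * total:
            break
        k += 1
    return min(total, 1.0)


def predicted_accuracy(c: float, r: float, q: float, alpha: float = 1.0) -> float:
    """Accuracy at complexity c. ASSUMPTION: r is the noise rate and
    q the number of plausible erroneous tokens."""
    x = q / (2.0 * r * c ** (2.0 * alpha))
    return regularized_lower_gamma(q / 2.0, x)


if __name__ == "__main__":
    # Illustrative parameter values only, not fitted to any real model.
    for c in (2, 5, 10, 20, 40):
        print(c, round(predicted_accuracy(c, r=0.01, q=4), 4))
```

Under these assumptions the curve stays close to 1 at low complexity and falls towards 0 as c grows, which is the qualitative behaviour the article describes. If SciPy is available, `scipy.special.gammainc` computes the same regularised function.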

Extensive empirical tests, spanning eight different tasks, validated this model, although some deviations were noted, prompting further investigation into specific failure cases. The research establishes a compelling alternative to theories suggesting LLM errors on repetitive tasks indicate a “collapse of reasoning” or an inability to handle “compositional” functions. Experiments show that the model accurately characterises errors made by LLMs, providing a framework for understanding and potentially mitigating these issues. The team’s approach draws parallels with effective field theory in physics, where complex systems are described by a limited set of effective parameters, analogous to the reduction of LLM parameters to just two governing error rates.

This work opens avenues for applying techniques from the natural sciences to quantitatively study LLMs, moving beyond purely empirical observations. Furthermore, the researchers demonstrate how to construct prompts designed to reduce the error rate, offering a practical application of their findings. The study’s innovation lies in its ability to predict LLM performance with a remarkably simple formula, despite the models’ immense complexity. By focusing on the accumulation of errors in the attention mechanism, the researchers provide a mechanistic explanation for observed failures, rather than attributing them to broader cognitive limitations. This model not only accurately predicts error rates but also suggests a pathway for improving LLM reliability through prompt engineering and potentially architectural modifications. The work provides a new lens through which to view LLM errors, shifting the focus from reasoning failures to quantifiable noise and threshold effects within the attention mechanism, and paving the way for more robust and accurate language models.

LLM Error Rates via Noise and Complexity

Scientists investigated the error rates of large language models (LLMs) when performing arithmetic and repetitive tasks, hypothesising that inaccuracies stem from the accumulation of small errors exceeding a critical threshold. To test this idea, the research team developed a quantitative relationship between task complexity and model accuracy, characterised by two parameters: an elementary noise rate and the number of plausible erroneous tokens. Inspired by effective field theory, this framework reorganises the LLM’s vast parameter space into these two governing factors, enabling a simplified analysis of error propagation. Experiments employed Gemini 2.5 Flash, Gemini 2.5 Pro, and DeepSeek R1 models, subjecting them to systematically controlled arithmetic and repetition tasks of increasing complexity to validate the proposed scaling relationship.
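The threshold idea can be illustrated with a small Monte Carlo sketch. It rests on assumptions that are not confirmed by the source: the accumulated error is modelled as q independent Gaussian components, each with variance r·c^(2α)/q, and a prediction counts as wrong once the summed squared error exceeds a threshold of 1. The function name and parameter values are hypothetical.

```python
import math
import random


def simulate_accuracy(c: float, r: float, q: int, alpha: float = 1.0,
                      trials: int = 20000, seed: int = 0) -> float:
    """Fraction of trials whose accumulated squared error stays below 1.

    ASSUMPTION (illustrative only): q independent Gaussian error
    components, each with variance r * c**(2 * alpha) / q.
    """
    rng = random.Random(seed)
    sigma = math.sqrt(r * c ** (2.0 * alpha) / q)
    correct = 0
    for _ in range(trials):
        squared_error = sum(rng.gauss(0.0, sigma) ** 2 for _ in range(q))
        if squared_error <= 1.0:  # below the critical threshold: correct
            correct += 1
    return correct / trials


if __name__ == "__main__":
    # Illustrative parameter values only.
    for c in (5, 10, 20):
        print(c, simulate_accuracy(c, r=0.01, q=2))
```

With q = 2 the simulated accuracy should approach 1 − exp(−1/(r·c²)), because the scaled squared error then follows a chi-squared distribution with two degrees of freedom. This is a toy reading of the mechanism, not the authors' derivation.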

The research team measured error rates in LLMs on arithmetic problems, focusing on tasks that demand repetitive processing of tokens drawn from limited sets. Experiments revealed that incorrect predictions often stem from the accumulation of small errors exceeding a certain threshold, leading to the development of a two-parameter model governing accuracy. The study established a quantitative link between accuracy and task complexity, demonstrating that the two parameters defining this relationship correspond to an elementary noise rate and the number of plausible erroneous tokens. Tests using Gemini 2.5 Flash, Gemini 2.5 Pro, and DeepSeek R1 consistently showed excellent agreement between predicted and observed accuracy across various tasks, although some deviations were noted.
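As a rough illustration of what fitting a two-parameter curve to measured accuracies could look like, the sketch below runs a least-squares grid search. Several things here are assumptions: the data points are synthetic and generated from the formula itself (they are not measurements from any model), q is restricted to even integers so that the incomplete gamma function has a simple closed form, α is fixed at 1, and all names are hypothetical. The paper's actual fitting procedure is not described in this article.

```python
import math


def accuracy_even_q(c: float, r: float, q: int, alpha: float = 1.0) -> float:
    """Accuracy for even integer q, via the closed form
    P(n, x) = 1 - exp(-x) * sum_{k<n} x**k / k!  with n = q / 2."""
    n = q // 2
    x = q / (2.0 * r * c ** (2.0 * alpha))
    term, partial = 1.0, 0.0
    for k in range(n):
        partial += term        # adds x**k / k!
        term *= x / (k + 1)    # advances to x**(k+1) / (k+1)!
    return 1.0 - math.exp(-x) * partial


def fit_two_parameters(points, r_grid, q_grid):
    """Return the (r, q) pair on the grid with the smallest squared error."""
    best = None
    for r in r_grid:
        for q in q_grid:
            loss = sum((accuracy_even_q(c, r, q) - a) ** 2 for c, a in points)
            if best is None or loss < best[0]:
                best = (loss, r, q)
    return best[1], best[2]


# SYNTHETIC, noise-free data generated from the formula (not real results).
true_r, true_q = 0.004, 6
complexities = [2, 4, 8, 12, 16, 24, 32]
points = [(c, accuracy_even_q(c, true_r, true_q)) for c in complexities]

r_grid = [0.001 * k for k in range(1, 11)]
q_grid = [2, 4, 6, 8, 10]
fitted_r, fitted_q = fit_two_parameters(points, r_grid, q_grid)
print(fitted_r, fitted_q)
```

A grid search is used only because it is easy to follow. With real, noisy measurements a continuous optimiser and non-integer q would probably be more appropriate.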

For instance, in the dynamic programming task, the models exhibited accuracy that closely followed the expected curve, with measurements confirming the model’s predictive power. The team assessed performance on a list-finding task, observing that all models adhered to the anticipated accuracy curve as complexity, denoted by ‘c’, increased. Further investigations into the Tower of Hanoi problem, with a randomised disk labelling, showed that even with a provided algorithm, accuracy diminished with increasing ‘c’, mirroring findings in prior work. Measurements from vanilla addition tasks demonstrated that both Flash and DeepSeek models adhered to the derived formula, achieving high accuracy levels.

However, Gemini Pro failed to conform to this formula, suggesting a potential breakdown of the underlying assumptions of the model. To address this, researchers forced the model to follow a specific algorithmic approach, decomposing numbers into digits and manipulating them step-by-step. This refined approach yielded compelling results; all three models, Flash, DeepSeek, and Gemini Pro, now conformed to the established accuracy curve, bolstering the hypothesis that errors arise from accumulated random errors rather than a failure of reasoning. Binary addition tests, with complexity ‘c’ ranging up to 25, showed all models consistently following the predicted accuracy trend. Finally, multiplication tasks, involving a fixed four-digit number multiplied by varying lengths, also demonstrated strong adherence to the model, with measurements confirming its predictive capability and suggesting a pathway for improving LLM performance through focused attention mechanisms like “tag tokens”.
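The kind of digit-by-digit procedure described above can be sketched in Python as follows. This is only an illustration of schoolbook addition with a carry. It is not the prompt or algorithm the researchers gave to the models, and the function name is hypothetical.

```python
def add_digitwise(a: str, b: str, base: int = 10) -> str:
    """Add two non-negative numbers given as digit strings (base <= 10),
    processing one digit position per step and carrying as needed."""
    digits_a = [int(ch, base) for ch in reversed(a)]
    digits_b = [int(ch, base) for ch in reversed(b)]
    result, carry = [], 0
    for i in range(max(len(digits_a), len(digits_b))):
        da = digits_a[i] if i < len(digits_a) else 0
        db = digits_b[i] if i < len(digits_b) else 0
        # One elementary step: add two digits plus the incoming carry.
        carry, digit = divmod(da + db + carry, base)
        result.append(digit)
    if carry:
        result.append(carry)
    return "".join(str(d) for d in reversed(result)) or "0"


if __name__ == "__main__":
    print(add_digitwise("987", "45"))             # decimal addition
    print(add_digitwise("1011", "110", base=2))   # binary addition
```

Each loop iteration is a small, repetitive operation on a limited set of tokens. This is the setting in which the article's model expects small errors to accumulate as the inputs get longer.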

LLM Accuracy Linked to Noise and Complexity

Scientists have demonstrated a new understanding of error rates in large language models (LLMs) when performing tasks demanding precise, deterministic outputs, such as arithmetic. Researchers propose that errors accumulate and, once exceeding a specific threshold, lead to incorrect predictions. This insight has enabled the derivation of a quantitative relationship, defined by two parameters, linking accuracy to task complexity. These parameters reflect an inherent noise rate within the model and the number of plausible, yet incorrect, tokens it might predict. The study involved extensive testing using Gemini 2.5 Flash, Gemini 2.5 Pro, and DeepSeek R1, revealing strong alignment between predicted and observed accuracy across various tasks, though some discrepancies were noted.

This model offers an alternative explanation to theories suggesting that errors in long, repetitive tasks signify a “collapse of reasoning” or an inability to handle compositional functions. Furthermore, the team showcased methods for crafting prompts designed to minimise error rates. The authors acknowledge a limitation in assuming a continuous space for input and output sequences, which holds true for the studied problems but may not generalise universally. Future research could explore the model’s applicability to a wider range of tasks and investigate the impact of different model architectures on the identified parameters.

👉 More information

🗞 A model of errors in transformers

🧠 ArXiv: https://arxiv.org/abs/2601.14175