Fault detection in complex chemical processes represents a significant challenge for industrial safety and efficiency. Researchers Georgios Gravanis, Dimitrios Kyriakou, and Spyros Voutetakis, working in collaboration with colleagues from the Department of Information and Electronic Engineering and the Chemical Process and Energy Resources Institute at the International Hellenic University, Greece, alongside Simira Papadopoulou from the Department of Industrial Engineering and Management and Konstantinos Diamantaras, present a novel approach to enhance the transparency of fault diagnosis systems. Their work applies and compares two state-of-the-art explainable artificial intelligence (XAI) methods, Integrated Gradients and SHAP, to interpret the decisions made by a highly accurate Long Short-Term Memory (LSTM) classifier trained on the benchmark Tennessee Eastman Process (TEP). This research is significant because it demonstrates how XAI can help not only to explain a fault diagnosis but also to pinpoint the specific process subsystems responsible, offering crucial insights for improved process control and preventative maintenance.

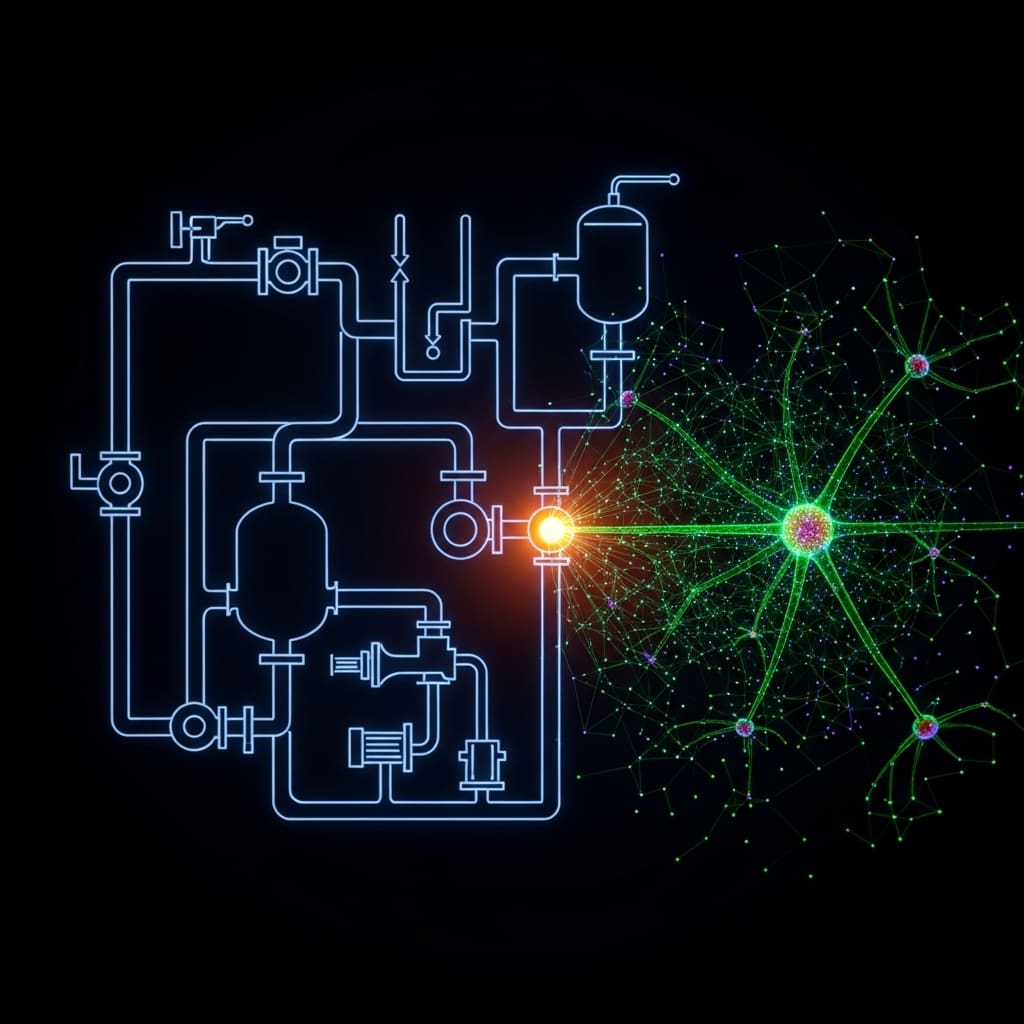

Better fault detection in chemical plants promises safer, more efficient production and reduced environmental impact. Understanding why a system flags an error is as important as detecting it, allowing engineers to quickly pinpoint and resolve problems. New techniques offer a way to open the ‘black box’ of complex process monitoring, revealing the underlying reasons for fault diagnoses.

Scientists are increasingly deploying deep learning models to optimise operations within industrial facilities, encompassing areas such as quality assurance, forecasting of production variables, and fault detection. A persistent challenge remains regarding the transparency of these models and the trustworthiness of the insights they generate for end users.

To address this, a growing field known as eXplainable Artificial Intelligence (XAI) has emerged, aiming to provide insight into the decision-making processes of complex algorithms. While XAI has been extensively explored in image classification and natural language processing, its application to deep learning models handling multivariate time series data, particularly within chemical processes, has received comparatively little attention.

The research focuses on enhancing the reliability of fault detection systems in complex industrial settings. Understanding why a classifier arrives at a particular decision is central to building confidence in its predictions. Unlike previous work, which often concentrates on easily detectable faults, this investigation delves into more ambiguous scenarios.

The research seeks to establish a connection between the machine learning model’s decisions and the physical interpretation of each fault, validating the plausibility of the explanations provided. The central questions guiding the work are whether the XAI methods consistently agree on the most important variables and whether the resulting explanations align with established knowledge of the chemical process itself.

By comparing Integrated Gradients (IG) and SHAP, the researchers aimed to determine which method provides more informative insights into the root causes of faults. A highly accurate LSTM classifier served as the foundation for applying the explainability techniques, which were used to gain insight into its decision-making process. The Tennessee Eastman Process (TEP) was chosen due to its established complexity and availability, providing a realistic scenario for fault diagnosis research.

Training involved feeding the LSTM classifier historical process data, enabling it to learn patterns indicative of normal operation and deviations signalling faults. Then, two state-of-the-art eXplainability (XAI) methods, Integrated Gradients (IG) and SHapley Additive exPlanations (SHAP), were implemented to interpret the LSTM classifier’s outputs.
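The article does not describe how the historical data were prepared for the LSTM. A common approach for multivariate time series, shown here only as a minimal sketch under that assumption, is to slice the recordings into overlapping windows and label each window by its final time step. The function name `make_windows`, the window length, and the toy data are all hypothetical.

```python
import numpy as np

def make_windows(series, labels, window):
    """Slice a (time, variables) array into overlapping windows.

    Each window is labelled with the label of its final time step.
    """
    series = np.asarray(series, dtype=float)
    labels = np.asarray(labels)
    n_steps = series.shape[0]
    if window > n_steps:
        raise ValueError("window is longer than the series")
    starts = range(n_steps - window + 1)
    x = np.stack([series[s:s + window] for s in starts])
    y = labels[window - 1:]
    return x, y

# Toy data (made up): 6 time steps, 2 process variables.
series = np.arange(12).reshape(6, 2)
labels = np.array([0, 0, 0, 1, 1, 1])  # 0 = normal, 1 = fault
x, y = make_windows(series, labels, window=3)
print(x.shape, y)
```

The resulting array has the (samples, time steps, variables) layout that recurrent classifiers typically expect.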

IG calculates the gradient of the output with respect to the input features, attributing importance based on the integral of these gradients along a path from a baseline input to the actual input. SHAP, conversely, uses concepts from game theory to assign each feature a value representing its contribution to the prediction. Applying both methods allowed for a comparative analysis of their strengths and weaknesses in this specific application.
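The path integral behind IG can be illustrated with a small sketch. This is not the authors' implementation: the toy sigmoid scorer, its weights, the all-zeros baseline, and the number of integration steps are assumptions chosen so the example stays self-contained. The attribution for feature i is (x_i - baseline_i) multiplied by the average gradient along the straight line from the baseline to x.

```python
import numpy as np

def model(x, w, b):
    """Toy differentiable scorer: sigmoid of a linear function."""
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))

def model_grad(x, w, b):
    """Analytic gradient of the toy scorer with respect to x."""
    prob = model(x, w, b)
    return prob * (1.0 - prob) * w

def integrated_gradients(x, baseline, w, b, steps=200):
    """Midpoint Riemann-sum approximation of Integrated Gradients."""
    alphas = (np.arange(steps) + 0.5) / steps
    # Points on the straight line from the baseline to the input.
    path = baseline + alphas[:, None] * (x - baseline)
    grads = np.stack([model_grad(point, w, b) for point in path])
    return (x - baseline) * grads.mean(axis=0)

# Hypothetical weights and input, for illustration only.
w = np.array([2.0, -1.0, 0.5])
b = 0.1
x = np.array([1.0, 0.5, -2.0])
baseline = np.zeros(3)

attributions = integrated_gradients(x, baseline, w, b)
# Completeness check: attributions should sum to F(x) - F(baseline),
# up to numerical integration error.
gap = attributions.sum() - (model(x, w, b) - model(baseline, w, b))
print(attributions, gap)
```

For a real LSTM the gradients would come from automatic differentiation rather than a hand-written formula, and the choice of baseline is itself a modelling decision.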

The selection of these XAI techniques was deliberate, providing flexibility beyond the LSTM classifier. Neither is tied to a specific architecture: IG can be applied to any differentiable model, and SHAP, in its model-agnostic variants, to any trained machine learning model, broadening the potential impact of this work. Data from the TEP, encompassing a range of process variables, was then fed into both XAI methods alongside the trained LSTM classifier.
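SHAP builds on Shapley values, which only require the ability to query a model's predictions. The sketch below computes exact Shapley values by enumerating every feature coalition for a toy black-box function. It is an illustration under stated assumptions, not the SHAP library or the authors' setup: the `predict` function, the input, and the convention of replacing absent features with a zero baseline are hypothetical, and practical SHAP implementations rely on approximations because this enumeration grows as 2^n.

```python
import itertools
import math
import numpy as np

def shapley_values(predict, x, baseline):
    """Exact Shapley values by enumerating all feature coalitions.

    Features outside a coalition are replaced by their baseline value.
    """
    n = len(x)
    values = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = (math.factorial(size) * math.factorial(n - size - 1)
                      / math.factorial(n))
            for subset in itertools.combinations(others, size):
                idx = np.array(subset, dtype=int)
                without_i = baseline.copy()
                without_i[idx] = x[idx]
                with_i = without_i.copy()
                with_i[i] = x[i]
                values[i] += weight * (predict(with_i) - predict(without_i))
    return values

def predict(v):
    # Toy black-box model (made up) with an interaction between features 1 and 2.
    return 2.0 * v[0] + v[1] * v[2]

x = np.array([1.0, 2.0, 3.0])
baseline = np.zeros(3)
phi = shapley_values(predict, x, baseline)
print(phi)
```

In this toy case the interaction term is split equally between the two features that produce it, and the values sum to the difference between the prediction at `x` and at the baseline.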

This configuration enabled the generation of feature importance scores for each fault scenario. To validate the plausibility of the explanations, the identified important features were compared against established knowledge of the TEP. Since the process is well-documented, researchers could assess whether the XAI methods correctly pinpointed the subsystems and variables most affected by each fault.
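One way such a plausibility check could be set up is sketched below. The article does not say how the comparison was scored, so this is an assumption: attributions are averaged in absolute value over samples and time steps, the top-ranked variables are selected, and the fraction of expert-expected variables among them is reported. The variable names, attribution values, and expected set are invented for illustration and are not taken from the TEP documentation.

```python
import numpy as np

def top_k_variables(attributions, names, k):
    """Rank variables by mean absolute attribution over samples and time."""
    importance = np.abs(attributions).mean(axis=(0, 1))
    order = np.argsort(importance)[::-1][:k]
    return [names[i] for i in order]

def hit_rate(top_vars, expected_vars):
    """Fraction of expert-expected variables found among the top-ranked ones."""
    expected = set(expected_vars)
    return len(expected & set(top_vars)) / len(expected)

# Hypothetical variable names and attributions, shape (samples, time, variables).
names = ["reactor_temp", "reactor_pressure", "feed_flow", "stripper_level"]
attr = np.zeros((2, 3, 4))
attr[..., 0] = 0.9
attr[..., 1] = -0.6
attr[..., 2] = 0.1
attr[..., 3] = 0.05

top = top_k_variables(attr, names, k=2)
score = hit_rate(top, ["reactor_temp", "feed_flow"])
print(top, score)
```

Taking absolute values before averaging keeps positive and negative attributions from cancelling out across time steps.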

The research team focused on ambiguous fault scenarios, where standard fault detection frameworks often struggle. These cases present a greater challenge for XAI methods, demanding a higher degree of accuracy in identifying the root causes of the faults. Both IG and SHAP were applied to these cases to interpret the decisions of the LSTM classifier trained on the TEP benchmark.

Initial assessments focused on identifying which process variables most influenced the fault classifications. In many instances, the XAI methods converged on similar key features, suggesting consistent reasoning behind the model’s predictions. Yet, discrepancies emerged when examining more ambiguous fault scenarios. Here, the SHAP method frequently provided insights more closely aligned with the underlying physical causes of the faults, as understood by process experts.

For example, when diagnosing specific disturbances, SHAP consistently highlighted variables directly involved in the fault mechanism, while IG sometimes indicated less directly related factors. At times, the difference in feature attribution between the two methods was substantial, suggesting that SHAP may offer more useful diagnostic insight in these cases. Evaluating the plausibility of the explanations against known process behaviour was a central aspect of the work.

By comparing the XAI-derived feature importance with established chemical engineering principles, researchers validated the trustworthiness of the model’s reasoning. The study then focused on ambiguous results, explaining the decisions of a fault detection and diagnosis system applied to a complex chemical process. The research did not limit itself to easily detectable faults; instead, it concentrated on scenarios where accurate diagnosis is challenging.

Beyond identifying important features, the study also investigated the agreement between the IG and SHAP methods. The two methods often agreed on the most important variables, but the magnitude of the importance scores could differ, and the degree of agreement varied depending on the specific fault. By comparing the two XAI methods, the work provides a means to establish trust between end users and machine learning model decisions.
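Agreement between two attribution methods can be quantified in several ways, and the article does not state which measure was used. As a hedged sketch, the example below computes the Jaccard overlap of the top-k variables and a Spearman rank correlation of the absolute scores. The score vectors are made up, and the simple ranking assumes there are no tied scores.

```python
import numpy as np

def top_k_jaccard(a, b, k):
    """Jaccard overlap between the top-k features of two score vectors."""
    top_a = set(np.argsort(np.abs(a))[::-1][:k].tolist())
    top_b = set(np.argsort(np.abs(b))[::-1][:k].tolist())
    return len(top_a & top_b) / len(top_a | top_b)

def spearman(a, b):
    """Spearman rank correlation of absolute scores (assumes no ties)."""
    rank_a = np.argsort(np.argsort(np.abs(a)))
    rank_b = np.argsort(np.argsort(np.abs(b)))
    return float(np.corrcoef(rank_a, rank_b)[0, 1])

# Hypothetical per-variable importance scores from the two methods.
ig_scores = np.array([0.50, 0.30, 0.15, 0.05])
shap_scores = np.array([0.20, 0.45, 0.25, 0.10])

overlap = top_k_jaccard(ig_scores, shap_scores, k=2)
rho = spearman(ig_scores, shap_scores)
print(overlap, rho)
```

The two measures capture different things: top-k overlap reflects whether the methods name the same key variables, while rank correlation also reflects how the remaining variables are ordered.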

Explaining machine learning decisions improves fault diagnosis in complex industrial processes

Scientists are increasingly reliant on complex machine learning models to monitor and control critical infrastructure, yet understanding why these models make specific decisions remains a substantial hurdle. This research addresses that challenge by applying explainable AI techniques to the notoriously difficult problem of fault diagnosis in chemical plants.

For years, identifying the root cause of process upsets has demanded expert knowledge and painstaking analysis, often relying on human operators to interpret vast streams of sensor data. Automated systems offer speed and scale, but lack transparency, creating a reluctance to fully trust their outputs. Comparisons between two prominent explanation methods, Integrated Gradients and SHAP, reveal subtle differences in their ability to pinpoint the source of problems within a simulated chemical plant.

Although both techniques successfully highlighted relevant process variables, SHAP sometimes provided a more accurate connection to the underlying fault. This work is part of a wider move towards building trust in AI systems used in safety-critical applications. Further research should focus on developing objective metrics for evaluating explanation quality, independent of human interpretation. Such metrics could unlock wider adoption of these tools, not just in chemical engineering, but across any field where opaque AI systems are making high-stakes decisions.

👉 More information

🗞 Explainability for Fault Detection System in Chemical Processes

🧠 ArXiv: https://arxiv.org/abs/2602.16341