Scientists are developing new methods to improve the automated analysis of whole-slide images (WSIs) used in cancer diagnosis and treatment monitoring. Lucas Sancéré from the Faculty of Mathematics and Natural Sciences, University of Cologne, and Noémie Moreau and Katarzyna Bozek from the Institute for Biomedical Informatics, Faculty of Medicine and University Hospital Cologne, University of Cologne, present a novel approach that uses scalable Graph Transformers to classify epithelial cells in WSIs of cutaneous squamous cell carcinoma (cSCC). This research addresses a significant limitation of current convolutional neural network and Vision Transformer methods, which often lose crucial tissue-level context when analysing large, complex WSIs through patch-based representations. By constructing cell graphs from full WSIs and incorporating morphological, textural, and contextual information from surrounding cells, the team demonstrate substantially improved classification, with their DIFFormer model achieving higher balanced accuracy than state-of-the-art image-based methods such as CellViT256.

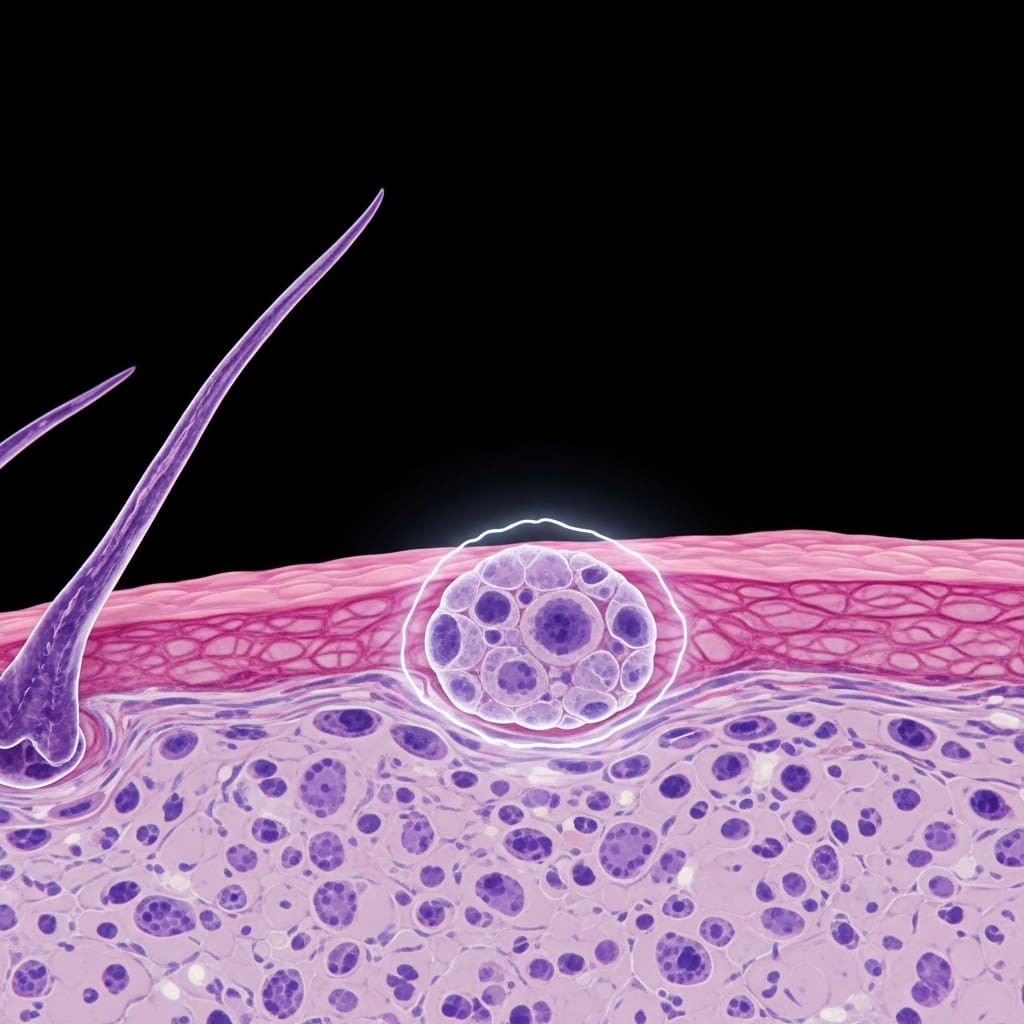

Scientists are developing artificial intelligence to improve the diagnosis of skin cancer, a disease in which early detection dramatically improves outcomes. Current methods struggle with the complexity of tissue samples and often overlook vital contextual information. This work centres on differentiating between healthy and tumour epithelial cells in cutaneous squamous cell carcinoma (cSCC), a common skin cancer. Distinguishing these cell types is difficult because they look similar, which limits the effectiveness of image-based approaches.

Initial experiments compared image-based and graph-based methods on a single WSI. The Graph Transformer models SGFormer and DIFFormer achieved balanced accuracies of 85.2 ±1.5 and 85.1 ±2.5 percent respectively in 3-fold cross-validation, outperforming the best image-based method, which reached 81.2 ±3.0 percent. Further analysis identified the most informative node features: combining morphological and texture characteristics with the cell classes of surrounding non-epithelial cells gave the best performance, highlighting the importance of the broader cellular environment.
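Balanced accuracy, the metric quoted throughout, is conventionally defined as the mean of the per-class recalls, so a model cannot score well simply by predicting the majority class. The short Python sketch below illustrates that standard definition on invented toy labels (0 for healthy, 1 for tumour epithelial cells); the function name and the data are hypothetical and not taken from the paper.

```python
def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall over the classes present in y_true."""
    recalls = []
    for c in sorted(set(y_true)):
        # Indices of samples whose true label is c
        idx = [i for i, t in enumerate(y_true) if t == c]
        correct = sum(1 for i in idx if y_pred[i] == c)
        recalls.append(correct / len(idx))
    return sum(recalls) / len(recalls)

# Hypothetical toy labels: 0 = healthy epithelial, 1 = tumour epithelial
y_true = [0] * 90 + [1] * 10
y_pred = [0] * 90 + [1] * 5 + [0] * 5

plain_accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(plain_accuracy, balanced_accuracy(y_true, y_pred))
```

On these toy labels plain accuracy is 0.95, while balanced accuracy drops to 0.75 because half of the minority tumour cells are missed.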

Extending this research to multiple WSIs from several patients presented computational hurdles, prompting the extraction of four 2560 × 2560 pixel patches from each image and their conversion into graphs. In this setting, DIFFormer achieved a balanced accuracy of 83.6 ±1.9, while the state-of-the-art image-based model CellViT256 reached 78.1 ±0.5. Notably, DIFFormer training proved substantially faster, completing a single cross-validation fold in just 32 minutes compared to the approximately five days required for CellViT256.

These findings suggest that graph-based approaches offer a compelling alternative to conventional computer vision techniques for object classification, particularly for classifying cells within complex tissue samples. The WSIs used in the study are digitised slides of tissue stained with haematoxylin and eosin (H&E), which remains the standard stain for routine tissue examination.

SGFormer and DIFFormer models surpass CellViT256 in cutaneous squamous cell carcinoma classification

Balanced accuracies of 86.2 ±1.1 and 83.6 ±1.9 percent were achieved using the SGFormer and DIFFormer models respectively in 3-fold cross-validation. These results represent a substantial improvement over the 78.1 ±0.5 percent balanced accuracy attained by the state-of-the-art image-based CellViT256 model on the same classification task. The work focused on distinguishing between healthy and tumour epithelial cells within cutaneous squamous cell carcinoma (cSCC), a notoriously difficult task because the two cell types have similar morphologies.

Achieving over 83 percent balanced accuracy demonstrates the potential of graph-based approaches to capture subtle tissue-level context that conventional image analysis often misses. Yet computational demands remain a significant hurdle for full-WSI analysis. To address this, the researchers extracted four 2560 × 2560 pixel patches from each image and converted these into graphs, a configuration termed “TILE-Graphs”.
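The article does not say how the four tiles are chosen or how cells are assigned to them. As a minimal sketch under that uncertainty, the Python below groups segmented cells by the 2560 × 2560 tile that contains their centroid, so that each tile's cells could then be turned into a separate graph; the function name, the tile origins and the centroid coordinates are all hypothetical.

```python
from collections import defaultdict

TILE = 2560  # tile side length in pixels, as stated in the article

def assign_cells_to_tiles(centroids, tile_origins, tile_size=TILE):
    """Group cell indices by the tile whose square contains the cell centroid.

    centroids: (x, y) pixel coordinates of segmented cells (hypothetical input)
    tile_origins: (x0, y0) top-left corners of the extracted tiles
    Cells outside every tile are dropped.
    """
    tiles = defaultdict(list)
    for i, (x, y) in enumerate(centroids):
        for t, (x0, y0) in enumerate(tile_origins):
            if x0 <= x < x0 + tile_size and y0 <= y < y0 + tile_size:
                tiles[t].append(i)
                break  # tiles are assumed not to overlap
    return dict(tiles)

# Hypothetical slide: four tile origins and a handful of cell centroids
origins = [(0, 0), (2560, 0), (0, 2560), (2560, 2560)]
cells = [(100, 200), (3000, 50), (500, 4000), (5000, 5000), (9000, 9000)]
print(assign_cells_to_tiles(cells, origins))
```

Here the last cell falls outside all four tiles and is discarded, which is exactly the kind of information loss that restricting the analysis to tiles can introduce.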

In this setting, DIFFormer still outperformed CellViT256, reaching 83.6 ±1.9 percent balanced accuracy compared with 78.1 ±0.5 percent for the image-based model. This suggests that even with reduced image data, the graph-based methodology retains a performance advantage. In terms of training efficiency, DIFFormer completed a single cross-validation fold in just 32 minutes, while CellViT256 required approximately five days for the same fold, a difference of roughly 225 times.
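The size of the speed-up follows from simple arithmetic. Taking "approximately five days" as exactly five days (an assumption, since the article gives only an approximate figure), one fold of CellViT256 training corresponds to 7,200 minutes:

```python
# Per-fold training times reported in the article; five days is treated as exact here.
cellvit256_minutes = 5 * 24 * 60  # approximately five days = 7200 minutes
difformer_minutes = 32

speedup = cellvit256_minutes / difformer_minutes
print(f"DIFFormer trains about {speedup:.0f} times faster per fold")
```

Because the five-day figure is itself approximate, the ratio is best read as an order of magnitude rather than a precise value.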

This dramatic reduction in training time reflects both the compact graph representation, in which each cell becomes a single node rather than many pixels, and the scalable Graph Transformer architecture, which is designed to process large graphs efficiently. By evaluating several node feature configurations, the study found that combining morphological and texture features with the cell classes of non-epithelial cells provided the most informative representation, highlighting the importance of the surrounding cellular context for accurate classification.
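Scalable Graph Transformers avoid the quadratic cost of standard self-attention, which compares every node with every other node. The Python sketch below is loosely modelled on the simple attention variant described for DIFFormer, where the weight between nodes i and j is 1 + q_i · k_j with L2-normalised queries and keys. Because that weight is linear in the dot product, the sums over all nodes can be computed once and reused, so the cost grows linearly with the number of cells. It illustrates the idea only and is not the authors' implementation: learned projections, multiple layers and the local graph-propagation part of the real models are omitted, and all names are hypothetical.

```python
import math
import random

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v)) or 1.0  # leave an all-zero vector unchanged
    return [x / norm for x in v]

def naive_attention(Q, K, V):
    """Reference version: explicit N x N weights 1 + q_i.k_j, O(N^2) in the node count."""
    Qn, Kn = [l2_normalize(q) for q in Q], [l2_normalize(k) for k in K]
    out = []
    for q in Qn:
        w = [1 + sum(a * b for a, b in zip(q, k)) for k in Kn]
        total = sum(w)
        out.append([sum(wj * v[c] for wj, v in zip(w, V)) / total for c in range(len(V[0]))])
    return out

def linear_attention(Q, K, V):
    """Same result, but the sums over j are computed once and shared: O(N) in the node count."""
    Qn, Kn = [l2_normalize(q) for q in Q], [l2_normalize(k) for k in K]
    n, d, dv = len(V), len(Kn[0]), len(V[0])
    v_sum = [sum(v[c] for v in V) for c in range(dv)]            # sum_j v_j
    k_sum = [sum(k[a] for k in Kn) for a in range(d)]             # sum_j k_j
    kv = [[sum(k[a] * v[c] for k, v in zip(Kn, V)) for c in range(dv)]
          for a in range(d)]                                      # sum_j k_j v_j^T (d x dv)
    out = []
    for q in Qn:
        # numerator: sum_j (1 + q.k_j) v_j = sum_j v_j + q^T (sum_j k_j v_j^T)
        num = [v_sum[c] + sum(q[a] * kv[a][c] for a in range(d)) for c in range(dv)]
        # denominator: sum_j (1 + q.k_j) = N + q.(sum_j k_j)
        den = n + sum(q[a] * k_sum[a] for a in range(d))
        out.append([x / den for x in num])
    return out

# Usage: random toy node features for six "cells"; both versions should agree.
random.seed(0)
Q = [[random.gauss(0, 1) for _ in range(3)] for _ in range(6)]
K = [[random.gauss(0, 1) for _ in range(3)] for _ in range(6)]
V = [[random.gauss(0, 1) for _ in range(2)] for _ in range(6)]
fast, slow = linear_attention(Q, K, V), naive_attention(Q, K, V)
print(max(abs(a - b) for r1, r2 in zip(fast, slow) for a, b in zip(r1, r2)))
```

The printed difference should be at floating-point round-off level, confirming that reordering the sums changes the cost but not the result.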

Construction and evaluation of cell graphs from whole-slide images for cancer classification

The analysis starts from whole-slide images (WSIs) of tissue from cancer patients, which are routinely acquired for diagnosis and treatment monitoring. To move beyond traditional image analysis, the research team constructed cell graphs directly from these WSIs, representing each cell as a node and spatial relationships between cells as edges. This approach contrasts with methods that first divide the image into patches, potentially losing valuable tissue-level information.
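The article describes cells as nodes and spatial relationships as edges but does not specify the edge rule. One common choice, assumed here purely for illustration, is to connect each cell to its k nearest neighbours by centroid distance. The brute-force Python sketch below builds such an undirected graph; the function name, the value of k and the toy coordinates are hypothetical.

```python
import math

def knn_cell_graph(centroids, k=5):
    """Build an undirected k-nearest-neighbour graph over cell centroids.

    Brute force, O(N^2): a real WSI with hundreds of thousands of cells would
    need a spatial index such as a k-d tree instead.
    Returns a set of edges (i, j) with i < j.
    """
    edges = set()
    for i, (xi, yi) in enumerate(centroids):
        # Distances to every other cell; ties are broken by cell index
        dists = sorted(
            (math.hypot(xi - xj, yi - yj), j)
            for j, (xj, yj) in enumerate(centroids) if j != i
        )
        for _, j in dists[:k]:
            edges.add((min(i, j), max(i, j)))  # store each undirected edge once
    return edges

# Hypothetical centroids: two small clusters of cells far apart
cells = [(0, 0), (10, 0), (0, 10), (100, 100), (110, 100)]
print(sorted(knn_cell_graph(cells, k=1)))
```

With k = 1 the two clusters stay disconnected, which shows how the choice of edge rule controls how far contextual information can travel through the graph.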

Building these graphs requires the cells in each WSI to be accurately segmented and identified first. The team then evaluated two Graph Transformer models, SGFormer and DIFFormer, alongside a leading image-based classification method on a single WSI. These models operate directly on the cell graph: each node represents an individual cell and carries its own feature vector, and the models also take the connections between cells into account.

Node features incorporated not only the cell’s morphology and texture but also the classification of surrounding non-epithelial cells, acknowledging the importance of cellular context. This careful feature engineering aimed to improve the model’s ability to distinguish between healthy and cancerous epithelial cells in cutaneous squamous cell carcinoma, a particularly difficult task due to their similar appearances.
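To make the three kinds of node feature concrete, the Python sketch below assembles a feature vector for one cell from its outline (area, perimeter, circularity), simple intensity statistics as a crude stand-in for texture, and the class composition of its non-epithelial neighbours. The paper's actual feature set, texture descriptors and cell-class labels are not given in this article, so the label set, the function names and the use of neighbour-class fractions are assumptions.

```python
import math
from statistics import mean, pstdev

# Hypothetical label set for non-epithelial neighbours; the article does not list it.
CONTEXT_CLASSES = ["immune", "stromal", "other"]

def polygon_area_perimeter(contour):
    """Shoelace area and perimeter of a closed cell outline given as (x, y) vertices."""
    area2, perim = 0.0, 0.0
    for (x1, y1), (x2, y2) in zip(contour, contour[1:] + contour[:1]):
        area2 += x1 * y2 - x2 * y1
        perim += math.hypot(x2 - x1, y2 - y1)
    return abs(area2) / 2, perim

def node_features(contour, intensities, neighbour_classes):
    """Concatenate morphology, intensity statistics and neighbour context.

    contour: outline vertices; intensities: grey values of the cell's pixels;
    neighbour_classes: labels of the cell's graph neighbours.
    """
    area, perim = polygon_area_perimeter(contour)
    circularity = 4 * math.pi * area / perim ** 2 if perim else 0.0  # 1.0 for a perfect circle
    texture = [mean(intensities), pstdev(intensities)]  # crude stand-in for texture descriptors
    # Fraction of neighbours in each non-epithelial class; epithelial neighbours are ignored.
    counts = [neighbour_classes.count(c) for c in CONTEXT_CLASSES]
    total = sum(counts)
    context = [c / total if total else 0.0 for c in counts]
    return [area, perim, circularity] + texture + context

# Hypothetical cell: a 4 x 4 square outline with four pixel intensities
square = [(0, 0), (4, 0), (4, 4), (0, 4)]
print(node_features(square, [100, 120, 140, 160], ["immune", "immune", "stromal", "epithelial"]))
```

Normalising the neighbour counts to fractions keeps the context features comparable between cells with many and few neighbours; raw counts would be an equally plausible alternative.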

Yet computational demands remained a concern when scaling this approach to multiple WSIs from several patients. To address this, the team extracted four 2560 × 2560 pixel patches from each WSI before converting them into graphs, trading some whole-slide context for a manageable computational load. In this configuration, DIFFormer delivered not only competitive accuracy but also a substantial reduction in training time: approximately 32 minutes per cross-validation fold, compared with about five days for the CellViT256 image-based model.

For years, the analysis of whole-slide tissue images has been hampered by their sheer size and complexity. Researchers have been forced to work on smaller, isolated patches, inevitably losing information about how cells interact and organise themselves within the broader tissue structure, which limits diagnostic accuracy.

By representing the image as a graph, where cells are nodes and their relationships are edges, researchers can now consider the tissue as a whole, rather than a collection of parts. Yet, scaling these graph-based methods to handle the enormous datasets generated by modern pathology is a considerable challenge. Importantly, the gains weren’t simply about better algorithms; the team found that incorporating information about neighbouring, non-epithelial cells significantly enhanced performance, revealing the importance of contextual awareness.

Still, limitations remain. While the reported improvements are encouraging, the performance boost achieved when working with full slides versus extracted patches requires further investigation. The reduction in computational burden through patch extraction, while practical, may also introduce a degree of information loss that needs careful consideration.

Beyond this specific cancer type, the generalisability of this approach to other histological images and diseases is an open question that will require testing across diverse datasets. The field could see a move towards more holistic image analysis, integrating graph-based methods with other AI techniques. Future work might explore how these models can be used not just for classification, but also for predicting treatment response or identifying novel biomarkers. Ultimately, the goal is to create AI systems that can assist pathologists in making faster, more accurate diagnoses, improving patient outcomes and easing the burden on healthcare systems.

👉 More information

🗞 Context-aware Skin Cancer Epithelial Cell Classification with Scalable Graph Transformers

🧠 ArXiv: https://arxiv.org/abs/2602.15783