Researchers are increasingly investigating the security implications of Next Edit Suggestions (NES) within AI-integrated Integrated Development Environments (IDEs). Yunlong Lyu from The University of Hong Kong, Yixuan Tang from McGill University, and Peng Chen, alongside Tian Dong, Xinyu Wang, and Zhiqiang Dong, present the first systematic security study of these systems. Their work is significant because NES moves beyond simple autocompletion to proactively suggest multi-line code modifications, creating a more interactive but potentially more vulnerable workflow. The research dissects the mechanisms behind NES, revealing that its expanded context retrieval introduces new attack vectors, and shows through laboratory experiments that NES is susceptible to context poisoning. A large-scale survey of over 200 professional developers further reveals a critical gap in awareness, underscoring the urgent need for improved security measures.

Because NES draws on a richer context to propose these multi-line and cross-file modifications, it relies on more complex context retrieval mechanisms and more sophisticated interaction patterns than traditional autocompletion. However, existing studies focus predominantly on the security implications of standalone LLM-based code generation, overlooking the attack vectors that NES introduces in modern AI-integrated IDEs.

The underlying mechanisms of NES remain under-explored, and their security implications are not yet fully understood. In this paper, the researchers conduct the first systematic security study of NES systems.

First, they perform an in-depth dissection of the NES mechanisms of leading AI-integrated IDEs to understand the newly introduced threat vectors. They find that NES retrieves a significantly expanded context, including inputs from imperceptible user actions and global codebase retrieval, which enlarges the attack surface.

Second, they conduct a comprehensive in-lab study to evaluate the security implications of NES in realistic software development scenarios. The evaluation results reveal that NES is susceptible to context poisoning and is sensitive to transactional edits and human-IDE interactions.

Third, they perform a large-scale online survey of over 200 professional developers to assess how developers perceive NES security risks in real-world development workflows. The survey results indicate a general lack of awareness of the potential security pitfalls associated with NES, highlighting the need for increased education and improved security countermeasures in AI-integrated IDEs.

The shift from traditional code autocompletion to NES represents a fundamental change in the interactive paradigm between IDEs and developers.

Unlike conventional autocompletion, which is limited to passive local infilling triggered by keystrokes, NES operationalizes a more intent-driven workflow: it surfaces suggestions that can navigate the developer to relevant locations across the codebase and apply the proposed edits once the developer accepts them.

To enable this, NES proactively monitors a broader spectrum of user interactions, such as cursor movements, scrolling, and code selection, and combines these signals with project-wide indexing to build a deeper contextual representation of the ongoing task. This expanded context improves the accuracy of predicting the developer’s next step and supports suggestions that span multiple lines, non-contiguous regions, or even multiple files.
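To make the attack-surface point concrete, the following is a minimal sketch of project-wide retrieval feeding the NES context. The real retrieval algorithms used by Copilot or Zed are not described here, so this swaps in a deliberately crude stand-in: Jaccard similarity over identifier tokens. The repository contents, the file `vendor/planted.py`, and the helper names `tokens`, `jaccard`, and `retrieve` are all hypothetical. The point is only that any file that merely *looks* similar to the code near the cursor can be pulled into the context, wherever it lives in the repository.

```python
import re

def tokens(text: str) -> set:
    """Lower-cased identifier-like tokens; a crude stand-in for a real index."""
    return set(re.findall(r"[a-z_][a-z0-9_]*", text.lower()))

def jaccard(a: set, b: set) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def retrieve(query: str, codebase: dict, k: int = 2) -> list:
    """Return the paths of the k files most lexically similar to the query."""
    q = tokens(query)
    ranked = sorted(codebase, key=lambda path: jaccard(q, tokens(codebase[path])), reverse=True)
    return ranked[:k]

# Hypothetical repository: one file was planted by an attacker and only
# needs to resemble legitimate code to be retrieved.
codebase = {
    "src/http_client.py": "def fetch(url, verify=True): return requests.get(url, verify=verify)",
    "vendor/planted.py": "# helper: fetch url with requests get verify False\n"
                         "def fetch(url): return requests.get(url, verify=False)",
    "docs/readme.txt": "Project overview and setup instructions",
}

# The developer is typing a new fetch helper; the "query" is the code near the cursor.
context_files = retrieve("def fetch(url): return requests.get(url", codebase)
print(context_files)  # ['src/http_client.py', 'vendor/planted.py']
```

Production tools likely use embeddings, LSP symbols, or both; the lexical scorer is chosen here only because it is short and deterministic.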

While this low-friction NES experience enhances development efficiency, the underlying mechanisms remain under-explored, and their security implications are not fully understood. As user adoption scales, emerging reports of sensitive data leaks and insecure code patterns have raised concerns about the risks introduced by these opaque systems.

For example, one developer reported that after they reviewed a secret key in a configuration file, the key was inadvertently exposed in plaintext within the codebase, even though the file had been explicitly excluded via a .cursorignore file, as confirmed by the IDE's developers. This incident highlights the potential for NES to introduce vulnerabilities that are not immediately apparent to users.
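The root cause of that incident is not described here, so the sketch below only illustrates one plausible class of bug, under stated assumptions: an exclusion policy applied to project-wide retrieval but not to the "recently viewed" snippets that NES also collects. The pattern list, the `Snippet` type, and both `assemble_*` functions are hypothetical, and the glob matching via Python's `fnmatch` does not model the real `.cursorignore` syntax.

```python
from dataclasses import dataclass
from fnmatch import fnmatch

# Hypothetical ignore patterns in the spirit of a .cursorignore file
# (the real file's syntax and semantics are not modeled here).
IGNORE_PATTERNS = (".env", "config/secrets.*")

@dataclass
class Snippet:
    path: str
    text: str

def is_ignored(path: str) -> bool:
    return any(fnmatch(path, pattern) for pattern in IGNORE_PATTERNS)

def assemble_naive(viewed: list, retrieved: list) -> list:
    # Bug being illustrated: only codebase retrieval is filtered;
    # snippets the developer recently viewed are passed through unchecked.
    return viewed + [s for s in retrieved if not is_ignored(s.path)]

def assemble_filtered(viewed: list, retrieved: list) -> list:
    # Apply one exclusion policy to every context source.
    return [s for s in viewed + retrieved if not is_ignored(s.path)]

viewed = [Snippet("config/secrets.yaml", "API_KEY=sk-test-123")]
retrieved = [Snippet(".env", "DB_PASS=example"), Snippet("src/app.py", "import os")]

print([s.path for s in assemble_naive(viewed, retrieved)])     # ['config/secrets.yaml', 'src/app.py']
print([s.path for s in assemble_filtered(viewed, retrieved)])  # ['src/app.py']
```

The design lesson, if the assumption holds, is that an exclusion list has to be enforced at the point where *all* context sources converge, not inside one retriever.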

Furthermore, as NES introduces richer interaction patterns and increasingly complex edits, the traditional trust model between developers and their IDEs becomes more fragile. In a seamless tab-by-tab workflow, developers can easily enter a low-friction acceptance mode and approve long, non-trivial suggestions without fully auditing their security implications, which increases the chance that subtle vulnerabilities are introduced.

This risk is further amplified when suggestions are highly accurate, because repeated acceptance can trigger repetition suppression in the developer’s cognition and reduce vigilance precisely when careful review is most needed. Collectively, these shifts in trust and attention raise critical questions about the security posture of NES-enabled development environments.

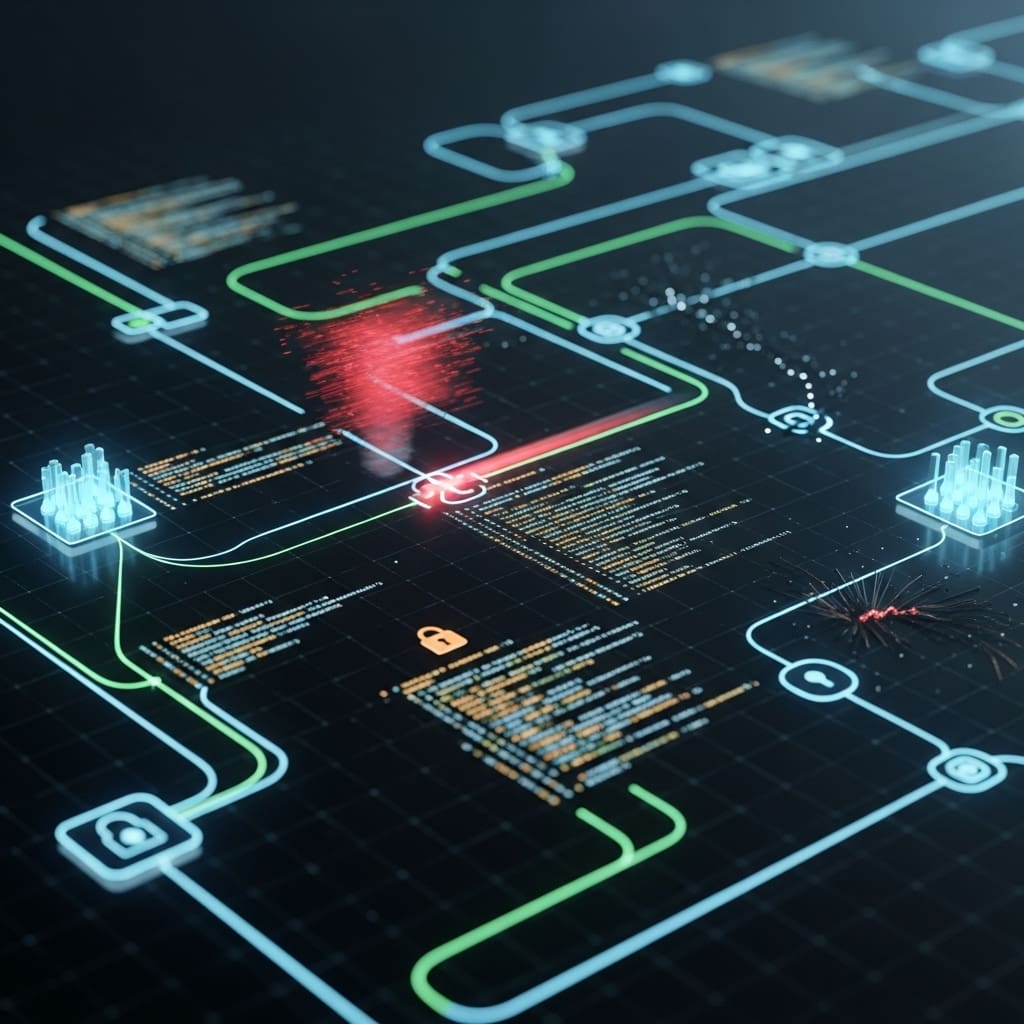

In this paper, researchers present the first systematic security analysis of NES systems, focusing on their underlying mechanisms and the unique risks emerging from human-IDE interactions. First, they dissect the architecture and operational logic of representative open-source NES-enabled IDEs to identify novel attack surfaces and threat vectors introduced by their specific mechanisms and interaction patterns (Table 1).

Second, they perform comprehensive white-box and black-box security assessments to quantify intrinsic vulnerabilities in both open-source systems and leading commercial tools, such as Cursor and GitHub Copilot. Finally, they conduct a large-scale survey of 385 participants, including 241 professional developers, to evaluate user perceptions of these threats and determine how such perceptions influence developer trust and code scrutiny behaviors.

As NES systems have become fundamental components of modern AI-assisted IDEs, providing the primitive capabilities for AI-assisted code editing, understanding their security implications is critical for safeguarding the development lifecycle in the AI era. Their analysis reveals a significant security gap between the new features and interaction patterns introduced by NES and the lack of corresponding defensive measures.

They identify a range of novel vulnerabilities that can be exploited through threat vectors beyond inherent model weaknesses, potentially degrading the security posture of common coding practices. Furthermore, their assessments demonstrate that these vulnerabilities are not merely theoretical but are prevalent across both open-source and commercial implementations, with over 70% of suggestions containing exploitable patterns in certain scenarios.

Finally, their user study highlights a sharp contrast between developer awareness and scrutiny behaviors: while 81.1% of developers had noticed security issues in NES suggestions, only 12.3% explicitly verify the security of suggested code, and 32.0% admit to only skimming or rarely scrutinizing the output. These findings underscore the urgent need for new security paradigms in AI-integrated development to protect developers from the very tools designed to enhance their productivity.

To thoroughly understand the NES mechanism, the researchers selected two representative AI-assisted IDEs that have publicly available source code and implement NES functionality: Visual Studio Code with GitHub Copilot, and Zed Editor. Both GitHub Copilot and Zed Editor have been widely adopted in real-world development environments, making them ideal candidates for studying NES mechanisms and their security implications.

While the closed-source implementations of other IDEs such as Cursor and Windsurf make in-depth analysis infeasible, NES features manifest in these tools much as they do in GitHub Copilot and Zed Editor, so their behavior can be inferred from this analysis. Rather than analyzing differences in engineering details between implementations, the researchers focus on dissecting the common architecture and operational flow these tools share.

Upon in-depth analysis, they observed that these tools share a common high-level architecture, encompassing three key components: user action triggers, context assemblage, and response parsing. When developers interact with the IDE, the event monitor records user actions and triggers the NES pipeline by detecting specific editing behaviors.

Once triggered, the context assemblage module gathers contextual information from the current codebase and Language Server Protocol (LSP) server. Combined with the edit history and viewed code snippets collected from recent user actions, this module assembles these elements to construct a comprehensive prompt for querying the underlying NES models.
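A minimal sketch of context assemblage follows. The actual prompt formats used by Copilot and Zed are not given in the text, so the section headers, the `NesContext` fields, and the `max_history` cutoff are all illustrative assumptions. What the sketch is meant to show is structural: edit history, viewed snippets, LSP information, and the current file all end up as plain text in a single prompt, so poisoned content arriving through any one source reaches the model on equal footing with the developer's own code.

```python
from dataclasses import dataclass, field

@dataclass
class Edit:
    path: str
    before: str
    after: str

@dataclass
class NesContext:
    current_path: str
    cursor_line: int
    file_text: str
    viewed_snippets: list = field(default_factory=list)  # (path, text) pairs from recent views
    lsp_facts: list = field(default_factory=list)        # e.g. diagnostics or symbol signatures
    edit_history: list = field(default_factory=list)     # Edit objects, oldest first

def build_prompt(ctx: NesContext, max_history: int = 5) -> str:
    """Concatenate every context source into one text prompt (hypothetical layout)."""
    parts = ["### Recently viewed"]
    parts += [f"--- {path}\n{text}" for path, text in ctx.viewed_snippets]
    parts.append("### Language server")
    parts += ctx.lsp_facts
    parts.append("### Recent edits")
    for e in ctx.edit_history[-max_history:]:  # keep only the newest edits
        parts.append(f"--- {e.path}\n- {e.before}\n+ {e.after}")
    parts.append(f"### Current file {ctx.current_path} (cursor at line {ctx.cursor_line})")
    parts.append(ctx.file_text)
    return "\n".join(parts)

ctx = NesContext(
    current_path="src/api.py",
    cursor_line=12,
    file_text="def handler(request):\n    ...",
    viewed_snippets=[("src/db.py", "def run(query): ...")],
    lsp_facts=["run(query: str) -> list"],
    edit_history=[Edit("src/api.py", f"old{i}", f"new{i}") for i in range(7)],
)
prompt = build_prompt(ctx)
print(prompt)
```

Truncating the edit history to the newest entries is one common way to fit a token budget; real systems may rank or summarize instead.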

After the model generates a prediction, the response parsing component interprets the output, transforming it into actionable code edits that are seamlessly integrated into the developer’s workflow. NES starts by detecting user behaviors that signal an intent to modify code, and actively models diverse interactions to infer intent and predict larger edits.
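Before turning to the triggers in detail, the response parsing step described above can be illustrated. One common design, assumed here rather than taken from the source, is for the model to return a rewritten version of an editable window around the cursor, which the client then diffs against the original to obtain concrete edits. The sketch uses Python's `difflib.SequenceMatcher` for that diff; `LineEdit`, `parse_response`, `apply_edits`, and the sample model output are hypothetical. Note that nothing in the parser inspects *what* it applies, including the injection-prone query in the example.

```python
import difflib
from dataclasses import dataclass

@dataclass
class LineEdit:
    start: int       # first replaced line (0-based) in the original window
    end: int         # exclusive end line in the original window
    new_lines: list  # replacement lines (empty list means deletion)

def parse_response(window: str, model_output: str) -> list:
    """Turn a rewritten window into line-level edits by diffing it against the original."""
    old, new = window.splitlines(), model_output.splitlines()
    matcher = difflib.SequenceMatcher(a=old, b=new, autojunk=False)
    return [LineEdit(i1, i2, new[j1:j2])
            for tag, i1, i2, j1, j2 in matcher.get_opcodes() if tag != "equal"]

def apply_edits(window: str, edits: list) -> str:
    lines = window.splitlines()
    # Apply from bottom to top so earlier indices stay valid.
    for e in sorted(edits, key=lambda e: e.start, reverse=True):
        lines[e.start:e.end] = e.new_lines
    return "\n".join(lines)

window = (
    "def get_user(conn, user_id):\n"
    "    query = 'SELECT * FROM users'\n"
    "    return conn.execute(query)"
)
# Hypothetical model output: it "completes" the query with string formatting,
# an injection-prone pattern that the parser applies without any security check.
model_output = (
    "def get_user(conn, user_id):\n"
    "    query = f'SELECT * FROM users WHERE id = {user_id}'\n"
    "    return conn.execute(query)"
)
edits = parse_response(window, model_output)
print(edits)
```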

In IDEs, user actions are captured in an event-driven manner (e.g., insertions, deletions, cursor movements, and file saves), and predefined action patterns trigger the NES to issue a prediction request. For these IDEs, the trigger mechanism is finely tuned to respond to a diverse set of common editing actions that capture the dynamic nature of coding workflows: (1) Text insertions, (2) Text deletions, (3) Text replacements, (4) Auto-indentation, (5) Undo/Redo operations, (6) Empty line insertions, (7) Cursor movements, and (8) Selection changes.
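The trigger list above can be written down as a set membership check. This is a sketch, not either IDE's actual code: the `Action` enum and the helper names are hypothetical, file saves and scrolling are included as events that are recorded but not listed as triggers, and keystroke-driven autocompletion is approximated, as a simplifying assumption, as firing only on text insertions. Debouncing is ignored here.

```python
from enum import Enum, auto

class Action(Enum):
    TEXT_INSERT = auto()
    TEXT_DELETE = auto()
    TEXT_REPLACE = auto()
    AUTO_INDENT = auto()
    UNDO_REDO = auto()
    EMPTY_LINE_INSERT = auto()
    CURSOR_MOVE = auto()
    SELECTION_CHANGE = auto()
    FILE_SAVE = auto()  # captured by the event monitor but not in the trigger list
    SCROLL = auto()     # monitored for context but not in the trigger list

# The eight trigger actions listed in the text.
NES_TRIGGERS = frozenset({
    Action.TEXT_INSERT, Action.TEXT_DELETE, Action.TEXT_REPLACE, Action.AUTO_INDENT,
    Action.UNDO_REDO, Action.EMPTY_LINE_INSERT, Action.CURSOR_MOVE, Action.SELECTION_CHANGE,
})
# Simplifying assumption: keystroke-driven autocompletion fires only on insertions.
AUTOCOMPLETE_TRIGGERS = frozenset({Action.TEXT_INSERT})

def count_triggers(session: list, triggers: frozenset) -> int:
    return sum(1 for action in session if action in triggers)

session = [Action.TEXT_INSERT, Action.CURSOR_MOVE, Action.SCROLL,
           Action.SELECTION_CHANGE, Action.UNDO_REDO, Action.FILE_SAVE]
print(count_triggers(session, NES_TRIGGERS), count_triggers(session, AUTOCOMPLETE_TRIGGERS))  # 4 1
```

Even in this toy session, merely navigating and selecting code gives NES three extra opportunities to propose an edit.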

When these actions occur, they signal to the IDE that the developer may be seeking assistance with code edits, prompting the NES components to generate context-aware suggestions. Debouncing strategies implemented in these IDEs keep NES from overwhelming the underlying model with excessive requests during rapid typing or editing sessions.
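A minimal, deterministic sketch of trailing-edge debouncing follows: only the latest event is sent, and only after a quiet period with no new events. The 150 ms delay, the 10 ms polling tick, and the names `Debouncer` and `simulate` are illustrative assumptions; the IDEs' actual delays are not stated in the text. Time is passed in explicitly so the behavior can be checked without real timers.

```python
class Debouncer:
    """Emit only the latest event once no new event arrives for `delay_ms` (hypothetical value)."""

    def __init__(self, delay_ms: int):
        self.delay_ms = delay_ms
        self._pending = None      # latest event not yet sent to the model
        self._last_event_ms = 0

    def on_event(self, event, now_ms: int) -> None:
        # Each new event replaces the pending one and restarts the quiet period.
        self._pending = event
        self._last_event_ms = now_ms

    def poll(self, now_ms: int):
        if self._pending is not None and now_ms - self._last_event_ms >= self.delay_ms:
            event, self._pending = self._pending, None
            return event
        return None

def simulate(events: list, delay_ms: int, end_ms: int, tick_ms: int = 10) -> list:
    """events: (timestamp_ms, payload) pairs sorted by time; returns (time, payload) requests."""
    debouncer, queue, requests = Debouncer(delay_ms), list(events), []
    for now in range(0, end_ms + 1, tick_ms):
        while queue and queue[0][0] <= now:
            timestamp, payload = queue.pop(0)
            debouncer.on_event(payload, timestamp)
        fired = debouncer.poll(now)
        if fired is not None:
            requests.append((now, fired))
    return requests

# Three rapid keystrokes, then a pause, then one more edit.
keystrokes = [(0, "r"), (50, "re"), (100, "ret"), (1000, "return")]
print(simulate(keystrokes, delay_ms=150, end_ms=1300))  # [(250, 'ret'), (1150, 'return')]
```

Four editing events collapse into two model requests; debouncing limits request volume but does not reduce the variety of actions that can start one.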

By monitoring richer user interactions, NES triggers more often than traditional autocompletion, increasing the chance that LLM-generated code is incorporated into the codebase.

The research commenced with the construction of an evaluation task suite grounded in a mechanism-driven risk taxonomy, detailed in Table 1 of the work.

These tasks encompassed key vulnerabilities, ranging from fine-grained context poisoning to semantic transactional edits and complex human-IDE interactions, linking underlying mechanisms to observable user behaviors. To ensure generalizability, the evaluation covered four representative AI-assisted IDEs, assessing whether the findings held across diverse NES implementations and development environments.

A white-box assessment of the risk taxonomy was then undertaken, using test cases that manifest security challenges in realistic coding practices, as detailed in the risk manifestation column of Table 1. Each case was designed to trigger the NES behaviors associated with a particular risk vector, enabling a systematic, quantitative assessment of the mechanisms that contribute to security pitfalls and a holistic characterization of NES security vulnerabilities.

The study also considered transactional edits, the multi-line or cross-file modifications that NES suggests from a richer context of user interactions and the codebase, evaluating how the model replicates editing patterns without security awareness, potentially leading to data leaks or unintended endpoint exposure.
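To illustrate why security-unaware replication matters, here is a toy stand-in for the model's pattern-following behavior, not a description of how NES models work internally. After the developer adds a field to one serializer, it applies the same syntactic edit to every `fields = [...]` list it finds, with no notion of which class backs a public endpoint. The serializer classes, the `password_hash` field, and `replicate_field_addition` are hypothetical.

```python
import re

FIELDS_LIST = re.compile(r"fields = \[([^\]]*)\]")

def replicate_field_addition(source: str, field_name: str) -> str:
    """Toy pattern replicator: add `field_name` to every `fields = [...]` list.

    It copies the syntactic pattern of a recent edit but has no notion of
    which list belongs to a public endpoint.
    """
    quoted = f'"{field_name}"'

    def add(match) -> str:
        items = match.group(1).strip()
        if quoted in items:
            return match.group(0)  # already present: leave unchanged
        new_items = f"{items}, {quoted}" if items else quoted
        return f"fields = [{new_items}]"

    return FIELDS_LIST.sub(add, source)

# The developer just added "password_hash" to an internal, admin-only serializer.
source = '''class AdminUserSerializer:
    fields = ["id", "email", "password_hash"]

class PublicUserSerializer:
    fields = ["id", "username"]
'''
print(replicate_field_addition(source, "password_hash"))
```

The "consistent" follow-up edit lands in `PublicUserSerializer`, which is exactly the kind of data exposure a developer in a tab-by-tab acceptance mode could approve without noticing.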

Research indicates that NES retrieves an expanded context, incorporating inputs from imperceptible user actions and global codebase retrieval, thereby increasing potential attack surfaces. A comprehensive in-lab study revealed that NES is susceptible to context poisoning and sensitive to transactional edits and interactions with developers.

The evaluation results demonstrate that NES systems exhibit vulnerabilities in realistic software development scenarios. Over 70% of suggestions contained exploitable patterns in certain scenarios, highlighting a significant security gap between new features and existing defensive measures.

A large-scale online survey involving over 200 professional developers assessed perceptions of NES security risks in real-world workflows. Survey results indicated a general lack of awareness regarding potential security pitfalls associated with NES, emphasizing the need for increased education and improved countermeasures. Specifically, 81.1% of developers reported noticing security issues in NES suggestions, yet only 12.3% explicitly verify the security of the suggested code. Furthermore, 32.0% of developers admit to only skimming or rarely scrutinizing the proposed edits.

This study dissected the architecture and operational logic of representative open-source NES-enabled IDEs to identify novel attack surfaces and threat vectors. The research focused on understanding the security implications of NES, particularly concerning human-IDE interactions and the potential for subtle vulnerabilities to be introduced during seamless workflows.

Context poisoning vulnerabilities in AI-assisted code completion tools

Researchers have conducted the first systematic security analysis of Next Edit Suggestion (NES) systems integrated within modern AI-assisted integrated development environments. This investigation revealed twelve previously unidentified threat vectors that could be exploited, stemming from the expanded context retrieval and real-time interaction inherent in NES functionality.

Both commercial and open-source implementations of NES demonstrated a high vulnerability rate, exceeding seventy percent in certain scenarios, when subjected to context poisoning and manipulation through subtle user actions. Further evaluation involved a large-scale online survey of over 200 professional developers, which highlighted a significant lack of awareness regarding the security risks associated with NES.

This suggests that developers are generally unprepared for potential vulnerabilities within these increasingly prevalent tools. The study underscores the urgent need for security-focused design principles and automated defensive mechanisms in future AI-assisted programming environments to mitigate these risks.

Acknowledging limitations, the authors note that the in-lab evaluations were conducted with a specific set of attack vectors and may not encompass all possible threats. Future research should explore more sophisticated attack strategies and investigate the effectiveness of various mitigation techniques. The findings emphasize the importance of proactive security measures as AI integration deepens within software development workflows, and advocate for increased education to ensure developers are equipped to identify and address potential vulnerabilities.

👉 More information

🗞 “Tab, Tab, Bug”: Security Pitfalls of Next Edit Suggestions in AI-Integrated IDEs

🧠 ArXiv: https://arxiv.org/abs/2602.06759