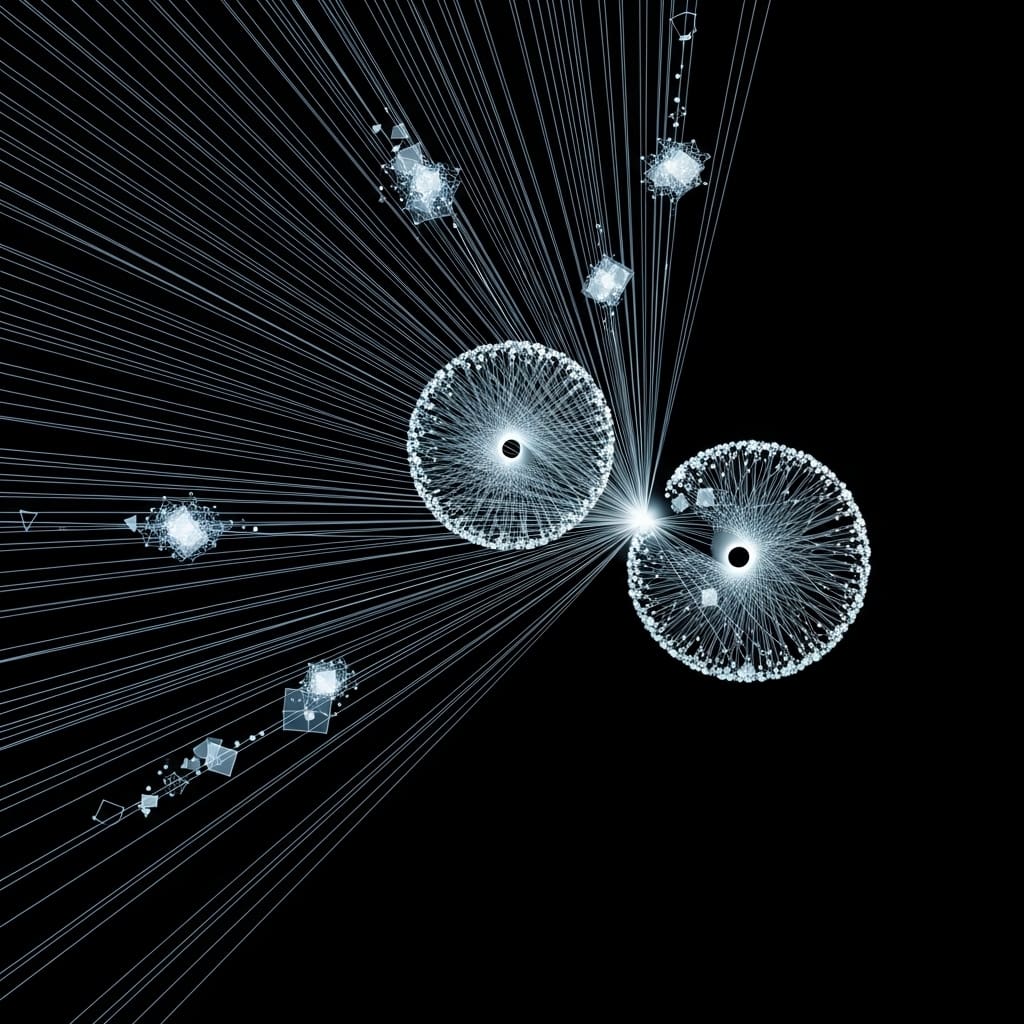

Scientists are increasingly focused on benchmarking noisy intermediate-scale quantum devices, and a new study details a method for data-driven evaluation of quantum annealing experiments. Juyoung Park, Junwoo Jung, and Jaewook Ahn, all of the Department of Physics at KAIST, together with co-authors, present a deterministic error mitigation (DEM) procedure that improves inference from noisy measurements on Rydberg arrays. The work is significant because it establishes a framework for comparing quantum devices with classical algorithms on the basis of both solution quality and computational cost. By applying DEM to the k-independent set problem on neutral atom arrays, the team demonstrates a reduction in postprocessing overhead and predicts a scaling that allows a direct, cost-based comparison between quantum experiments and their classical counterparts.

Quantum annealing experiments on such devices often yield measurement outcomes that deviate from ideal distributions, hindering accurate performance assessment.

DEM is a shot-level inference procedure that leverages experimentally characterised noise to enable data-driven benchmarking, taking into account both solution quality and the classical computational cost of processing noisy measurements. The work demonstrates this approach on the decision version of the k-independent set problem, an NP-complete and therefore computationally demanding task.

Within a Hamming-shell framework, the volume of candidate solutions explored by DEM is governed by the binary entropy of the bit-flip error rate, resulting in a classical postprocessing cost directly controlled by this entropy. Experimental data reveals that DEM reduces postprocessing overhead when compared to conventional classical inference baselines.
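As a rough illustration of the entropy scaling, the sketch below assumes that the DEM candidate set is the full Hamming ball of radius r = pN around a measured N-bit string. This is an assumption for illustration and may not match the paper's exact construction. The standard bound V(N, r) ≤ 2^(N·H(r/N)) for r ≤ N/2 then ties log2 of the candidate count to N·H(p). The function names are hypothetical.

```python
import math


def binary_entropy(p: float) -> float:
    """Binary entropy H(p) = -p log2 p - (1-p) log2(1-p), in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def hamming_ball_volume(n: int, radius: int) -> int:
    """Number of n-bit strings within Hamming distance `radius` of a fixed string."""
    return sum(math.comb(n, d) for d in range(radius + 1))


def log2_candidates(n: int, p: float) -> float:
    """log2 of the assumed DEM candidate count: a Hamming ball of radius round(p * n)."""
    return math.log2(hamming_ball_volume(n, round(p * n)))


if __name__ == "__main__":
    p = 0.05  # illustrative bit-flip rate, not a value from the study
    for n in (100, 200, 1000):
        exact = log2_candidates(n, p)
        bound = n * binary_entropy(p)
        # exact <= bound, and the ratio exact / bound approaches 1 as n grows.
        print(f"N={n}: log2(candidates)={exact:.1f}, N*H(p)={bound:.1f}")
```

Because log2 of the candidate count grows like N·H(p), halving the effective error rate roughly doubles the system size that a fixed classical budget can cover.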

Numerical simulations and experimental results obtained from neutral atom devices validate the predicted scaling behaviour with both system size and error rate. According to these scalings, one hour of classical computation on an Intel i9 processor is equivalent to neutral atom experiments with up to 250 or 450 atoms, depending on the effective error rate.
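An equivalence of this kind comes from inverting a cost model. The minimal sketch below assumes a leading-order cost of c · 2^(N·H(p)) seconds, where the per-candidate cost c is hypothetical. The study's actual constants and prefactors are not reproduced here, so the numbers it prints are illustrative only and are not meant to match the atom counts quoted above.

```python
import math


def binary_entropy(p: float) -> float:
    """Binary entropy in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def max_atoms_for_budget(budget_s: float, p: float, sec_per_candidate: float) -> int:
    """Largest N with sec_per_candidate * 2**(N * H(p)) <= budget_s.

    Leading-order model only; sec_per_candidate is a hypothetical constant.
    """
    h = binary_entropy(p)
    if h == 0.0:
        raise ValueError("error rate must lie strictly between 0 and 1")
    log2_checks = math.log2(budget_s / sec_per_candidate)  # affordable candidates, in bits
    return math.floor(log2_checks / h)


if __name__ == "__main__":
    ONE_HOUR = 3600.0
    C = 1e-8  # hypothetical: 1e8 candidate checks per second
    for p in (0.02, 0.05):
        print(f"p={p}: N_max={max_atoms_for_budget(ONE_HOUR, p, C)}")
```

The design choice is to work in log2 space, so the budget enters only through log2(budget / c), and the entropy H(p) sets the exchange rate between classical time and atoms.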

This equivalence enables a direct, cost-based comparison between noisy quantum experiments and their classical algorithmic counterparts. The research establishes an analytic characterisation of DEM, linking noise-induced Hamming structure to the complexity of classical inference and the effective computational cost of processing experimental outputs. This framework provides a means to quantify the classical postprocessing costs required to perform inference on constrained configuration manifolds.

Characterising Rydberg atom array performance via Hamming distance and deterministic error mitigation

The researchers used neutral atom arrays to investigate quantum annealing performance. Experiments focused on the decision version of the k-independent set problem, exploiting the highly structured measurement outcomes of Rydberg atom platforms.

The work characterised errors as displacing ideal configurations by a small number of bit flips, and approximated the resulting Hamming distance with a binomial distribution around an underlying solution. This allowed the team to define Hamming shells, the sets of bitstrings at a fixed Hamming distance from the solution, whose probability weight decays as the distance increases.
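To make the binomial picture concrete, the sketch below computes two quantities for N atoms and an assumed independent bit-flip rate p. The first is the probability of one specific bitstring at distance d, p^d (1-p)^(N-d). The second is the total weight of the whole shell, C(N, d) p^d (1-p)^(N-d). Independent flips and the parameter values are illustrative assumptions.

```python
import math


def per_string_weight(n: int, p: float, d: int) -> float:
    """Probability of observing one particular bitstring at Hamming distance d."""
    return p**d * (1 - p) ** (n - d)


def shell_weight(n: int, p: float, d: int) -> float:
    """Total probability of the Hamming shell at distance d (binomial pmf)."""
    return math.comb(n, d) * per_string_weight(n, p, d)


if __name__ == "__main__":
    n, p = 9, 0.05  # a 3x3 array with an illustrative 5% flip rate
    for d in range(4):
        print(d, f"{per_string_weight(n, p, d):.2e}", f"{shell_weight(n, p, d):.3f}")
```

With Np < 1, as here, even the total shell weight falls off with distance, so most of the probability sits in the first few shells.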

Deterministic error mitigation (DEM) was implemented as a shot-level inference procedure informed by experimentally characterised noise. DEM operates by iteratively flipping bits within candidate solutions, assessing whether the resulting configuration remains valid according to the problem constraints.
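The bit-flip search can be sketched on a small instance. This sketch assumes king's-graph connectivity on a square grid, a common model of Rydberg blockade that is used here as an assumption. It enumerates Hamming shells in order of increasing distance from the measured bitstring and returns the first configuration that is an independent set with at least k ones. Checking "at least k" is enough for the decision problem, since any subset of an independent set is also independent. The function names and enumeration order are hypothetical and are not taken from the paper.

```python
from itertools import combinations


def king_edges(rows: int, cols: int) -> list[tuple[int, int]]:
    """Edges between sites at Chebyshev distance 1 (king's graph), indexed row-major."""
    edges = []
    for i, j in combinations(range(rows * cols), 2):
        (r1, c1), (r2, c2) = divmod(i, cols), divmod(j, cols)
        if max(abs(r1 - r2), abs(c1 - c2)) == 1:
            edges.append((i, j))
    return edges


def is_independent(bits, edges) -> bool:
    """True if no edge has both endpoints set to 1 (no blockade violation)."""
    return not any(bits[i] and bits[j] for i, j in edges)


def dem_search(measured, edges, k: int, max_distance: int):
    """Deterministically scan Hamming shells d = 0..max_distance around `measured`.

    Returns (d, candidate) for the first candidate that is an independent set
    with at least k ones, or None if no shell up to max_distance contains one.
    """
    n = len(measured)
    for d in range(max_distance + 1):
        for flips in combinations(range(n), d):  # every bitstring in shell d
            cand = list(measured)
            for i in flips:
                cand[i] ^= 1
            if sum(cand) >= k and is_independent(cand, edges):
                return d, tuple(cand)
    return None


if __name__ == "__main__":
    edges = king_edges(3, 3)
    # Corners plus a spurious excitation at the centre (one bit-flip error).
    measured = (1, 0, 1, 0, 1, 0, 1, 0, 1)
    print(dem_search(measured, edges, k=4, max_distance=2))
    # → (1, (1, 0, 1, 0, 0, 0, 1, 0, 1))
```

Because the scan order is fixed, the same measured shot always yields the same answer. That determinism is what makes the search cost countable shell by shell.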

The candidate volume within the Hamming-shell framework is governed by the binary entropy of the bit-flip error rate, establishing an entropy-controlled classical postprocessing cost. This methodology reduces postprocessing overhead compared to classical inference baselines by focusing on a constrained configuration manifold.
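The benefit of a constrained manifold shows up even on a tiny instance. Assuming king's-graph connectivity on a 3×3 grid, the sketch below brute-forces all 2^N configurations and counts those that satisfy the independence constraint, broken down by size. Exhaustive enumeration is only a hypothetical stand-in for a classical baseline here, and it is feasible only for very small N.

```python
from itertools import combinations, product


def king_edges(rows: int, cols: int) -> list[tuple[int, int]]:
    """King's-graph edges (Chebyshev distance 1) on a rows x cols grid, row-major indices."""
    return [
        (i, j)
        for i, j in combinations(range(rows * cols), 2)
        if max(abs(i // cols - j // cols), abs(i % cols - j % cols)) == 1
    ]


def independent_set_histogram(n: int, edges) -> dict[int, int]:
    """Brute force over all 2**n bitstrings: count independent sets by size."""
    hist: dict[int, int] = {}
    for bits in product((0, 1), repeat=n):
        if not any(bits[i] and bits[j] for i, j in edges):
            size = sum(bits)
            hist[size] = hist.get(size, 0) + 1
    return hist


if __name__ == "__main__":
    n = 9
    hist = independent_set_histogram(n, king_edges(3, 3))
    total_valid = sum(hist.values())
    print(f"{total_valid} of {2**n} configurations are independent sets")
    print("by size:", dict(sorted(hist.items())))
```

Only a small fraction of bitstrings lie on the constraint manifold, and just one (the four corners) has the maximum size. This is why a search that stays near measured shots and checks the constraint can beat a blind sweep.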

Numerical simulations and experimental results validated the predicted scaling of DEM with system size and error rate. Specifically, the research showed that one hour of classical computation on an Intel i9 processor corresponds to neutral atom experiments with up to 250 or 450 atoms, depending on the effective error rate.

This equivalence enables a direct, cost-based comparison between noisy quantum experiments and classical algorithms, providing a robust benchmarking methodology. The study’s innovation lies in its data-driven approach, integrating experimentally determined noise characteristics directly into the inference process and quantifying the associated computational cost.

Entropy-controlled postprocessing scales advantageously with neutral atom quantum annealers

Researchers demonstrated that deterministic error mitigation (DEM) reduces postprocessing overhead in noisy quantum annealing experiments on Rydberg arrays. Within a Hamming-shell framework, the DEM candidate volume is governed by the binary entropy of the bit-flip error rate, yielding an entropy-controlled classical postprocessing cost.

Applied to experimental measurement data, DEM reduced postprocessing overhead relative to classical inference baselines. Numerical simulations and experimental results on neutral atom devices validated the predicted scaling with both system size and error rate: one hour of classical computation on an Intel i9 processor corresponds to neutral atom experiments with up to 250 or 450 atoms, depending on the effective error rate.

This equivalence enables a direct, cost-based comparison between noisy quantum experiments and classical algorithms. The work focuses on the decision version of the k-independent set problem, utilising a structured search that systematically explores candidate configurations consistent with the device’s noisy output distribution.

The study shows that the DEM candidate volume expands shell by shell around each measured bitstring. For a 3×3 system, the shell at Hamming distance one contains nine candidates and the shell at Hamming distance two contains thirty-six. This expansion defines a well-structured search space, allowing analytic characterisation of the classical postprocessing cost.
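The shell sizes quoted for the 3×3 system are binomial coefficients, assuming DEM visits every bitstring at a given Hamming distance from the measured one. Here S_d is illustrative notation for the shell at distance d and N = 9 is the number of sites:

```latex
|S_d| = \binom{N}{d}, \qquad
|S_1| = \binom{9}{1} = 9, \qquad
|S_2| = \binom{9}{2} = \frac{9 \cdot 8}{2} = 36,

\sum_{d=0}^{2} |S_d| = 1 + 9 + 36 = 46 \ll 2^{9} = 512 .
```

Searching out to distance two therefore visits 46 of the 512 possible configurations.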

The research highlights that the output distribution is concentrated in Hamming shells close to the underlying solution, with probability weight decaying rapidly as the Hamming distance increases, because errors typically displace a configuration by only a few bit flips. Unlike heuristic repair schemes and stochastic resampling approaches, DEM admits an analytic characterisation of its search space.

This approach systematically explores candidate configurations, offering a means to quantify the associated classical postprocessing costs required for inference. The findings demonstrate that DEM provides a pathway to benchmark quantum devices, accounting for both solution quality and the classical cost of inference from noisy measurements.

Entropy-constrained postprocessing scales with error in Rydberg array annealing

Researchers have developed a deterministic error mitigation (DEM) framework to improve the evaluation of noisy quantum annealing experiments using Rydberg arrays. This approach focuses on the decision version of the maximum independent set (MIS) problem and quantifies the classical postprocessing cost associated with noisy experimental outputs.

By formulating error mitigation as a deterministic inference task constrained by experimentally motivated noise characteristics, the team derived a model in which the classical postprocessing cost is controlled by the entropy of the bit-flip error rate. Validation through numerical simulations and experiments on neutral atom quantum processors confirms the predicted scaling between system size, error rate, and postprocessing cost.

Specifically, the findings suggest that one hour of classical computation on a standard processor can be directly compared to neutral atom experiments exhibiting error rates up to a certain threshold. This allows for a cost-based comparison between noisy quantum experiments and classical algorithms, establishing a common ground for assessing solution quality and computational expense.

The authors acknowledge that the framework currently applies specifically to the MIS problem and relies on characterising bit-flip errors. Furthermore, experimental calibration reveals that the observed DEM cost is governed by many-body effective noise, encompassing accumulated control errors, rather than solely by single-qubit errors. Future research could explore extending DEM to other optimisation problems and incorporating more complex noise models to further refine the accuracy and applicability of this error mitigation strategy.

👉 More information

🗞 Quantum-Enhanced Deterministic Inference of k-Independent Set Instances on Neutral Atom Arrays

🧠 ArXiv: https://arxiv.org/abs/2602.05432