Researchers are tackling a fundamental challenge in image generation: how to best translate images into a format that allows artificial intelligence to learn and recreate them effectively. Bin Wu, Mengqi Huang, and Weinan Jia, from the University of Science and Technology of China, alongside Zhendong Mao and colleagues, present a novel approach called NativeTok, which directly addresses limitations in current tokenisation methods. Existing techniques often fail to account for the relationships between different parts of an image during this initial encoding stage, hindering the generative model’s ability to produce coherent results. NativeTok enforces these crucial causal dependencies during tokenisation, embedding relational constraints directly into the token sequences and ultimately leading to more realistic and consistent image generation.

The researchers propose native visual tokenization, which enforces causal dependencies during reconstruction. Existing methods do not constrain the dependencies among tokens, so better tokenization in the first stage does not necessarily improve second-stage generation. This mismatch forces the generative model to learn from unordered distributions, leading to bias and weak coherence. The proposed approach aims to address these limitations by learning, during reconstruction, the token dependencies that the generative model must later capture.

NativeTok architecture and hierarchical training strategy enable efficient image tokenization

Building on this idea, the researchers introduce NativeTok, a framework that achieves efficient reconstruction while embedding relational constraints within token sequences. NativeTok consists of a Meta Image Transformer (MIT) for latent image modeling and a Mixture of Causal Expert Transformer (MoCET), in which each lightweight expert block generates a single token conditioned on prior tokens and latent features.

The researchers further design a Hierarchical Native Training strategy that updates only newly added expert blocks, ensuring training efficiency. Extensive experiments demonstrate the effectiveness of NativeTok.

Over the past few years, the vision community has witnessed remarkable progress in deep generative models, such as diffusion models and autoregressive models, elevating image generation quality to unprecedented levels.

Inspired by the success of large language models (LLMs), large visual models have recently attracted increasing research interest, primarily due to their compatibility with established innovations from LLMs and their potential to unify language and vision on the path to artificial general intelligence (AGI). Unlike language, which has natural tokenization schemes such as Byte Pair Encoding (BPE), visual tokenization, which converts images into discrete tokens analogous to those used in language models, remains a longstanding challenge for VQ-based large visual models.

Most modern large visual models follow a two-stage paradigm. First, the tokenization stage learns an image tokenizer by reconstructing images, converting high-dimensional pixels into compressed discrete visual tokens. Second, the generation stage trains a generative model to capture the token distribution via next-token prediction. Recent works have focused on designing improved image tokenizers, either with higher compression ratios to accelerate the generation process or with higher reconstruction quality to enhance generation quality.

For example, DQ-VAE introduces a more compact tokenizer by dynamically allocating varying numbers of tokens to image regions based on their information density. VAR proposes a multi-scale tokenizer combined with a coarse-to-fine “next-scale prediction” generation strategy. TiTok significantly accelerates generation by compressing images into shorter one-dimensional serialized representations.
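The second-stage objective in this paradigm, next-token prediction over the token ids produced by the first stage, can be illustrated with a deliberately tiny stand-in: a bigram count model that conditions each token only on the one before it, rather than on the full prefix as a transformer would. This is a sketch under that simplifying assumption, and the token sequences are hypothetical.

```python
from collections import Counter, defaultdict

def fit_bigram(sequences):
    """Estimate p(z_i | z_{i-1}) by counting adjacent token pairs.

    A bigram table is a tiny stand-in for the transformer a real
    second-stage generator would use; it still predicts each token
    from what came before it.
    """
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    probs = {}
    for prev, nxt_counts in counts.items():
        total = sum(nxt_counts.values())
        probs[prev] = {tok: c / total for tok, c in nxt_counts.items()}
    return probs

def greedy_generate(probs, start, length):
    """Generate up to `length` tokens, always picking the most likely next token."""
    seq = [start]
    while len(seq) < length and seq[-1] in probs:
        candidates = probs[seq[-1]]
        seq.append(max(candidates, key=candidates.get))
    return seq

# Hypothetical first-stage outputs: each image is a sequence of codebook indices.
token_sequences = [[3, 1, 4, 1, 5], [3, 1, 4, 2, 6], [3, 1, 5, 9, 2]]
model = fit_bigram(token_sequences)
print(greedy_generate(model, start=3, length=5))
```

Whatever statistical structure the tokenizer leaves in these sequences is all such a model can learn, which is the crux of the mismatch the researchers describe next.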

In summary, existing methods primarily focus on first independently designing a better tokenizer, and then training a generative model to capture the distribution of the resulting visual tokens. However, researchers argue that existing methods overlook the intrinsic dependency between the tokenization and generation stages, optimizing each stage with separate objectives.

This leads to a fundamental misalignment between the disordered tokenization outputs and the structured dependency modeling required during generation. Specifically, the first stage typically relies on reconstruction loss for supervision during tokenization, without imposing constraints on the intrinsic relationships among tokens, while the second stage essentially models the intrinsic distributional relationships among the tokens learned in the first stage.

This misalignment prompts a critical question: how can a generative model accurately learn visual token distributions if the tokens themselves are inherently disordered? As a result, the generation stage can only learn a biased and incomplete distribution from these disordered visual tokens, leading to sub-optimal generative performance.

Moreover, a persistent gap exists in the current two-stage generation paradigm: better tokenization performance in the first stage does not necessarily result in improved generation in the second stage, as it may lead to more complex token interrelationships that further violate the autoregressive principle. To address this challenge, the researchers introduce the concept of native visual tokenization, which tokenizes images in a native visual order that inherently aligns with the subsequent generation process, leading to more effective and accurate visual token modeling.

They argue that visual information in an image also follows a global causal order. Analogous to human perception, when observing an image, we tend to first recognize its primary structural components, followed by finer details such as textures. This observation suggests that the process of visual understanding is inherently autoregressive in nature.

Built upon this concept, they propose NativeTok, a novel visual tokenization framework that disentangles visual context modeling from visual dependency modeling, thereby enabling the joint optimization of image reconstruction quality and token ordering without conflict. As a result, NativeTok is capable of producing a native visually ordered token sequence directly during the tokenization stage.

Specifically, NativeTok comprises three components. First, the Meta Image Transformer (MIT) models high-dimensional image patch features for effective visual context modeling, followed by a dimension switcher that compresses the context output for efficient subsequent visual dependency modeling. Second, the Mixture of Causal Expert Transformer (MoCET) features a novel ensemble of lightweight expert transformer blocks, each tailored to a specific token position.

This position-specific specialization allows MoCET to model causal dependencies with greater precision, yielding an ordered token representation. Third, the ordered token representation is quantized into discrete tokens and subsequently decoded by a transformer-based decoder. Moreover, researchers propose a novel Hierarchical Native Training strategy to efficiently train NativeTok across varying tokenization lengths.
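The article states only that Hierarchical Native Training updates just the new expert blocks as the tokenization length varies. The bookkeeping can be sketched as follows, assuming the token length grows over a hypothetical schedule (4, then 8, then 12 tokens) and that experts from earlier stages stay frozen; how the paper treats the MIT and the decoder at each stage is not covered by this sketch.

```python
def hierarchical_schedule(lengths):
    """For each training stage, list which expert indices are frozen vs. trained.

    Assumption: token lengths grow stage by stage and every expert created
    in an earlier stage is frozen, so a stage only updates the expert
    blocks it adds (one expert per token position).
    """
    plan = []
    prev = 0
    for length in lengths:
        if length <= prev:
            raise ValueError("token lengths must strictly increase")
        plan.append({
            "length": length,
            "frozen": list(range(prev)),
            "trainable": list(range(prev, length)),
        })
        prev = length
    return plan

for stage in hierarchical_schedule([4, 8, 12]):  # hypothetical schedule
    print(stage["length"], stage["trainable"])
```

Under this assumption the number of trainable experts per stage equals the number of newly added token positions, which is where the training-efficiency claim would come from.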

NativeTok not only accomplishes the reconstruction task of the first stage but also explicitly constrains the intrinsic relationships within the token sequence, making it easier for the second-stage generation process to capture and model these dependencies and thereby closely integrating the two stages.

Image tokenization is a fundamental and crucial step in image generation. It uses an autoencoder to compress images from a high-dimensional, high-resolution pixel space into a low-dimensional, low-resolution latent space, and a decoder to reconstruct the image from that latent space. CNN-based autoencoder tokenizers, such as VQ-VAE, encode images into discrete representations by mapping features to entries of a learned codebook.
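The codebook lookup at the heart of VQ-style tokenizers can be sketched as a nearest-neighbour search. This covers inference only; the training machinery of a real VQ-VAE (straight-through gradients, commitment and codebook losses) is omitted, and the codebook and features below are hypothetical.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def quantize(vectors, codebook):
    """Map each continuous vector to the index of its nearest codebook entry.

    Returns the discrete token ids and the quantized vectors (the chosen
    codewords), which a decoder would use to reconstruct the image.
    """
    ids = [min(range(len(codebook)), key=lambda k: squared_distance(v, codebook[k]))
           for v in vectors]
    return ids, [codebook[k] for k in ids]

codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]   # hypothetical 3-entry codebook
features = [[0.9, 0.1], [0.2, 0.8], [-0.1, 0.05]]  # hypothetical encoder outputs
ids, quantized = quantize(features, codebook)
print(ids)  # [1, 2, 0]
```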

DQ-VAE focuses on the information density of different image regions and encodes images using information-density-based variable-length coding. Transformer-based autoencoder tokenizers, such as ViT-VQGAN and Efficient-VQGAN, also achieve excellent reconstruction results.

TiTok and MAETok achieve excellent reconstruction results by compressing images into one-dimensional sequences with a high compression ratio. Many works further explore improvements to the vector quantization step, such as SoftVQ, MoVQ, and RQ-VAE, while FSQ and LFQ propose quantization schemes that do without an explicit learned codebook lookup. In recent work, FlexTok incorporates characteristics of continuous VAEs, using flexible-length 1D encodings to enable a visually ordered reconstruction process, while GigaTok achieves strong generation results.

However, these works completely neglect the dependency relationships among tokens that must be modeled in the second stage. As a result, there is a fundamental discrepancy between the token dependencies established in the first stage and the way images are modeled in the second stage, and the two stages are not treated as a unified process. The researchers therefore propose NativeTok, which imposes strong constraints on token dependencies to capture more accurate intrinsic relationships.

This enables the generator to learn such associations more easily during generation, thereby achieving a true integration of the tokenization and generation stages.

Existing image generation methods typically learn and generate images in the latent space produced by the image tokenization stage, which improves generation efficiency.

These methods fall into three main paradigms: GANs, diffusion models, and autoregressive models. Autoregressive models, in particular, predict the next token or group of tokens from the condition and the previously generated tokens until the entire token sequence is generated. However, previous image tokenization approaches fail to consider that the second-stage generator must model the internal dependencies among tokens.

As a result, the unordered token sequences these tokenizers produce can only yield a biased and inherently inaccurate generative model. To achieve native visual tokenization and ensure tight integration between the tokenization and generation stages, the researchers design the NativeTok framework, whose aim is to model the ordered relationships among tokens already during the tokenization stage.

This requires the first-stage tokenization process to produce an ordered, causal token sequence that aligns with the native visual order:

$$z_i = \mathrm{Encoder}(X, z_0, z_1, \ldots, z_{i-1})$$

The modeling of token $z_i$ depends only on the previous tokens $z_0$ to $z_{i-1}$ and the image information $X$, and is independent of any subsequent token $z_j$ ($j > i$).

A naive way to achieve such a generation process is to apply a causal mask. However, this is ineffective in practice: the transformer must simultaneously perform image self-modeling and token generation, which makes it difficult to generate tokens sequentially. Moreover, its performance depends heavily on the capacity of the original model and does not yield substantial improvements, as the ablation studies in the paper confirm.
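The causal mask mentioned here can be illustrated with a minimal single-head self-attention in plain Python. This is the naive baseline the article says falls short, not NativeTok itself, and learned query/key/value projections are omitted for brevity. The check at the end shows the independence property required above: with the mask, earlier outputs do not change when a later position changes, whereas with unmasked (bidirectional) attention they do.

```python
import math

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def attention(x, mask=None):
    """Single-head dot-product self-attention with queries = keys = values = x.

    With mask=None every position sees every other (bidirectional); with a
    causal mask, position i only mixes information from positions 0..i.
    """
    n, d = len(x), len(x[0])
    out = []
    for i in range(n):
        scores = []
        for j in range(n):
            if mask is None or mask[i][j]:
                scores.append(sum(a * b for a, b in zip(x[i], x[j])) / math.sqrt(d))
            else:
                scores.append(-math.inf)  # masked positions get zero weight
        m = max(scores)  # finite, since position i can always attend to itself
        weights = [math.exp(s - m) for s in scores]  # exp(-inf) == 0.0
        total = sum(weights)
        out.append([sum(w * x[j][k] for j, w in enumerate(weights)) / total
                    for k in range(d)])
    return out

tokens = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
changed = [row[:] for row in tokens]
changed[2] = [3.0, -2.0]  # perturb only the last position

mask = causal_mask(3)
print(attention(tokens, mask)[:2] == attention(changed, mask)[:2])  # True
print(attention(tokens)[0] == attention(changed)[0])                # False
```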

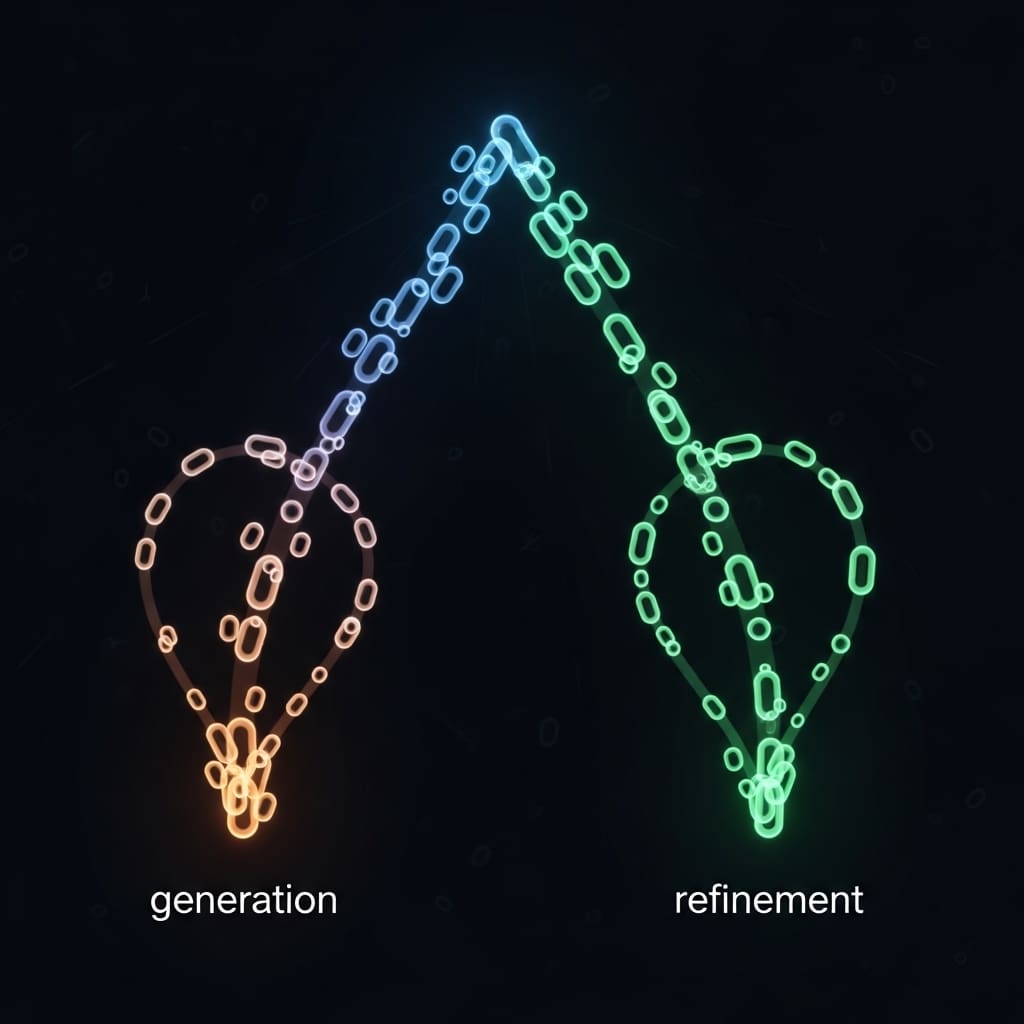

Therefore, they propose a novel framework that adopts a divide-and-conquer design. It decouples the complex context modeling of image content from the dependency modeling among image tokens. In the image context modeling stage, they employ bidirectional attention to capture global representations of the image. In the token dependency modeling stage, they introduce native visual constraints for token-level dependency modeling.

Causal Tokenisation via Mixture of Causal Expert Transformers improves image generation quality

Scientists have developed NativeTok, a novel framework for image generation that enforces causal dependencies during the initial tokenization stage. The research addresses a fundamental mismatch between existing tokenization methods and subsequent generative modelling, which often leads to biased and incoherent results.

NativeTok achieves efficient reconstruction while embedding relational constraints within token sequences. At its core is a Mixture of Causal Expert Transformer (MoCET), in which each lightweight expert block generates a single token conditioned on prior tokens and latent features.

The team designed a Hierarchical Native Training strategy that updates only new expert blocks to ensure training efficiency. Specifically, the framework models each token $z_i$ as depending only on the previous tokens $z_0$ to $z_{i-1}$ and the image information $X$, independent of subsequent tokens. At the core of NativeTok, a Meta Image Transformer (MIT) models the input image $X$, and a fully connected network then maps its output to $X_{\text{latent}}$, which carries rich contextual information in the latent space.
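The article says only that a fully connected network maps the MIT output to the latent representation (the "dimension switcher" from the component overview). A minimal sketch, assuming a single linear layer shared across all patches; the sizes and random weights are hypothetical.

```python
import random

def linear(x, weight, bias):
    """Apply y = W x + b to one feature vector (W has shape out_dim x in_dim)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]

def dimension_switcher(patch_features, weight, bias):
    """Project every patch feature from the MIT width down to a smaller width.

    The same fully connected layer is shared across patches, so a
    (num_patches, high_dim) input becomes (num_patches, low_dim).
    """
    return [linear(p, weight, bias) for p in patch_features]

random.seed(0)
num_patches, high_dim, low_dim = 16, 64, 8  # hypothetical sizes
patches = [[random.gauss(0, 1) for _ in range(high_dim)] for _ in range(num_patches)]
W = [[random.gauss(0, high_dim ** -0.5) for _ in range(high_dim)] for _ in range(low_dim)]
b = [0.0] * low_dim

x_latent = dimension_switcher(patches, W, b)
print(len(x_latent), len(x_latent[0]))  # 16 8
```

Shrinking the width before the token-generation stage is what keeps the subsequent per-token experts lightweight.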

$X_{\text{latent}}$ is then locked during the generation process, so that every token is generated from the same image information. The MoCET comprises a sequence $T$ of $L$ lightweight expert transformers, each responsible for generating one token of a sequence of length $L$. The $i$th token $z_i$ is generated by the $i$th expert transformer $T_i$:

$$z_i = T_i(X_{\text{latent}}, z_0, z_1, \ldots, z_{i-1}, z_{\text{padding}})$$

Each expert takes the concatenation of the locked latent representation, the previously generated tokens, and a padding token, and retains a single output vector as the current token. The researchers found that this divide-and-conquer design effectively decouples complex image context modelling from token dependency modelling, improving performance and aligning with human visual perception.
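The per-position expert loop can be sketched in plain Python. Several details are assumptions rather than the paper's design: each hypothetical expert is a single attention read from the padding slot plus a per-expert scaling instead of a full transformer block, the retained vector is taken to be the output at the padding position, and the first expert sees no previous tokens.

```python
import math

def attend(query, sequence):
    """Softmax dot-product attention of one query vector over a list of vectors."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in sequence]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * vec[c] for w, vec in zip(weights, sequence)) / total for c in range(d)]

def make_expert(scale):
    """Build a hypothetical lightweight expert T_i.

    A real expert would be a small transformer block with learned weights; here
    it is one attention read from the padding slot followed by a per-expert
    elementwise scaling, so that different positions behave differently.
    """
    def expert(x_latent, prev_tokens, padding):
        sequence = x_latent + prev_tokens + [padding]  # concatenate latent, prior tokens, padding
        out = attend(padding, sequence)                # the padding slot reads the whole sequence
        return [s * v for s, v in zip(scale, out)]     # single vector kept as the new token
    return expert

def mocet_encode(x_latent, experts, padding):
    """Generate one token per expert: z_i = T_i(X_latent, z_0, ..., z_{i-1}, z_padding)."""
    tokens = []
    for expert in experts:
        # x_latent is never modified, i.e. it stays "locked" for every expert
        tokens.append(expert(x_latent, list(tokens), padding))
    return tokens

x_latent = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # hypothetical latent: 3 vectors of width 2
padding = [0.1, 0.1]                              # hypothetical padding token
experts = [make_expert([1.0, 1.0]), make_expert([0.5, 2.0]), make_expert([2.0, 0.5])]
z = mocet_encode(x_latent, experts, padding)
print(len(z), len(z[0]))  # 3 2
```

Because each position has its own expert, every expert only has to model one step of the dependency chain, and token $z_i$ is computed before any later token exists, so causality holds by construction rather than through a mask.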

Causal Tokenisation Improves Coherence in Image Generation models

Scientists have introduced a new framework called NativeTok, addressing limitations in current vector quantization (VQ)-based image generation techniques. Traditional methods often experience a disconnect between the tokenization and generation stages, resulting in biased and incoherent images due to a lack of constraint on token dependencies.

NativeTok proposes native visual tokenization, enforcing causal dependencies during the initial tokenization process to create ordered token sequences. The framework comprises a Meta Image Transformer (MIT) for latent image modeling and a Mixture of Causal Expert Transformer (MoCET), where lightweight experts generate individual tokens conditioned on preceding tokens and latent features.

A Hierarchical Native Training strategy further enhances efficiency by updating only newly added expert blocks. Experiments demonstrate that NativeTok effectively encodes images into ordered token sequences, aligning with the generation stage and improving overall image generation quality, as evidenced by reduced overlap in high-probability token positions.

This research establishes a link between tokenization and generation, potentially leading to more coherent and realistic images from VQ-based models. The authors acknowledge that the scope of their experiments is limited, focusing primarily on specific model architectures and datasets. Future work could explore the application of native visual tokenization to diverse generative models and image types, as well as investigate methods for adapting the framework to video generation. This work was supported by the National Natural Science Foundation of China under Grant 623B2094.

👉 More information

🗞 NativeTok: Native Visual Tokenization for Improved Image Generation

🧠 ArXiv: https://arxiv.org/abs/2601.22837