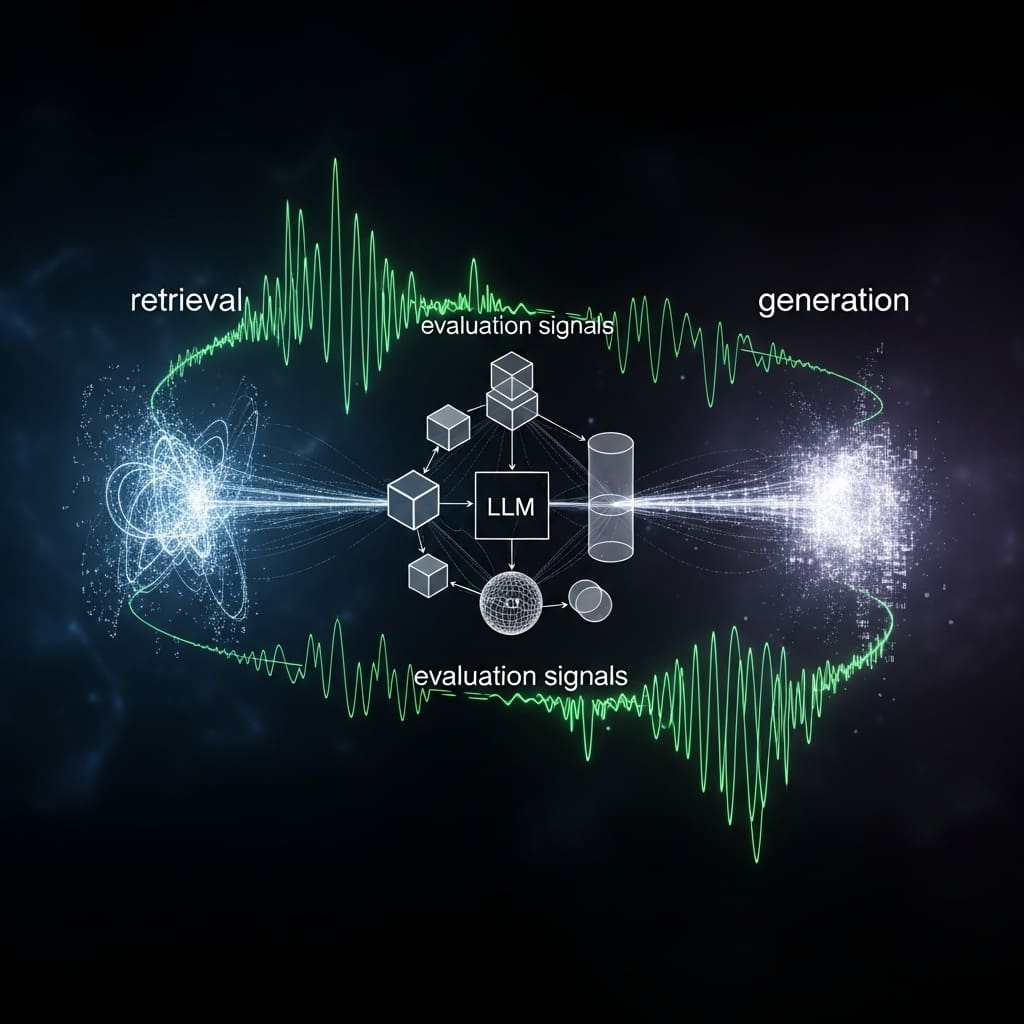

Researchers are increasingly focused on how effectively large language models (LLMs) utilise grounding content in retrieval-augmented generation (RAG) systems. Yilun Hua from Cornell University, alongside Giuseppe Castellucci, Peter Schulam, Heba Elfardy, and Kevin Small from Amazon, present a new approach to quantifying this ‘content utility’ with a metric called Grounding Utility (GroGU). The work is significant because the metric is reference-free and model-specific: it needs no expensive annotations and reflects the capabilities of the particular LLM being grounded. The team demonstrate that GroGU accurately identifies relevant documents. They also use it to train a query-rewriter, achieving gains of up to 18.2 points in Mean Reciprocal Rank and up to 9.4 points in answer accuracy.

Evaluating document utility in Retrieval Augmented Generation via LLM confidence scores is crucial

Scientists have developed a new metric, Grounding Generation Utility (GroGU), to assess the usefulness of documents in Retrieval Augmented Generation (RAG) systems without relying on human annotations. There has been no definitive specification of how well grounding content supports a Large Language Model (LLM). Current methods often ignore model-specific capabilities or require expensive labelling.

This research addresses these limitations by defining utility as a function of the downstream LLM’s confidence, measured through entropy. Despite requiring no annotations, GroGU effectively distinguishes between relevant and irrelevant documents and captures nuances that LLM-agnostic metrics miss. Because the metric is reference-free, it directly measures how much utility a given LLM derives from the grounding documents.

GroGU is based on the LLM’s generation confidence. It uses a modified entropy calculation that focuses on the tokens whose entropy changes most when grounding documents are added, which makes the score more robust. Experiments demonstrate that GroGU not only mirrors general retrieval relevance but also models aspects beyond it, such as varying utility across different LLMs or usefulness in seemingly less relevant documents.

This approach moves beyond simple relevance scoring by acknowledging that each LLM processes and utilises information differently. Researchers applied GroGU to train a query-rewriter for RAG systems, using it to identify high-utility preference data for Direct Preference Optimization (DPO). Because the metric selects this data automatically, the query-rewriter can be trained without manual annotation.
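The following sketch shows roughly how a utility score could turn query rewrites into DPO preference data. It scores several candidate rewrites of one query and keeps the highest- and lowest-scoring rewrites as the chosen/rejected pair. This is a minimal sketch under stated assumptions, not the authors' pipeline. The `utility` callable is a hypothetical stand-in for "retrieve with this rewrite, then score the retrieved documents with GroGU". `PreferencePair`, `build_preference_pair` and the `min_margin` filter are illustrative names.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class PreferencePair:
    """One DPO training example: a prompt with a preferred and a dispreferred rewrite."""
    prompt: str
    chosen: str
    rejected: str


def build_preference_pair(
    query: str,
    rewrites: Sequence[str],
    utility: Callable[[str, str], float],
    min_margin: float = 0.0,
) -> Optional[PreferencePair]:
    """Keep the best- and worst-scoring rewrites as a (chosen, rejected) pair.

    `utility(query, rewrite)` is hypothetical: it stands in for retrieving
    documents with the rewrite and scoring them with a GroGU-style metric.
    Returns None if there are fewer than two rewrites or if the score gap
    is not larger than `min_margin` (too weak a preference signal).
    """
    if len(rewrites) < 2:
        return None
    scores = [utility(query, r) for r in rewrites]
    best = max(range(len(rewrites)), key=lambda i: scores[i])
    worst = min(range(len(rewrites)), key=lambda i: scores[i])
    if scores[best] - scores[worst] <= min_margin:
        return None
    return PreferencePair(prompt=query, chosen=rewrites[best], rejected=rewrites[worst])


# Toy utilities standing in for real GroGU scores (made-up numbers, for illustration only).
toy = {"rewrite A": 0.1, "rewrite B": 0.7, "rewrite C": -0.2}
pair = build_preference_pair("original query", list(toy), lambda q, r: toy[r])
print(pair)
```

Filtering by a margin is one way to drop pairs whose utility difference is too small to be a reliable preference. Whether the paper applies such a filter is not stated here.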

The study reports improvements of up to 18.2 points in Mean Reciprocal Rank and up to 9.4 points in answer accuracy, demonstrating the effectiveness of this automated training approach. These gains highlight GroGU’s potential to substantially improve RAG system performance. The work also offers a model-specific, annotation-free method for quantifying the utility of grounding documents.

The work opens avenues for more automated and adaptable RAG systems, particularly in domains where obtaining labelled data is challenging or expensive. By focusing on the LLM’s internal confidence, GroGU provides a more nuanced and accurate assessment of document usefulness, paving the way for improved knowledge integration and response generation. The research suggests a future where RAG systems can be tuned and optimised without the constraints of human annotation, enabling broader application and scalability.

Quantifying Content Utility via Large Language Model Confidence for Retrieval-Augmented Generation offers a novel approach to relevance assessment

Scientists developed Grounding Utility (GroGU), a novel reference-free metric to quantify the utility of content for Retrieval-Augmented Generation (RAG) systems, focusing on downstream Large Language Model (LLM) confidence as measured by entropy. The research team bypassed costly annotation requirements by defining utility as a function of LLM confidence, enabling a model-specific assessment of grounding content.

This approach allows for nuanced distinctions between ground-truth documents and captures variations in utility across different LLMs, addressing limitations of LLM-agnostic metrics. The study pioneered the application of GroGU to train a query-rewriter for RAG through the identification of high-utility preference data for Direct Preference Optimization.

Experiments with both sparse and dense retrievers showed consistent improvements in retrieval performance, with gains of up to 18.2 points in Mean Reciprocal Rank. The team also assessed downstream generator performance and observed improvements of up to 9.4 percentage points in answer accuracy.
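The following shows how Mean Reciprocal Rank (MRR) is computed. For each query, take the reciprocal of the rank of the first relevant document (0 if none is retrieved), then average over queries. One assumption here: "points" is read as MRR multiplied by 100, which is the usual convention but is not spelled out in the article. The function names and toy rankings are illustrative.

```python
from typing import Sequence, Set, Tuple


def reciprocal_rank(ranked_ids: Sequence[str], relevant: Set[str]) -> float:
    """1/rank of the first relevant document (ranks start at 1), or 0.0 if none appears."""
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def mrr_points(runs: Sequence[Tuple[Sequence[str], Set[str]]]) -> float:
    """Mean reciprocal rank over queries, scaled to 0-100 (assumed meaning of 'points')."""
    if not runs:
        raise ValueError("need at least one query")
    total = sum(reciprocal_rank(ranked, relevant) for ranked, relevant in runs)
    return 100.0 * total / len(runs)


runs = [
    (["d3", "d1", "d7"], {"d3"}),        # first relevant document at rank 1 -> 1.0
    (["d2", "d9", "d5", "d4"], {"d4"}),  # first relevant document at rank 4 -> 0.25
]
print(mrr_points(runs))  # 62.5
```

On this scale, an 18.2-point gain would mean the average reciprocal rank rose by 0.182. Relevant documents therefore appear noticeably higher in the ranked list.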

Researchers harnessed LLM output entropy to determine content utility, effectively modelling aspects beyond simple relevance, such as differing utilities for various LLMs and the potential for less relevant documents to offer higher utility. The work revealed that existing relevance scores often fail as effective training signals for RAG components due to challenges in learning accurate local alignments within embedding spaces and the inherent noisiness of retrievers.

The team addressed these issues by focusing on generation confidence rather than retrieval relevance, allowing GroGU to identify preference data without manual annotation. To demonstrate GroGU’s capabilities, scientists presented a scenario involving the question “Who lives in the blue house in Balamory?” and analysed LLM responses with and without grounding documents, highlighting how a weaker model struggled with document formatting while a stronger model successfully answered the question when provided with relevant context. This analysis underscored the importance of LLM-specific utility assessment, as even an ideal relevance scorer might fail to capture the nuances of document usefulness for a particular model.

Quantifying Retrieval Utility via Language Model Confidence for Enhanced RAG Performance

Scientists have developed Grounding Utility (GroGU), a novel model-specific and reference-free metric to quantify the utility of content used for grounding in Retrieval-Augmented Generation (RAG) systems. The research addresses limitations in existing metrics that often rely on costly annotations or fail to account for model-specific capabilities.

GroGU defines utility as a function of downstream Large Language Model (LLM) confidence, measured through entropy, which eliminates the need for human annotation. Experiments revealed that GroGU effectively distinguishes between ground-truth documents and captures nuances overlooked by LLM-agnostic metrics. The team measured improvements of up to 18.2 points in Mean Reciprocal Rank and up to 9.4 points in answer accuracy when GroGU was used to train a query-rewriter for RAG with Direct Preference Optimization.

This demonstrates the potential for automated fine-tuning of RAG systems without human intervention. Researchers observed that the same document can elicit different responses from LLMs with varying capabilities, as illustrated in Figure 1. A weaker model, Qwen-2-1.5b-it, failed to utilise a challenging document format, while a stronger model, Phi-4 (14B), successfully answered the question when provided with the same document.

These tests, conducted with 50 samples under Qwen’s default configuration, consistently showed differing levels of utility. Furthermore, the study highlighted that relevance scores alone are insufficient for measuring grounding document usefulness, as they do not consider how a specific LLM will utilise the document.

Data shows that, depending on the LLM used, adding irrelevant documents can paradoxically improve response correctness, a finding that contradicts the intuition behind relevance scores. Scientists therefore calculated utility from the change in the LLM’s generation confidence when it is conditioned on the documents versus when it is not.

GroGU formally defines utility as $\mathrm{GroGU}_\theta(q, D_r) = \gamma(y_g \mid q, D_r) - \gamma(y_u \mid q)$. Here $\gamma$ is the confidence of the LLM $\theta$ in a generation. $y_g$ is the grounded generation, conditioned on the query $q$ and the documents $D_r$, and $y_u$ is the ungrounded generation, conditioned on $q$ alone. The difference is therefore the change in confidence produced by the documents. The team explored confidence measures based on average perplexity and entropy. They refined the approach by focusing on “key tokens”, those whose entropy changes significantly with and without grounding context, identified using a threshold $\alpha$. This refinement allows a more precise assessment of document utility within RAG systems.
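Here is a minimal sketch of the confidence-difference idea behind GroGU, with several assumptions. The confidence $\gamma$ is taken to be the negative mean per-token entropy of a generation, so lower entropy means higher confidence. The next-token distributions are assumed to come from the downstream LLM at each decoding step. The key-token variant is a hypothetical reading of the description: both inputs score the same token sequence with and without documents, and only positions whose entropy change reaches the threshold `alpha` are averaged. All function names are illustrative. This is not the authors' exact formula.

```python
import math
from typing import Sequence

Dist = Sequence[float]  # next-token probability distribution at one decoding step


def token_entropy(probs: Dist) -> float:
    """Shannon entropy (in nats) of one next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def confidence(step_dists: Sequence[Dist]) -> float:
    """Assumed confidence gamma: negative mean per-token entropy of a generation."""
    if not step_dists:
        raise ValueError("generation has no tokens")
    return -sum(token_entropy(d) for d in step_dists) / len(step_dists)


def grogu(grounded: Sequence[Dist], ungrounded: Sequence[Dist]) -> float:
    """gamma(y_g | q, D_r) - gamma(y_u | q); positive when the documents raise confidence."""
    return confidence(grounded) - confidence(ungrounded)


def grogu_key_tokens(
    with_docs: Sequence[Dist], without_docs: Sequence[Dist], alpha: float
) -> float:
    """Hypothetical key-token variant: average the entropy drop only where |change| >= alpha.

    Assumes both inputs score the SAME token sequence (e.g. by teacher forcing),
    once with the grounding documents in the prompt and once without them.
    """
    if len(with_docs) != len(without_docs):
        raise ValueError("both inputs must score the same token sequence")
    gains = []
    for d_with, d_without in zip(with_docs, without_docs):
        delta = token_entropy(d_without) - token_entropy(d_with)
        if abs(delta) >= alpha:  # keep only tokens whose entropy changed markedly
            gains.append(delta)
    return sum(gains) / len(gains) if gains else 0.0


# Toy two-token example over a two-word vocabulary (made-up numbers).
grounded = [[0.9, 0.1], [1.0, 0.0]]    # confident once documents are provided
ungrounded = [[0.5, 0.5], [0.5, 0.5]]  # maximally uncertain without them
print(grogu(grounded, ungrounded))
print(grogu_key_tokens(grounded, ungrounded, alpha=0.5))
```

In the toy run only the second token passes the threshold, so the key-token score equals that token's entropy drop (ln 2). A perplexity-based $\gamma$ could replace `confidence` without changing the rest of the structure.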

Model confidence as a proxy for retrieval relevance in RAG systems is a promising research direction

Scientists have developed Grounding Utility (GroGU), a new reference-free metric to assess the usefulness of content for retrieval-augmented generation (RAG) systems. GroGU defines utility by measuring a language model’s confidence, based on entropy, when processing grounded content, thereby offering a model-specific evaluation.

This approach avoids costly annotations and addresses limitations found in existing, model-agnostic metrics which often fail to capture nuances in how different language models utilise information. The research demonstrates that GroGU effectively distinguishes between relevant and irrelevant documents, even in scenarios where traditional relevance scores are inadequate.

Researchers applied GroGU to train a query-rewriter for RAG systems, utilising high-utility preference data through Direct Preference Optimization. Experiments revealed improvements of up to 18.2 points in Mean Reciprocal Rank and up to 9.4 percentage points in answer accuracy, suggesting a significant enhancement in RAG performance.

The authors acknowledge that current relevance scores are often noisy due to optimisations for recall rather than precision, and that they do not reflect the quality of downstream rerankers. Future work could explore the application of GroGU to other components within RAG pipelines, potentially further optimising system performance and addressing challenges in domains where defining a “correct” answer is difficult.

👉 More information

🗞 Evaluating the Utility of Grounding Documents with Reference-Free LLM-based Metrics

🧠 ArXiv: https://arxiv.org/abs/2601.23129