Researchers are tackling a fundamental limitation in current neural networks: their inability to consistently recognise equivalent symbols. İlker Işık and Wenchao Li, from the Department of Electrical & Computer Engineering at Boston University, present a new Transformer architecture that is provably unaffected by how interchangeable tokens are named. This symbol-invariant approach uses parallel embedding streams and aggregated information sharing, allowing the model to generalise effectively to previously unseen symbols. The significance of this work lies in its potential to substantially improve performance on open-vocabulary tasks, moving closer to truly flexible and adaptable artificial intelligence systems.

Achieving Alpha-Equivalence in Neural Networks via Structured Attention and Parallel Embeddings unlocks improved generalization and robustness

Scientists have developed a novel Transformer-based architecture demonstrably invariant to renaming interchangeable tokens, addressing a critical limitation in current neural networks. The research tackles the challenge of open-vocabulary reasoning, where models struggle to generalise to unseen symbols despite semantic equivalence.

This breakthrough employs parallel embedding streams to isolate the contribution of each interchangeable token, coupled with an aggregated attention mechanism facilitating structured information sharing between these streams. The team achieved provable invariance to alpha-equivalence, meaning the model produces identical outputs for semantically equivalent inputs differing only in symbol names.

This innovative approach bypasses the need for random embeddings, offering formal guarantees of invariance rather than statistical encouragement. Experiments confirm the theoretical underpinnings of the method and reveal substantial performance gains on open-vocabulary tasks requiring generalisation to novel symbols.

This study establishes a new standard in symbolic reasoning, particularly in domains like linear-time temporal logic where interchangeable tokens are prevalent. The architecture introduces a minimal number of additional hyperparameters and integrates seamlessly with existing Transformer designs and length-generalising positional encodings.

Empirical validation on LTL witness generation shows that the method not only surpasses existing baselines but also significantly outperforms GPT-5.2, highlighting the effectiveness of an explicit symbol-invariant inductive bias. The researchers summarise their key contributions as a novel symbol-invariant Transformer architecture with an aggregated attention mechanism, a formal guarantee of invariance to alpha-renaming, and strong empirical results demonstrating improved open-vocabulary generalisation and state-of-the-art performance. The work opens new avenues for neuro-symbolic learning, enabling models to reason more effectively in symbolic domains and paving the way for more robust and generalisable artificial intelligence systems.

Parallel Embedding and Aggregated Attention for Symbol Invariance achieves robust representation learning

Scientists developed a Symbol-Invariant Transformer architecture to address the challenge of handling interchangeable tokens in neural networks. The study pioneered a method for achieving exact invariance to renaming of these tokens, a common issue in symbolic reasoning tasks such as theorem proving and mathematical reasoning.

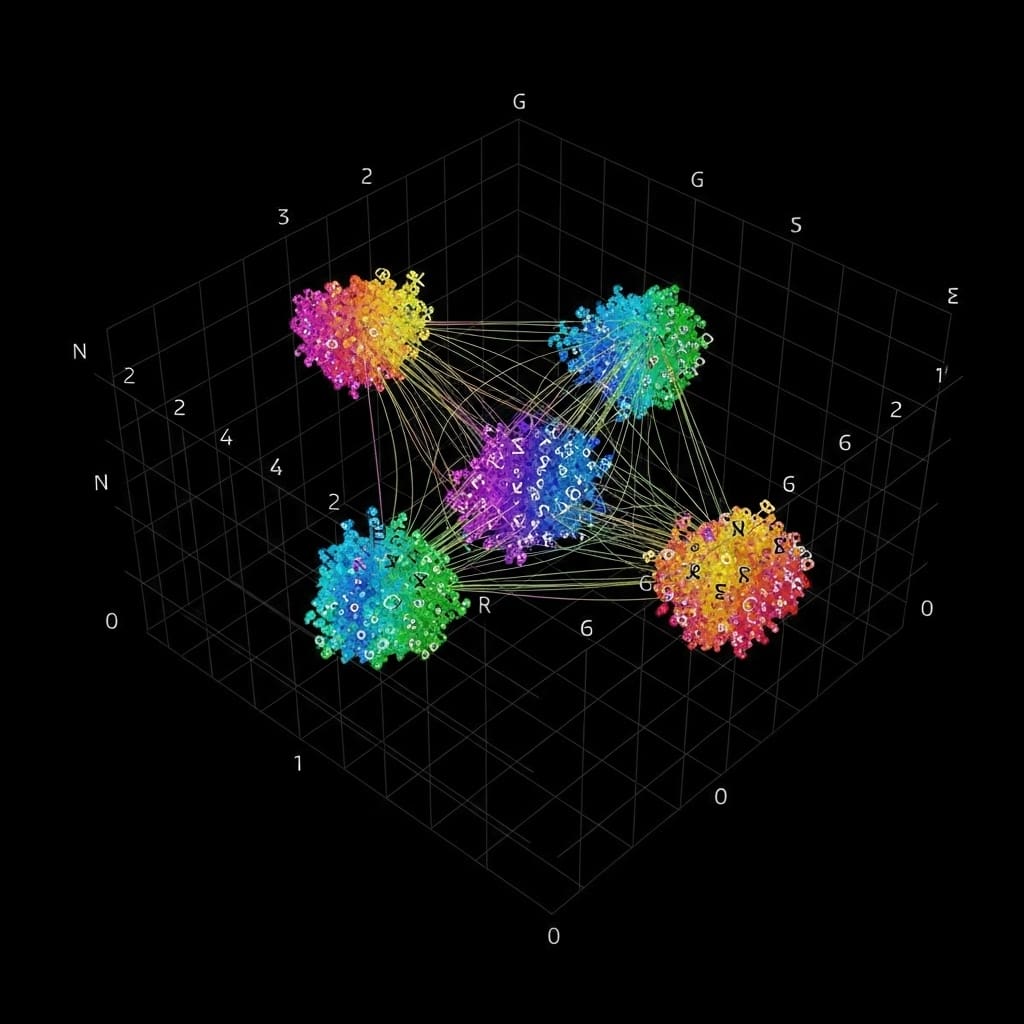

Researchers employed parallel embedding streams, dedicating one stream to each interchangeable token within the input sequence. Each stream explicitly represents its designated token and treats all others as placeholders, thereby isolating the contribution of each symbol. Shared Transformer layers process these streams, so the per-stream computation can run in parallel.
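The stream construction described above can be illustrated with a small sketch. It is not the authors' code: the marker strings `<self>` and `<other>`, the function name `build_streams`, and the choice to order streams by first appearance are all assumptions made for illustration. It only shows why such streams no longer depend on the names of the symbols.

```python
from typing import List, Set

SELF = "<self>"    # hypothetical marker for the stream's own symbol
OTHER = "<other>"  # hypothetical placeholder for every other interchangeable symbol


def build_streams(tokens: List[str], interchangeable: Set[str]) -> List[List[str]]:
    """Return one token stream per interchangeable symbol, ordered by first appearance."""
    order: List[str] = []
    for tok in tokens:
        if tok in interchangeable and tok not in order:
            order.append(tok)

    streams = []
    for symbol in order:
        stream = []
        for tok in tokens:
            if tok == symbol:
                stream.append(SELF)    # the symbol this stream is dedicated to
            elif tok in interchangeable:
                stream.append(OTHER)   # all other interchangeable symbols look alike
            else:
                stream.append(tok)     # operators and brackets are kept as-is
        streams.append(stream)
    return streams


formula = "G ( a -> F b )".split()
renamed = "G ( x -> F y )".split()

streams_a = build_streams(formula, {"a", "b"})
streams_b = build_streams(renamed, {"x", "y"})
print(streams_a == streams_b)
```

Because every stream sees only "my symbol" versus "some other symbol", renaming `a, b` to `x, y` produces the same streams in this toy setting, so anything computed from them cannot depend on the original names.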

An aggregated attention mechanism was then implemented to combine the outputs of these layers, preserving the original positions of tokens while facilitating structured information sharing between streams. This innovative approach prevents the model from committing to a specific naming scheme, allowing it to reason about each symbol independently.
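To give a feel for what combining streams while preserving positions can mean, here is a deliberately simplified sketch. It uses a plain per-position mean across streams as a stand-in; this is an assumption for illustration and not the aggregated attention mechanism from the paper. The function name `aggregate_streams` and the toy numbers are hypothetical.

```python
from typing import List

Vector = List[float]


def aggregate_streams(stream_states: List[List[Vector]]) -> List[Vector]:
    """Average hidden states across streams, position by position.

    stream_states[s][p] is the hidden vector of stream s at position p.
    The output has one vector per position, so token positions are preserved.
    """
    num_streams = len(stream_states)
    seq_len = len(stream_states[0])
    dim = len(stream_states[0][0])
    aggregated = []
    for p in range(seq_len):
        summed = [0.0] * dim
        for s in range(num_streams):
            for d in range(dim):
                summed[d] += stream_states[s][p][d]
        aggregated.append([v / num_streams for v in summed])
    return aggregated


# Two streams, three positions, two-dimensional hidden vectors (toy numbers).
states = [
    [[1.0, 0.0], [2.0, 2.0], [0.0, 4.0]],
    [[3.0, 2.0], [0.0, 2.0], [4.0, 0.0]],
]
shared = aggregate_streams(states)
shared_swapped = aggregate_streams(states[::-1])  # same streams, opposite order
print(shared == shared_swapped)
```

A symmetric reduction such as a mean gives the same result whichever order the streams arrive in, which is the property that keeps the shared information from depending on a particular naming or ordering of symbols.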

The team tested this system on Linear-time temporal logic (LTL) problems, a domain where renaming atomic propositions does not alter formula satisfiability. The research demonstrated substantial performance gains on open-vocabulary tasks requiring generalisation to novel symbols, surpassing existing methods that rely on random embeddings.
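As a concrete illustration of alpha-equivalence, the sketch below checks whether two tokenised formulas differ only by a consistent renaming of their atomic propositions. It renames propositions to canonical names in order of first appearance and compares the results. The helper names `canonicalize` and `alpha_equivalent`, the `p0, p1, ...` naming scheme, and the whitespace-separated formula syntax are assumptions for illustration, not part of the paper.

```python
from typing import Dict, List, Set


def canonicalize(tokens: List[str], propositions: Set[str]) -> List[str]:
    """Rename atomic propositions to p0, p1, ... in order of first appearance."""
    fresh: Dict[str, str] = {}
    out = []
    for tok in tokens:
        if tok in propositions:
            if tok not in fresh:
                fresh[tok] = f"p{len(fresh)}"
            out.append(fresh[tok])
        else:
            out.append(tok)  # temporal and Boolean operators are left untouched
    return out


def alpha_equivalent(left: List[str], left_props: Set[str],
                     right: List[str], right_props: Set[str]) -> bool:
    """True if the formulas are identical up to a one-to-one renaming of propositions."""
    return canonicalize(left, left_props) == canonicalize(right, right_props)


f1 = "G ( a -> F b )".split()
f2 = "G ( x -> F y )".split()
f3 = "G ( x -> F x )".split()

same = alpha_equivalent(f1, {"a", "b"}, f2, {"x", "y"})
different = alpha_equivalent(f1, {"a", "b"}, f3, {"x"})
print(same, different)
```

Here `f1` and `f2` are alpha-equivalent, while `f3` is not, because it merges two distinct propositions into one. A symbol-invariant model is expected to treat the first pair identically.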

Specifically, the Symbol-Invariant Transformer achieved exact invariance by design, unlike previous approaches which encouraged invariance statistically through stochasticity and hyperparameter tuning. This method avoids the inconsistencies arising from different random seeds producing varying predictions for alpha-equivalent inputs, offering a structurally enforced solution. The technique reveals a principled way to handle symbolic manipulation, potentially advancing neuro-symbolic learning and improving generalisation capabilities in complex reasoning tasks.

Symbol invariance improves Transformer performance on renaming-agnostic symbolic reasoning, particularly with limited data

Scientists have developed a novel Transformer-based architecture demonstrably invariant to renaming interchangeable tokens. The research introduces parallel embedding streams to isolate the contribution of each interchangeable token within the input sequence. These streams, combined with an aggregated attention mechanism, facilitate structured information sharing while preserving individual token contributions.

Experiments confirm the theoretical guarantees of this method and reveal substantial performance gains on open-vocabulary tasks requiring generalization to novel symbols. The team measured performance on Linear-time temporal logic (LTL) witness generation, a domain where renaming atomic propositions should not alter formula satisfiability.

Results demonstrate that the Symbol-Invariant Transformer outperforms existing baselines and surpasses GPT-5.2, achieving a significant advancement in symbolic reasoning. Data shows the architecture achieves exact invariance to renaming, meaning the model produces identical outputs for alpha-equivalent inputs.

This invariance is not statistically encouraged but structurally enforced through the design of parallel embedding streams and aggregated attention. The work introduces a minimal number of additional hyperparameters and can be implemented as a lightweight modification to standard Transformer encoder-decoder architectures.

Measurements confirm the effectiveness of an explicit symbol-invariant inductive bias in domains involving alpha-equivalence. Specifically, the team’s approach enables generalization across larger or unseen sets of atomic propositions in LTL, addressing a key limitation of previous models. The breakthrough delivers a provable guarantee of invariance, a feature absent in random embedding approaches which rely on stochasticity and require careful hyperparameter tuning. This research provides a fundamentally different solution to open-vocabulary neuro-symbolic learning.

Symbol invariance enhances relational and temporal reasoning in Transformer networks, improving generalization performance

Scientists have developed a novel Transformer architecture demonstrably invariant to the renaming of interchangeable tokens, addressing a limitation in current neural networks. This research introduces a method employing parallel embedding streams to isolate contributions from each interchangeable token, alongside an aggregated attention mechanism for structured information sharing.

Experimental results validate the theoretical guarantees of this approach and reveal substantial performance gains on open-vocabulary tasks requiring generalization to novel symbols. The findings establish that symbol-invariant attention significantly improves open-vocabulary generalization, consistently outperforming random embeddings and standard baselines in both propositional logic and Linear Temporal Logic (LTL) tasks.

This inductive bias proves particularly beneficial when relational reasoning is dominant, while maintaining competitive performance when temporal reasoning presents the primary challenge. The authors acknowledge a computational cost that scales as O(SL²), where S is the number of streams and L the sequence length, though they note practical feasibility for S = 10 with only a modest increase in processing time. Future research may focus on mitigating this scaling through techniques like stream sparsification or adaptive stream selection, and the method's applicability extends to domains including program analysis, theorem proving, and graph reasoning.
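The scaling quoted above, S times L squared, can be made concrete with a back-of-the-envelope count of pairwise attention scores. The sequence length of 128 and the function name `attention_score_count` are assumptions chosen purely for illustration; the count ignores constant factors, heads, and layers.

```python
def attention_score_count(num_streams: int, seq_len: int) -> int:
    """Number of pairwise attention scores when each stream attends over seq_len tokens."""
    return num_streams * seq_len * seq_len


baseline = attention_score_count(1, 128)      # a single stream, as in a standard Transformer
ten_streams = attention_score_count(10, 128)  # S = 10, the setting mentioned in the text
ratio = ten_streams // baseline

print(baseline, ten_streams, ratio)  # 16384 163840 10
```

The count grows linearly in the number of streams and quadratically in sequence length. It measures arithmetic work, not wall-clock time, for which the authors report only a modest increase at S = 10.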

👉 More information

🗞 Names Don’t Matter: Symbol-Invariant Transformer for Open-Vocabulary Learning

🧠 ArXiv: https://arxiv.org/abs/2601.23169