Accurate atomistic modelling of solid-state battery interfaces remains a significant hurdle in the development of next-generation energy storage, owing to the complex interplay of electrochemical and mechanical factors. Xiaoqing Liu, Xinyu Yu, and Yangshuai Wang, alongside their colleagues at Shanghai Jiao Tong University and the National University of Singapore, address this challenge with a novel fine-tuning framework called FIRE (fine-tuning with integrated replay and efficiency). Their research, detailed in this paper, demonstrates a substantial leap forward in data efficiency and accuracy, achieving root-mean-square errors an order of magnitude lower than existing models while using only a tenth of the original data. By combining efficient configurational sampling with a replay-augmented continual learning strategy, FIRE not only predicts key material properties in close agreement with experimental results, but also offers a generalisable approach to developing accurate interatomic potentials for a wide range of materials, potentially unlocking predictive simulations previously inaccessible via first-principles methods.

FIRE framework boosts battery interface modelling significantly

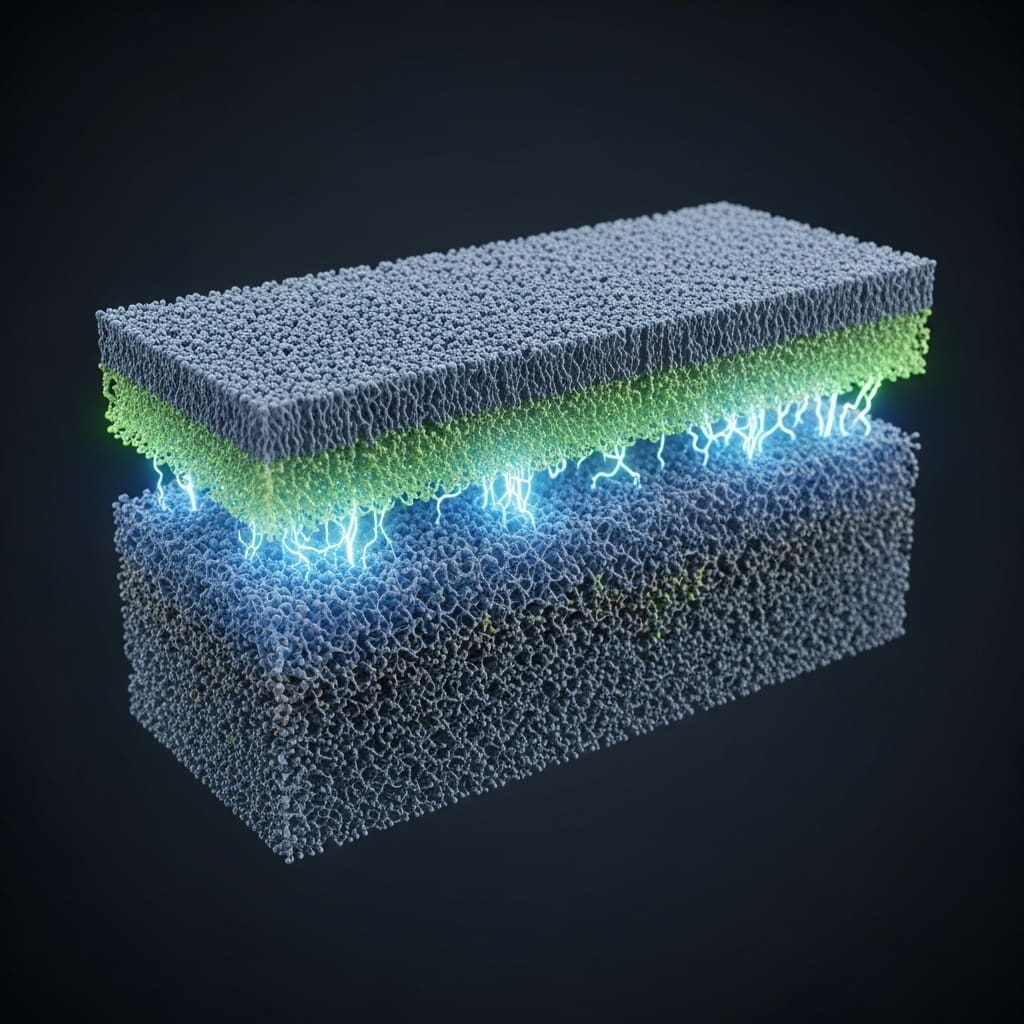

Scientists have developed a new framework called Fine-tuning with Integrated Replay and Efficiency (FIRE) to create highly accurate machine-learning interatomic potentials for modelling solid-solid battery interfaces. Atomistic modelling of these interfaces is crucial for understanding the complex interplay of electrical, chemical, and mechanical factors within batteries, but current methods struggle with accuracy and data efficiency. The research team addressed these challenges by combining efficient configurational sampling with a replay-augmented continual learning strategy, achieving quantum-level accuracy with significantly reduced computational cost. This approach allows for predictive simulations previously unattainable with traditional first-principles methods.

The study reveals that FIRE consistently achieves root-mean-square errors in energy below 1 meV/atom and in force near 20 meV/Å across six different solid-solid battery interface systems. This represents an order-of-magnitude improvement over existing models, while requiring only 10% of the original datasets used for training. Researchers accomplished this by leveraging universal machine-learning interatomic potentials, pre-trained on vast chemical datasets, and then fine-tuning them specifically for battery interface materials. A key innovation lies in the integration of a replay mechanism, where data from the initial pre-training phase is periodically reintroduced during fine-tuning to prevent the model from ‘forgetting’ general chemical principles and to improve stability.

Experiments show the fine-tuned models accurately reproduce key mechanical and electrochemical properties of the materials, demonstrating strong agreement with experimental data. The FIRE framework employs a task-specific fine-tuning paradigm, retaining the expressive architecture of a universal foundation model while selectively updating task-relevant parameters. This strategy preserves transferability and inductive biases inherited from large-scale pretraining, substantially lowering data and training time requirements. Furthermore, the team developed a pre-fine-tuned model to generate a candidate configuration pool, enabling dimensionality reduction and clustering to prepare a refined dataset for the task-specific fine-tuning process.

The research establishes FIRE as a generalizable and data-efficient approach for developing accurate interatomic potentials applicable to diverse materials. This breakthrough opens new avenues for predictive simulations, enabling a deeper understanding of electro-chemo-mechanical coupling at solid-solid interfaces and accelerating the development of next-generation solid-state batteries. By overcoming the limitations of both traditional first-principles methods and conventional machine-learning approaches, FIRE promises to significantly advance the field of battery materials research and beyond.

Fine-tuning potentials with replay for battery interfaces

Scientists developed a novel approach called Fine-tuning with Integrated Replay and Efficiency (FIRE) to create accurate machine-learning interatomic potentials for solid-solid battery interfaces. The study addressed the challenges of modelling these complex systems, which include diverse configurations arising from defects and dislocations, structural complexity, and multi-physics coupling. Researchers initially employed a task-specific fine-tuning paradigm, leveraging a pre-trained universal machine-learning interatomic potential to retain its expressive architecture and transferability. This strategy significantly reduced both data and training time requirements compared to training models from scratch.

To improve generalisation and robustness, the team pioneered a replay-augmented continual fine-tuning scheme. This involved interleaving training on task-specific datasets with periodic replay of pre-training samples, effectively mitigating catastrophic forgetting during the fine-tuning process. Prior to fine-tuning, a candidate configuration pool was generated using a pre-fine-tuned model, followed by dimensionality reduction and clustering to create a refined dataset. This refined dataset, combined with subsampled pre-training data, was then used for the task-specific fine-tuning, ensuring a balanced learning process.
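As a rough illustration of the interleaving idea, the Python sketch below builds a batch schedule in which a batch of pre-training samples is replayed after every few task-specific batches. The function name `replay_schedule`, the `replay_every` ratio, the batch size and the string placeholders standing in for atomic configurations are all assumptions made for this example; the replay frequency and sampling rule actually used by the authors are not stated here.

```python
import random
from typing import Iterator, List, Sequence, Tuple


def replay_schedule(
    task_data: Sequence[str],
    pretrain_data: Sequence[str],
    batch_size: int = 4,
    replay_every: int = 3,  # hypothetical ratio: 1 replay batch per 3 task batches
    seed: int = 0,
) -> Iterator[Tuple[str, List[str]]]:
    """Yield ("task" | "replay", batch) pairs for one pass over the task data."""
    rng = random.Random(seed)
    order = list(task_data)
    rng.shuffle(order)
    n_task_batches = 0
    for start in range(0, len(order), batch_size):
        # Regular fine-tuning batch drawn from the interface-specific dataset.
        yield "task", order[start:start + batch_size]
        n_task_batches += 1
        if n_task_batches % replay_every == 0:
            # Periodically revisit pre-training samples to limit forgetting.
            k = min(batch_size, len(pretrain_data))
            yield "replay", rng.sample(list(pretrain_data), k)


# Placeholder identifiers; real entries would be labelled atomic configurations.
task = [f"interface_{i}" for i in range(24)]
pretrain = [f"pretrain_{i}" for i in range(100)]

schedule = list(replay_schedule(task, pretrain))
kinds = [kind for kind, _ in schedule]
print(kinds)
```

In a real training loop each yielded batch would feed one optimiser step; the point of the sketch is only that the model keeps seeing a trickle of general-purpose data while it adapts to the interface systems.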

Across six solid-solid battery interface systems, the FIRE method consistently achieved root-mean-square errors in energy below 1 meV/atom and in force near 20 meV/Å. This represents an order-of-magnitude improvement over existing models while requiring only 10% of the original datasets. The fine-tuned models successfully reproduced key mechanical and electrochemical properties of the materials, demonstrating close agreement with experimental data. This innovative methodology enables predictive simulations beyond the reach of first-principles methods, offering a generalisable and data-efficient approach for developing accurate interatomic potentials across diverse materials.

FIRE yields accurate battery interface potentials efficiently

Scientists have developed a new approach called fine-tuning with integrated replay and efficiency, or FIRE, to create highly accurate machine-learning interatomic potentials for modelling solid-solid battery interfaces. This framework addresses the challenges of accurately simulating these complex environments while maintaining data efficiency. Across six different solid-solid battery interface systems, FIRE consistently achieved root-mean-square errors in energy below 1 meV/atom and in force near 20 meV/Å. These errors represent an order-of-magnitude improvement over existing models, achieved using only 10% of the data previously required.
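For readers unfamiliar with these metrics, the following minimal Python sketch shows one common way to compute them. It assumes that energy errors are normalised per atom before averaging and that force errors are averaged over all Cartesian components; the paper may use a slightly different convention. The function names and the toy numbers are invented for illustration and are not results from the study.

```python
import math
from typing import Sequence


def energy_rmse_per_atom(
    e_pred: Sequence[float], e_ref: Sequence[float], n_atoms: Sequence[int]
) -> float:
    """RMSE of per-atom energy errors. Inputs in eV, result in meV/atom."""
    sq = [((p - r) / n) ** 2 for p, r, n in zip(e_pred, e_ref, n_atoms)]
    return 1000.0 * math.sqrt(sum(sq) / len(sq))


def force_rmse(
    f_pred: Sequence[Sequence[float]], f_ref: Sequence[Sequence[float]]
) -> float:
    """RMSE over all force components. Inputs in eV/Å, result in meV/Å."""
    sq = [
        (p - r) ** 2
        for atom_pred, atom_ref in zip(f_pred, f_ref)
        for p, r in zip(atom_pred, atom_ref)
    ]
    return 1000.0 * math.sqrt(sum(sq) / len(sq))


# Toy data (made up): two configurations with 50 and 100 atoms.
e_pred = [-100.05, -200.0]  # model total energies, eV
e_ref = [-100.0, -200.1]    # reference (e.g. DFT) total energies, eV
n_atoms = [50, 100]

# Toy forces for two atoms, one [fx, fy, fz] row per atom, in eV/Å.
f_pred = [[0.02, 0.0, 0.0], [0.0, -0.02, 0.0]]
f_ref = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]

print(energy_rmse_per_atom(e_pred, e_ref, n_atoms))
print(force_rmse(f_pred, f_ref))
```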

The research team employed a task-specific fine-tuning paradigm, retaining the expressive architecture of a universal foundation model and selectively updating task-relevant parameters. This strategy preserves transferability and reduces both data and training time requirements. To further enhance generalisation and robustness, a replay-augmented continual fine-tuning scheme was developed, interleaving training with periodic replay of pretraining samples to mitigate catastrophic forgetting. This allows for progressive adaptation to complex interfacial systems while maintaining general knowledge encoded within the foundation model, crucial for stable molecular dynamics simulations.

To construct high-quality training datasets, an efficient sampling protocol was designed, beginning with a pre-fine-tuned model for robust molecular dynamics generation. Approximately 20,000 configurations were generated and then subjected to PCA-assisted dimensionality reduction and K-means clustering using SOAP descriptors, yielding a compact yet informative training set. This process not only improves data efficiency but also ensures broad structural coverage, creating an AI-ready dataset for model fine-tuning. Evaluations were conducted on six typical solid-solid battery interfaces, including electrode/electrolyte combinations like Na/Na3SbS4 and Li/Li6PS5Cl, coating materials such as Li3PS4/Li3B11O18, solid electrolyte interphases like Li2CO3/LiF, and (electro)chemically disordered solid electrolytes including Li7La3Zr2-xMxO12 and LiCl/GaF3.
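The selection step can be pictured with a short NumPy sketch: reduce the descriptor vectors with PCA, cluster them with K-means, and keep the configuration nearest each cluster centre. This is an illustration under stated assumptions, not the authors' pipeline. Random vectors stand in for SOAP descriptors, the hand-written `pca_reduce` and `kmeans` helpers replace whatever library the team used, and the pool size, number of components and number of clusters are arbitrary.

```python
import numpy as np


def pca_reduce(x: np.ndarray, n_components: int) -> np.ndarray:
    """Project rows of x onto the leading principal components (via SVD)."""
    centered = x - x.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


def kmeans(x: np.ndarray, k: int, n_iter: int = 50, seed: int = 0):
    """Plain Lloyd's K-means; returns (centroids, labels)."""
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), size=k, replace=False)].copy()
    labels = np.zeros(len(x), dtype=int)
    for _ in range(n_iter):
        # Assign every point to its nearest centroid.
        dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = x[labels == j]
            if len(members):  # an empty cluster keeps its previous centroid
                centroids[j] = members.mean(axis=0)
    return centroids, labels


def select_representatives(descriptors, n_select, n_components=5, seed=0):
    """Indices of the configuration closest to each non-empty cluster centre."""
    reduced = pca_reduce(descriptors, n_components)
    centroids, labels = kmeans(reduced, n_select, seed=seed)
    chosen = []
    for j in range(n_select):
        idx = np.flatnonzero(labels == j)
        if len(idx):
            d = np.linalg.norm(reduced[idx] - centroids[j], axis=1)
            chosen.append(int(idx[d.argmin()]))
    return sorted(chosen)


# Synthetic stand-in for per-configuration SOAP descriptors (hypothetical sizes).
rng = np.random.default_rng(42)
fake_descriptors = rng.normal(size=(2000, 30))

selected = select_representatives(fake_descriptors, n_select=200)
print(len(selected), "configurations kept out of", len(fake_descriptors))
```

Picking one member per cluster, rather than sampling uniformly from the trajectory, is what gives the reduced set its broad structural coverage: densely visited regions of configuration space contribute no more structures than sparsely visited ones.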

Using MACE-MP-0 as the backbone universal interatomic potential, the team compared predicted energies and forces against DFT references and found strong agreement across all systems. Results confirm that FIRE preserves quantum-level accuracy even in heterogeneous environments, suppressing energy RMSEs to below 1 meV/atom and force RMSEs to near 20 meV/Å. This establishes a practical paradigm for constructing significantly more accurate interatomic potentials, surpassing current models that typically report energy errors exceeding 3 meV/atom and force errors above 100 meV/Å.

FIRE framework delivers accurate battery interface potentials

Scientists have developed a new framework, FIRE (fine-tuning with integrated replay and efficiency), for creating accurate interatomic potentials to model solid-solid battery interfaces. This approach addresses the challenges of accurately simulating these complex environments while minimising computational cost and data requirements. By combining efficient configurational sampling with a replay-augmented continual learning strategy, FIRE achieves high accuracy in predicting the energy and forces within these materials. Across six different solid-solid battery interface systems, the research demonstrates that FIRE consistently achieves root-mean-square errors below 1 meV/atom for energy and near 20 meV/Å for force, representing a significant improvement over existing models.

Crucially, this level of accuracy is attained using only 10% of the data typically required by other methods. The fine-tuned models successfully reproduce key mechanical and electrochemical properties, aligning closely with experimental observations, and enable simulations at larger scales than previously possible with first-principles methods. The authors acknowledge a limitation in the current computational scale, noting that first-principles methods are restricted to approximately 600 atoms due to memory constraints. Future research will focus on integrating uncertainty-aware active learning protocols with FIRE to further enhance its capabilities for long-timescale and multiscale simulations. This advancement promises to accelerate the design of next-generation energy materials by enabling more reliable and scalable atomistic modelling of complex battery systems.

👉 More information

🗞 An AI-ready fine-tuning framework for accurate machine-learning interatomic potentials in solid-solid battery interfaces

🧠 ArXiv: https://arxiv.org/abs/2601.17847