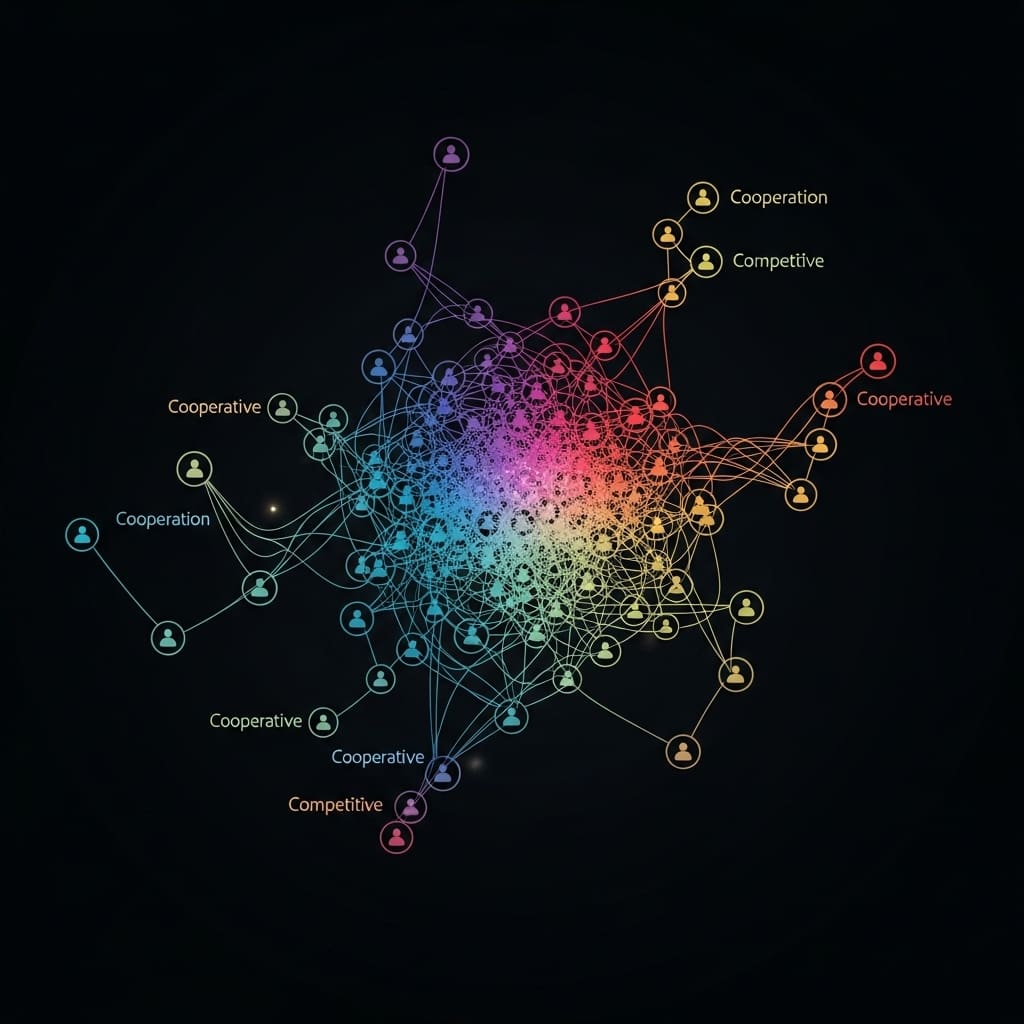

Scientists are increasingly interested in understanding how artificial intelligence impacts human cooperation within groups. Nico Mutzner from the University of Zurich, Taha Yasseri from Trinity College Dublin & Technological University Dublin, and Heiko Rauhut from the University of Zurich, alongside their colleagues, investigated whether the presence of AI alters established cooperative norms in small groups. Their research, detailed in a new study, addresses a critical gap in knowledge: how integrating AI agents affects the emergence and sustainability of cooperation beyond simple one-on-one interactions. Using an online experiment with human participants and AI ‘bots’, the team demonstrates that cooperative behaviour stems from reciprocal dynamics and behavioural patterns rather than from identifying whether a partner is human or artificial. The finding suggests surprisingly flexible social norms and could inform how collective decision-making processes are designed.

AI bots impact cooperative norms in groups

Scientists have demonstrated that cooperative norms readily extend to include artificial intelligence agents, blurring the lines between human and AI participation in collective decision-making. The research, published on January 29, 2026, addresses a critical gap in understanding how AI influences cooperative social norms within group settings, moving beyond previous studies focused solely on two-person interactions. Researchers conducted an online experiment using a repeated four-player Public Goods Game (PGG) to investigate how cooperation emerges and is maintained when human participants interact with “bots”. Each bot was labelled either as a human or as an AI and followed one of several distinct behavioural strategies. This design allowed the team to isolate the effect of the AI label from the effect of the bot's behaviour on cooperative dynamics.

The study involved 236 participants, each assigned to a group of three humans and one bot, with bots programmed to follow one of three strategies: unconditional cooperation, conditional cooperation, or free-riding. Crucially, the experiment found that reciprocal group dynamics and behavioural inertia were the primary drivers of cooperation, and that they operated in the same way whether the bot was labelled as human or AI. Cooperation levels showed no statistically significant differences across conditions, challenging the notion that AI agents inherently disrupt established norms. Furthermore, the team observed consistent norm persistence in a follow-up Prisoner’s Dilemma, reinforcing the robustness of these findings and indicating that participants’ normative perceptions were unaffected by the presence of AI.
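For readers unfamiliar with the game, one round of a linear Public Goods Game with the three bot strategies can be sketched as follows. This is a minimal illustration, not the authors' implementation: the endowment, the multiplier, and the exact rule used by the conditional cooperator are assumptions, since the article does not report them.

```python
from statistics import mean

ENDOWMENT = 20    # assumed tokens per player per round (not stated in the article)
MULTIPLIER = 1.6  # assumed public-good multiplier (not stated in the article)
GROUP_SIZE = 4    # four-player game: three humans and one bot


def bot_contribution(strategy, others_last_round):
    """Return the bot's contribution for one round under a given strategy."""
    if strategy == "unconditional_cooperator":
        return ENDOWMENT
    if strategy == "free_rider":
        return 0
    if strategy == "conditional_cooperator":
        # Assumed rule: match the other players' average contribution from
        # the previous round; contribute fully when there is no history yet.
        if not others_last_round:
            return ENDOWMENT
        return round(mean(others_last_round))
    raise ValueError(f"unknown strategy: {strategy}")


def payoffs(contributions):
    """Linear PGG payoff: tokens kept plus an equal share of the multiplied pot."""
    if len(contributions) != GROUP_SIZE:
        raise ValueError("expected one contribution per group member")
    share = MULTIPLIER * sum(contributions) / GROUP_SIZE
    return [ENDOWMENT - c + share for c in contributions]


if __name__ == "__main__":
    humans = [10, 15, 5]  # hypothetical human contributions
    bot = bot_contribution("free_rider", others_last_round=humans)
    print(payoffs(humans + [bot]))
```

With any multiplier between 1 and the group size, each token contributed returns less than one token to the contributor but more than one token to the group as a whole, which is what makes free-riding individually tempting and cooperation collectively beneficial.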

Experiments show that participants behaved according to the same normative logic in both human and AI conditions, indicating that cooperation stemmed from group behaviour rather than individual partner identity. This supports a pattern of “normative equivalence,” where the mechanisms sustaining cooperation function similarly in mixed human-AI and all-human groups. The research establishes that cooperative norms are remarkably adaptable, extending to encompass artificial agents and suggesting that humans readily apply established social scripts even when interacting with non-human entities. These findings have significant implications for a future increasingly populated by AI, particularly in domains requiring collective action, such as addressing climate change, managing resources, and navigating geopolitical challenges.

The work opens exciting possibilities for integrating AI into cooperative systems without disrupting established social dynamics, potentially enhancing collective problem-solving capabilities. Nine out of ten organisations already report regular AI use, and two-thirds of people anticipate significant impacts within the next three to five years, highlighting the urgency of understanding human-AI interaction in group settings. By demonstrating that AI agents can be seamlessly integrated into existing cooperative frameworks, this study paves the way for designing AI systems that foster, rather than hinder, collaborative efforts. This research suggests that the key to successful human-AI collaboration lies not in altering fundamental social norms, but in leveraging their inherent flexibility to accommodate these novel participants.

AI Bots in Public Goods and Dilemma Games

Scientists investigated how artificial intelligence impacts cooperative behaviour within human groups [...] 0.95 for the 17-minute session. This methodology enabled the researchers to demonstrate that cooperative norms function similarly in mixed human-AI groups as they do in all-human groups, supporting the concept of normative equivalence and advancing our understanding of human-AI interaction.

AI Bots Do Not Alter Human Cooperation

Scientists conducted a study examining the influence of artificial intelligence on cooperative behaviour within human groups. Researchers employed a repeated four-player Public Goods Game (PGG) to investigate how the introduction of bots affects the emergence and maintenance of cooperative norms. The experiment involved 236 participants distributed across various conditions, each group comprising three humans and one bot programmed to exhibit either unconditional cooperation, conditional cooperation, or free-riding behaviour. Data shows that reciprocal group dynamics and behavioural inertia were the primary drivers of cooperation, operating consistently across all conditions.

Experiments revealed that cooperation levels did not differ significantly between groups labelled with human versus AI designations. The team measured norm persistence using a follow-up Prisoner’s Dilemma, finding no evidence of differences between human and human-AI hybrid groups. Participants’ behaviour adhered to the same normative logic regardless of whether group members were human or AI, demonstrating that cooperation depended on overall group behaviour rather than individual partner identity. Measurements confirm that contributions followed predictable patterns: responsiveness to others’ contributions, inertia in individual behaviour, and a gradual decline over time.
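The three patterns named above, together with the labelling comparison, can be written as a single illustrative regression. This is a sketch for intuition only and not the authors' reported specification; all symbols are assumptions introduced here.

```latex
c_{i,t} = \beta_0
        + \beta_1 \, \bar{c}_{-i,\,t-1}
        + \beta_2 \, c_{i,\,t-1}
        + \beta_3 \, t
        + \beta_4 \, \mathrm{AI}_{g}
        + u_i + \varepsilon_{i,t}
```

Here $c_{i,t}$ is participant $i$'s contribution in round $t$, $\bar{c}_{-i,t-1}$ is the average contribution of the other group members in the previous round, $\mathrm{AI}_g$ equals 1 when the bot in group $g$ is labelled as AI, and $u_i$ is a participant-level effect. In this notation, responsiveness to others corresponds to $\beta_1 > 0$, behavioural inertia to $\beta_2 > 0$, gradual decline to $\beta_3 < 0$, and the reported absence of a labelling effect to $\beta_4$ being statistically indistinguishable from zero.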

Results demonstrate a pattern of normative equivalence, where the mechanisms sustaining cooperation function similarly in mixed human-AI and all-human groups. Scientists recorded that post-task norm perceptions and expectations were closely aligned across all conditions, indicating a shared understanding of acceptable behaviour. The study’s design was a between-subjects experiment, with participants playing ten rounds of the PGG followed by a single Prisoner’s Dilemma. Groups were assigned to one of six conditions, crossing agent label (human vs AI) with bot strategy (Unconditional Cooperator, Conditional Cooperator, or Free-Rider).
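The six-cell design described above (two labels crossed with three strategies) can be enumerated in a few lines. This is a hedged sketch: the identifier names and the round-robin assignment helper are hypothetical, and the article does not describe how groups were actually allocated to conditions.

```python
from itertools import product

LABELS = ("human", "AI")
STRATEGIES = ("unconditional_cooperator", "conditional_cooperator", "free_rider")

# Full 2 x 3 between-subjects design: every label paired with every strategy.
CONDITIONS = [
    {"label": label, "strategy": strategy}
    for label, strategy in product(LABELS, STRATEGIES)
]


def assign_condition(group_index):
    """Hypothetical balanced assignment: cycle through the six conditions."""
    return CONDITIONS[group_index % len(CONDITIONS)]


if __name__ == "__main__":
    for i in range(len(CONDITIONS)):
        print(i, assign_condition(i))
```

Because the label and the strategy vary independently, any difference between the human-labelled and AI-labelled cells that share a strategy can be attributed to the label alone.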

The study provides evidence that cooperative norms are flexible enough to extend to artificial agents, potentially blurring the lines between humans and AI in collective decision-making. The results indicate that group behaviour is the strongest predictor of individual cooperation, irrespective of the bot’s label or strategy. The research establishes a theoretical baseline for future work introducing more adaptive and communicative AI agents, and suggests that transparent, norm-consistent behaviour may be more effective in fostering social integration than anthropomorphic design. Participants received a fixed payment of £6 for their involvement in the study.

AI Bots Mirror Human Cooperative Dynamics

Scientists have demonstrated that cooperative norms function similarly in groups containing artificial intelligence (AI) as they do in all-human groups. Researchers conducted an online experiment using a repeated four-player Public Goods Game to investigate how AI participants influence cooperative behaviour. The study involved 236 participants assigned to groups with either human or AI-labelled bots, which followed strategies of unconditional cooperation, conditional cooperation, or free-riding. Findings revealed that reciprocal group dynamics and behavioural inertia were the primary drivers of cooperation, operating consistently across all conditions.

Notably, cooperation levels did not differ significantly between groups with human versus AI-labelled partners, nor were there differences in norm persistence observed in a subsequent Prisoner’s Dilemma. Participants’ behaviour adhered to the same normative logic regardless of whether they interacted with humans or AI, suggesting that cooperation is determined by group behaviour rather than individual partner identity. This supports the concept of normative equivalence, where the mechanisms sustaining cooperation remain consistent when AI agents are introduced. The authors acknowledge a limitation in that the AI agents were not adaptive, communicative, or high in social presence, which may limit generalizability to more complex interactions. Future research should explore how these factors might affect the observed equivalence. This work establishes a baseline for understanding human-AI interaction in collective decision-making and suggests that transparent, norm-consistent AI behaviour may be more effective for social integration than attempts at human-like design.

👉 More information

🗞 Normative Equivalence in human-AI Cooperation: Behaviour, Not Identity, Drives Cooperation in Mixed-Agent Groups

🧠 ArXiv: https://arxiv.org/abs/2601.20487