Researchers are increasingly focused on the reliability of complex systems built with foundation models, yet these systems often suffer from issues like hallucination and flawed reasoning due to ad-hoc design practices. Minh-Dung Dao (University College Cork), Quy Minh Le (Vietnam National University), and Hoang Thanh Lam, alongside Duc-Trong Le, Quoc-Viet Pham (Trinity College Dublin) and Barry O’Sullivan, tackle this problem with a new system-theoretic framework for engineering robust agentic systems. Their work is significant because it moves beyond superficial taxonomies of design patterns, offering a rigorous methodology that deconstructs systems into five core subsystems and maps these to twelve reusable design patterns. By providing a foundational language and structured approach, this research promises to standardise communication and ultimately build more modular, understandable, and dependable autonomous systems.

Five Subsystems for Robust Agentic AI

The team developed a principled methodology to replace the ad-hoc design of current systems, which frequently leads to unreliable and brittle applications. The five subsystems provide a foundational language for standardising design communication amongst researchers and engineers, fostering more modular and understandable autonomous systems. The research also establishes a comprehensive taxonomy of challenges in agentic AI and maps it directly to a collection of 12 reusable design patterns. The authors show that these patterns, such as the Integrator for data consistency and the Controller for ethical oversight, offer concrete solutions applicable to a wide range of agentic AI challenges.

The work opens new avenues for creating more reliable and adaptable AI agents capable of tackling complex tasks and interacting effectively with dynamic environments. Specifically, the study presents a detailed analysis of the ReAct framework, demonstrating how the proposed design patterns can rectify systemic architectural deficiencies. By applying the framework, the researchers identified weaknesses in ReAct’s design and proposed ways to address them, with the aim of improving performance and robustness. This case study highlights the practical utility of the system-theoretic approach and its potential to enhance existing agentic AI systems.

The framework not only provides a theoretical foundation but also a practical methodology for implementing and evaluating agent designs, ensuring a more systematic and reliable development process. Furthermore, this work connects agentic design patterns to well-established software design patterns, bridging the gap between AI research and traditional software engineering. This connection allows for the application of proven software engineering principles to the development of agentic AI systems, resulting in more maintainable and scalable solutions. This innovative approach promises to accelerate the development of truly intelligent and trustworthy AI agents for a variety of real-world applications.

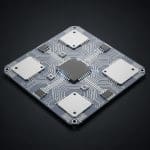

Agentic AI Decomposition into Functional Subsystems

The research team mapped a comprehensive taxonomy of agentic challenges directly onto the five-subsystem decomposition, yielding 12 reusable design patterns. The study uses the ReAct framework as a detailed case study to demonstrate how the proposed patterns rectify systemic architectural deficiencies. Specifically, the team identified instances where ReAct’s design lacked explicit components for perception or long-term learning, and then applied corresponding design patterns to address these shortcomings. The analysis involved a systematic decomposition of ReAct’s functionality, mapping each component to one of the five core subsystems.
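The decomposition step described above can be pictured as a simple coverage check. The sketch below is illustrative only: this article names just two of the five subsystems (Perception & Grounding and Action Execution), so the other three labels are placeholders, and the mapping of ReAct's thought/action/observation steps is an assumption rather than the authors' own analysis.

```python
# Only the first two labels appear in this article; the other three are
# placeholder names assumed for illustration.
SUBSYSTEMS = [
    "Perception & Grounding",
    "Action Execution",
    "Reasoning (assumed label)",
    "Learning (assumed label)",
    "Communication (assumed label)",
]

# Hypothetical mapping of ReAct's loop steps onto subsystems. The observation
# step is assumed to feed raw tool output straight back into reasoning.
REACT_COMPONENTS = {
    "thought": "Reasoning (assumed label)",
    "action": "Action Execution",
    "observation": "Reasoning (assumed label)",
}


def uncovered_subsystems(components, subsystems):
    """Return the subsystems that no component is mapped to."""
    covered = set(components.values())
    return [name for name in subsystems if name not in covered]


gaps = uncovered_subsystems(REACT_COMPONENTS, SUBSYSTEMS)
print(gaps)
```

Under this assumed mapping, the perception and learning subsystems come out uncovered, in line with the shortcomings the article attributes to ReAct. The result depends entirely on how the components are mapped.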

The researchers then assessed the original architecture against the identified challenges, noting areas of vulnerability and potential failure. The team subsequently applied the relevant design patterns, modifying the ReAct framework to incorporate explicit modules for perception, grounding, and adaptive learning. Performance was evaluated through qualitative assessment of the modified system’s robustness and reliability in handling complex tasks. By grounding agent design in systems theory, the study moves beyond ad-hoc approaches and facilitates the creation of agents with improved reasoning, reduced hallucination, and enhanced adaptability, ultimately paving the way for more trustworthy and effective AI applications.

Five Subsystems Enable Robust Autonomy

According to the authors, this architecture provides a stable yet flexible foundation for agent design. The five-way deconstruction is a deliberate trade-off, offering sufficient granularity for analysis while maintaining conceptual clarity. The team also describes the dynamic interaction flows between the subsystems as a cyclical process, beginning with Raw Inputs that the Perception & Grounding subsystem processes into Structured Percepts. In addition, the researchers present a catalogue of 12 Agentic Design Patterns (ADPs), systematising concepts like reflection, skill acquisition, and tool use into a cohesive set of architectural solutions for LLM-based agents.
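A minimal sketch of that cycle, assuming a text-only agent, might look like the following. The names `StructuredPercept`, `perceive`, `decide`, `act`, and `run_cycle` are hypothetical and do not come from the paper; the reasoning and action steps are stubs that exist only to close the loop.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StructuredPercept:
    """Hypothetical container for a cleaned-up observation."""
    source: str
    content: str


def perceive(raw_input: str, source: str) -> StructuredPercept:
    """Perception & Grounding step: collapse whitespace in the raw text."""
    return StructuredPercept(source=source, content=" ".join(raw_input.split()))


def decide(percept: StructuredPercept) -> str:
    """Stub reasoning step (assumption): stop once the percept says 'done'."""
    return "stop" if "done" in percept.content.lower() else "continue"


def act(step: int) -> str:
    """Stub action step (assumption): returns the next raw input."""
    return f"  step {step}   done " if step >= 2 else f"  step {step}  pending "


def run_cycle(first_input: str, max_steps: int = 5) -> List[StructuredPercept]:
    """Run perceive -> decide -> act until the stub decides to stop."""
    trace: List[StructuredPercept] = []
    raw = first_input
    for step in range(1, max_steps + 1):
        percept = perceive(raw, source="environment")
        trace.append(percept)
        if decide(percept) == "stop":
            break
        raw = act(step)  # the action's result becomes the next raw input
    return trace


trace = run_cycle("  hello   world ")
for percept in trace:
    print(percept)
```

The point of the sketch is the shape of the loop: every raw input passes through the perception step before any decision is made, and each action's result re-enters the cycle as new raw input.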

The team established a standardised vocabulary and representational structure, including Intent, Problem, and Solution, to describe component interactions and practical implications. According to the paper, the Integrator pattern addresses cognitive data quality by defining a validation pipeline within the Perception & Grounding subsystem, ensuring consistency of incoming information. The Tool Use pattern supports effective tool use by acting as a Proxy and Adapter for all external tool calls within the Action Execution subsystem, while the Controller pattern addresses value alignment by establishing a continuous monitoring loop as an Observer of the agent’s behaviour.
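The three patterns named above can be sketched in a few lines each. This is an illustration under stated assumptions, not the paper's reference design: the class names, method names, and result dictionaries are hypothetical, and letting the Controller veto a call (rather than only observe it) is a design choice made here for brevity.

```python
from typing import Any, Callable, Dict, List, Optional

Check = Callable[[Dict[str, Any]], Optional[str]]


class Integrator:
    """Validation pipeline: each check returns an error message or None."""

    def __init__(self, checks: List[Check]) -> None:
        self.checks = checks

    def validate(self, percept: Dict[str, Any]) -> List[str]:
        errors = []
        for check in self.checks:
            error = check(percept)
            if error is not None:
                errors.append(error)
        return errors


class Controller:
    """Observer of tool calls; records and rejects calls to blocked tools."""

    def __init__(self, blocked_tools: List[str]) -> None:
        self.blocked_tools = set(blocked_tools)
        self.violations: List[str] = []

    def notify(self, tool_name: str, args: Dict[str, Any]) -> bool:
        if tool_name in self.blocked_tools:
            self.violations.append(tool_name)
            return False
        return True


class ToolProxy:
    """Proxy/Adapter: one entry point and one result shape for every tool."""

    def __init__(self, tools: Dict[str, Callable[..., Any]],
                 observers: List[Controller]) -> None:
        self.tools = tools
        self.observers = observers

    def call(self, tool_name: str, **args: Any) -> Dict[str, Any]:
        if tool_name not in self.tools:
            return {"ok": False, "error": "unknown tool"}
        # Notify every observer, even if an earlier one already objected.
        verdicts = [obs.notify(tool_name, args) for obs in self.observers]
        if not all(verdicts):
            return {"ok": False, "error": "blocked by controller"}
        try:
            return {"ok": True, "value": self.tools[tool_name](**args)}
        except Exception as exc:  # adapt tool failures to the same shape
            return {"ok": False, "error": str(exc)}


def require_content(percept: Dict[str, Any]) -> Optional[str]:
    return None if percept.get("content") else "missing content"


def require_source(percept: Dict[str, Any]) -> Optional[str]:
    return None if percept.get("source") else "missing source"


integrator = Integrator([require_content, require_source])
errors = integrator.validate({"content": "42 results", "source": ""})

controller = Controller(blocked_tools=["delete_files"])
proxy = ToolProxy(
    {"add": lambda a, b: a + b, "delete_files": lambda: "deleted"},
    [controller],
)
ok_result = proxy.call("add", a=2, b=3)
blocked_result = proxy.call("delete_files")
```

Routing every external call through a single proxy is what makes the monitoring loop continuous in this sketch: the Controller sees each call before it runs, without the tools themselves knowing they are being observed.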

Agent Design Patterns for Robust Autonomy

The significance of this research lies in its shift from ad-hoc development practices to a principled engineering approach for agentic AI. The practical utility of the framework was demonstrated through a case study of ReAct, which identified systemic weaknesses and proposed patterns for enhanced robustness and adaptability. The authors acknowledge limitations, including the potential computational overhead introduced by sophisticated patterns and the fact that the work does not address broader societal impacts such as accountability. Future research should investigate the trade-offs associated with pattern implementation and explore the ethical implications of large-scale autonomous systems.

👉 More information

🗞 Agentic Design Patterns: A System-Theoretic Framework

🧠 ArXiv: https://arxiv.org/abs/2601.19752