Acoustic echo cancellation (AEC) remains a significant challenge in real-world communication systems, where residual echo degrades audio clarity. Yiheng Jiang, Biao Tian, and Haoxu Wang, all from Tongyi Lab at Alibaba Group, alongside Shengkui Zhao, Bin Ma, and Daren Chen, present E2E-AEC, an end-to-end neural network approach that tackles this problem without conventional linear prediction or precise delay estimation. Their method supports streaming inference, progressively learns to suppress echo, and transfers knowledge from a pre-trained linear AEC model. A weight-based loss function guides the network toward accurate alignment between the reference and microphone signals, while voice activity detection (VAD) further refines speech quality. Together these components are a step toward robust and efficient echo removal across diverse acoustic environments.

Neural network streamlines acoustic echo cancellation

This research, conducted by a team at Alibaba Group's Tongyi Lab, introduces a neural network-based approach aimed at improving audio quality in voice interaction systems. A key component is progressive learning, a staged training strategy. It first targets removal of the dominant echo and then refines the output to eliminate residual echo, noise, and reverberation. This lets the model improve speech quality incrementally, with each stage concentrating on subtler acoustic imperfections. The network is also initialised from a pre-trained linear AEC model. This knowledge transfer gives it a strong starting point and improves both robustness and performance.
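To make the staged idea concrete, here is a minimal numpy sketch of how progressive targets and a combined loss might be set up. It assumes two stages. The stage-1 target keeps near-end reverberation and noise, so only the dominant echo is removed. The stage-2 target is the dry, anechoic near-end speech. The function names, the two-stage split, and the per-stage weights are all hypothetical and not taken from the paper.

```python
import numpy as np

def progressive_targets(near_reverb, noise, near_dry):
    """Hypothetical per-stage targets for a two-stage progressive scheme.

    Stage 1 only has to remove the dominant echo, so its target still contains
    near-end reverberation and background noise. Stage 2 must also remove
    residual echo, noise and reverberation, so its target is dry speech.
    """
    stage1 = near_reverb + noise
    stage2 = near_dry
    return [stage1, stage2]

def progressive_loss(stage_outputs, stage_targets, weights=(0.5, 1.0)):
    """Weighted sum of per-stage mean squared errors (weights are assumed)."""
    if not (len(stage_outputs) == len(stage_targets) == len(weights)):
        raise ValueError("need one output, one target and one weight per stage")
    total = 0.0
    for out, tgt, w in zip(stage_outputs, stage_targets, weights):
        total += w * float(np.mean((out - tgt) ** 2))
    return total
```

In a real training loop the stage outputs would come from intermediate points in the network. Weighting the final stage more heavily is one common choice, not something the source specifies.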

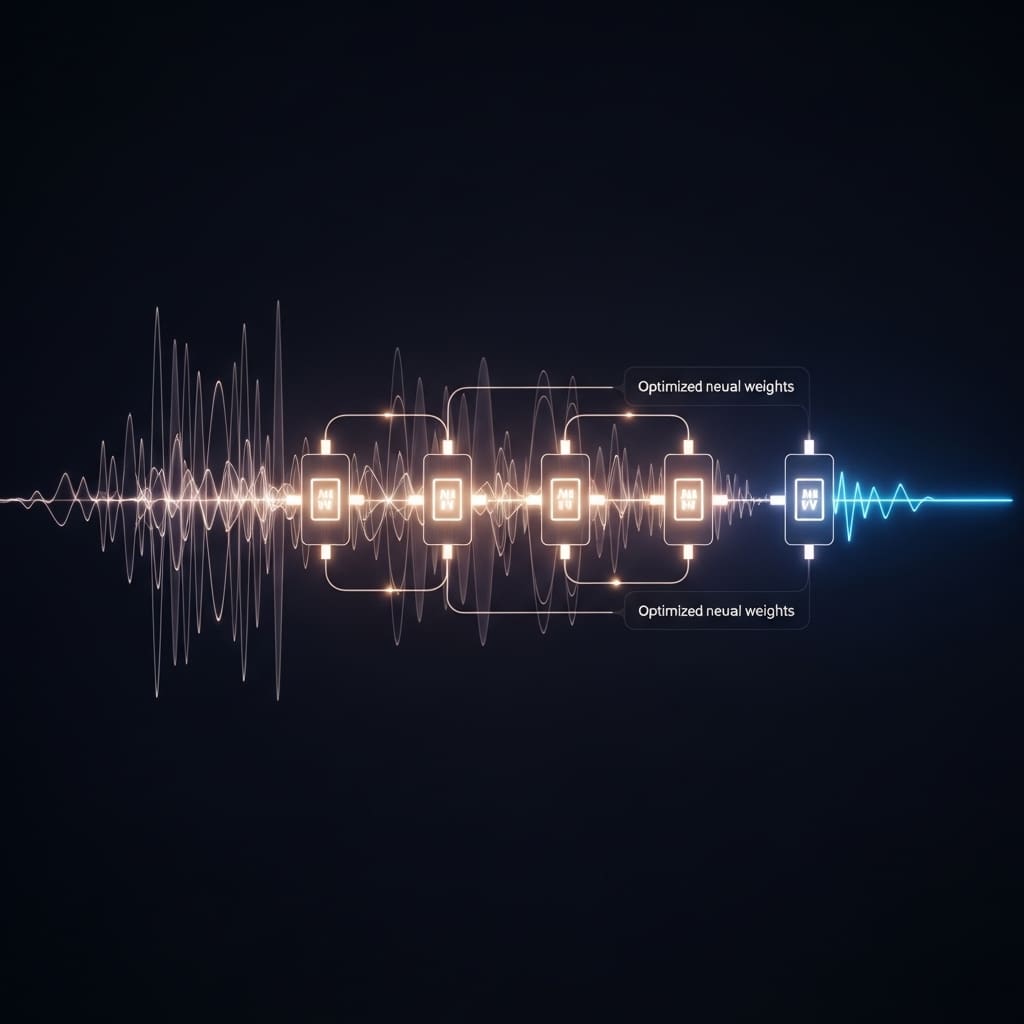

Accurate alignment between the far-end reference and the microphone signal is crucial for effective echo cancellation, particularly when time delays vary. E2E-AEC handles this inside the network, with a weight-based loss guiding the alignment, instead of relying on a separate delay-estimation stage. The model takes short-time Fourier transform (STFT) features as input, using a frame length of 20 ms and a frame shift of 10 ms. Each recurrent neural network (RNN) block consists of two gated recurrent unit (GRU) layers. The blocks follow the TF-GridNet design to capture both full-band and sub-band dependencies, with a hidden dimension of 64 and a kernel size of 4. The model has 1.2 million parameters and was validated through experiments on public datasets, demonstrating the potential of end-to-end approaches for AEC.
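The frame settings translate into concrete sizes. The audio is resampled to 24 kHz before training. At that rate, a 20 ms frame is 480 samples and a 10 ms shift is 240 samples, which gives 241 frequency bins for a one-sided FFT of the same length. The sketch below assumes a periodic Hann window and an FFT size equal to the frame length. Neither choice is stated in the source.

```python
import numpy as np

SAMPLE_RATE = 24_000        # rate the audio is resampled to before training
FRAME_MS, SHIFT_MS = 20, 10

def stft_params(sample_rate, frame_ms=FRAME_MS, shift_ms=SHIFT_MS):
    """Return (window length, hop length, number of one-sided frequency bins)."""
    win = sample_rate * frame_ms // 1000
    hop = sample_rate * shift_ms // 1000
    n_bins = win // 2 + 1   # one-sided spectrum when the FFT size equals win (assumed)
    return win, hop, n_bins

def stft(signal, win, hop):
    """Frame the signal with a periodic Hann window (assumed) and take a one-sided FFT."""
    if len(signal) < win:
        raise ValueError("signal shorter than one frame")
    window = np.hanning(win + 1)[:-1]               # periodic Hann of length win
    n_frames = 1 + (len(signal) - win) // hop
    frames = np.stack([signal[i * hop : i * hop + win] * window
                       for i in range(n_frames)])
    return np.fft.rfft(frames, axis=-1)             # shape: (n_frames, n_bins)

win, hop, n_bins = stft_params(SAMPLE_RATE)         # (480, 240, 241)
spec = stft(np.zeros(SAMPLE_RATE), win, hop)        # 1 s of audio -> shape (99, 241)
```

A model in the TF-GridNet style would typically consume the real and imaginary parts of such spectra from both the microphone and reference channels. The exact feature layout used by E2E-AEC is not given here.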

Neural Network Echo Cancellation with Progressive Learning

The system improves echo suppression step by step through progressive learning. It takes short-time Fourier transform (STFT) features as input. The microphone signal is modelled as $y(n) = r(n) * h_r(n) + x(n) * h_x(n) + v(n)$. Here $*$ denotes convolution and $n$ indexes time samples. $r(n)$ is the far-end reference signal, $x(n)$ is the near-end speech, and $v(n)$ is background noise. $h_r(n)$ and $h_x(n)$ are the acoustic transfer functions of the echo path and the near-end path. Because the delay between $r(n)$ and its echo is not estimated explicitly, the network learns the alignment implicitly, which mitigates performance degradation when time delays are large. Experiments on public datasets show that E2E-AEC suppresses echo, mitigates reverberation, and reduces background noise simultaneously. As depicted in Figure 1, the architecture takes STFT features as input and outputs the anechoic near-end speech $x(n)$, jointly tackling noise reduction and echo cancellation.
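The signal model can be simulated directly, which is handy for building toy test cases. In this sketch the signals and impulse responses are made-up stand-ins. $h_r(n)$ is a single scaled impulse at a hypothetical 100 ms delay, so the echo is simply a delayed, attenuated copy of the far-end signal. That is the kind of offset the network has to absorb without an explicit delay estimator.

```python
import numpy as np

def simulate_mic(r, x, h_r, h_x, v):
    """Return y(n) = r(n)*h_r(n) + x(n)*h_x(n) + v(n), truncated to len(x).

    Assumes r, x and v have the same length.
    """
    n = len(x)
    echo = np.convolve(r, h_r)[:n]   # far-end signal through the echo path
    near = np.convolve(x, h_x)[:n]   # near-end speech through the room
    return echo + near + v[:n]       # plus additive background noise

fs = 24_000
rng = np.random.default_rng(0)
r = rng.standard_normal(fs)          # 1 s of toy far-end reference
x = rng.standard_normal(fs)          # 1 s of toy near-end "speech"
v = 0.01 * rng.standard_normal(fs)   # low-level noise

delay = fs // 10                     # hypothetical 100 ms echo delay
h_r = np.zeros(delay + 1)
h_r[delay] = 0.5                     # toy echo path: delayed, attenuated impulse
h_x = np.array([1.0, 0.3, 0.1])      # toy near-end room response

y = simulate_mic(r, x, h_r, h_x, v)
```

Real room impulse responses are thousands of taps long and time-varying, so this only illustrates the structure of the model, not realistic acoustics.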

Neural network cancels echo without linear processing

All audio was downsampled from 48 kHz to 24 kHz, a change deemed perceptually negligible, and upsampled back to 48 kHz for evaluation. Adding VAD training (Exp 5) slightly improved speech quality on the double-talk (DT) subset. Combining it with a masking step (Exp 6) raised ERLE to 78.69 dB in far-end single-talk (FarST) scenarios, the strongest echo suppression among the configurations reported. A detailed analysis of time alignment compared loss functions such as MSE and cross-entropy. It showed that the Attention+MSE model achieved the smallest estimation errors, with a mean of -3 ms and a variance of 94 ms. The team also compared VAD predictions taken from different layers. Using the fifth layer for VAD prediction and masking performed best, and this is the configuration that reached the 78.69 dB ERLE.
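ERLE is conventionally defined as the energy ratio in dB between the microphone signal and the processed output during far-end single talk, when ideally nothing should remain. The sketch below uses that standard definition. It adds a hypothetical hard VAD gate that zeroes output frames where the predicted near-end activity falls below a threshold. It is only meant to show why VAD-based masking can push ERLE up so sharply in FarST. The paper's actual masking process, threshold, and frame handling are not described here, so those details are assumptions.

```python
import numpy as np

def erle_db(mic, out, eps=1e-12):
    """ERLE = 10*log10(energy of mic / energy of output), for far-end single talk."""
    return 10.0 * np.log10((np.sum(mic ** 2) + eps) / (np.sum(out ** 2) + eps))

def apply_vad_mask(out, vad_prob, hop, threshold=0.5):
    """Zero every frame whose near-end VAD probability is below threshold.

    Hypothetical hard gate: each VAD value covers `hop` samples, and samples
    past the last VAD frame are treated as inactive.
    """
    gate = np.repeat((np.asarray(vad_prob) >= threshold).astype(float), hop)
    gate = np.pad(gate, (0, max(0, len(out) - len(gate))))[: len(out)]
    return out * gate

rng = np.random.default_rng(0)
mic = rng.standard_normal(24_000)          # 1 s of toy echo-only microphone signal
residual = 0.01 * mic                      # output with echo attenuated by 40 dB
print(round(erle_db(mic, residual), 2))    # 40.0

# In FarST the near-end talker is silent, so a good VAD outputs low probabilities
# and the gate removes the residual echo entirely.
masked = apply_vad_mask(residual, np.zeros(100), hop=240)
print(np.count_nonzero(masked))            # 0
```

A hard gate like this trades off against double-talk quality: any near-end frame the VAD misses is silenced too. That is why the choice of which layer drives the VAD matters.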

Neural Network Achieves Streaming Echo Cancellation

This approach combines progressive learning, which refines echo suppression gradually, with knowledge transfer from a pre-trained linear AEC model used for initialisation. Experiments on public datasets validated the E2E-AEC system, showing improvements in both AECMOS and ERLE scores across diverse acoustic conditions. The authors acknowledge that the model needs additional time to converge at certain transitions in the test data, such as at 6 and 10 seconds. Future research could focus on speeding up convergence during such transitions. It could also further investigate which intermediate layers to use for voice activity detection and masking, given that the fifth layer gave the best results in their experiments.

👉 More information

🗞 E2E-AEC: Implementing an end-to-end neural network learning approach for acoustic echo cancellation

🧠 ArXiv: https://arxiv.org/abs/2601.16774