Researchers are tackling the persistent challenge of achieving precise camera control in AI-generated videos. Wenhang Ge and Guibao Shen from HKUST(GZ) and Jiawei Feng from HKUST, together with colleagues including Luozhou Wang, Hao Lu, and Xingye Tian from the Kling Team at Kuaishou Technology, present CamPilot, an approach that uses reward feedback learning to improve how closely generated videos follow a specified camera trajectory. The work is significant because current reward feedback methods cannot accurately assess video-camera alignment, are computationally expensive, and largely neglect 3D geometric information. CamPilot introduces an efficient, camera-aware 3D decoder that quantifies the reward by lifting video latents into 3D representations and optimising pixel-level consistency, enabling more realistic and controllable video generation.

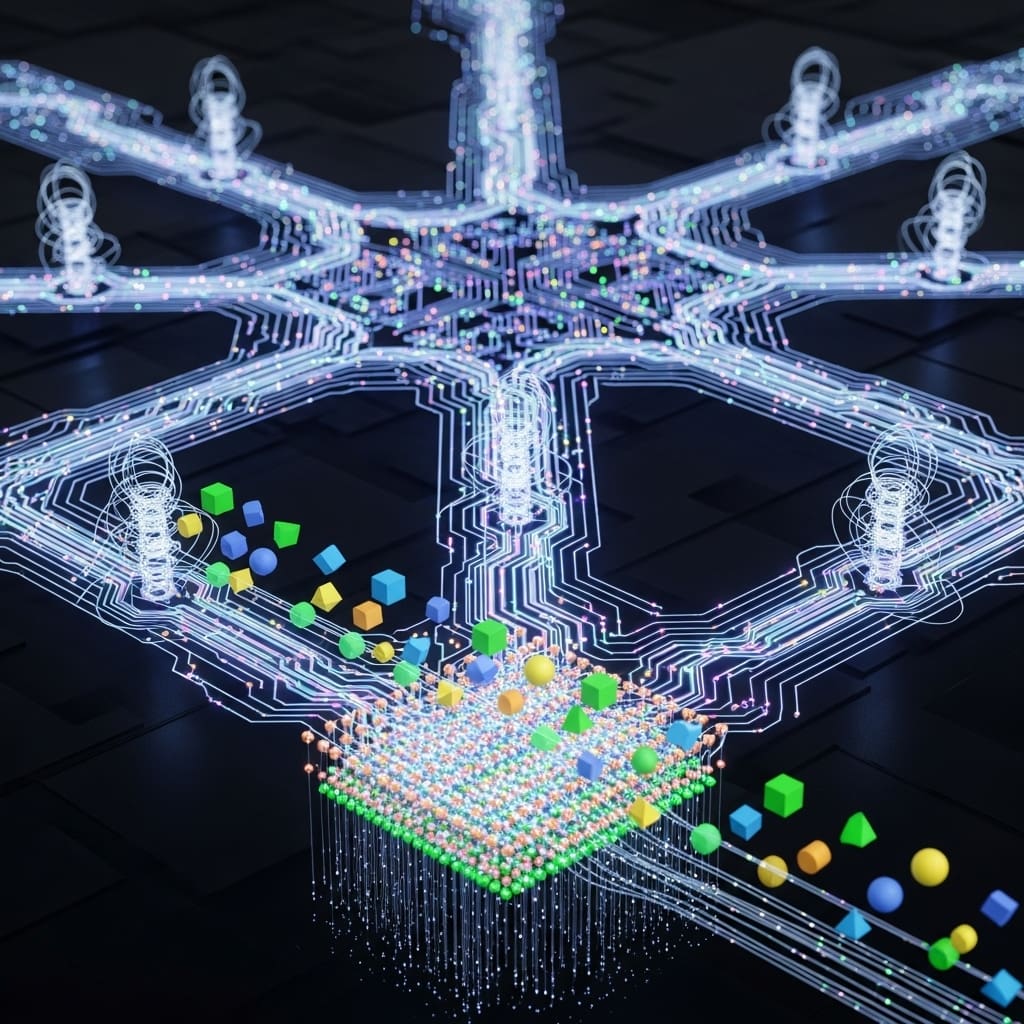

3D Gaussian Decoder for Camera Control

Researchers have reported a substantial improvement in camera control for video diffusion models, making it easier to generate realistic, customisable video content. The team from HKUST(GZ), HKUST, and Kuaishou Technology addressed the limited camera controllability of existing approaches by building on Reward Feedback Learning (ReFL). The work introduces an efficient camera-aware 3D decoder that transforms video latents into 3D representations, specifically 3D Gaussians, for precise reward quantification. Crucially, the camera pose is used not only as an input but also as a projection parameter within the decoding process, which is what allows the decoder to assess whether the video content agrees with the camera movement.

The core innovation lies in the decoder's ability to expose misalignment: when the video latent and the camera pose disagree, the disagreement appears as geometric distortion in the 3D structure, which in turn produces blurry renderings. The researchers then explicitly optimise pixel-level consistency between rendered novel views and ground-truth images, so that this distortion is penalised through the reward signal. Because diffusion models are stochastic, they also introduce a visibility term that restricts supervision to deterministic regions identified through geometric warping, leaving the model free to generate plausible content where the conditioning does not determine it. Computing the reward this way avoids decoding latents into full RGB videos, a substantial efficiency gain.
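The visibility-restricted pixel-consistency reward can be sketched as a masked photometric error. This is a minimal sketch under stated assumptions: the function name `masked_photometric_reward`, the per-pixel L1 error, and the convention that the reward is the negative mean error over visible pixels are illustrative choices, not details taken from the paper.

```python
import numpy as np


def masked_photometric_reward(rendered: np.ndarray,
                              target: np.ndarray,
                              visibility: np.ndarray,
                              eps: float = 1e-8) -> float:
    """Hypothetical reward: negative mean L1 error over visible pixels only.

    rendered, target: (H, W, 3) images in [0, 1].
    visibility: (H, W) mask, 1 where the pixel is determined by geometry
    (observed in the reference views), 0 where content may be hallucinated.
    A higher reward means better pixel-level consistency.
    """
    per_pixel = np.abs(rendered - target).mean(axis=-1)  # (H, W) L1 averaged over RGB
    weight = visibility.astype(np.float64)
    # Average only over supervised pixels; masked-out pixels contribute nothing.
    error = (per_pixel * weight).sum() / (weight.sum() + eps)
    return -float(error)
```

Because pixels outside the mask carry zero weight, content the model invents in unobserved regions does not lower the reward, while errors in visible regions do.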

Experiments on the RealEstate10K and WorldScore benchmarks demonstrate the effectiveness of the method, showing that it generates high-quality, temporally coherent videos with precise camera control. The research also establishes a framework for world-consistent video generation and 3D scene reconstruction, supporting custom camera trajectories and efficient 3D reconstruction from generated video frames. Because 3D Gaussians make it cheap to evaluate video-camera consistency, the approach overcomes a limitation of previous methods that neglected the underlying 3D geometric structure. Potential applications include virtual reality, robotics, game development, and architectural visualisation, where both visual fidelity and accurate 3D representation are paramount. The CamPilot project page, at [https://a-bigbao.github.io/CamPilot/](https://a-bigbao.github.io/CamPilot/), showcases the potential of this technology for user-friendly and highly customisable content creation tools. Future work could extend the framework to more complex scenes and dynamic environments.

Camera-aware 3D Gaussian decoding for video ReFL

The researchers tackled the limited camera controllability of video generation with a Reward Feedback Learning (ReFL) approach tailored to video diffusion models. The study addresses three limitations of existing ReFL methods: they cannot assess video-camera alignment, they incur substantial computational overhead from decoding latent variables into RGB videos, and they neglect 3D geometric information during video decoding. To overcome these, the team engineered a camera-aware 3D decoder that efficiently translates video latents and camera poses into 3D Gaussians (3DGS) for reward quantification, using the camera pose not only as an input but also as a projection parameter.
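Using the camera pose as a projection parameter means the pose decides where each decoded 3D Gaussian lands in a rendered view. The hypothetical helper below shows only the standard pinhole projection of Gaussian centres, assuming a world-to-camera convention `x_cam = R @ x_world + t` and intrinsics `K`; a full Gaussian-splatting renderer also projects each covariance, and none of these names come from the paper.

```python
import numpy as np


def project_points(points_world: np.ndarray, K: np.ndarray,
                   R: np.ndarray, t: np.ndarray):
    """Project (N, 3) world points (e.g. Gaussian centres) with a pinhole camera.

    R (3, 3), t (3,): world-to-camera rotation and translation (assumed convention).
    K (3, 3): camera intrinsics.
    Returns (N, 2) pixel coordinates and (N,) camera-space depths.
    """
    cam = points_world @ R.T + t      # points expressed in the camera frame
    depth = cam[:, 2]
    uvw = cam @ K.T                   # homogeneous pixel coordinates
    uv = uvw[:, :2] / uvw[:, 2:3]     # perspective divide
    return uv, depth


K = np.array([[100.0, 0.0, 32.0], [0.0, 100.0, 32.0], [0.0, 0.0, 1.0]])
point = np.array([[0.0, 0.0, 2.0]])
uv_ok, _ = project_points(point, K, np.eye(3), np.zeros(3))
uv_shifted, _ = project_points(point, K, np.eye(3), np.array([0.1, 0.0, 0.0]))
```

Here the point projects to pixel (32, 32) under the identity pose and to (37, 32) after a 0.1-unit camera shift. A pose that disagrees with the video content therefore places the decoded geometry in the wrong part of the image.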

By design, misalignment between the video latent and the camera pose induces geometric distortions in the 3D structure that appear as blurry renderings, and this is what makes the decoder usable as a source of reward. To quantify the distortion, the method optimises pixel-level consistency between rendered novel views and the corresponding ground-truth images, directly linking rendering quality to camera alignment. To account for the inherent stochasticity of generation, a visibility term focuses supervision on deterministic regions derived through geometric warping, making the reward signal more robust. The result is a computationally efficient evaluation of video-camera consistency that avoids the expensive step of running a full video decoder to produce RGB frames.
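Why misalignment turns into blur can be illustrated with a deliberately simplified 1D analogy, not the paper's decoder: integer shifts stand in for camera poses, and averaging frames into a shared grid stands in for fusing them into 3D Gaussians. All names and values below are hypothetical.

```python
import numpy as np

# Toy 1D "scene": a sharp step edge on a 32-pixel world grid (hypothetical).
WORLD = np.zeros(32)
WORLD[16:] = 1.0


def observe(world, true_offsets):
    """Each frame sees the world shifted by its true (integer) camera offset."""
    return [np.roll(world, -o) for o in true_offsets]


def fuse(frames, claimed_offsets):
    """Stand-in for the decoder: move every frame back into the shared grid
    using the *claimed* pose, then average into one representation."""
    return np.mean([np.roll(f, o) for f, o in zip(frames, claimed_offsets)], axis=0)


def render_error(fused, world):
    """Photometric L1 error of the reference view (offset 0) vs. ground truth."""
    return float(np.abs(fused - world).mean())


frames = observe(WORLD, [0, 2, 4])
aligned = fuse(frames, [0, 2, 4])       # claimed poses agree with the content
misaligned = fuse(frames, [0, 3, 2])    # claimed poses disagree with the content
print(render_error(aligned, WORLD))     # 0.0
print(render_error(misaligned, WORLD))  # ~0.0625: the step edges are smeared
```

With consistent poses the fused signal reproduces the sharp edge exactly; with inconsistent poses the frames are misregistered, the average contains intermediate values around each edge, and the rendered reference view no longer matches the ground truth, so a photometric reward drops.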

Experiments on the RealEstate10K and WorldScore benchmarks demonstrate the effectiveness of the proposed method, with reported improvements in both camera controllability and video quality. The approach enables precise control over camera trajectories, supports user-friendly and customisable content creation, and helps bridge the gap between 2D video generation and 3D/4D reconstruction. The 3D Gaussian representation supports efficient novel-view rendering, and a photometric loss on these renderings provides robust supervision, ultimately facilitating high-quality, geometrically consistent video generation.
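Rendering from 3D Gaussians typically relies on front-to-back alpha compositing, the standard blending rule in 3D Gaussian Splatting. The sketch below applies it to the Gaussians covering a single pixel. It assumes each Gaussian's alpha has already been evaluated (in 3DGS this combines a learned opacity with the projected 2D Gaussian falloff), and computing depth with the same weights is one common choice, not necessarily the paper's.

```python
import numpy as np


def composite(colors: np.ndarray, alphas: np.ndarray, depths: np.ndarray):
    """Front-to-back alpha compositing of N Gaussians overlapping one pixel.

    colors: (N, 3); alphas: (N,) in [0, 1]; depths: (N,) camera-space depths.
    Gaussians are sorted near-to-far before blending.
    Returns the pixel colour, an opacity-normalised depth, and total opacity.
    """
    order = np.argsort(depths)
    c, a, z = colors[order], alphas[order], depths[order]
    # Transmittance reaching each Gaussian: T_i = prod_{j<i} (1 - a_j).
    T = np.concatenate([[1.0], np.cumprod(1.0 - a)[:-1]])
    w = a * T                                    # per-Gaussian blending weights
    color = (w[:, None] * c).sum(axis=0)
    opacity = w.sum()
    depth = (w * z).sum() / max(opacity, 1e-8)   # expected depth along the ray
    return color, depth, opacity
```

For a half-transparent red Gaussian at depth 1 in front of an opaque blue one at depth 3, both weights are 0.5, so the pixel colour is (0.5, 0, 0.5) and the expected depth is 2. Every step is differentiable, which is what lets a photometric loss on rendered views supervise the underlying representation.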

3D Gaussian Decoding Quantifies Video-Camera Misalignment

The team developed an efficient camera-aware 3D decoder to improve video-camera alignment and controllability, addressing the limitations of existing reward feedback learning (ReFL) approaches in both reward accuracy and computational cost. Decoding the video latent into 3D representations, specifically 3D Gaussians, allows the reward to be quantified from geometric distortions, that is, the blurriness that results when the video latent and camera pose are misaligned. Pixel-level consistency between rendered novel views and ground-truth sequences then serves as a quantifiable reward signal to optimise.

Misalignment is measured by using the camera pose not only as an input but also as a projection parameter within the 3D decoder. Discrepancies between the video latent and camera pose therefore manifest directly as geometric distortions in the reconstructed 3D structure, providing a clear signal of alignment. To account for the stochastic nature of video generation, a visibility term restricts supervision to deterministic regions derived through geometric warping, focusing reward computation on areas where accurate alignment is crucial. This formulation avoids penalising creative generation in unconstrained areas.

Tests on the RealEstate10K and WorldScore benchmarks demonstrate the effectiveness of the proposed method. The framework lifts the video latent and camera pose into 3D Gaussians, which can be rendered to 2D images for precise evaluation of alignment. The reported results show that minimising pixel-level differences between rendered and ground-truth videos, specifically within deterministic regions, improves both camera controllability and visual quality. This is achieved with a visibility-aware reward objective that restricts reward computation to visible, deterministic regions, avoiding penalties in hallucinated or occluded areas.

Furthermore, the researchers render depth maps from the 3D Gaussians and combine them with camera poses to determine, via geometric warping, which pixels are visible across frames. The camera-aware 3D decoder thus performs efficient feed-forward 3D scene reconstruction while also serving as the medium for reward computation.
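The visibility computation can be sketched as a depth-based reprojection test. In this hypothetical helper, each target-view pixel is back-projected with its rendered depth, moved into a reference camera, and kept only if it lands inside the reference image at a consistent depth. Shared intrinsics, nearest-pixel lookup, and a 5% relative depth tolerance are assumptions; the exact warping procedure and thresholds used in the paper are not described in this article.

```python
import numpy as np


def visibility_mask(depth_tgt, depth_ref, K, R, t, rel_tol=0.05):
    """Mark target-view pixels that are also observed in a reference view.

    depth_tgt, depth_ref: (H, W) depth maps (e.g. rendered from 3D Gaussians).
    K: (3, 3) intrinsics, assumed shared by both views.
    R, t: rigid transform from target-camera to reference-camera coordinates.
    """
    H, W = depth_tgt.shape
    v, u = np.mgrid[0:H, 0:W]
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(float)
    # Back-project every target pixel to 3D using its depth.
    pts = (pix @ np.linalg.inv(K).T) * depth_tgt.reshape(-1, 1)
    # Transform into the reference camera and project to its image plane.
    pts_ref = pts @ R.T + t
    z = pts_ref[:, 2]
    uvw = pts_ref @ K.T
    safe_z = np.where(z > 1e-6, uvw[:, 2], 1.0)   # avoid dividing by ~0
    ur = np.round(uvw[:, 0] / safe_z).astype(int)
    vr = np.round(uvw[:, 1] / safe_z).astype(int)
    inside = (z > 1e-6) & (ur >= 0) & (ur < W) & (vr >= 0) & (vr < H)
    mask = np.zeros(H * W, dtype=bool)
    idx = np.flatnonzero(inside)
    ref_z = depth_ref[vr[idx], ur[idx]]
    # Occlusion test: the reference view must see (roughly) the same surface.
    mask[idx] = np.abs(ref_z - z[idx]) <= rel_tol * z[idx]
    return mask.reshape(H, W)
```

Pixels that warp outside the reference frame, or behind a nearer surface, receive a zero mask and are excluded from the reward; the resulting mask is the kind of visibility input a masked photometric reward would consume.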

👉 More information

🗞 CamPilot: Improving Camera Control in Video Diffusion Model with Efficient Camera Reward Feedback

🧠 ArXiv: https://arxiv.org/abs/2601.16214