Scientists are tackling the challenge of improving text-video retrieval, where current systems frequently fail to focus on the most important visual elements. Zequn Xie, Xin Liu, Boyun Zhang, and colleagues, from Zhejiang University and Southwestern University of Finance and Economics, present a new approach inspired by human vision in their paper, ‘HVD: Human Vision-Driven Video Representation Learning for Text-Video Retrieval’. Their Human Vision-Driven (HVD) model addresses the problem of ‘blind’ feature interaction by mimicking how humans selectively attend to key visual information: it first identifies important frames, then compresses patch features into meaningful entities. The research reports state-of-the-art performance on five benchmarks and demonstrates a system that captures human-like visual focus, which could make video search more effective and intuitive.

This innovative approach allows the model to focus on key visual elements, significantly improving retrieval accuracy. The core of the HVD model lies in its ability to simulate both macro and micro-perception, mirroring human visual attention. FFSM, the frame selection module, operates at a macro level, identifying and selecting key frames to eliminate temporal redundancy and irrelevant scenes, a process akin to our initial, broad scan of a video.

This coarse-to-fine approach ensures that the model focuses on the most informative parts of the video, enhancing the accuracy of cross-modal feature alignment. The team employed the CLIP model as a backbone to extract patch and frame features, then used the FFSM to select relevant frames based on their similarity to the text query. Crucially, the FFSM reduces redundant information while preserving the patch features needed for fine-grained alignment, improving feature accuracy at the macro level. Further innovation lies within the PFCM, which compresses patch features from the selected frames, extracting visual entities that align with textual word features.
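The frame selection step described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that each frame and the text query are already embedded as plain vectors, that relevance is measured by cosine similarity, and that a fixed share of frames is kept. The names `select_frames` and `keep_ratio` are hypothetical and do not come from the paper.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def select_frames(frame_feats, text_feat, keep_ratio=0.5):
    """Keep the frames most similar to the text query (hypothetical helper).

    keep_ratio is an assumed knob for this sketch, not a parameter
    taken from the paper.
    """
    scores = [cosine(f, text_feat) for f in frame_feats]
    k = max(1, math.ceil(len(frame_feats) * keep_ratio))
    # Rank frames by similarity, keep the top k, then restore temporal order.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return sorted(top), scores

# Toy example: four 2-D "frame embeddings" and one "text embedding".
frames = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
text = [1.0, 0.0]
kept, scores = select_frames(frames, text, keep_ratio=0.5)
print(kept)  # indices of the retained frames, in temporal order
```

Returning the kept indices in temporal order means that later steps still see the surviving frames as a sequence, with their patch features untouched.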

Using DPC-KNN, a density peaks clustering algorithm based on K-nearest neighbours, the PFCM aggregates patch features, while importance scores and attention weights re-represent these features, emphasising crucial patches and their spatial relationships. Working together, the two modules eliminate the need for complex text alignment strategies, streamlining the retrieval process and boosting efficiency. The researchers present this as a new paradigm for text-video retrieval that could lead to more intelligent and intuitive video search applications.
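To make the clustering step more concrete, here is a minimal sketch of DPC-KNN as it is commonly formulated, applied to toy 2-D "patch features". It is an illustration under stated assumptions, not the PFCM itself: clusters are merged by a plain average rather than the importance scores and attention weights described above, and the function names `dpc_knn` and `merge` are hypothetical.

```python
import math

def sqdist(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def dpc_knn(points, num_clusters, k=2):
    """Density peaks clustering with KNN-based density (assumes len(points) > k)."""
    n = len(points)
    d2 = [[sqdist(p, q) for q in points] for p in points]
    # Local density: exp(-mean squared distance to the k nearest neighbours).
    rho = []
    for i in range(n):
        nearest = sorted(d2[i][j] for j in range(n) if j != i)[:k]
        rho.append(math.exp(-sum(nearest) / len(nearest)))
    # Delta: distance to the nearest point with strictly higher density;
    # a densest point gets its largest distance to any other point.
    delta = []
    for i in range(n):
        higher = [math.sqrt(d2[i][j]) for j in range(n) if rho[j] > rho[i]]
        delta.append(min(higher) if higher else math.sqrt(max(d2[i])))
    # Points that are both dense and far from denser points become centres.
    score = [r * d for r, d in zip(rho, delta)]
    centers = sorted(range(n), key=lambda i: score[i], reverse=True)[:num_clusters]
    labels = [min(range(num_clusters), key=lambda c: d2[i][centers[c]])
              for i in range(n)]
    for c, i in enumerate(centers):
        labels[i] = c  # each centre always belongs to its own cluster
    return centers, labels

def merge(points, labels, num_clusters):
    """Average the members of each cluster (a simplification of the PFCM)."""
    dim = len(points[0])
    merged = []
    for c in range(num_clusters):
        members = [p for p, lab in zip(points, labels) if lab == c]
        merged.append([sum(m[t] for m in members) / len(members)
                       for t in range(dim)])
    return merged

# Toy example: two well-separated groups of three "patches" each.
patches = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
centers, labels = dpc_knn(patches, num_clusters=2, k=2)
entities = merge(patches, labels, num_clusters=2)
print(centers, labels, entities)
```

Six patch vectors are compressed into two "entity" vectors here. Applying such a step repeatedly with a shrinking `num_clusters` would progressively reduce the number of features that interact with the text.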

Frame and Patch Feature Selection Modules Are Key

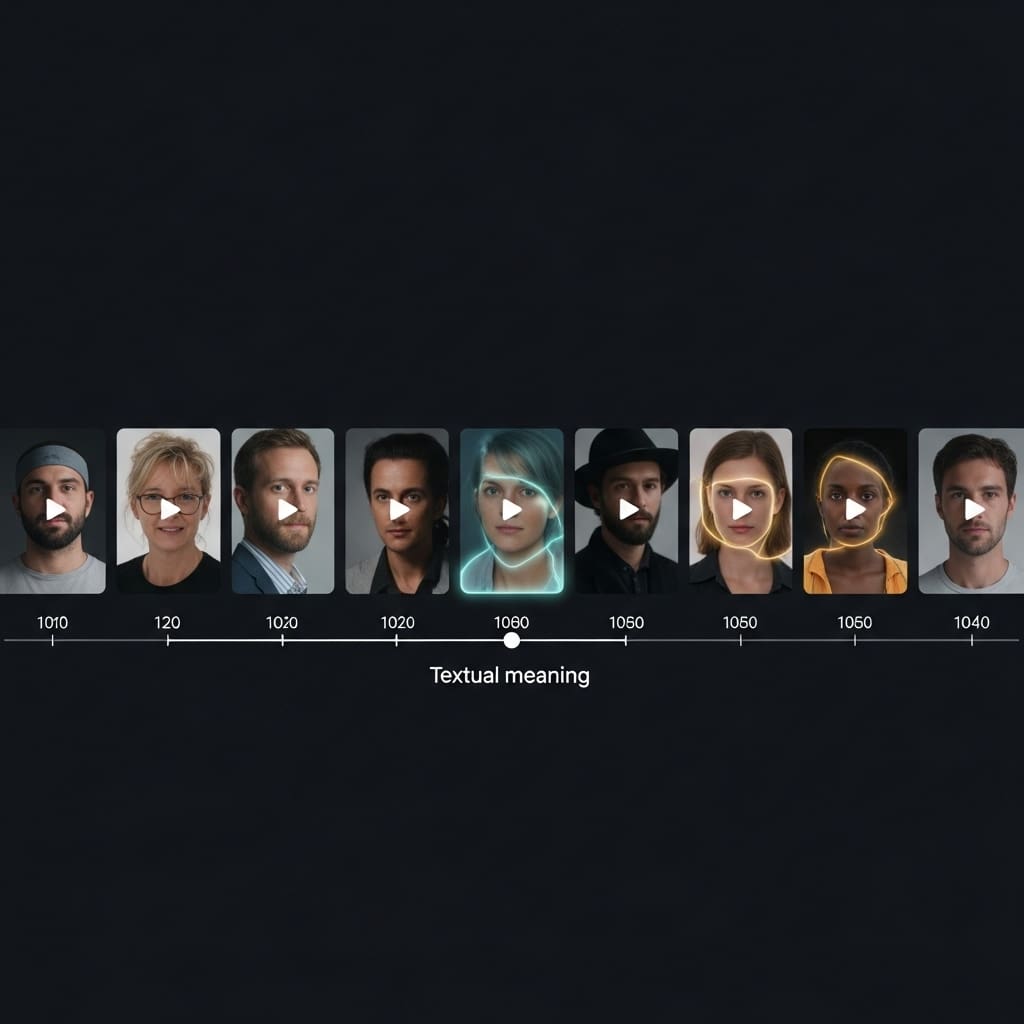

Initially, the FFSM mimics human macro-perception by selecting key frames to reduce temporal redundancy within video sequences. This process prioritises the frames most relevant to the textual query, eliminating irrelevant scenes and focusing computational resources on crucial visual data. Figure 1 illustrates how key visual content is discerned: relevant frames are highlighted with blue borders, while irrelevant frames receive yellow borders. Within the selected key frames, patch compression then enables precise, entity-level matching between textual keywords and visual components.

Researchers harnessed this technique to repeatedly compare keywords with visual entities, such as “man” or “baseball”, refining feature interactions and ensuring accurate cross-modal feature alignment. The team validated the approach against existing methods like UCoFiA and T-Mass, highlighting HVD’s superior ability to handle distracting visual cues and sparse textual information. This work addresses the robustness and precision limitations of current feature enhancement and interaction techniques, delivering a significant advancement in text-video retrieval technology.

Human Vision Model Boosts Video Retrieval Significantly

The research team tackled the issue of “blind” feature interaction, where models often fail to differentiate important visual elements from background noise due to sparse textual queries. The FFSM component simulates human macro-perception, selectively choosing key frames to reduce temporal redundancy within videos. With the hyper-parameters set to (ħ, λ) = (0.50, 0.50), HVD achieved peak retrieval performance, demonstrating that selecting an appropriate granularity of feature interaction is vital for optimal results. Visualizations of the training process, shown in Fig. 3, confirm that the non-red regions represent frames actively used for text interaction, highlighting the model’s focus.

The data show that the PFCM progressively reduces the number of features involved in interaction across three consecutive operations, refining the visual representation for improved accuracy. The work advances video representation learning by integrating frame selection and patch compression to enhance text-video retrieval. Measurements confirm that the proposed modules, FFSM and PFCM, jointly improve video representations at both frame and patch levels, addressing both coarse- and fine-grained alignment challenges. Ablation studies further validated the effectiveness and intuitiveness of these modules, alongside a detailed analysis of hyper-parameter settings. The researchers recorded significant improvements in retrieval accuracy, which could lead to more effective video search and analysis applications. The authors hope the work will inspire further innovation within the video retrieval community by offering a novel approach to cross-modal representation learning.

Human Vision Model Achieves State-of-the-Art Retrieval Performance

Researchers evaluated HVD on five benchmark datasets, including Charades, and achieved state-of-the-art retrieval performance. Ablation studies confirmed the effectiveness of both FFSM and PFCM, while visualizations demonstrated the model’s ability to select an appropriate granularity for feature interaction. The findings suggest that aligning video representations at both frame and patch levels, guided by human perceptual principles, significantly enhances text-video retrieval accuracy. The authors acknowledge that the performance gains depend on selecting appropriate hyper-parameters for the compression of patch features. Future work could explore adaptive methods for determining the optimal granularity of feature interaction, which may further refine the model’s performance. This research contributes to the video retrieval community a human-vision-inspired approach that improves the accuracy and intuitiveness of text-video search systems.

👉 More information

🗞 HVD: Human Vision-Driven Video Representation Learning for Text-Video Retrieval

🧠 ArXiv: https://arxiv.org/abs/2601.16155