Scientists are increasingly focused on building world models, systems capable of predicting how environments change in response to actions, allowing for proactive planning. Zhikang Chen and Tingting Zhu, alongside their colleagues, demonstrate that current models often prioritise visually realistic simulation over genuine understanding of physical laws and causal relationships. Their research highlights a critical flaw: impressive video generation does not necessarily equate to reliable prediction, which leads to failures when models face interventions or complex decision-making scenarios. This work is significant because it reframes world models not as ‘visual engines’ but as ‘actionable simulators’, demanding structured design, adherence to constraints, and rigorous closed-loop testing, a point powerfully illustrated through the high-stakes example of medical decision-making, where accurate foresight is paramount.

AI moves from images to simulation

Scientists make the case for a critical shift in artificial intelligence: moving beyond simply generating realistic images towards building actionable simulators that understand and predict environmental dynamics. The research addresses a fundamental flaw in current world models, visual conflation, in which high-fidelity video generation is mistakenly equated with genuine comprehension of physical and causal relationships. The team advocates developing these models as simulators rather than merely visual engines, with a focus on structured 4D interfaces, constraint-aware dynamics, and rigorous closed-loop evaluation. Experiments show that while modern models excel at pixel prediction, they frequently violate invariant constraints, fail when subjected to intervention, and ultimately break down in safety-critical decision-making scenarios.

Researchers propose that effective world models must maintain stability over extended periods, enabling robust long-horizon foresight and accurate prediction of future states based on actions taken. This work establishes a new conceptual framework, synthesizing recent advances to emphasize the transition from generating appearances to simulating dynamics, and organizing progress around four key challenges: developing structural interfaces, enabling self-evolution, anchoring models in physical laws, and achieving generalization through structured imagination. Furthermore, the research introduces a novel approach to self-evolution, where world models refine their internal dynamics using generated rollouts as feedback signals, moving beyond static prediction towards adaptive simulation. The scientists argue that adaptability alone is insufficient without grounding in physical reality, and demonstrate how physics-informed constraints are essential to prevent unbounded drift and ensure consistency with the real world.

Using medical decision-making as a stringent test case, where trial-and-error is impossible and errors are irreversible, the study argues that a world model’s true value lies not in the realism of its outputs but in its capacity to support counterfactual reasoning, intervention planning, and robust long-horizon foresight. This work opens new avenues for AI development, particularly in areas where safety and reliability are paramount, such as autonomous driving and healthcare. The team calls for a shift in evaluation paradigms, advocating closed-loop, decision-oriented assessment as the most principled way to distinguish a truly predictive world model from a sophisticated generative engine. Ultimately, the research asserts that the next generation of world models should be judged not by how they look but by how reliably they enable effective action in complex and uncertain environments.

Actionable Simulators Evaluated via Five Dimensions

Scientists are increasingly focused on evaluating world models not by visual fidelity but by their capacity to support robust decision-making and causal reasoning. The research reframes these models as actionable simulators rather than purely visual engines, emphasising systems capable of long-horizon foresight. To assess these capabilities rigorously, the study employed a multi-faceted evaluation framework spanning five complementary dimensions that go beyond simple perceptual metrics. First, perceptual generation quality was quantified with established metrics such as FID/FVD, LPIPS/SSIM, CLIPScore, and VBench, which capture visual realism and short-range temporal consistency.

Second, automated and constraint-based metrics were used to assess physical and commonsense consistency, directly measuring violations of known invariants within generated rollouts. Physics adherence scores, alongside automated plausibility checks that penalise impossible dynamics, quantified how well a model respects fundamental physical laws and everyday reasoning. Third, the team examined language and multimodal alignment, using instruction-following accuracy, question answering, and counterfactual reasoning benchmarks to determine whether a model correctly associates language with world states. Fourth, and crucially, the study elevated closed-loop decision-making to a primary evaluation paradigm, treating learned worlds as interactive surrogate environments.
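A constraint-based consistency metric of this kind can be sketched as a per-invariant violation rate over a generated rollout. This is an illustration only: the state format (a list of per-object masses per frame), the two invariants, and the names `Invariant`, `conserved_total_mass`, `non_negative_mass`, and `violation_rate` are hypothetical and are not taken from the paper or any benchmark.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Sequence

# Hypothetical state representation: per-object masses for one frame.
State = Sequence[float]


@dataclass
class Invariant:
    name: str
    holds: Callable[[State, State], bool]  # (initial_state, current_state) -> ok?


def conserved_total_mass(tol: float = 1e-3) -> Invariant:
    """Total mass must stay within a relative tolerance of the first frame."""
    return Invariant(
        "mass_conservation",
        lambda s0, s: abs(sum(s) - sum(s0)) <= tol * max(1.0, abs(sum(s0))),
    )


def non_negative_mass() -> Invariant:
    """No object may ever have negative mass."""
    return Invariant("non_negative", lambda s0, s: all(m >= 0.0 for m in s))


def violation_rate(rollout: Sequence[State],
                   invariants: Sequence[Invariant]) -> Dict[str, float]:
    """Fraction of frames after the first in which each invariant is violated."""
    s0, frames = rollout[0], rollout[1:]
    if not frames:
        return {inv.name: 0.0 for inv in invariants}
    return {
        inv.name: sum(not inv.holds(s0, s) for s in frames) / len(frames)
        for inv in invariants
    }


# A toy four-frame rollout: frame 3 creates mass, frame 4 has a negative mass.
rollout = [[1.0, 2.0], [1.0, 2.0], [1.5, 2.0], [-0.5, 3.5]]
rates = violation_rate(rollout, [conserved_total_mass(), non_negative_mass()])
```

Reporting a rate per invariant, rather than one aggregate score, makes it clear which physical rule a model breaks; note that the last frame conserves total mass yet is still physically impossible.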

Executing robotic policies within these simulated worlds allowed scientists to correlate policy performance rankings with real-world deployment outcomes, offering a scalable alternative to physical testing; WorldEval and WorldGym exemplify this approach, which prioritises actionable rollouts over purely realistic visuals. Fifth, in domain-specific contexts such as healthcare, evaluation incorporated clinically grounded criteria, including anatomical consistency, the accuracy of treatment-conditioned disease progression, and risk prediction error, to gauge a model’s utility in complex, high-stakes scenarios. Together, these dimensions mark a decisive shift from generative evaluation towards assessing world models as predictive, causal, and decision-support systems.
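The ranking-correlation idea behind this style of closed-loop evaluation can be illustrated with Spearman's rank correlation between policy success rates measured inside a world model and in real deployment. This is a minimal sketch under stated assumptions: the success rates are invented for illustration, and the helpers `average_ranks` and `spearman` are hypothetical; they are not the WorldEval or WorldGym code.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho, computed as the Pearson correlation of the ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two items")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    if vx == 0 or vy == 0:
        raise ValueError("rho is undefined when one sequence is constant")
    return cov / (vx * vy) ** 0.5


# Illustrative (invented) success rates for four hypothetical policies.
sim_success = [0.82, 0.40, 0.65, 0.15]   # measured inside the world model
real_success = [0.70, 0.35, 0.60, 0.20]  # measured on the physical robot
rho = spearman(sim_success, real_success)
```

A rank correlation is used rather than absolute error because the practical question is whether the world model orders candidate policies the way reality does, even if its absolute success rates are biased.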

Invariant failures reveal flawed world model understanding

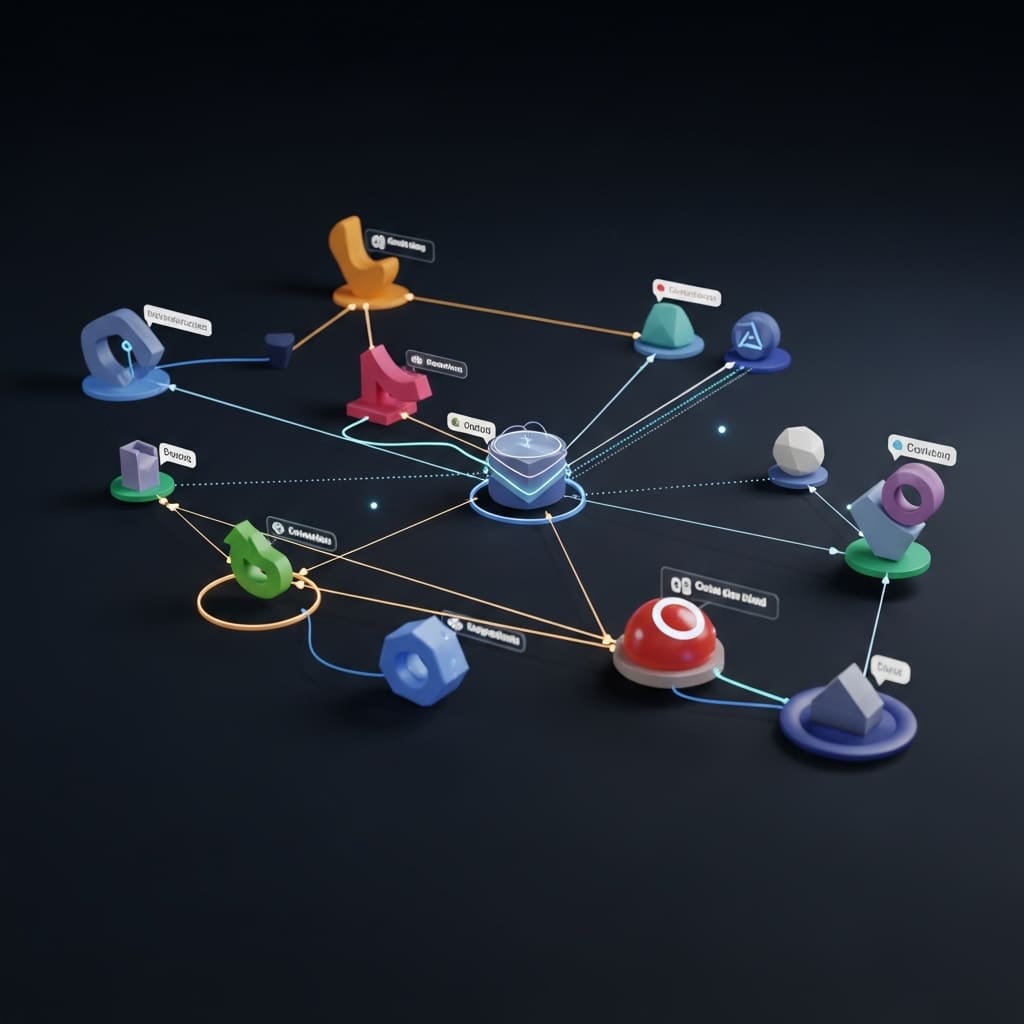

Scientists have demonstrated that current world models, despite excelling at pixel prediction, frequently violate invariant constraints and fail under intervention. The research reveals a critical flaw: visual realism is an unreliable indicator of genuine world understanding, highlighting the need for models that encode causal structure and respect domain-specific constraints. Using medical decision-making as a stress test, the authors show that a world model’s true value lies not in realistic rollouts but in its capacity for counterfactual reasoning, intervention planning, and robust long-horizon foresight. The team traced the evolution from 2D pixel-level extrapolation towards structured 4D dynamic meshes, persistent memories, and causal interaction graphs, observing a clear shift in focus from visual engines to actionable simulators.
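Why a model can fit observations well yet fail under intervention can be seen in a toy structural causal model in which a hidden variable Z drives both X and Y while X has no effect on Y. The variables, coefficients, and helper names (`simulate`, `fit_slope`, `mse`) are hypothetical; the sketch only shows that a correlational predictor which is accurate on observed data can be badly wrong once X is set by an action.

```python
import random


def simulate(n, rng, do_x=None):
    """Toy SCM (hypothetical): hidden Z drives both X and Y; X has no causal
    effect on Y. If do_x is given, X is set by intervention instead of by Z."""
    rows = []
    for _ in range(n):
        z = rng.gauss(0.0, 1.0)
        x = do_x if do_x is not None else z + rng.gauss(0.0, 0.05)
        y = 2.0 * z + rng.gauss(0.0, 0.05)  # Y depends only on Z
        rows.append((x, y))
    return rows


def fit_slope(rows):
    """Least-squares slope through the origin: y is approximated by w * x."""
    return sum(x * y for x, y in rows) / sum(x * x for x, _ in rows)


def mse(w, rows):
    """Mean squared error of the predictor y_hat = w * x."""
    return sum((y - w * x) ** 2 for x, y in rows) / len(rows)


rng = random.Random(0)
w = fit_slope(simulate(1000, rng))                 # learned from observational data
obs_error = mse(w, simulate(1000, rng))            # small: the correlation holds
int_error = mse(w, simulate(1000, rng, do_x=3.0))  # large: do(X=3) does not move Y
```

The learned slope is close to 2, so the model looks excellent on held-out observational data, but it predicts Y of about 6 under do(X=3) while the true Y stays centred on 0.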

The authors argue that these structured 4D interfaces explicitly expose the world state for long-horizon reasoning, going beyond mere rendering to support deeper understanding. The study also analysed how world models maintain long-term consistency through closed-loop self-evolution, refining their internal dynamics using generated rollouts as feedback signals. The analysis indicates that adaptability without grounding in physical laws leads to unbounded drift, so physics-informed constraints, ranging from explicit differentiable dynamics to implicit intuitive physics, are needed to anchor self-evolution to reality. The researchers also describe how world models can act as data engines in interaction-starved regimes, acquiring transferable skills through uncertainty-aware imagination without the risks of real-world trial-and-error.
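The drift argument can be illustrated with a toy: a learned model of a frictionless harmonic oscillator that carries a small systematic error and is rolled out on its own predictions. The functions `learned_step`, `energy`, `project_to_energy`, and `rollout`, and the 1% error, are hypothetical. Rescaling the state back onto its initial energy after each step is a simple projection-style stand-in for physics-informed constraints, not the paper's method.

```python
import math


def learned_step(q, p, dt=0.1, eps=0.01):
    """Hypothetical imperfect learned dynamics for a unit harmonic oscillator:
    the exact phase-space rotation, inflated by a small systematic error eps."""
    c, s = math.cos(dt), math.sin(dt)
    return (1 + eps) * (c * q + s * p), (1 + eps) * (-s * q + c * p)


def energy(q, p):
    """Total energy of the unit oscillator (conserved by the true dynamics)."""
    return 0.5 * (q * q + p * p)


def project_to_energy(q, p, e_target):
    """Physics-informed anchor: rescale the state onto the E = e_target shell."""
    scale = math.sqrt(e_target / energy(q, p))
    return q * scale, p * scale


def rollout(steps, anchored):
    """Autoregressive rollout; returns the final relative energy drift."""
    q, p = 1.0, 0.0
    e0 = energy(q, p)
    for _ in range(steps):
        q, p = learned_step(q, p)
        if anchored:
            q, p = project_to_energy(q, p, e0)
    return abs(energy(q, p) - e0) / e0
```

Without the anchor, energy grows by a factor of 1.01 squared per step and the relative drift exceeds four orders of magnitude after 500 steps; with it, the drift stays at floating-point round-off because each step is pulled back onto the conserved quantity.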

In the medical domain, where errors are irreversible, the work argues that world modeling is not simply an enhancement but a prerequisite for autonomous decision-making. The work also proposes a new framework for evaluating world models, asserting that closed-loop, decision-oriented assessment is the most principled criterion for distinguishing predictive models from sophisticated generative engines. Specifically, the study highlights the progression from 2D image generation to structured 3D and 4D representations, such as dynamic meshes, which expose spatial and geometric regularities for coherent multi-step prediction. SPARTAN, a sparse transformer world model, was shown to learn time-dependent local causal graphs, enabling stable long video rollouts by attending only to causally relevant interactions, which mitigates compounding errors and improves performance.
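Attending only to causally relevant interactions amounts to masking attention with a graph. The sketch below is a single-head attention step over entities, with a fixed boolean mask standing in for one time step's local causal graph. It is an illustration under assumptions, not SPARTAN's architecture: SPARTAN learns its time-dependent graphs, whereas here the mask, the scene, and the names `masked_attention` and `dot` are hypothetical and hand-specified.

```python
import math
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def masked_attention(queries, keys, values, mask) -> List[List[float]]:
    """Scaled dot-product attention where entity i may read from entity j
    only if mask[i][j] is True (a hypothetical local causal graph)."""
    d = len(queries[0])
    out = []
    for i, q in enumerate(queries):
        allowed = [j for j in range(len(keys)) if mask[i][j]]
        if not allowed:
            out.append([0.0] * len(values[0]))  # no causal parents: no update
            continue
        scores = [dot(q, keys[j]) / math.sqrt(d) for j in allowed]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([
            sum(w * values[j][k] for w, j in zip(weights, allowed)) / total
            for k in range(len(values[0]))
        ])
    return out


# Hypothetical scene: a ball touching a paddle, and a distant wall.
qk = [[1.0, 0.0]] * 3                # identical queries/keys give equal weights
values = [[1.0], [3.0], [100.0]]
mask = [[True, True, False],         # ball reads from itself and the paddle
        [False, True, False],        # paddle reads only from itself
        [False, False, True]]        # wall reads only from itself
out = masked_attention(qk, qk, values, mask)
```

Because the wall is outside the ball's causal neighbourhood, its value never enters the ball's update; errors in modelling the wall therefore cannot leak into the ball's trajectory, which is the intuition behind sparse attention limiting compounding error.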

Beyond Realistic Rendering, Towards Physical Correctness

Scientists argue that current world models, despite excelling at generating realistic visuals, often fail to grasp fundamental physical and causal dynamics. These models frequently violate established constraints, struggle when subjected to interventions, and falter in critical decision-making scenarios, indicating that visual realism is an unreliable indicator of genuine world understanding. Researchers propose a shift in focus from creating visually appealing simulations to developing “actionable simulators” that prioritise encoding causal structures, respecting domain-specific rules, and maintaining stability over extended periods. This work highlights a crucial distinction between world models that merely look realistic and those that are physically correct, particularly within high-stakes domains like medicine.

The authors demonstrate, using medical decision-making as a test case, that a world model’s true value lies in its capacity to facilitate counterfactual reasoning, intervention planning, and accurate long-term forecasting, not simply in generating convincing imagery. While acknowledging the rapid advancements in generative AI, the study points out the necessity of grounding these models in structural and physical realities, potentially through explicit geometry, causal graphs, or programmatic rules. The authors note a limitation in relying solely on open-loop metrics for evaluation, advocating for task-centric assessments like WorldEval to better differentiate between models that appear functional and those that are genuinely reliable. Future research should concentrate on closed-loop utility and refining evaluation paradigms to assess a model’s ability to perform effectively in real-world applications.

👉 More information

🗞 From Generative Engines to Actionable Simulators: The Imperative of Physical Grounding in World Models

🧠 ArXiv: https://arxiv.org/abs/2601.15533