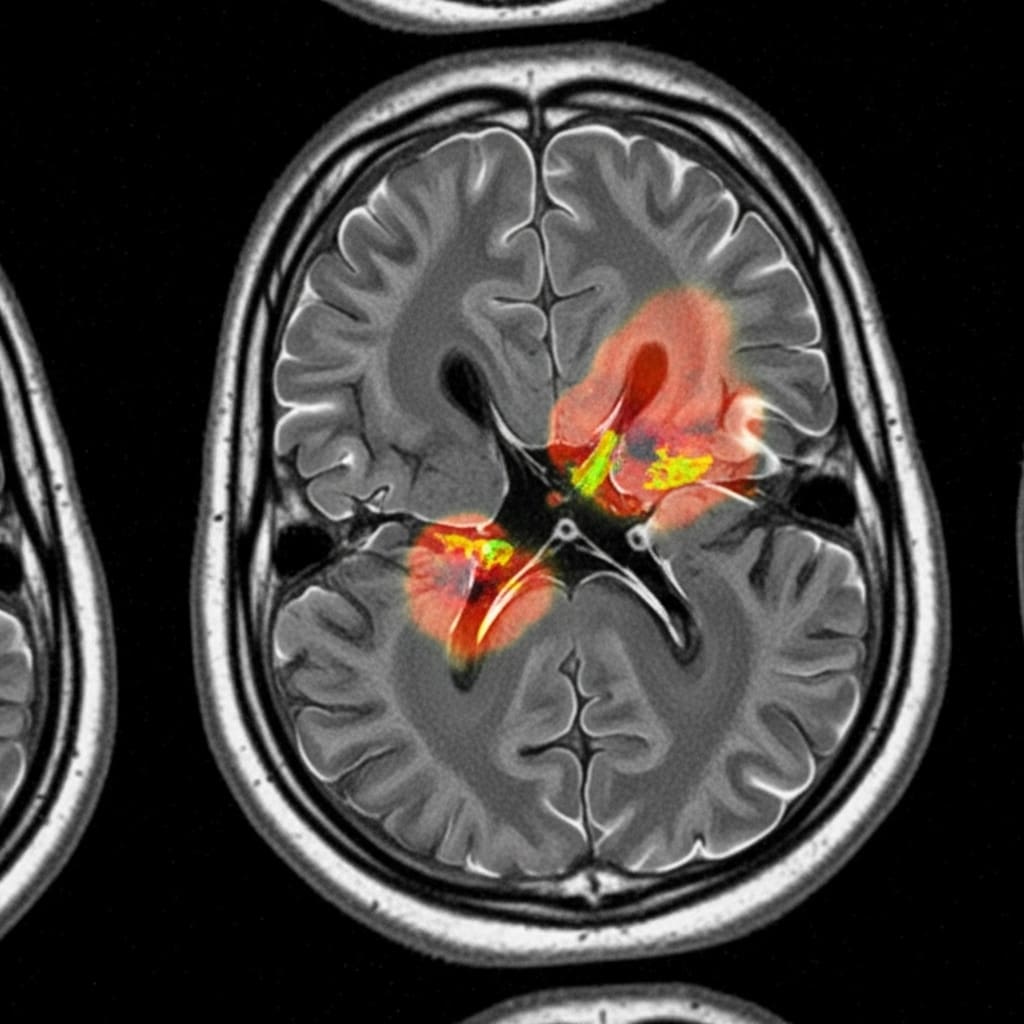

Scientists are tackling the problem of using foundation models effectively for multi-modal medical imaging, a field currently hampered by difficulties in fusing information from diverse sources and adapting to complex tissue characteristics. Shadi Alijani, Fereshteh Aghaee Meibodi, and Homayoun Najjaran, all from the University of Victoria, present a novel framework that addresses these challenges with sub-region-aware modality attention and adaptive prompting. The research is significant because it lets the model combine imaging modalities differently for each area of a tumour, refining segmentation accuracy and outperforming baseline methods on the challenging BraTS 2020 dataset, particularly within the necrotic core. Their principled approach promises more accurate and robust foundation model solutions for medical image analysis, ultimately improving diagnostic capabilities.

This research introduces two key innovations, sub-region-aware modality attention and adaptive prompt engineering, to overcome these limitations and enhance segmentation accuracy. The attention mechanism allows the model to dynamically learn the optimal combination of imaging modalities for specific sub-regions within a tumor, while the adaptive prompting strategy leverages the inherent capabilities of foundation models like MedSAM to refine segmentation results.

Experiments conducted using the BraTS 2020 brain tumor segmentation dataset reveal that this approach significantly outperforms baseline methods, particularly in the notoriously difficult task of delineating the necrotic core sub-region. The team achieved this by focusing on a principled method for multi-modal fusion and prompting, paving the way for more accurate and robust foundation model-based solutions in medical imaging. The authors report state-of-the-art performance in MedSAM-based brain tumor segmentation. The sub-region-aware modality attention mechanism enables the model to focus on the most informative data for each tumor component (necrotic core, edema, and enhancing tumor), improving the precision of segmentation.

Furthermore, the adaptive prompt engineering utilizes sub-region-specific prompts to guide the model’s attention, resulting in enhanced delineation accuracy and a more refined understanding of tumor boundaries. Through extensive experimentation, researchers provide a comprehensive analysis of the impact of these components, offering valuable insights into the effective design of multi-modal fusion and prompting strategies for foundation models in medical imaging. This research makes several key contributions to the field, introducing a novel framework and demonstrating its effectiveness on the challenging BraTS 2020 dataset. The study unveils a new approach to adapting foundation models, moving beyond traditional supervised learning methods that require extensive annotated data and struggle with generalization. By leveraging the inherent promptability of MedSAM and incorporating sub-region-specific information, the team has created a system capable of achieving superior segmentation performance and providing a more detailed analysis of brain tumor characteristics, ultimately advancing the potential for improved clinical diagnosis and treatment planning.
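The article does not say what form the sub-region-specific prompts take. MedSAM is usually prompted with bounding boxes, so one plausible reading is a separate box per sub-region derived from a coarse label map. The sketch below illustrates that idea only: the function `box_prompt`, the `pad_frac` parameter and the toy label map are hypothetical, and the label values (1, 2, 4) follow the BraTS annotation convention rather than anything stated in the article.

```python
import numpy as np

# BraTS label convention (assumption, not taken from the article).
LABELS = {"necrotic_core": 1, "edema": 2, "enhancing_tumor": 4}

def box_prompt(label_map, label, pad_frac=0.1):
    """Return a padded (x_min, y_min, x_max, y_max) box around `label`.

    Padding scales with the size of the sub-region, so small regions get
    tight boxes and large ones get more slack. Returns None if the label
    is absent from the slice.
    """
    ys, xs = np.nonzero(label_map == label)
    if ys.size == 0:
        return None
    height, width = label_map.shape
    pad_y = int(round(pad_frac * (ys.max() - ys.min() + 1)))
    pad_x = int(round(pad_frac * (xs.max() - xs.min() + 1)))
    return (
        max(int(xs.min()) - pad_x, 0),
        max(int(ys.min()) - pad_y, 0),
        min(int(xs.max()) + pad_x, width - 1),
        min(int(ys.max()) + pad_y, height - 1),
    )

# Toy 2D slice with nested sub-regions (hypothetical data).
coarse = np.zeros((32, 32), dtype=np.uint8)
coarse[8:24, 6:26] = LABELS["edema"]
coarse[12:20, 10:20] = LABELS["enhancing_tumor"]
coarse[14:18, 12:16] = LABELS["necrotic_core"]

prompts = {name: box_prompt(coarse, value) for name, value in LABELS.items()}
for name, box in prompts.items():
    print(name, box)
```

Each box would then be passed to the promptable model as the prompt for its sub-region; how the authors actually adapt prompts may differ.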

Sub-region attention improves multi-modal brain tumour imaging

Scientists developed a novel framework to adapt foundation models for multi-modal medical imaging, addressing the challenge of effectively fusing information from diverse sources and accommodating heterogeneous pathological tissues. The research team engineered sub-region-aware modality attention and adaptive prompt engineering as key technical innovations within this framework, enabling dynamic focus on the most informative data for specific tumor regions. This approach allows the model to learn the optimal combination of modalities, such as the T1, contrast-enhanced T1, T2 and FLAIR MRI sequences provided in BraTS, for each sub-region of the tumor, including the necrotic core, edema, and enhancing tumor, significantly improving delineation accuracy. Experiments employed the multi-modal BraTS 2020 brain tumor segmentation dataset to validate the framework’s performance.

The team implemented the sub-region-aware modality attention mechanism by dividing each tumor into distinct sub-regions and training the model to assign varying weights to each modality based on its relevance to that specific region. Adaptive prompt engineering was achieved by leveraging the promptability of the MedSAM foundation model, crafting sub-region-specific prompts to guide the model’s attention and refine segmentation boundaries. This technique harnesses the inherent capabilities of MedSAM, tailoring its performance to the nuances of each tumor sub-region. The study pioneered a method for dynamically adjusting the model’s focus, allowing it to prioritise information from different modalities depending on the characteristics of each tumor sub-region.
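The idea of assigning a different modality weighting to each sub-region can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function `fuse_by_sub_region`, the tensor shapes, the modality names and the example logits are all hypothetical, and in a real model the logits would be learned parameters rather than hand-set values.

```python
import numpy as np

MODALITIES = ["T1", "T1ce", "T2", "FLAIR"]  # BraTS MRI sequences (assumption)
SUB_REGIONS = ["necrotic_core", "edema", "enhancing_tumor"]

def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def fuse_by_sub_region(features, logits):
    """Fuse per-modality features with one weight vector per sub-region.

    features: (M, C, H, W) feature maps, one per modality.
    logits:   (R, M) scores, one row per sub-region.
    Returns the fused maps (R, C, H, W) and the weights (R, M).
    """
    weights = softmax(logits, axis=1)  # each row sums to 1
    fused = np.einsum("rm,mchw->rchw", weights, features)
    return fused, weights

rng = np.random.default_rng(0)
features = rng.normal(size=(len(MODALITIES), 8, 16, 16))

# Hand-set logits purely for illustration: the first sub-region is made
# to favour the second modality; the other rows stay uniform.
logits = np.zeros((len(SUB_REGIONS), len(MODALITIES)))
logits[0, 1] = 2.0

fused, weights = fuse_by_sub_region(features, logits)
for region, row in zip(SUB_REGIONS, weights):
    print(region, dict(zip(MODALITIES, row.round(3))))
```

With uniform logits a row reduces to a plain average over modalities, which makes the learned deviation from that average easy to inspect per sub-region.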

Researchers meticulously evaluated the performance of their framework against baseline methods, focusing on the particularly challenging necrotic core sub-region where accurate segmentation is crucial for treatment planning. Quantitative analysis revealed significant performance gains, demonstrating that the proposed approach outperforms existing methods in delineating the necrotic core and improving overall segmentation accuracy. This work delivers a principled and effective approach to multi-modal fusion and prompting, achieving state-of-the-art performance in MedSAM-based brain tumor segmentation. Through extensive experimentation, the team provided a comprehensive analysis of the impact of each component, offering valuable insights into designing effective multi-modal fusion and prompting strategies for foundation models in medical imaging, ultimately paving the way for more accurate and robust solutions.

BraTS necrotic core segmentation performance gains are significant

Scientists have developed a novel framework for adapting foundation models to multi-modal medical imaging, addressing the critical challenge of effectively fusing information from diverse sources and accommodating the variability of pathological tissues. Experiments utilising the BraTS 2020 brain tumor segmentation dataset demonstrate significant performance gains, particularly in segmenting the challenging necrotic core sub-region. The team measured Dice scores and Intersection over Union (IoU) to quantify segmentation accuracy, employing a 5-fold cross-validation strategy to ensure robust evaluation and reporting the mean and standard deviation across folds. Statistical significance was assessed using a two-sided Wilcoxon signed-rank test. Initial experiments revealed that FLAIR achieved the highest single-modality Dice score of 0.8547 ±0.091, consistent with its clinical utility in highlighting pathological tissue.
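For readers unfamiliar with the two metrics, both compare a predicted binary mask with a reference mask. The following sketch uses the standard definitions; the function names, the `eps` smoothing term and the toy masks are illustrative choices, not details from the paper.

```python
import numpy as np

def dice_score(pred, target, eps=1e-8):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    pred, target = pred.astype(bool), target.astype(bool)
    intersection = np.logical_and(pred, target).sum()
    return (2.0 * intersection + eps) / (pred.sum() + target.sum() + eps)

def iou_score(pred, target, eps=1e-8):
    """IoU = |A ∩ B| / |A ∪ B| for two binary masks."""
    pred, target = pred.astype(bool), target.astype(bool)
    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    return (intersection + eps) / (union + eps)

# Toy masks: two 8-pixel bands that overlap on one 4-pixel row.
pred = np.zeros((4, 4), dtype=np.uint8)
target = np.zeros((4, 4), dtype=np.uint8)
pred[0:2, :] = 1
target[1:3, :] = 1

print(f"Dice = {dice_score(pred, target):.3f}")
print(f"IoU  = {iou_score(pred, target):.3f}")
```

Per-fold scores can then be summarised with `np.mean` and `np.std`, and paired per-case scores from two methods can be compared with `scipy.stats.wilcoxon(a, b, alternative="two-sided")`.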

However, a naive multi-modal approach, using simple channel concatenation, underperformed with a Dice score of only 0.8009 ±0.076, underscoring the difficulties of multi-modal fusion without adaptation. Crucially, a fine-tuned multi-modal model achieved a Dice score of 0.8778 ±0.063, a substantial improvement over both the naive approach and the best single modality, demonstrating the importance of fine-tuning for cross-modal learning. These results pave the way for the team’s advanced, attention-based framework. An ablation study confirmed the effectiveness of the sub-region-aware modality attention and adaptive prompt engineering.

The developed method attained a whole tumor Dice score of 0.900, competitive with the state-of-the-art nnU-Net (0.890). The method also demonstrated superior performance on enhancing tumor segmentation, achieving a Dice score of 0.900 compared to 0.820 for nnU-Net, a 9.8% relative improvement. The full framework, combining attention and prompting, achieved a Dice score of 0.9012 ±0.051, a significant improvement over the baseline fine-tuned multi-modal model. Detailed sub-region performance analysis revealed critical disparities, motivating the proposed framework. The enhancing tumor was segmented with high accuracy across modalities, while the necrotic core presented a significant challenge, with single modalities achieving Dice scores as low as 0.61. Multi-modal fusion provided the largest improvement for the necrotic core, increasing the Dice score to 0.64 ±0.14, but performance remained substantially lower than other sub-regions, highlighting the need for specialized approaches.
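The 9.8% figure for the enhancing tumor is a relative gain, not an absolute difference in Dice. A quick check using only the scores reported in the article:

$$
\frac{0.900 - 0.820}{0.820} = \frac{0.080}{0.820} \approx 0.098 = 9.8\%
$$

The absolute gap is 0.080 Dice points. By the same arithmetic, the full framework's 0.9012 against the fine-tuned multi-modal baseline's 0.8778 is an absolute gain of 0.0234, or roughly 2.7% in relative terms.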

👉 More information

🗞 Sub-Region-Aware Modality Fusion and Adaptive Prompting for Multi-Modal Brain Tumor Segmentation

🧠 ArXiv: https://arxiv.org/abs/2601.15734