Medical image synthesis offers a promising solution to the challenges posed by limited contrast medium availability in radiological imaging, with the potential to improve tumor detection and diagnosis. Researchers Yifan Chen, Fei Yin, and Hao Chen from the University of Cambridge, alongside Jia Wu from MD Anderson Cancer Center and Chao Li from the University of Cambridge and University of Dundee, have addressed a critical gap in this field by introducing PMPBench, a novel, fully paired, pan-cancer medical imaging dataset spanning eleven human organs.

This benchmark overcomes the limitations of existing resources, which are often brain-focused, incomplete, or privately held, by providing complete dynamic contrast-enhanced (DCE) MRI sequences and paired non-contrast/contrast-enhanced CT acquisitions. PMPBench enables rigorous evaluation of image translation techniques and is expected to accelerate the development of safe and effective contrast synthesis methods, with significant implications for multi-organ oncology imaging workflows.

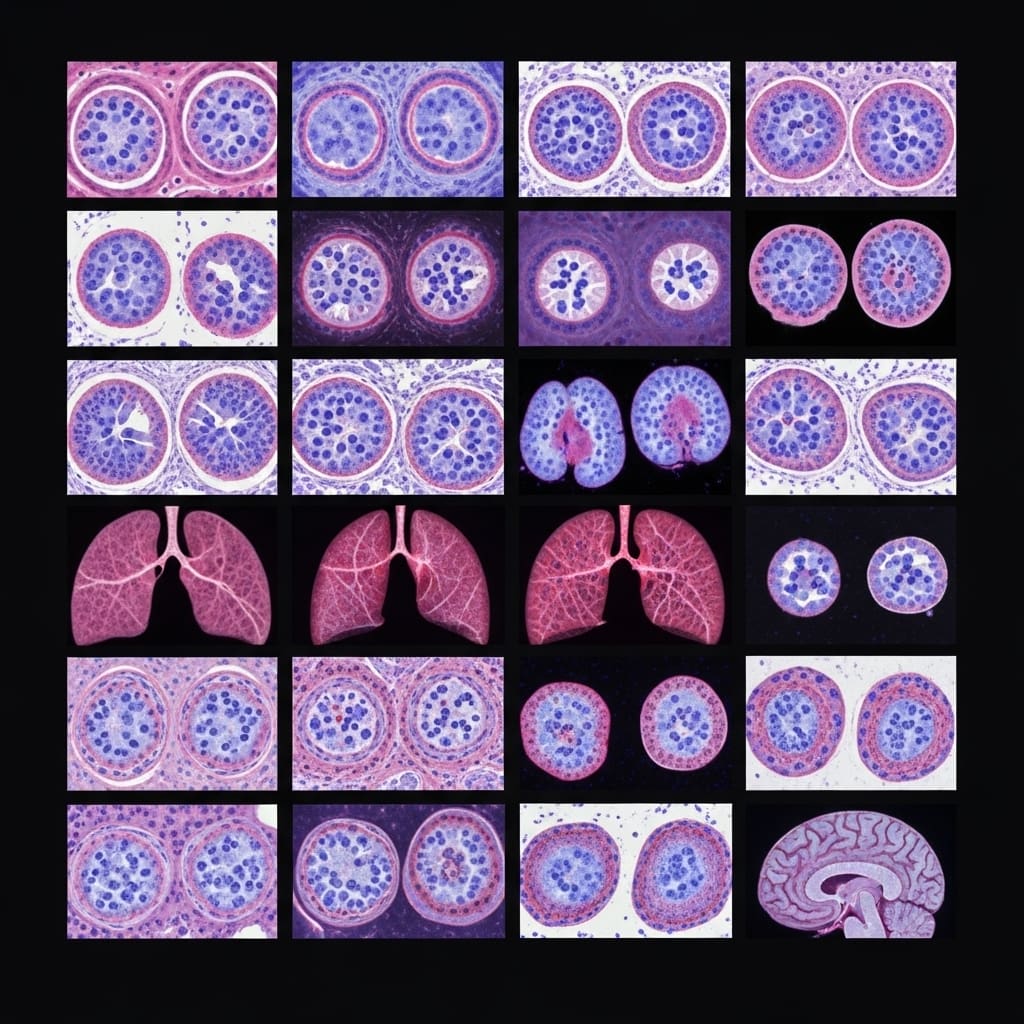

Figure 1 illustrates a representative organ system with paired contrast and non-contrast scans, demonstrating the organ-wise composition and modality balance of the dataset. The data curation process involves template-based transformation and spatial registration, ensuring anatomical consistency across modalities. In particular, this work contributes a cleaning and pairing standard for urinary system data, providing high-quality, clinically relevant benchmarks for future research in multi-organ image synthesis and AI-driven contrast enhancement.

AI Synthesis of Contrast-Enhanced MR Images

Contrast medium plays a pivotal role in radiological imaging, as it enhances lesion conspicuity and improves detection for the diagnosis of tumor-related diseases. However, depending on the patient’s health condition or available medical resources, the use of contrast medium is not always feasible. Recent research has explored AI-based image translation to synthesize contrast-enhanced images directly from non-contrast scans, aiming to reduce side effects and streamline clinical workflows. Progress in this direction has been constrained by data limitations: (1) existing public datasets focus almost exclusively on brain-related paired MR modalities; (2) other collections include partially paired data but suffer from missing modalities or timestamps and imperfect spatial alignment; (3) explicit labeling of CT vs. CTC or of DCE phases is often absent; and (4) substantial resources remain private.

To address these limitations, we establish a comprehensive benchmark. We report results from representative baselines of contemporary image-to-image translation, and our code and dataset are publicly available at https://github.com/YifanChen02/PMPBench.

Accurate diagnosis often depends on how clearly subtle tissue differences can be visualized. Contrast medium amplifies these differences, facilitating more reliable detection. Given one or more input modalities $X = \{x_1, x_2, \dots, x_n\}$ and one or more target modalities $Y = \{y_1, y_2, \dots, y_m\}$, a generative model $f$ produces synthesized images $\hat{Y}$ that approximate the ground-truth targets:

$$\hat{Y} = f(X)$$

In this study, we consider widely used imaging modalities: Computed Tomography (CT), contrast-enhanced CT (CTC), and multiple Magnetic Resonance Imaging (MRI) sequences, including Dynamic Contrast-Enhanced MRI (DCE).

To comprehensively evaluate generative models, we design benchmark tasks reflecting increasing levels of difficulty and common clinical scenarios involving missing modalities. Three representative settings are considered:

- 1-to-1 Translation: Single input modality to a single target (e.g., CT → CTC or DCE1 → DCE2). This setting tests a model’s ability to capture modality-specific features and preserve anatomical correspondence.

- N-to-1 Translation: Multiple input modalities to a single target (e.g., DCE1, DCE3 → DCE2). This evaluates how well models integrate complementary anatomical information while maintaining structural fidelity and modality consistency. In DCE imaging, this setting also probes the ability to reconstruct an intermediate phase from neighboring phases, capturing temporal dynamics of contrast uptake.

- 1-to-N Translation: A single input modality to multiple targets simultaneously (e.g., DCE1 → DCE2, DCE3). This assesses whether models can jointly capture inter-modal dependencies and generate anatomically consistent outputs across domains, which is clinically important for contrast enhancement.
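The three settings differ only in how many modalities sit on each side of the mapping from inputs to targets. The following is a minimal sketch of that idea, not the PMPBench code: `TranslationTask`, `make_pair`, the task names, and the use of nested lists as stand-in volumes are all hypothetical, and the modality keys simply reuse the labels from the examples above.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Stand-in type (assumption): a real pipeline would use image arrays.
Volume = List[List[float]]


@dataclass(frozen=True)
class TranslationTask:
    """Which modalities a model receives (inputs) and must synthesize (targets)."""
    name: str
    inputs: Tuple[str, ...]
    targets: Tuple[str, ...]

    @property
    def setting(self) -> str:
        """Classify the task by the number of input and target modalities."""
        n_in, n_out = len(self.inputs), len(self.targets)
        if n_in == 1 and n_out == 1:
            return "1-to-1"
        if n_in > 1 and n_out == 1:
            return "N-to-1"
        if n_in == 1 and n_out > 1:
            return "1-to-N"
        return "N-to-N"  # not one of the three benchmark settings


# The examples named in the text, written as task specifications.
TASKS = [
    TranslationTask("ct_to_ctc", ("CT",), ("CTC",)),
    TranslationTask("dce1_to_dce2", ("DCE1",), ("DCE2",)),
    TranslationTask("dce1_dce3_to_dce2", ("DCE1", "DCE3"), ("DCE2",)),
    TranslationTask("dce1_to_dce2_dce3", ("DCE1",), ("DCE2", "DCE3")),
]


def make_pair(case: Dict[str, Volume], task: TranslationTask):
    """Split one paired case into model inputs X and ground-truth targets Y."""
    missing = [m for m in task.inputs + task.targets if m not in case]
    if missing:
        raise KeyError(f"case lacks modalities: {missing}")
    x = [case[m] for m in task.inputs]
    y = [case[m] for m in task.targets]
    return x, y
```

Keeping the task as data rather than code means the same loader can serve all three settings, and a case with a missing modality fails loudly instead of silently producing a partial pair.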

Targeted organs and imaging modalities: Based on three criteria, namely (i) availability of paired contrast-enhanced (CE) and non-contrast-enhanced (NCE) scans, (ii) prevalence in oncology imaging studies, and (iii) clinical importance of contrast enhancement for lesion delineation, we selected 11 organs spanning both CT (e.g., adrenal gland, liver, lung) and MRI (e.g., breast).

Data selection and preprocessing: All scans were sourced from publicly available repositories, such as The Cancer Imaging Archive (TCIA) and related datasets. Collections with paired CE/NCE scans or reliable metadata indicating contrast phase were identified, and raw images were retrieved. Spatial alignment was performed using ITK and the Elastix back-end, employing mutual-information metrics and a multi-resolution pyramid schedule, with detailed parameters provided in the supplementary material. Organ-level bounding box cropping and resampling to isotropic spacing (1×1×1 mm) were applied. For CT scans, Hounsfield Unit (HU) windowing w
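To illustrate why a mutual-information metric suits alignment across contrast and non-contrast scans, here is a small histogram-based estimate in plain Python. It is only a sketch of the metric itself: it does not call ITK or Elastix, and the function name `mutual_information`, the flattened-list image representation, and the default of 8 bins are assumptions made for this example rather than the authors' settings.

```python
import math
from collections import Counter
from typing import List, Sequence


def mutual_information(fixed: Sequence[float], moving: Sequence[float],
                       bins: int = 8) -> float:
    """Histogram estimate of mutual information (in nats) between two
    equally sized, flattened images."""
    if len(fixed) != len(moving) or len(fixed) == 0:
        raise ValueError("images must be non-empty and the same size")

    def quantize(values: Sequence[float]) -> List[int]:
        # Map intensities to integer bin indices in [0, bins - 1].
        lo, hi = min(values), max(values)
        width = (hi - lo) / bins or 1.0  # constant image: avoid zero width
        return [min(int((v - lo) / width), bins - 1) for v in values]

    a, b = quantize(fixed), quantize(moving)
    n = len(a)
    joint = Counter(zip(a, b))      # joint histogram counts
    pa, pb = Counter(a), Counter(b)  # marginal histogram counts

    mi = 0.0
    for (i, j), count in joint.items():
        p_ij = count / n
        # p_ij / (p_i * p_j), with the marginals written as counts / n
        mi += p_ij * math.log(p_ij * n * n / (pa[i] * pb[j]))
    return mi
```

The score depends on how predictable one image's intensities are from the other's, not on the intensities matching. An intensity-inverted copy of an image therefore scores as high as an identical copy, which is the property that makes this family of metrics useful when the same anatomy appears with different brightness in CE and NCE scans. A registration optimizer would search for the spatial transform that maximizes such a score.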

Benchmark Results

Using this resource, the researchers established three benchmark tasks and reported initial results from representative baselines of contemporary image-to-image translation methods, demonstrating the potential of the dataset to advance the field. The team also developed FlowMI, a flow-matching model. PMPBench addresses critical limitations in existing datasets, which often focus solely on brain imaging, lack complete modality pairings, or suffer from inconsistencies in data acquisition and labelling. While acknowledging biases inherent in public data sources and the variability of clinical imaging, the authors posit that PMPBench provides a solid foundation for developing robust multimodal translation methods and improving clinically reliable decision support systems. They suggest that future work could expand the dataset with additional modalities and larger patient cohorts, and explore more advanced synthesis techniques to further enhance generalisation and applicability.

👉 More information

🗞 PMPBench: A Paired Multi-Modal Pan-Cancer Benchmark for Medical Image Synthesis

🧠 ArXiv: https://arxiv.org/abs/2601.15884