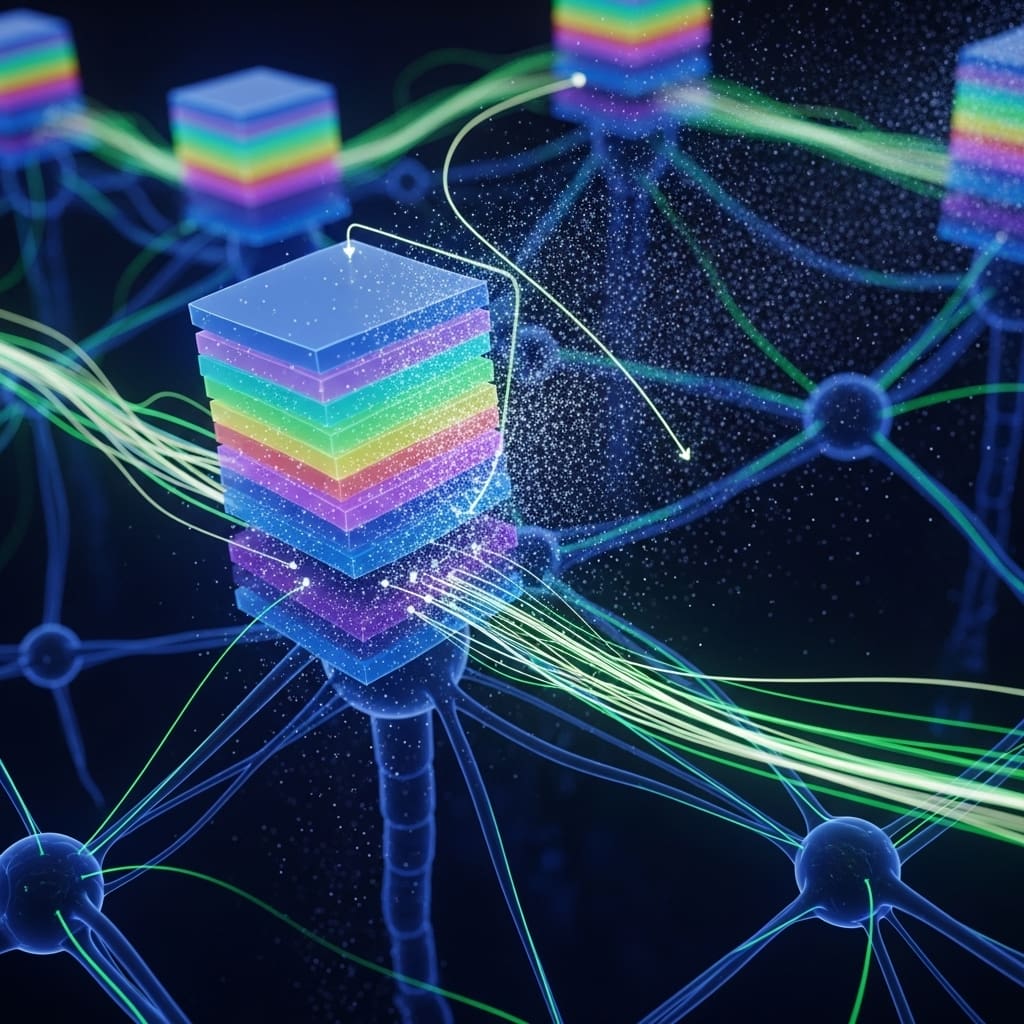

Deploying speech recognition on resource-constrained devices is difficult because the available compute fluctuates, so models must adapt at run time. Abdul Hannan from the University of Trento and Fondazione Bruno Kessler, together with Daniele Falavigna, Shah Nawaz, and colleagues, present a framework called Distillation-based Layer Dropping (DLD) for building dynamic speech networks that maintain high accuracy under limited resources. Existing approaches often degrade at both extremes, when many layers are dropped and when none are, which upsets the balance between inference speed and recognition accuracy. DLD integrates knowledge distillation into an end-to-end framework to address these limitations. Experiments with Conformer and WavLM models on established benchmarks show that DLD substantially reduces word error rate (WER) while also decreasing training time, a step toward efficient and robust speech processing at the edge.

The work pioneers the integration of knowledge distillation and layer dropping within a single, end-to-end framework, and reports state-of-the-art performance for dynamic speech recognition. In this setup, a large static model acts as a ‘teacher,’ transferring knowledge to a dynamic ‘student’ model through distillation. Embedding supervision from all encoder layers of the teacher aligns the dynamic model’s embeddings, guiding the student to maintain performance even as layers are dropped. Experiments used both Conformer and WavLM architectures on three publicly available benchmarks. Dynamic models were trained with varying layer-dropping rates, and the effect on WER and training time was evaluated.
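The teacher-student arrangement described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the authors' implementation: the article does not specify the distance function or loss weighting, so mean squared error, the `alpha` weight, and the function names below are all hypothetical. It also assumes the student exposes a hidden state at every layer position, with a dropped layer simply passing its input through.

```python
from typing import List, Sequence

Vector = List[float]


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def embedding_distillation_loss(
    teacher_layers: Sequence[Vector],
    student_layers: Sequence[Vector],
) -> float:
    """Average per-layer distance between teacher and student embeddings.

    Assumption: both lists hold one embedding per encoder layer position,
    and MSE is used as the distance (the article does not name one).
    """
    if len(teacher_layers) != len(student_layers):
        raise ValueError("teacher and student must expose the same layers")
    per_layer = [mse(t, s) for t, s in zip(teacher_layers, student_layers)]
    return sum(per_layer) / len(per_layer)


def total_loss(task_loss: float, distill_loss: float, alpha: float = 0.5) -> float:
    """Combine the recognition loss with the distillation term.

    `alpha` is a hypothetical weighting, not a value from the paper.
    """
    return task_loss + alpha * distill_loss


# Toy example: two layers, two-dimensional embeddings.
teacher = [[1.0, 2.0], [3.0, 4.0]]
student = [[1.0, 2.0], [3.0, 2.0]]
distill = embedding_distillation_loss(teacher, student)
combined = total_loss(task_loss=0.5, distill_loss=distill)
```

In a real system the embeddings would be tensors of frame-level features and the loss would be back-propagated through the student only, with the teacher kept frozen.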

DLD reduces WER by 9.32% in high layer-dropping scenarios and by 2.25% with no layer dropping. The key innovation is that it mitigates performance loss at both extremes of the dropping spectrum, whereas previous methods struggled at high or low dropping rates. The result is a dynamic architecture suited to devices with variable computational capabilities. During training, layers are dropped at random, allowing the model to learn which layers are most critical for accuracy. This technique also introduces regularization and improves execution speed. Compared to prior work, which relied on static models or two-step training frameworks, DLD offers a streamlined, end-to-end solution for building dynamic speech networks.
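To make the layer-dropping idea concrete, here is a minimal sketch using toy layers that operate on a single number. It assumes a skipped layer behaves as an identity, that each layer is dropped independently with a fixed probability during training, and that a fixed number of layers is skipped at inference by keeping evenly spaced ones. None of these choices is confirmed by the article, and all function names are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

Layer = Callable[[float], float]


def forward_random_drop(
    x: float, layers: Sequence[Layer], drop_prob: float, rng: random.Random
) -> Tuple[float, List[int]]:
    """Training-time pass: skip each layer independently with `drop_prob`."""
    kept: List[int] = []
    for i, layer in enumerate(layers):
        if rng.random() < drop_prob:
            continue  # assumption: a dropped layer passes its input through
        x = layer(x)
        kept.append(i)
    return x, kept


def keep_indices(n_layers: int, n_drop: int) -> List[int]:
    """Pick evenly spaced layers to keep (an illustrative policy only)."""
    if not 0 <= n_drop <= n_layers:
        raise ValueError("n_drop must be between 0 and n_layers")
    n_keep = n_layers - n_drop
    return [(i * n_layers) // n_keep for i in range(n_keep)]


def forward_fixed_drop(x: float, layers: Sequence[Layer], n_drop: int) -> float:
    """Inference-time pass that runs only the kept layers."""
    for i in keep_indices(len(layers), n_drop):
        x = layers[i](x)
    return x


# Toy encoder: four layers that each add 1.0 to the hidden value.
toy_layers: List[Layer] = [lambda h: h + 1.0 for _ in range(4)]
rng = random.Random(0)
full_out, full_kept = forward_random_drop(0.0, toy_layers, 0.0, rng)
none_out, none_kept = forward_random_drop(0.0, toy_layers, 1.0, rng)
half_out = forward_fixed_drop(0.0, toy_layers, n_drop=2)
```

Because the same weights serve every dropping configuration, a device could choose `n_drop` at run time according to its current compute budget. The four-layer encoder here is purely illustrative; the article does not state how many layers the evaluated models have.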

Distillation dropping improves speech recognition accuracy significantly

The team measured performance using both the Conformer and WavLM-base architectures on the LibriSpeech-1000, TED-LIUM v3, and LibriSpeech datasets. The DLD framework outperforms existing random dropping (RD) baselines when trained on LibriSpeech-1000. Further analysis showed that with 10 parameters, WER was 5.90% with DLD, versus 7.28% and 6.95% for the baselines. WER was recorded across varying numbers of dropped layers (nDS), and DLD showed consistent gains, even with minimal or no dropping.

The results also indicate a better performance-computation trade-off: up to 2x computational speed-up on the TED-LIUM v3 test split with nDS = 6, while maintaining improved WER scores of 2.35% and 0.89%. With DLD, the WavLM-base model achieved 4.83% and 7.29% better WER on the LibriSpeech and TED-LIUM datasets, respectively, compared to the baseline with random dropping.

However, the authors acknowledge that the framework’s performance is contingent on the quality of the teacher network and the specific characteristics of the speech data used for training. Future research could explore whether DLD applies to domains beyond speech recognition. Additionally, investigating methods to further optimise the knowledge distillation process and reduce the computational overhead of dynamic layer dropping could enhance the framework’s efficiency and scalability. This work represents a step towards more adaptable and efficient speech processing systems for resource-constrained devices.
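For readers unfamiliar with the metric, WER is conventionally the word-level edit distance (substitutions, deletions, and insertions) between the recognised text and the reference, divided by the number of reference words. The sketch below implements that standard definition. The function name is illustrative, and the paper's exact scoring setup, such as text normalisation, is not described in this article.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the processed reference
    # prefix and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# One deleted word out of six reference words.
example = word_error_rate("the cat sat on the mat", "the cat sat on mat")
```

Here `example` evaluates to 1/6, or roughly 16.7% WER. Corpus-level figures are usually computed by summing errors and reference words over all utterances rather than averaging per-utterance rates.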

👉 More information

🗞 Distillation-based Layer Dropping (DLD): Effective End-to-end Framework for Dynamic Speech Networks

🧠 ArXiv: https://arxiv.org/abs/2601.16117