Scientists are tackling the challenge of reliably decompiling binary code with large language models (LLMs), which currently treat code as plain text and overlook its control flow structure. Yonatan Gizachew Achamyeleh, Harsh Thomare, and Mohammad Abdullah Al Faruque, all from the University of California, Irvine, introduce HELIOS, a novel framework that turns LLM-based decompilation into a structured reasoning process. By summarizing a binary’s control flow into a hierarchical text representation that details basic blocks, successors, loops, and conditionals, HELIOS significantly improves both the syntactic and logical consistency of the decompiled output, particularly for complex, optimized binaries. On the HumanEval-Decompile benchmark for `x86_64`, object file compilability rises from 45.0% to 85.2% with Gemini 2.0 and from 71.4% to 89.6% with GPT-4.1 Mini, and exceeds 94% with compiler feedback, making HELIOS a potentially transformative tool for reverse engineering and security analysis across diverse hardware platforms.

Background

Scientists have unveiled a framework called HELIOS that changes how large language models approach binary decompilation, moving beyond treating code as plain text. The approach explicitly encodes a binary’s control flow and function calls into a hierarchical text representation, enabling more accurate and logically consistent decompilation, particularly for complex, optimized binaries. It reframes LLM-based decompilation as a structured reasoning task, supplying the model with structural context that text-only prompting leaves out. HELIOS summarizes the control flow and function calls of a binary in a textual format that details basic blocks, their connections, and high-level patterns such as loops and conditional statements. Used with both Gemini 2.0 and GPT-4.1 Mini, HELIOS significantly improved object file compilability on the HumanEval-Decompile benchmark for the `x86_64` architecture.

Specifically, HELIOS raised the average compilability rate from 45.0% to 85.2% with Gemini 2.0, and from 71.4% to 89.6% with GPT-4.1 Mini. Integrating compiler feedback pushed compilability beyond 94%, while boosting functional correctness by up to 5.6 percentage points compared with traditional text-only prompting. Together, these results mark a substantial gain in the reliability and usability of LLM-driven decompilation. The experiments also show that HELIOS improves consistency across diverse hardware platforms, not just accuracy.
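
The gains above are differences in percentage points. A quick Python check, using only the `x86_64` compilability figures reported here, separates the absolute gain from the relative one; the helper name `gains` is illustrative.

```python
# Reported x86_64 object file compilability (%), text-only vs. HELIOS.
reported = {
    "Gemini 2.0": (45.0, 85.2),
    "GPT-4.1 Mini": (71.4, 89.6),
}


def gains(before: float, after: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in %)."""
    absolute = after - before
    relative = 100.0 * absolute / before
    return round(absolute, 1), round(relative, 1)


for model, (before, after) in reported.items():
    pp, rel = gains(before, after)
    print(f"{model}: +{pp} pp ({rel}% relative)")
# Gemini 2.0: +40.2 pp (89.3% relative)
# GPT-4.1 Mini: +18.2 pp (25.5% relative)
```

The larger relative jump for Gemini 2.0 reflects its lower text-only baseline.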

Across six architectures spanning the x86, ARM, and MIPS families, the framework reduces the variation in functional correctness while keeping syntactic correctness consistently high, all without fine-tuning the language model. This adaptability matters for reverse engineering workflows that target a wide range of devices and systems. The research positions HELIOS as a practical, versatile tool that gives security analysts recompilable, semantically faithful code across hardware targets. It also points toward automating reverse engineering, a demanding task that is essential for software security, malware analysis, vulnerability triage, and the maintenance of legacy systems. By bridging the gap between the graph-based structure of binary code and the text-native processing of LLMs, HELIOS opens new possibilities for automated program understanding, which could help ease the shortage of skilled reverse engineers, accelerate security research, and reshape how binary analysis is approached.
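
The article does not say which statistic the authors use for variation across architectures. As an assumption, the sketch below summarizes spread as the range and the population standard deviation of per-architecture functional-correctness rates; the architecture labels and scores are toy placeholders, not results from the paper.

```python
from statistics import pstdev


def spread(scores: dict[str, float]) -> dict[str, float]:
    """Summarize how much a per-architecture metric varies.

    `scores` maps an architecture label to a functional-correctness rate (%).
    Returns the range (max - min) and the population standard deviation.
    """
    values = list(scores.values())
    return {
        "range": max(values) - min(values),
        "pstdev": pstdev(values),
    }


# Toy values for illustration only; they are NOT results from the paper.
baseline = {"arch_a": 40.0, "arch_b": 50.0, "arch_c": 60.0}
structured = {"arch_a": 48.0, "arch_b": 50.0, "arch_c": 52.0}

print(spread(baseline))    # wider spread
print(spread(structured))  # narrower spread
```

A lower range or standard deviation means results depend less on which instruction set the binary was compiled for.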

Method

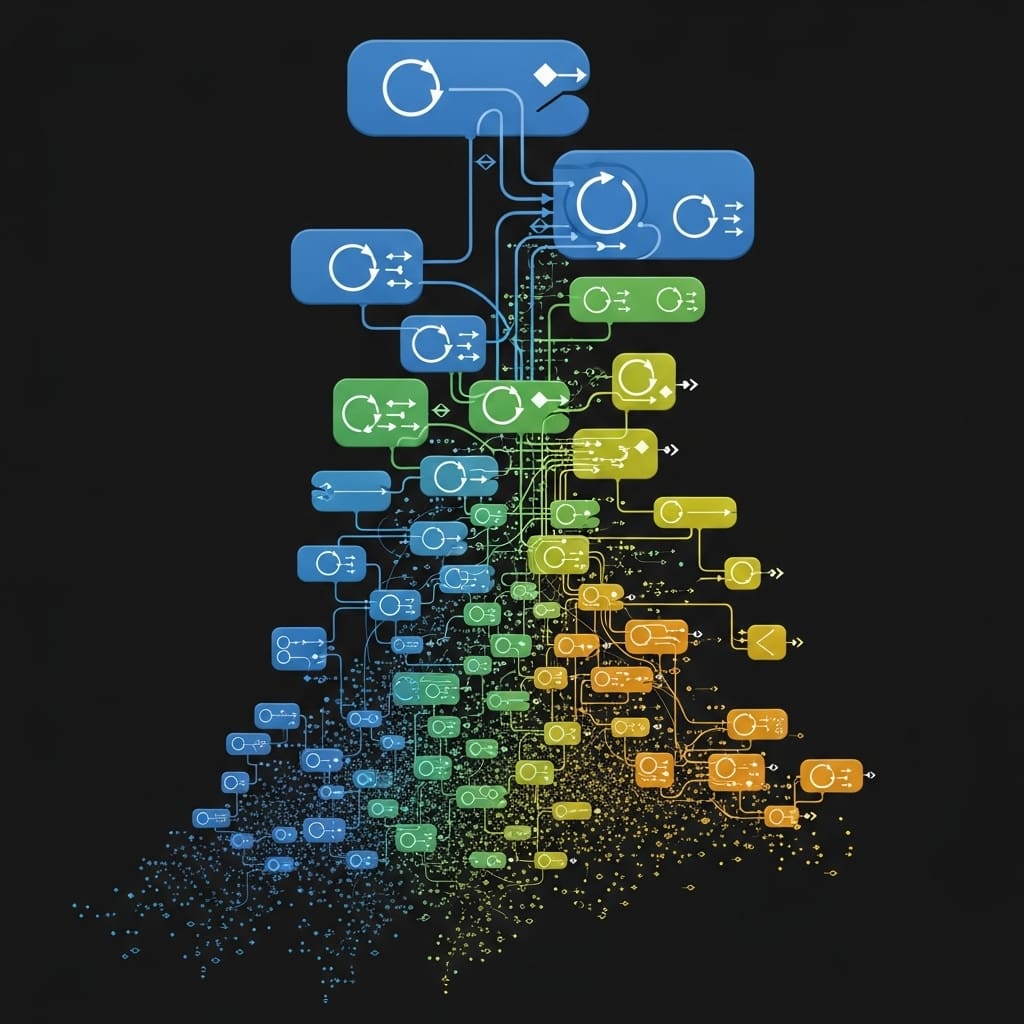

Scientists developed HELIOS, a novel framework that reimagines LLM-based binary decompilation as a structured reasoning task, addressing limitations in existing approaches that treat code as mere text. The study pioneered a method for encoding control flow and call graphs into a hierarchical textual representation, detailing basic blocks, successors, and high-level patterns like loops and conditionals, to provide LLMs with crucial structural context. This representation is then supplied to a general-purpose LLM alongside raw decompiler output, optionally with a compiler-in-the-loop system that flags compilation errors, a crucial feedback mechanism for refinement. Researchers engineered a static analysis backend to derive both control flow and call graphs, forming the foundation for the textual encoding process.
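
To make the idea of a hierarchical text encoding concrete, here is a minimal Python sketch of turning a control flow graph into text that lists each basic block's successors and flags loops and two-way conditionals. It is not the HELIOS implementation: the `BasicBlock` class, the `render_cfg` function, the output layout, and the example graph are all hypothetical. Loops are found with the standard depth-first-search back-edge test, which identifies natural loops in reducible CFGs such as those produced from structured C code.

```python
from dataclasses import dataclass, field


@dataclass
class BasicBlock:
    name: str
    successors: list[str] = field(default_factory=list)


def find_back_edges(blocks: dict[str, BasicBlock], entry: str) -> set[tuple[str, str]]:
    """Return edges (u, v) whose target v is still on the DFS stack when explored.

    In a reducible CFG, each such back edge closes a natural loop headed by v.
    """
    back_edges: set[tuple[str, str]] = set()
    state: dict[str, str] = {}  # "active" while on the DFS stack, then "done"

    def dfs(node: str) -> None:
        state[node] = "active"
        for succ in blocks[node].successors:
            if state.get(succ) == "active":
                back_edges.add((node, succ))
            elif succ not in state:
                dfs(succ)
        state[node] = "done"

    dfs(entry)
    return back_edges


def render_cfg(func_name: str, blocks: dict[str, BasicBlock], entry: str) -> str:
    """Render a CFG as indented text: detected patterns first, then every block."""
    back_edges = find_back_edges(blocks, entry)
    loop_headers = sorted({header for _, header in back_edges})
    lines = [f"Function {func_name} (entry: {entry})", "  Patterns:"]
    for header in loop_headers:
        latches = sorted(src for src, dst in back_edges if dst == header)
        lines.append(f"    - loop headed by {header} (back edge from {', '.join(latches)})")
    for block in blocks.values():
        # A two-way branch at a loop header is the loop test, already reported above.
        if len(block.successors) == 2 and block.name not in loop_headers:
            first, second = block.successors
            lines.append(f"    - conditional at {block.name}: branches to {first} or {second}")
    lines.append("  Blocks:")
    for block in blocks.values():
        lines.append(f"    {block.name} -> {', '.join(block.successors) or '(exit)'}")
    return "\n".join(lines)


# Hypothetical CFG of a loop whose body contains an if-statement.
example = {
    name: BasicBlock(name, succs)
    for name, succs in [
        ("B0", ["B1"]),        # setup
        ("B1", ["B2", "B5"]),  # loop test
        ("B2", ["B3", "B4"]),  # if inside the body
        ("B3", ["B4"]),        # then-branch
        ("B4", ["B1"]),        # increment, jumps back to the test
        ("B5", []),            # return
    ]
}
print(render_cfg("hypothetical_func", example, "B0"))
```

A real backend would take the graph from a disassembler or decompiler rather than a hand-written dictionary, and would also need call-graph information, which this sketch omits.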

The team then crafted prompts containing a summary of the function’s role, a concise description of the CFG and its primary paths, and block-level details linking basic blocks to the initial decompiler output. A set of natural-language rules guides how the LLM interprets this structure so that it makes effective use of the control flow information, mirroring the analytical approach of human experts. The result is a fine-tuning-free, architecture-agnostic pipeline that encourages the model to draw on control flow data for more accurate decompilation. Experiments on the HumanEval-Decompile benchmark for the `x86_64` architecture showed substantial improvements in object file compilability, raising the average from 45.0% to 85.2% with Gemini 2.0 and from 71.4% to 89.6% with GPT-4.1 Mini.
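
The prompt layout described above can be sketched as follows. Everything here is an assumption for illustration: the section titles, the three rules, the `primary_paths` heuristic (bounded depth-first enumeration of entry-to-exit paths that stops at back edges), and the pseudo-C snippets standing in for decompiler output. The article does not reproduce the authors' actual prompt or rules.

```python
def primary_paths(succs: dict[str, list[str]], entry: str, limit: int = 4) -> list[list[str]]:
    """Enumerate up to `limit` paths from the entry by depth-first search.

    A path ends at an exit block (no successors) or at a back edge, which is
    marked "(loop back)" so each loop is walked at most once per path.
    """
    paths: list[list[str]] = []

    def walk(node: str, path: list[str]) -> None:
        path = path + [node]
        targets = succs.get(node, [])
        if not targets:
            paths.append(path)
            return
        for nxt in targets:
            if len(paths) >= limit:
                return
            if nxt in path:
                paths.append(path + [f"{nxt} (loop back)"])
            else:
                walk(nxt, path)

    walk(entry, [])
    return paths[:limit]


# Illustrative rules only; the authors' actual rule text is not reproduced here.
RULES = [
    "Use the control flow below as ground truth; do not invent branches.",
    "Emit one loop per loop header and keep its exit condition.",
    "Return a single C function that compiles on its own.",
]


def build_prompt(summary: str, succs: dict[str, list[str]], entry: str,
                 block_code: dict[str, str], rules: list[str] = RULES) -> str:
    """Assemble a three-level prompt: summary, logical flow, block-level view."""
    lines = ["## Function summary", summary, "", "## Logical flow (primary paths)"]
    for i, path in enumerate(primary_paths(succs, entry), start=1):
        lines.append(f"{i}. " + " -> ".join(path))
    lines += ["", "## Block-level view"]
    for block, code in block_code.items():
        targets = ", ".join(succs.get(block, [])) or "exit"
        lines.append(f"[{block}] successors: {targets}")
        lines.append(f"    {code}")
    lines += ["", "## Rules"] + [f"- {rule}" for rule in rules]
    return "\n".join(lines)


# Hypothetical inputs: a CFG and pseudo-C lines attributed to each block.
succ_map = {"B0": ["B1"], "B1": ["B2", "B5"], "B2": ["B3", "B4"],
            "B3": ["B4"], "B4": ["B1"], "B5": []}
block_code = {"B0": "count = 0; i = 0;", "B1": "if (i >= n) goto B5;",
              "B2": "if (a[i] != key) goto B4;", "B3": "count = count + 1;",
              "B4": "i = i + 1; goto B1;", "B5": "return count;"}
prompt = build_prompt("Counts elements of a[0..n) equal to key.",
                      succ_map, "B0", block_code)
print(prompt)
```

Capping the number of paths keeps the logical-flow section short for functions with many branches, at the cost of omitting some routes through the graph.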

Adding compiler feedback raised compilability beyond 94%, while improving functional correctness by up to 5.6 percentage points over text-only prompting. Across six architectures spanning the x86, ARM, and MIPS families, the system delivers consistently high syntactic correctness and lower variance in functional correctness, without any model fine-tuning. The work goes beyond raw performance gains: the study pioneered a cross-architecture evaluation of structure-aware LLM decompilation, showing that the framework generalizes across diverse instruction sets. In particular, a single prompt design works on x86, ARM, and MIPS, a critical advantage for security analysis of firmware and IoT devices, where diverse hardware targets are common. The resulting recompilable, semantically faithful code positions HELIOS as a practical tool for reverse engineering workflows in security settings, supporting applications such as automated bug finding, function prediction, and fuzzing.

HELIOS boosts LLM binary decompilation success significantly

Scientists have developed HELIOS, a novel framework that fundamentally alters how large language models (LLMs) approach binary decompilation. The research reframes LLM-based decompilation as a structured reasoning task, moving beyond treating code as simple text and instead incorporating the crucial graph structures governing program control flow. Experiments revealed a significant increase in object file compilability, raising the average from 45.0% to 85.2% for Gemini 2.0 and from 71.4% to 89.6% for GPT-4.1 Mini on the HumanEval-Decompile benchmark for the `x86_64` architecture. This delivers a substantial improvement in the reliability and usability of decompiled code.

The team measured the impact of HELIOS, which summarizes a binary’s control flow and function calls into a hierarchical text representation detailing basic blocks, successors, and high-level patterns such as loops and conditionals. This representation, combined with raw decompiler output and optional compiler feedback, is supplied to a general-purpose LLM. With compiler feedback, compilability exceeded 94%, meaning that more than 94% of the generated functions compiled successfully to an object file. Functional correctness also improved by up to 5.6 percentage points over text-only approaches, confirming the enhanced logical consistency of the decompiled code.

The researchers recorded a smaller spread in functional correctness across six architectures spanning the x86, ARM, and MIPS families, while syntactic correctness stayed consistently high. HELIOS achieves these improvements without fine-tuning the LLM, showcasing its adaptability and generality. The framework builds a hierarchical textual representation with three levels: function-level summaries, logical flow sections describing intra-function control flow, and block-level views that provide low-level evidence. This multi-level approach mimics the analytical process of a human expert, who synthesizes information from several representations to build a holistic understanding of the program.

The experiments show that giving a general-purpose LLM explicit descriptions of the control flow graph and related context significantly improves both recompilability and functional correctness. The work uses the Ghidra software reverse engineering framework to extract pseudo-C code, control flow graphs, and metadata for each function. The authors present the framework as a practical building block for reverse engineering workflows in security settings, letting analysts obtain recompilable, semantically faithful code across diverse hardware targets. The result is a powerful new tool for security professionals and researchers alike.

HELIOS improves LLM binary decompilation significantly

Scientists have developed HELIOS, a novel framework addressing limitations in applying large language models (LLMs) to binary decompilation. The core innovation lies in reframing the task as structured reasoning, where the LLM receives a hierarchical textual representation of the binary’s control flow and function calls alongside raw decompiler output. This approach significantly improves the quality of decompiled code, particularly for optimized binaries, by providing crucial structural context previously ignored by LLM-based methods. Experiments on the HumanEval-Decompile benchmark across six processor architectures demonstrate substantial gains in both compilability and functional correctness using HELIOS.

Specifically, the framework increased average object file compilability on `x86_64` to 85.2% for Gemini 2.0 and 89.6% for GPT-4.1 Mini, a marked improvement over text-only prompting. Incorporating compiler feedback pushed compilability beyond 94% and improved functional correctness by up to 5.6 percentage points. The authors acknowledge that HELIOS does not explicitly incorporate data flow information, which could benefit code with complex memory layouts. Future work could explore tighter integration with reverse engineering platforms through interfaces such as the Model Context Protocol, as well as comparisons against established non-LLM decompilers on tasks such as type and data structure recovery.

👉 More information

🗞 HELIOS: Hierarchical Graph Abstraction for Structure-Aware LLM Decompilation

🧠 ArXiv: https://arxiv.org/abs/2601.14598