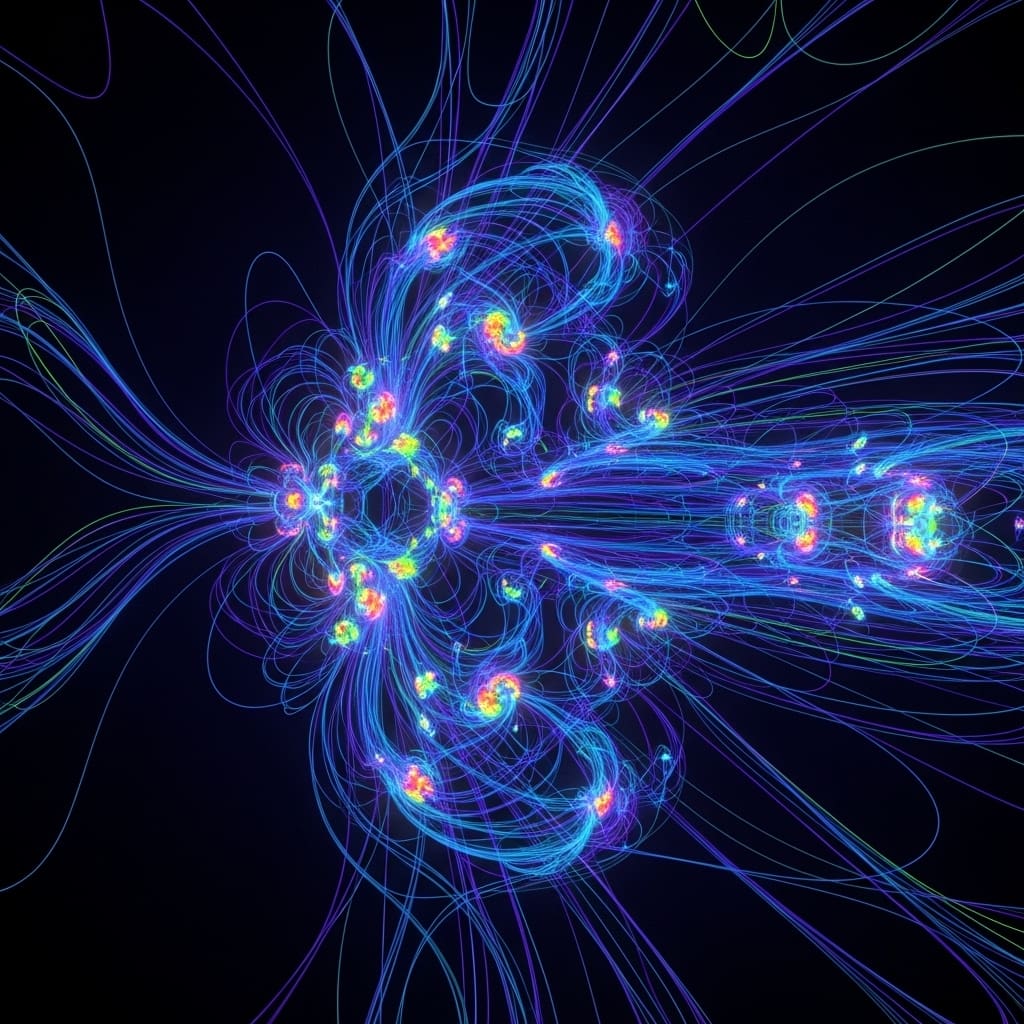

Gyrokinetic plasma simulations are essential for understanding complex behaviour inside fusion energy devices, yet they remain computationally demanding. Giorgio Daneri from the Max Planck Institute for Plasma Physics (IPP), together with colleagues, presents significant advances in accelerating the TRIangular MEsh based Gyrokinetic (TRIMEG) code using graphics processing units (GPUs). Their research details a portable implementation built on the OpenMP API that offloads computationally intensive particle operations to NVIDIA GPUs and works around key compiler limitations. The work is noteworthy because it demonstrates a pathway to substantially shorter simulation times, which matter both for interpreting experimental results and for designing future fusion reactors, whilst keeping the code portable across diverse hardware architectures.

This work addresses the need for faster computational methods for modelling complex plasma phenomena, such as instabilities and turbulence, which are notoriously difficult to study theoretically. The research team restructured and optimised TRIMEG, a high-accuracy particle-in-cell simulation code for tokamak geometries, so that it can exploit the parallel processing capabilities of GPUs and run in far less time. The core of the work was offloading the most computationally intensive parts of the code, namely particle pushing and grid-to-particle operations, onto GPU accelerators.
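To make the two offloaded operations concrete, here is a minimal sketch in plain Python. It is an illustration, not TRIMEG's implementation: it assumes linear (barycentric) interpolation on a single triangle and a simple explicit position update, whereas the real code works on a full triangular mesh with its own basis functions and guiding-centre equations. The names `barycentric_weights`, `gather` and `push_particles` are hypothetical.

```python
def barycentric_weights(point, a, b, c):
    """Weights of `point` relative to triangle vertices a, b, c (2D tuples)."""
    px, py = point
    (ax, ay), (bx, by), (cx, cy) = a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0.0:
        raise ValueError("degenerate triangle")
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb


def gather(point, triangle, nodal_values):
    """Grid-to-particle step: interpolate nodal values to the particle position."""
    weights = barycentric_weights(point, *triangle)
    return sum(w * v for w, v in zip(weights, nodal_values))


def push_particles(positions, triangle, nodal_velocity, dt):
    """Advance each particle by dt using the velocity gathered at its position."""
    vx_nodes = [v[0] for v in nodal_velocity]
    vy_nodes = [v[1] for v in nodal_velocity]
    new_positions = []
    for x, y in positions:  # iterations are independent of one another
        vx = gather((x, y), triangle, vx_nodes)
        vy = gather((x, y), triangle, vy_nodes)
        new_positions.append((x + dt * vx, y + dt * vy))
    return new_positions
```

Each pass through the particle loop reads only the grid data and its own particle, so the iterations are independent. That independence is what makes this kind of loop a natural candidate for offloading to a GPU.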

Because the offloading is expressed through OpenMP, the code remains portable across diverse architectures, including both AMD and NVIDIA GPUs. Working around compiler limitations required careful code restructuring, and the team used profiling tools to analyse kernel performance, evaluating resource occupancy and memory usage. They explored GPU grid sizes in detail and ran scalability studies to optimise performance and to assess the efficiency of a hybrid MPI-OpenMP parallelisation strategy. The experiments show a substantial speedup of the GPU implementation over the traditional CPU version.

The researchers calculated the speedup in several different ways, so that the reported gains do not depend on a single choice of baseline. Crucially, they verified the correctness of the GPU-accelerated TRIMEG code by simulating the Ion Temperature Gradient (ITG) mode and checking the energy growth rate and the two-dimensional mode structures. Faster runs open the door to higher-fidelity plasma simulations and to more detailed investigations in fusion energy research and advanced plasma control schemes. Accurate models of plasma behaviour are fundamental to designing and optimising next-generation fusion devices, and the GPU-accelerated TRIMEG code is a useful tool for that purpose. The portability afforded by OpenMP adds to the impact of the work, since researchers can run the code on a wider range of computational resources.

TRIMEG GPU Acceleration via OpenMP Implementation

Scientists increasingly depend on simulations to investigate complex plasma phenomena, including instabilities and turbulence, which are challenging to study theoretically. Accurate simulations are vital for designing experiments and interpreting results from next-generation devices, yet high-fidelity gyrokinetic simulations demand substantial computational resources. Overcoming compiler limitations required careful code restructuring to optimise performance for GPU acceleration, a key methodological innovation. Kernel performance was then rigorously evaluated through GPU grid size exploration and scalability studies, identifying optimal configurations for maximum efficiency.

The study adopted a hybrid parallelisation strategy that combines the Message Passing Interface (MPI) with OpenMP to increase computational throughput. Efficiency was assessed by comparing the GPU implementation against a pure CPU version, with the speedup computed in several different ways rather than from a single baseline. To verify the correctness of the accelerated implementation, the team simulated the Ion Temperature Gradient (ITG) mode with the GPU-accelerated TRIMEG code. Verification involved comparing the energy growth rate and two-dimensional mode structures obtained from the GPU simulation with established benchmarks.

The results confirmed the accuracy of the GPU-accelerated code, which reproduces the expected behaviour of the ITG mode. The OpenMP-based approach also keeps the code portable across both AMD and NVIDIA architectures, broadening its applicability.
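The growth-rate check used in the ITG verification can be sketched as follows. This is a hedged illustration rather than the authors' post-processing: it assumes the field energy grows as exp(2γt) in the linear phase (energy being proportional to amplitude squared), and it fits ln(E) against t by ordinary least squares. The function names are hypothetical.

```python
import math


def growth_rate(times, energies):
    """Estimate gamma from E(t) ~ exp(2*gamma*t) by a least-squares fit of ln(E) vs t."""
    if len(times) != len(energies) or len(times) < 2:
        raise ValueError("need at least two matching samples")
    logs = [math.log(e) for e in energies]
    n = len(times)
    t_mean = sum(times) / n
    l_mean = sum(logs) / n
    num = sum((t - t_mean) * (l - l_mean) for t, l in zip(times, logs))
    den = sum((t - t_mean) ** 2 for t in times)
    return 0.5 * num / den  # slope of ln(E) is 2*gamma under the stated assumption


def relative_difference(a, b):
    """Symmetric relative difference, e.g. between CPU and GPU growth rates."""
    scale = max(abs(a), abs(b))
    return 0.0 if scale == 0.0 else abs(a - b) / scale
```

In practice one would fit only the window in which the mode grows exponentially, then compare the CPU and GPU values with `relative_difference` against a tolerance chosen for the case at hand.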

The team meticulously ported both the particle pushing and grid-to-particle operations to GPU platforms, overcoming compiler limitations through strategic code restructuring. Kernel performance was rigorously evaluated via GPU grid size exploration and scalability studies, providing detailed insights into resource occupancy and memory usage. Measurements confirm that the hybrid MPI-OpenMP offloading parallelization strategy significantly improves computational efficiency. Specifically, the speedup of the GPU implementation was calculated and benchmarked against the pure CPU version using multiple methodologies, demonstrating substantial gains in processing speed.
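The article does not say which speedup definitions the authors used, so the following is only a sketch of why more than one definition is useful. It assumes three hypothetical baselines (a single CPU core, a full CPU node, and a per-GPU normalisation). The timings in the usage note are made-up placeholders, not measurements from the paper.

```python
def speedups(t_cpu_core, t_cpu_node, t_gpu_node, gpus_per_node):
    """Return speedup ratios under three hypothetical baseline choices.

    t_cpu_core    -- wall time on one CPU core
    t_cpu_node    -- wall time on all cores of one CPU node
    t_gpu_node    -- wall time on one node using all of its GPUs
    gpus_per_node -- number of GPUs used in the GPU run
    """
    if min(t_cpu_core, t_cpu_node, t_gpu_node) <= 0 or gpus_per_node <= 0:
        raise ValueError("timings and GPU count must be positive")
    node_ratio = t_cpu_node / t_gpu_node
    return {
        "gpu_node_vs_cpu_core": t_cpu_core / t_gpu_node,
        "gpu_node_vs_cpu_node": node_ratio,
        # CPU-node equivalents delivered by each GPU, assuming ideal scaling
        "per_gpu_vs_cpu_node": node_ratio / gpus_per_node,
    }
```

With placeholder timings, `speedups(640.0, 40.0, 10.0, 4)` returns ratios of 64, 4 and 1 for the three baselines. The same run can therefore look very different depending on the baseline, which is one reason to report more than one figure.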

The results demonstrate a successful simulation of the Ion Temperature Gradient (ITG) mode using the GPU-accelerated TRIMEG code. The energy growth rate and two-dimensional mode structures obtained from the GPU implementation agree with those of the CPU version, supporting the correctness of the accelerated code. The energy growth rate was also found to be consistent with established theoretical predictions. These tests indicate that the methodology is robust and pave the way for more complex and realistic plasma simulations. The study also assessed the efficiency of data transfers between the CPU and GPU, optimising data handling to minimise bottlenecks.

Measurements indicate a substantial reduction in simulation time for complex scenarios, enabling researchers to explore a wider range of plasma parameters and configurations. The result is a useful tool for fusion energy research, allowing more detailed investigations of plasma behaviour and better-informed design of next-generation devices. By carefully porting the particle pushing and grid-to-particle operations, and by restructuring code to work around compiler limitations, the team achieved significant performance gains and demonstrated the potential of GPU acceleration for complex plasma simulations. The advance matters because gyrokinetic simulations are computationally demanding, which hinders both fundamental research and the efficient design of future fusion devices.

By successfully offloading key computational tasks to GPUs, researchers can substantially reduce simulation execution time, enabling more detailed investigations of plasma instabilities, turbulence, and nonlinear behaviours. The use of OpenMP ensures code portability across different GPU architectures, broadening the applicability of this acceleration technique. Verification of the GPU-accelerated code was performed by simulating the Ion Temperature Gradient (ITG) mode, confirming the accuracy of energy growth rates and two-dimensional mode structures. The authors acknowledge that achieving complete agreement between GPU and CPU simulation results proved challenging, particularly in low-β and low-n scenarios, which are inherently less numerically stable.

They identified discrepancies stemming from differing hardware implementations of arithmetic operations on GPUs and the complexities introduced by the OpenMP abstraction. Furthermore, initial performance bottlenecks related to memory transfers between the host and device required extensive debugging and code optimization. Future research will likely focus on refining the GPU implementation to address these remaining discrepancies and further improve performance, potentially exploring alternative parallelization strategies or lower-level programming approaches to maximize GPU utilization.
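One general reason why CPU and GPU runs can disagree in the last digits is that floating-point addition is not associative, so a parallel reduction that sums in a different order can return a different result. The sketch below shows that effect in plain Python. It is not a reproduction of the specific discrepancies the authors report, and `naive_sum` and `chunked_sum` are hypothetical helpers that stand in for a serial loop and a parallel reduction.

```python
import math


def naive_sum(values):
    """Left-to-right accumulation, as in a simple serial loop."""
    total = 0.0
    for v in values:
        total += v
    return total


def chunked_sum(values, n_chunks):
    """Sum contiguous chunks first, then combine the partial sums.

    This mimics how a parallel reduction groups the additions.
    """
    size = -(-len(values) // n_chunks)  # ceiling division
    partials = [naive_sum(values[i:i + size]) for i in range(0, len(values), size)]
    return naive_sum(partials)


# Deliberately ill-conditioned data: large values cancel, small ones remain.
data = [1e16, 1.0, -1e16, 1.0]
serial = naive_sum(data)         # one summation order
parallel = chunked_sum(data, 2)  # another summation order
exact = math.fsum(data)          # correctly rounded reference
```

Here the two orderings and the correctly rounded reference all differ because the data are deliberately ill-conditioned. In a well-conditioned simulation the differences are far smaller, but they can grow in numerically sensitive cases such as the low-β and low-n scenarios the authors mention.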

👉 More information

🗞 GPU Acceleration and Portability of the TRIMEG Code for Gyrokinetic Plasma Simulations using OpenMP

🧠 ArXiv: https://arxiv.org/abs/2601.14301