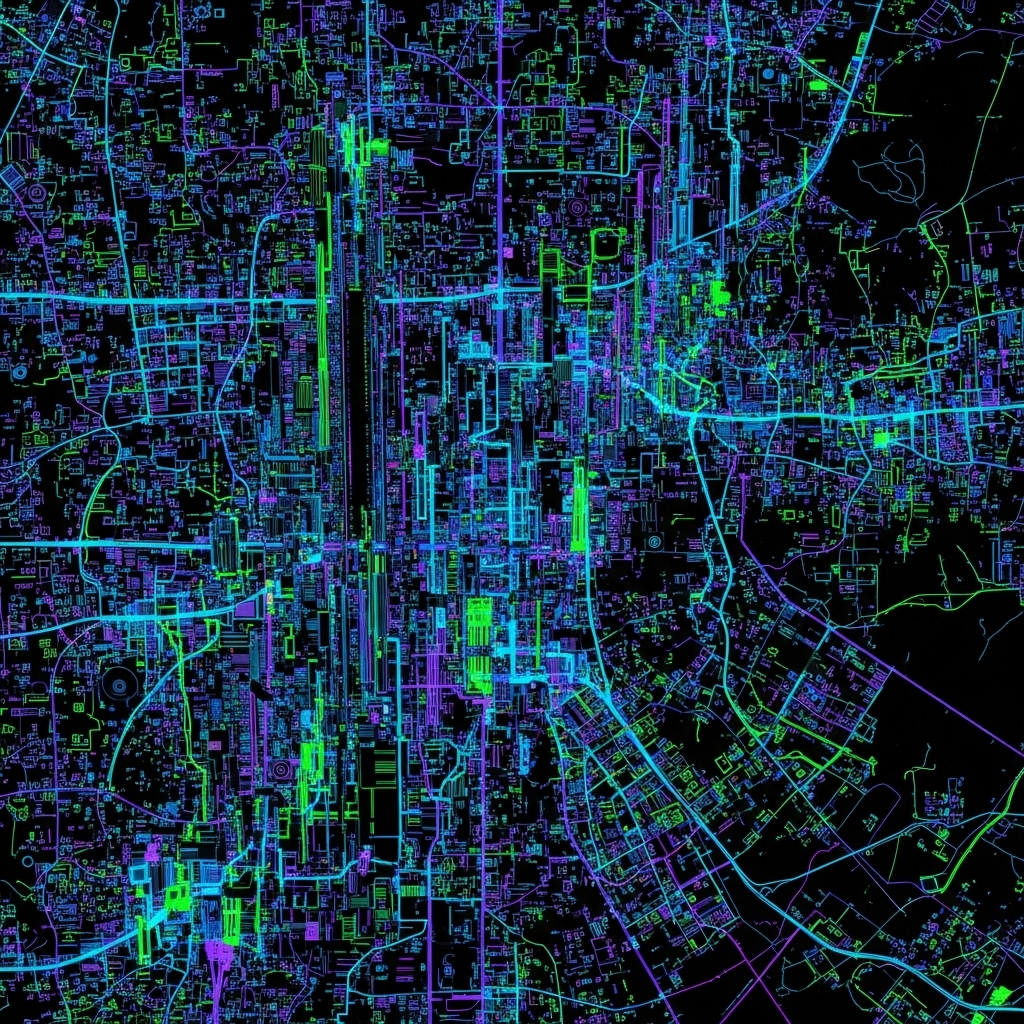

Scientists are increasingly focused on understanding and quantifying the elusive concept of urban identity, and a new framework called Virtual Urbanism (VU) offers a promising approach. Glinskaya Maria of The University of Tokyo and colleagues present an AI-driven method for assessing what makes a city, or part of a city, uniquely recognisable, using synthetic urban replicas generated with diffusion models. Their pilot study, “Virtual Urbanism and Tokyo Microcosms”, recreated nine Tokyo areas that human participants identified with a mean accuracy of about 81%, and it introduces a way of measuring ‘Urban Identity Level’ (UIL). The work is a step towards automated, multi-parameter metrics for urban analysis that could change how we understand and preserve the character of cities worldwide.

The researchers present a feasibility study, “Virtual Urbanism and Tokyo Microcosms”. A pipeline integrating Stable Diffusion and LoRA models was used to produce synthetic replicas of nine Tokyo areas, rendered as dynamic synthetic urban sequences from which existing orientation markers were excluded in order to elicit core identity-forming elements. Human-evaluation experiments assessed the perceptual legitimacy of the replicas, quantified area-level identity, and derived the core identity-forming elements. Results showed a mean identification accuracy of ~81%, confirming the validity of the replicas. The Urban Identity Level (UIL) metric enabled assessment of identity levels across areas, while semantic analysis revealed culturally embedded typologies as the core identity-forming elements.
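For readers unfamiliar with LoRA: it adapts a large pretrained model, here Stable Diffusion, by learning a small low-rank correction to selected weight matrices while the original weights stay frozen. The article does not report which layers were adapted or which rank was used, so the symbols below ($W_0$, $B$, $A$, $r$, $\alpha$) follow the standard LoRA formulation rather than the study's own configuration:

$$
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x,
\qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)
$$

Only $A$ and $B$ are trained. With illustrative sizes $d = k = 768$ and $r = 8$, the correction has $r(d + k) = 8 \times 1536 = 12{,}288$ trainable parameters, against $dk = 589{,}824$ for the full matrix, roughly 2%. That small footprint is what makes LoRA a practical way to specialise a general image model towards a particular visual context.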

AI for quantifying and preserving urban identity

Scientists are increasingly adapting machine-learning techniques to urban-perception research. Recent advances in Artificial Intelligence (AI) are reshaping analytical methodologies across architectural and urban studies, allowing researchers to move beyond conventional modes of urban study. Contemporary urban development is often shaped by globalization and economic optimization, in which locally embedded urban identity, a configuration of perceptual cues, tends to be treated as secondary. This has made it more important to develop computational methods capable of documenting and preserving urban identity.

Existing approaches often rely on limited outputs from generative AI, failing to leverage its potential for high-dimensional variation. This work addresses these limitations by introducing Virtual Urbanism (VU), a multimodal AI-driven analytical framework for quantifying urban identity through synthetic urban replicas. Synthetic urban replicas are defined as generatively produced, non-metric reconstructions of real urban environments, functioning as an analytical medium. The main analytical aspect of these replicas is their dynamic quality, a state of constant fluctuation in visual representation enabled by AI-driven synthetic generative variation.

A dynamic synthetic urban sequence is defined as a series of images rendered within the replica and re-generated through a diffusion model, undergoing image-to-image transformation to introduce maximal AI-driven variation. These frames are assembled into a video sequence, producing dense perceptual fluctuation and serving as the primary analytical tool for identifying core urban identity-forming elements. This builds on the interrepresentational approach originally introduced by Marcos Novak, who proposed that essence emerges through a sequence of appearances governed by an internal logic. The VU framework uses generative AI to construct synthetic replicas endowed with this dynamic quality, making the internal logic of urban identity perceptible through systematic repetition.
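The “maximal variation” in these sequences comes from how image-to-image diffusion works: each input frame is pushed partway along the forward noising process and then denoised, and a strength parameter decides how far. The sketch below shows only that forward step, in the SDEdit style, on a toy list of numbers standing in for an image latent. It assumes a DDPM-style linear beta schedule (Stable Diffusion itself uses a “scaled linear” schedule on VAE latents), and the function names, strength value and per-frame seeding are illustrative assumptions, not the study's settings:

```python
import math
import random
from typing import List


def alpha_bar(t: int, num_steps: int = 1000,
              beta_start: float = 1e-4, beta_end: float = 0.02) -> float:
    """Fraction of signal variance kept after t steps of a linear beta schedule."""
    if t <= 0:
        return 1.0
    prod = 1.0
    for i in range(t):
        # Linearly interpolated noise level for step i (assumed DDPM-style schedule).
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        prod *= 1.0 - beta
    return prod


def partially_noise(x0: List[float], strength: float, seed: int,
                    num_steps: int = 1000) -> List[float]:
    """Forward-noise x0 to timestep t = strength * T (SDEdit-style img2img start).

    A denoiser (not shown) would then regenerate the image from this noisy
    state; higher strength keeps less of x0 and leaves more room for variation.
    """
    t = round(strength * num_steps)
    ab = alpha_bar(t, num_steps)
    rng = random.Random(seed)
    return [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * rng.gauss(0.0, 1.0)
            for x in x0]


def noisy_sequence(frames: List[List[float]], strength: float,
                   base_seed: int = 0) -> List[List[float]]:
    """Give every rendered frame its own seed so each regeneration differs."""
    return [partially_noise(frame, strength, base_seed + k)
            for k, frame in enumerate(frames)]
```

Because `alpha_bar` shrinks as `strength` grows, a higher strength preserves less of each rendered frame and leaves more for the model to reinvent; seeding every frame differently is one simple way to make consecutive regenerations fluctuate.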

The present pilot study operationalizes this conceptual logic in a preliminary form. In the long term, the VU framework aims to advance the development of computationally tractable identity metrics, formalizing urban identity as a measurable quantity and quantifying its defining elements. This paper investigates three objectives: (a) the capacity of generative models to replicate real urban settings; (b) the perceptual validity of these replications, assessed through human evaluation; and (c) the applicability of synthetic replicas as an analytical medium for studying urban identity at the streetscape level.

Section 2 reviews prior research. Section 3 introduces the pilot study. Section 4 describes the method for constructing synthetic urban replicas, corresponding to objective (a). Section 5 outlines the method for extracting analytical data through human evaluation, addressing objective (b). Section 6 analyses the acquired data to generate insights addressing objective (c). Section 7 reflects on methodological and technical constraints. Section 8 summarizes the outcomes and outlines directions for future development.

Generative AI is finding growing use within architectural discourse, ranging from automated ideation to simulation and design evaluation. Vision-based generative models have shifted from Generative Adversarial Networks (GANs) to Denoising Diffusion Models (DDMs), such as Latent Diffusion, which offer text-to-image generation and simplified user interfaces. This transition has moved the technology from niche science to mainstream application. While DDMs are mostly used during the Schematic Design stage, their applications range from early-stage urban analysis to controllable floorplan generation and three-dimensional spatial generation.

However, computational approaches often overlook the intangible social, cultural, and perceptual dimensions that constitute the essence of place. This work responds by contributing to the empirical exploration of generative models’ capacity to articulate contextual qualities and gauge urban identity, enabling qualitative factors to be incorporated into measurable parameters. A parallel trajectory of AI integration has emerged within urban perception studies, where machine learning is used to evaluate how humans perceive built environments. Representative studies include Zhang et al., predicting perceptual attributes from street-view imagery; Liu et al., employing geo-tagged photos to reassess Lynch’s imageability elements; and Ordonez and Berg, demonstrating cross-city prediction of perceptual judgments from visual data. What earlier theorists pursued as a qualitative exploration of city atmospheres, such as the Situationist International’s psychogeographic maps, is now being revived and operationalized, translating affective qualities into quantifiable data. Despite the integration of AI-driven methods, few studies have examined qualitative urban characteristics through artificially generated media and their alignment with human perception.

AI Recreates Tokyo Areas with 81% Identification Accuracy

Scientists have developed Virtual Urbanism (VU), an analytical framework that uses AI to quantify urban identity through synthetic urban replicas. The research team constructed dynamic synthetic urban sequences of nine Tokyo areas using a pipeline integrating Stable Diffusion and LoRA models, deliberately excluding orientation markers to isolate core identity elements. Human-evaluation experiments assessed the perceptual legitimacy of these replicas, quantified area-level identity, and pinpointed the elements that contribute most to a sense of place. Participants identified the areas with a mean accuracy of approximately 81%, supporting the perceptual validity of the generated urban environments.
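The article reports a mean identification accuracy of about 81% but not how the mean was taken. Assuming a forced-choice task in which each participant names the area a sequence depicts, the sketch below computes per-area accuracy and two possible means: the unweighted average over areas and the pooled rate over all responses. The responses are invented toy data, not results from the study, and the area labels are placeholders:

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each response pairs the true area with the area the participant chose.
Response = Tuple[str, str]

# Hypothetical toy data; area_1 has 4 responses, area_2 has 2, area_3 has 1.
TOY_RESPONSES: List[Response] = [
    ("area_1", "area_1"), ("area_1", "area_1"),
    ("area_1", "area_2"), ("area_1", "area_1"),
    ("area_2", "area_2"), ("area_2", "area_3"),
    ("area_3", "area_3"),
]


def per_area_accuracy(responses: List[Response]) -> Dict[str, float]:
    """Share of correct identifications for each true area."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for true_area, guess in responses:
        total[true_area] += 1
        if guess == true_area:
            correct[true_area] += 1
    return {area: correct[area] / total[area] for area in total}


def mean_accuracy(per_area: Dict[str, float]) -> float:
    """Unweighted (macro) mean: every area counts equally."""
    return sum(per_area.values()) / len(per_area)


def pooled_accuracy(responses: List[Response]) -> float:
    """Pooled (micro) rate: every response counts equally."""
    return sum(true_area == guess for true_area, guess in responses) / len(responses)


if __name__ == "__main__":
    acc = per_area_accuracy(TOY_RESPONSES)
    print(acc, mean_accuracy(acc), pooled_accuracy(TOY_RESPONSES))
```

On this toy data the two averages diverge (0.75 versus 5/7, about 0.714) because the areas received different numbers of responses, which is why it matters which kind of mean a reported figure refers to. Per-area rates like these are one natural input to an area-level identity score, but the article does not say how UIL is actually computed.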

The team measured urban identity using a newly developed Urban Identity Level (UIL) metric, enabling comparative assessment of identity strength across the nine Tokyo areas. Semantic analysis of the synthetic sequences revealed that culturally embedded typologies consistently emerged as the core identity-forming elements, highlighting the importance of local architectural and spatial characteristics. Experiments revealed that the dynamic nature of the synthetic sequences, a constant fluctuation in visual representation, proved crucial for identifying these recurring perceptual cues. These sequences, generated through AI-driven image-to-image transformations, created a dense perceptual fluctuation that allowed researchers to isolate the features most strongly associated with each area’s unique identity.
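The article does not describe how its semantic analysis was carried out. As a much simpler stand-in, and explicitly not the authors' method, the sketch below tallies which elements participants mention for each area and reports the most frequent ones; the function name, element labels and area names are hypothetical:

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def top_elements(mentions: Iterable[Tuple[str, str]],
                 k: int = 3) -> Dict[str, List[Tuple[str, int]]]:
    """For each area, return the k most frequently mentioned elements.

    `mentions` holds (area, free-text element) pairs collected from participants.
    """
    by_area: Dict[str, Counter] = {}
    for area, element in mentions:
        # Light normalisation so "Narrow alley" and "narrow alley " count together.
        label = element.strip().lower()
        by_area.setdefault(area, Counter())[label] += 1
    # Counter.most_common orders equal counts by first appearance.
    return {area: counts.most_common(k) for area, counts in by_area.items()}


if __name__ == "__main__":
    mentions = [
        ("area_1", "Narrow alley"), ("area_1", "narrow alley "),
        ("area_1", "shop signage"), ("area_2", "overhead wires"),
    ]
    print(top_elements(mentions, k=1))
```

A fuller analysis would also need to merge synonyms and group individual mentions into broader categories, such as building typologies, before counting; that grouping is where most of the interpretive work lies.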

The results indicate that the VU framework can generate synthetic environments capable of eliciting robust perceptual responses from human evaluators. According to the authors, the framework’s ability to create dynamic sequences, with each frame undergoing generative transformation, is key to revealing underlying patterns in urban identity. The study offers a viable framework for AI-augmented urban analysis, paving the way for automated, multi-parameter identity metrics and a deeper understanding of how cities are perceived. The approach moves beyond static imagery, offering a new methodology for exploring the complex relationship between urban form, cultural context, and human experience.

The authors have made the primary visual analytical materials and the fine-tuned generative models produced during the study publicly available, to facilitate further research on and application of the VU framework. The work positions generative AI not as a supplement to existing analytical workflows but as a core methodological driver, enabling the systematic revelation of previously inaccessible patterns in urban identity. The research outlines a path toward computational urban identity archiving and the identification of identity-rich urban environments, with potential applications in design practice and urban planning.

Tokyo’s identity quantified via synthetic urban replicas

Researchers have developed Virtual Urbanism (VU), a new analytical framework for quantifying urban identity using synthetic replicas of cities. The pilot study, focusing on Tokyo, demonstrates a pipeline integrating Stable Diffusion and LoRA models to generate dynamic urban sequences, effectively recreating areas without relying on obvious landmarks. Human evaluation confirmed the perceptual legitimacy of these replicas, achieving approximately 81% identification accuracy, and enabled the creation of an Urban Identity Level (UIL) metric to assess identity strength across different locations. The findings establish VU as a viable method for computationally analysing urban environments, moving beyond purely measurable parameters to incorporate perceptual qualities.

Semantic analysis of the synthetic Tokyo areas revealed culturally embedded typologies as key elements forming urban identity, suggesting a pathway towards automated, multi-parameter identity metrics. The authors acknowledge limitations related to the scope of the study, focusing on a single city, and the potential for bias in human evaluations. Future research should explore the application of VU to diverse urban contexts and investigate the integration of additional data sources, such as soundscapes and pedestrian movement patterns, to refine the framework further.

👉 More information

🗞 Virtual Urbanism: An AI-Driven Framework for Quantifying Urban Identity. A Tokyo-Based Pilot Study Using Diffusion-Generated Synthetic Environments

🧠 arXiv: https://arxiv.org/abs/2601.13846