Researchers are increasingly finding that component systems, from genomes and cities to texts and software, display strikingly regular statistical patterns. Andrea Mazzolini (Università di Torino & I.N.F.N), Mattia Corigliano (IFOM ETS), Rossana Droghetti (IFOM ETS) and colleagues demonstrate that these patterns may often arise simply from basic combinatorial constraints, rather than revealing unique system-specific mechanisms. Their work introduces a unifying mathematical framework to compare modular systems across diverse fields, identifying common ‘null’ trends and, crucially, offering tools to isolate genuinely informative features, potentially unlocking deeper understanding of the underlying generative processes and causal relationships that drive these complex systems. This approach, combining statistical mechanics and machine learning, promises to refine our ability to distinguish between universal principles and truly novel system behaviours.

This breakthrough establishes a method for disentangling inherent constraints from the unique mechanisms driving system formation, offering a powerful tool for inferring hidden processes. The study, published January 21, 2026, and available as arXiv:2601.13985v1, leverages statistical mechanics and machine learning to explain the origins of general regularities and isolate informative features for understanding system-specific generation.

The team achieved a comprehensive description of component systems, representing them as realizations assembled from modular components, such as genes in genomes or words in texts. This representation is encoded in a component matrix, quantifying the occurrences of each component within each realization, allowing for a standardized analysis across disparate domains. By introducing a common notation based on summations and binarized counts from this matrix, the researchers defined key observables like component abundance, realization size, and component occurrence, facilitating comparisons between systems as diverse as evolutionary genomics and urban studies. This framework allows for the application of network theory tools, but importantly, provides a specific notation tailored to the unique interpretation of component systems and the meaning of the introduced quantities.

These systems consistently exhibit statistical regularities, often described as “laws”, despite differing microscopic details; examples include Zipf’s law for component frequencies and Heaps’ law relating diversity to system size. The research argues that these patterns are not merely coincidental, but can emerge from fundamental combinatorial and sampling constraints inherent in the modular structure of these systems. The framework also supports the identification of important components and realizations, as well as the discovery of groups with similar compositions using community detection and topic modeling. The work opens new avenues for understanding complex systems by providing a means to “subtract” general regularities from observed data, isolating the informative features that reveal hidden generative processes. Researchers can thereby move beyond simply observing patterns and begin to infer the underlying mechanisms driving system formation, with potential applications ranging from designing more efficient software to understanding the evolution of genomes. The unifying framework promises to promote a fruitful exchange of ideas and data analysis techniques between fields, advancing our understanding of the organizational principles governing complex systems.

Modular Systems and LLM-based Inference

Scientists investigated component systems, collections of parts forming larger entities, across diverse fields including biology, ecology, and social sciences, seeking to understand the origins of statistical regularities observed within them. The research team engineered a unifying mathematical framework to compare these modular systems, identifying both universal “null” trends and system-specific characteristics indicative of underlying generative dynamics. This framework facilitated the application of statistical mechanics and machine-learning tools with a twofold objective: explaining the prevalence of general regularities and isolating informative features for inferring hidden generative processes. Experiments employed Large Language Models (LLMs) as both analytical tools and empirical systems, leveraging their ability to internalize statistical patterns from massive datasets of language, code, and biological sequences.

Researchers harnessed LLMs to extract latent structures, such as embeddings reflecting dependency relations, and to probe the statistical and generative principles these models have learned. The study pioneered a method of subtracting universal regularities from data, effectively isolating system-specific features and revealing mechanistic and causal information previously obscured. This approach enables a shift in focus from simply cataloging empirical laws to quantitatively comparing generative mechanisms and identifying unique system trends. Notably, the work demonstrated that Zipf-like distributions, where frequency is inversely proportional to rank, can arise in machine learning during inference processes under conditions of limited sampling and high dimensionality.
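As a reminder of what a Zipf-like distribution means in practice, the standard textbook form can be written as below. The symbols \( r \) (rank), \( f(r) \) (frequency of the component of rank \( r \)) and \( \alpha \) (exponent) are notation assumed here for illustration and are not taken from the paper.

\[
f(r) \propto r^{-\alpha}, \qquad \alpha \approx 1
\]

The case \( \alpha = 1 \) corresponds to frequency being exactly inversely proportional to rank; empirical systems are usually described as Zipf-like when the exponent is close to, but not necessarily equal to, one.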

It is often implicitly assumed that such distributions also arise in component systems because of their heterogeneous components and modular structure. The research suggests that observing a Zipf law may indicate that the measured variables are relevant, rather than directly reflecting system behaviour, although joint marginal laws remain observable. To further refine this understanding, the team advocates for a unified statistical mechanical theory that classifies generative models, bridging equilibrium sampling, history-dependent processes, and rule-based assembly. This methodology, combining null models, mechanistic generative models, and LLM-based representation learning, promises a coherent theory of component systems, with the potential to clarify the origins of observed regularities and guide data-driven discovery across scientific domains.

Component system analysis reveals universal statistical patterns

Scientists have developed a unifying mathematical framework for analysing component systems, revealing robust statistical regularities that govern their complexity across a wide range of domains. The study shows that systems as diverse as genomes, written texts, and cities exhibit patterns reminiscent of physical laws, motivating deeper investigation into whether these regularities arise from underlying generative mechanisms or from fundamental combinatorial constraints. By introducing a common notation for comparing modular systems across disciplines, the researchers identify both universal trends and system-specific signatures that reflect distinct generative dynamics.

The framework begins with the definition of a matrix \( n_{ij} \), which represents the count of component \( i \) in realization \( j \). From this, the realization size \( m_j \) is computed as the total number of components in each realization, and each count is divided by \( m_j \) to obtain the component frequency \( f_{ij} \). Component abundance \( a_i \) is defined by summing occurrences across all realizations and is further normalized to yield the ensemble frequency \( g_i \). These quantities together provide a complete description of the system at both the component and realization levels. Additional measures include the sharing number \( o_i \), which captures how many realizations contain a given component, and the vocabulary size \( h_j \), defined as the number of distinct components within a realization.
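The definitions above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function name `component_observables`, the nested-list layout (`n[i][j]` is the count of component i in realization j), and the assumption that no realization is empty are choices made here for the example.

```python
def component_observables(n):
    """Compute the observables described in the text from a component matrix.

    n[i][j] is the count of component i in realization j (hypothetical layout).
    Assumes every realization contains at least one component.
    """
    n_components = len(n)
    n_realizations = len(n[0])

    # Realization size m_j: total number of components in realization j.
    m = [sum(n[i][j] for i in range(n_components)) for j in range(n_realizations)]

    # Component frequency f_ij: counts normalized by realization size.
    f = [[n[i][j] / m[j] for j in range(n_realizations)] for i in range(n_components)]

    # Component abundance a_i: occurrences summed over all realizations.
    a = [sum(row) for row in n]

    # Ensemble frequency g_i: abundance normalized by the total count.
    total = sum(a)
    g = [a_i / total for a_i in a]

    # Sharing number o_i: number of realizations containing component i.
    o = [sum(1 for count in row if count > 0) for row in n]

    # Vocabulary size h_j: number of distinct components in realization j.
    h = [sum(1 for i in range(n_components) if n[i][j] > 0)
         for j in range(n_realizations)]

    return {"m": m, "f": f, "a": a, "g": g, "o": o, "h": h}


# Toy matrix: 3 components (rows) across 3 realizations (columns).
toy = [
    [2, 0, 1],
    [1, 1, 0],
    [0, 3, 0],
]
obs = component_observables(toy)
print(obs["m"], obs["a"], obs["o"], obs["h"])
```

The binarized matrix (`count > 0`) is what yields the sharing number and vocabulary size, while the raw counts yield sizes and abundances.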

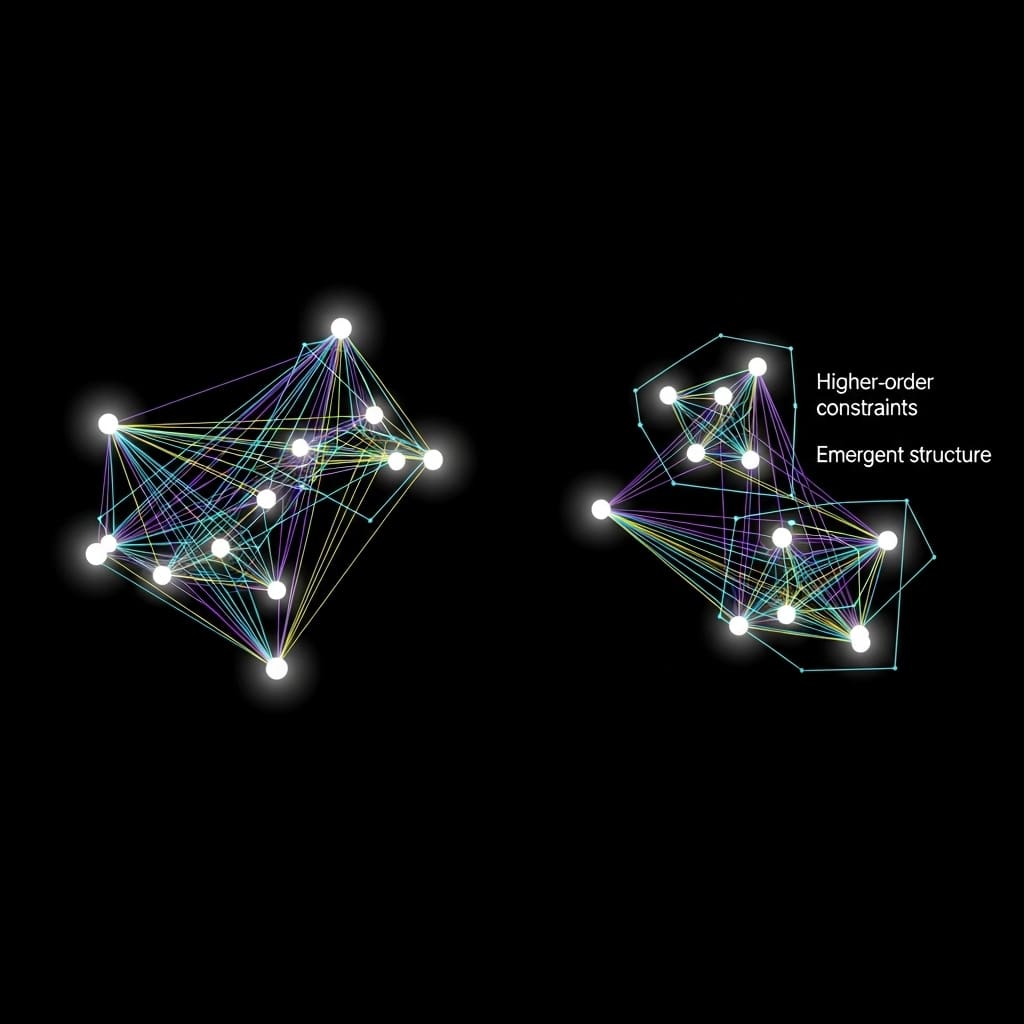

Taken together, these measures define a weighted bipartite structure that enables the application of network-theoretic tools such as centrality analysis and community detection. However, the authors argue that component systems possess conceptual and structural features that extend beyond standard bipartite networks, including explicit distinctions between layers, realizations, and components. Empirical results show that such systems often display hierarchical organization, with components grouped into classes and realizations structured across multiple levels, introducing constraints and observables not captured by simpler network abstractions. The analysis further reveals dependencies among components that influence their co-occurrence within realizations, as illustrated by software package dependencies.

The study also demonstrates the widespread emergence of well-known statistical regularities, including Zipf’s law and the so-called “U” law, within component systems. These patterns are clearly observed in the LEGO dataset, where component frequencies follow predictable distributions. Notably, a random sampling model generates artificial data that closely reproduces the empirical observations, suggesting that these statistical laws may arise primarily from sampling constraints rather than detailed system-specific mechanisms. This finding supports the view that universal combinatorial principles play a central role in shaping the observable structure of complex component systems.
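One simple way to build such a random sampling baseline is sketched below. The article does not spell out the exact sampling scheme, so this example assumes one: each artificial realization keeps its observed size and draws its components independently, with replacement, with probabilities proportional to the observed abundances. The function names `sample_null_matrix` and `rank_abundance` are hypothetical.

```python
import random


def sample_null_matrix(n, seed=0):
    """Draw an artificial component matrix from a random sampling null model.

    Assumption (not taken from the paper): realization j keeps its observed
    size and its components are drawn independently, with replacement, with
    probability proportional to the observed abundance of each component.
    """
    rng = random.Random(seed)
    n_components = len(n)
    n_realizations = len(n[0])

    abundances = [sum(row) for row in n]
    sizes = [sum(n[i][j] for i in range(n_components))
             for j in range(n_realizations)]

    null = [[0] * n_realizations for _ in range(n_components)]
    for j, size in enumerate(sizes):
        # One weighted draw per component slot in realization j.
        for i in rng.choices(range(n_components), weights=abundances, k=size):
            null[i][j] += 1
    return null


def rank_abundance(n):
    """Abundances sorted from most to least frequent (a rank-abundance curve)."""
    return sorted((sum(row) for row in n), reverse=True)


observed = [
    [5, 3, 8],
    [1, 0, 2],
    [0, 1, 0],
]
artificial = sample_null_matrix(observed, seed=42)
print(rank_abundance(observed))
print(rank_abundance(artificial))
```

Comparing the two rank-abundance curves, or any other observable, between the observed and artificial matrices shows how much of a pattern the sampling constraint alone can account for.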

Universal Baseline for Modular System Analysis

Scientists have demonstrated a unifying mathematical framework for analysing component systems, which are prevalent across diverse fields like biology, ecology, and social sciences. This framework allows comparison of modular systems and distinguishes between common, null trends and system-specific characteristics indicative of underlying generative dynamics. The research establishes that robust, large-scale regularities arise from basic principles of sampling, combinatorics, and growth, providing a universal baseline across domains. The significance of these findings lies in a shift from simply observing empirical laws to quantitatively comparing generative mechanisms and inferring hidden structures within systems.

By “subtracting” these baseline regularities from data, researchers can isolate informative features revealing mechanistic and causal processes. The authors acknowledge limitations in fully classifying diverse generative models and in systematically studying component granularity across scales; this remains a key theoretical gap. Future research should focus on developing a unified statistical mechanical theory bridging equilibrium sampling, history-dependent processes, and rule-based assembly, alongside methods for studying component granularity across scales. Furthermore, the application of Large Language Models offers a novel perspective, potentially serving as both tools for extracting latent structures and empirical systems for probing generative principles.
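The idea of “subtracting” a baseline can be made concrete in several ways, and the article does not say which one the authors use. A common choice, assumed here purely for illustration, is a z-score: compare each observed value with the mean and spread of the same quantity across many null-model samples. The function name `null_zscores` and the input layout are hypothetical.

```python
from statistics import mean, stdev


def null_zscores(observed, null_samples):
    """Z-score of each observed value against a null ensemble.

    observed:      one value per component (e.g. its sharing number).
    null_samples:  list of null-model draws, each shaped like `observed`.
                   At least two draws are needed to estimate the spread.
    Returns 0.0 where the null ensemble shows no variation (a convention
    chosen for this sketch).
    """
    scores = []
    for i, value in enumerate(observed):
        null_values = [sample[i] for sample in null_samples]
        mu = mean(null_values)
        sigma = stdev(null_values)
        scores.append((value - mu) / sigma if sigma > 0 else 0.0)
    return scores


# Toy example: the first component deviates strongly from the null,
# the second matches it exactly.
observed = [5, 2]
null_samples = [[1, 2], [3, 2], [2, 2]]
print(null_zscores(observed, null_samples))
```

Components with large absolute scores are the ones a sampling baseline fails to explain, which makes them candidates for system-specific mechanisms.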

👉 More information

🗞 Component systems: do null models explain everything?

🧠 ArXiv: https://arxiv.org/abs/2601.13985