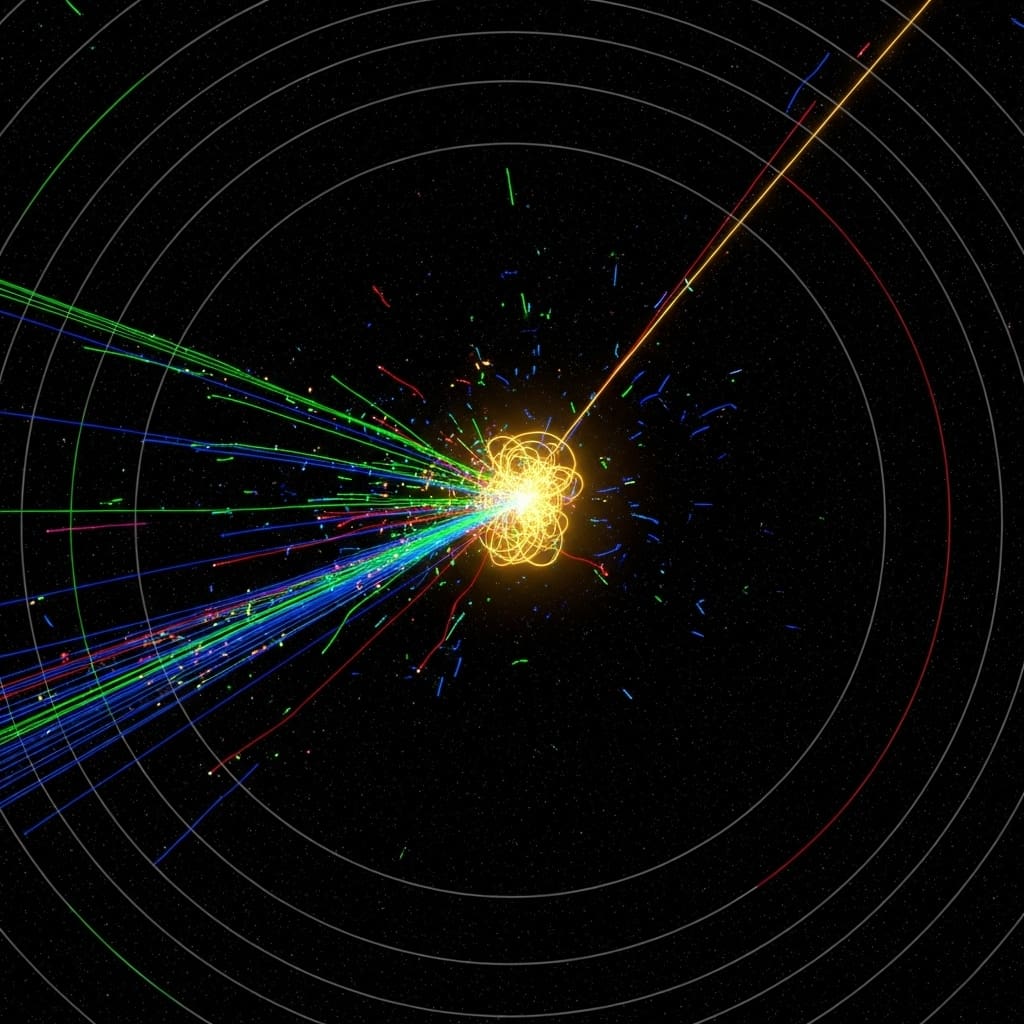

Scientists are tackling the immense data challenges faced by High-Energy Physics experiments such as those at the Large Hadron Collider, and are exploring whether quantum machine learning can offer a solution. Fatih Maulana from the School of Computing, Universiti Utara Malaysia, alongside colleagues, investigated Variational Quantum Classifiers (VQCs) for detecting Higgs Boson signals, utilising data from the ATLAS Higgs Boson Machine Learning Challenge 2014. Their research, detailed in a new paper, demonstrates that increasing the depth of quantum circuits by adding more entanglement layers boosts performance, reaching 56.2% accuracy, whereas simply increasing the number of qubits reduced accuracy due to optimisation difficulties. The finding suggests that, for current quantum computers, prioritising circuit depth and entanglement over sheer qubit count is key to unlocking the potential of quantum machine learning for particle physics.

A key innovation lies in the careful balancing of circuit depth and qubit count to optimise performance on current noisy quantum hardware.

Researchers implemented a dimensionality reduction pipeline utilising Principal Component Analysis (PCA) to map the initial 30 physical features of particle collisions into both 4-qubit and 8-qubit latent spaces. This pre-processing step is essential for adapting high-dimensional classical data to the limitations of existing quantum computers. Three distinct VQC configurations were then benchmarked: a shallow 4-qubit circuit, a deeper 4-qubit circuit with increased entanglement, and an expanded 8-qubit circuit. The team's experiments revealed that increasing the depth of the quantum circuit, that is, adding more layers of entanglement, improved classification accuracy beyond the baseline performance of 51.9%.
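The reduction step described above can be illustrated with a minimal sketch. It uses NumPy's SVD in place of whatever PCA implementation the authors used. The function name `pca_reduce`, the standardisation step, and the synthetic random data are all assumptions made for illustration, not details from the paper.

```python
import numpy as np

def pca_reduce(features, n_components):
    """Project standardised features onto their top principal components."""
    x = np.asarray(features, dtype=float)
    std = x.std(axis=0)
    std[std == 0] = 1.0                      # guard against constant columns
    z = (x - x.mean(axis=0)) / std           # assumption: features are standardised first
    # Rows of vt are the principal directions, sorted by explained variance.
    _, _, vt = np.linalg.svd(z, full_matrices=False)
    return z @ vt[:n_components].T

# Synthetic stand-in for the 30 physical features (NOT the ATLAS data).
rng = np.random.default_rng(0)
events = rng.normal(size=(200, 30))

latent_4 = pca_reduce(events, 4)   # one component per qubit, 4-qubit circuits
latent_8 = pca_reduce(events, 8)   # one component per qubit, 8-qubit circuit
print(latent_4.shape, latent_8.shape)
```

Each retained component feeds one qubit of the feature map, so the number of components fixes the circuit width.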

Notably, the deep 4-qubit circuit (Configuration B) achieved the highest accuracy of 56.2%, demonstrating the power of enhanced circuit complexity. Conversely, simply increasing the number of qubits to 8, without a corresponding increase in circuit depth, resulted in a performance decrease to 50.6%. This degradation was attributed to optimisation challenges arising from “Barren Plateaus”, a phenomenon where the gradient of the loss function vanishes in high-dimensional Hilbert spaces, hindering the training process. The study establishes that, for near-term quantum hardware, prioritising circuit depth and entanglement capability is more effective than merely increasing qubit count for anomaly detection in high-energy physics data.
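For orientation, the barren plateau effect is usually stated as follows in the wider literature. This formulation is not taken from the paper summarised here, and it strictly applies to sufficiently random parameterised circuits. Here $L$ is the loss, $\theta_k$ a circuit parameter, $n$ the number of qubits, and $b$ an unspecified constant.

```latex
\mathbb{E}_{\boldsymbol{\theta}}\!\left[\partial_{\theta_k} L\right] = 0,
\qquad
\operatorname{Var}_{\boldsymbol{\theta}}\!\left[\partial_{\theta_k} L\right]
  \in \mathcal{O}\!\left(b^{-n}\right), \quad b > 1,
\qquad
\dim \mathcal{H} = 2^{n}: \quad 2^{4} = 16, \quad 2^{8} = 256 .
```

Moving from 4 to 8 qubits enlarges the Hilbert space from 16 to 256 dimensions, so typical gradients shrink and an optimiser has less signal to follow.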

This work opens exciting possibilities for utilising quantum machine learning to analyse the ever-increasing data streams from the LHC and future colliders. By carefully designing quantum circuits that maximise expressibility without falling victim to optimisation bottlenecks, scientists can potentially unlock new insights into fundamental particle physics. The findings suggest a pathway towards developing efficient quantum models capable of identifying subtle signals and improving the precision of measurements in high-energy physics experiments. Following data encoding, a RealAmplitudes ansatz, characterised by layers of Ry rotation gates and CNOT entangling gates, served as the trainable variational layer. Experiments revealed that increasing circuit depth, rather than simply adding qubits, yields substantial improvements in classification accuracy. Specifically, a deep 4-qubit circuit (Configuration B) outperformed both a shallow 4-qubit circuit and an expanded 8-qubit circuit, achieving the highest accuracy recorded in this study.
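To make the depth-versus-width trade-off concrete, here is a small NumPy statevector sketch of a RealAmplitudes-style circuit built from Ry rotation layers and CNOT entanglers. It is an illustration, not the authors' Qiskit code. The linear CNOT chain, the helper names, and the repetition counts in the example are assumptions, because the article does not state the entanglement pattern or the number of layers used in each configuration.

```python
import numpy as np

def apply_ry(state, theta, qubit, n_qubits):
    """Apply Ry(theta) to one qubit of an n-qubit statevector."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    gate = np.array([[c, -s], [s, c]])
    psi = state.reshape([2] * n_qubits)          # one tensor axis per qubit
    psi = np.tensordot(gate, psi, axes=([1], [qubit]))
    return np.moveaxis(psi, 0, qubit).reshape(-1)

def apply_cnot(state, control, target, n_qubits):
    """Flip the target qubit on the part of the state where control is 1."""
    psi = state.reshape([2] * n_qubits).copy()
    idx = [slice(None)] * n_qubits
    idx[control] = 1
    sub = psi[tuple(idx)]                         # control axis has been dropped
    t_axis = target - 1 if target > control else target
    psi[tuple(idx)] = np.flip(sub, axis=t_axis).copy()
    return psi.reshape(-1)

def num_parameters(n_qubits, reps):
    """One Ry angle per qubit in each of the reps + 1 rotation layers."""
    return n_qubits * (reps + 1)

def real_amplitudes_state(params, n_qubits, reps):
    """Rotation layer, then reps x (CNOT chain + rotation layer), from |0...0>."""
    params = np.asarray(params, dtype=float).reshape(reps + 1, n_qubits)
    state = np.zeros(2 ** n_qubits)
    state[0] = 1.0
    for layer in range(reps + 1):
        for q in range(n_qubits):
            state = apply_ry(state, params[layer, q], q, n_qubits)
        if layer < reps:
            # Assumption: linear chain of CNOTs between neighbouring qubits.
            for q in range(n_qubits - 1):
                state = apply_cnot(state, q, q + 1, n_qubits)
    return state

# Hypothetical layer counts, chosen only to show how the parameter count scales.
for n_qubits, reps in [(4, 1), (4, 3), (8, 1)]:
    print(n_qubits, "qubits,", reps, "reps ->", num_parameters(n_qubits, reps), "parameters")
```

Under these assumptions a deeper 4-qubit circuit and a shallow 8-qubit circuit can have the same number of trainable angles, yet the 8-qubit state lives in a 256-dimensional space instead of a 16-dimensional one.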

The team implemented a dimensionality reduction pipeline employing Principal Component Analysis (PCA) to map 30 physical features into both 4-qubit and 8-qubit latent spaces. The deep 4-qubit circuit, with increased entanglement layers, delivered an accuracy of 56.2%, a notable improvement over the baseline accuracy of 51.9%. Conversely, scaling to an 8-qubit circuit resulted in a performance decrease to 50.6%, attributed to optimisation challenges arising from Barren Plateaus within the expanded Hilbert space. According to the authors, these plateaus hinder effective training on larger quantum systems.

Researchers measured the performance of three distinct configurations: a shallow 4-qubit circuit (A), a deep 4-qubit circuit (B), and an expanded 8-qubit circuit (C). The ZZFeatureMap was used to encode classical data into the quantum Hilbert space, while the RealAmplitudes ansatz served as the trainable variational layer. Circuit parameters were optimised with the COBYLA optimiser within the Qiskit SDK. The loss function employed was Cross-Entropy, guiding the algorithm towards minimising classification errors. The results indicate that prioritising circuit depth and entanglement capability matters more than increasing qubit count for effective anomaly detection in high-energy physics data.
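The cross-entropy objective mentioned above can be sketched in a few lines. This is a generic binary form, not the authors' implementation. The label convention (1 for signal, 0 for background), the function name, and the clipping constant `eps` are assumptions.

```python
import math

def binary_cross_entropy(labels, probs, eps=1e-12):
    """Mean cross-entropy between 0/1 labels and predicted signal probabilities."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)   # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)

# A classifier that always outputs 0.5 scores ln(2), the coin-flip level.
print(binary_cross_entropy([1, 0], [0.5, 0.5]))
```

A gradient-free optimiser such as COBYLA only needs this scalar value at each trial parameter vector, which is one reason it is commonly paired with variational circuits.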

The study highlights the importance of expressibility and trainability in near-term quantum hardware. By carefully balancing these factors, scientists can unlock the potential of quantum machine learning for tackling complex data analysis challenges in particle physics, potentially accelerating the discovery of new phenomena at the LHC. The study benchmarked three VQC configurations (shallow and deep 4-qubit circuits, and an 8-qubit circuit) to assess the impact of circuit depth versus qubit count on performance. Experimental results revealed that a deep 4-qubit circuit achieved the highest accuracy of 56.2%, outperforming the baseline of 51.9% and exceeding the performance of the 8-qubit circuit, which suffered a decline to 50.6%.

This finding highlights the critical importance of prioritizing circuit depth and entanglement capability over simply increasing qubit count for near-term quantum hardware in anomaly detection tasks. The authors acknowledge that classical algorithms currently achieve higher absolute accuracy, but emphasize that the primary goal was to benchmark quantum architectural strategies. Furthermore, dimensionality reduction was identified as a crucial error mitigation strategy for Noisy Intermediate-Scale Quantum (NISQ) devices. However, the results are based on fixed-seed simulations, and future work should focus on statistical validation across multiple random seeds to assess performance robustness. Additional research will explore Quantum Kernel Methods (QSVM), integrate Quantum Error Mitigation (QEM) techniques, and investigate alternative optimizers like SPSA to enhance resilience against barren plateaus. The availability of the dataset and source code promotes transparency and reproducibility within the research community.

👉 More information

🗞 Impact of Circuit Depth versus Qubit Count on Variational Quantum Classifiers for Higgs Boson Signal Detection

🧠 ArXiv: https://arxiv.org/abs/2601.11937